Single-layer perceptron

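The perceptron is a binary classification model and one of the earliest AI models: given an input x, it predicts +1 if ⟨w, x⟩ + b > 0 and -1 otherwise.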

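Its training algorithm is equivalent to gradient descent with a batch size of 1: it visits one example at a time and updates w and b only when that example is misclassified.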
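A minimal sketch of this update rule in plain Python (not PyTorch), assuming labels in {-1, +1}; the helper names `train_perceptron` and `predict` are hypothetical. Each misclassified example triggers one gradient step with learning rate 1 on the loss max(0, -y(⟨w, x⟩ + b)).

```python
def train_perceptron(X, y, max_epochs=100):
    """Classic perceptron; labels in {-1, +1}. Returns (w, b, converged)."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x_i, y_i in zip(X, y):  # one example at a time = batch size 1
            score = sum(wj * xj for wj, xj in zip(w, x_i)) + b
            if y_i * score <= 0:  # misclassified (or exactly on the boundary)
                # gradient step, learning rate 1, on max(0, -y * score)
                w = [wj + y_i * xj for wj, xj in zip(w, x_i)]
                b += y_i
                mistakes += 1
        if mistakes == 0:  # a full pass without mistakes: training is done
            return w, b, True
    return w, b, False

def predict(w, b, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else -1

# AND is linearly separable, so the perceptron converges
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y_and = [-1, -1, -1, 1]
w, b, ok = train_perceptron(X, y_and)
print(ok, [predict(w, b, x) for x in X])  # → True [-1, -1, -1, 1]
```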

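It cannot fit the XOR function, because no single line separates the inputs where XOR is 1 from those where it is 0. This limitation led to the first AI winter.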
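The XOR limitation can be checked empirically with a minimal perceptron sketch (hypothetical `train_perceptron`, labels in {-1, +1}, 0 encoded as -1). Because XOR is not linearly separable, no pass over the data is ever mistake-free, so the loop always runs out of epochs and at most 3 of the 4 points end up classified correctly.

```python
def train_perceptron(X, y, max_epochs=1000):
    """Perceptron with labels in {-1, +1}; returns (w, b, converged)."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x_i, y_i in zip(X, y):
            if y_i * (sum(wj * xj for wj, xj in zip(w, x_i)) + b) <= 0:
                w = [wj + y_i * xj for wj, xj in zip(w, x_i)]
                b += y_i
                mistakes += 1
        if mistakes == 0:  # a full pass with no mistakes: data separated
            return w, b, True
    return w, b, False

X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y_xor = [-1, 1, 1, -1]  # XOR with 0 encoded as -1
w, b, converged = train_perceptron(X, y_xor)
preds = [1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else -1 for x in X]
correct = sum(p == t for p, t in zip(preds, y_xor))
print(converged, correct)  # converged is always False; correct is at most 3
```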

Multilayer perceptron

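A multilayer perceptron uses hidden layers and nonlinear activation functions to obtain a nonlinear model. Without the activation, a stack of linear layers would still be equivalent to a single linear layer.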
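To see why the hidden layer and the activation both matter, here is a hand-built network with two hidden units whose weights were chosen by hand for illustration (they are not learned). With ReLU it computes XOR exactly. Replacing ReLU with the identity collapses it into the linear function 2 - x1 - x2, which cannot represent XOR.

```python
def relu(z):
    return max(0.0, float(z))

def identity(z):
    return z

def two_layer_net(x1, x2, act):
    """Two hidden units with hand-picked weights, then a linear output unit."""
    h1 = act(x1 + x2)        # hidden unit 1: weights [1, 1], bias 0
    h2 = act(x1 + x2 - 1)    # hidden unit 2: weights [1, 1], bias -1
    return h1 - 2 * h2       # output: weights [1, -2], bias 0

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
print([two_layer_net(a, b, relu) for a, b in inputs])      # → [0.0, 1.0, 1.0, 0.0] (XOR)
print([two_layer_net(a, b, identity) for a, b in inputs])  # → [2, 1, 1, 0] (= 2 - x1 - x2)
```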

Common activation functions are Sigmoid, Tanh, and ReLU.
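The three activation functions and their derivatives fit in a few lines of plain Python. This is an illustrative scalar sketch, not the PyTorch implementations (`torch.sigmoid`, `torch.tanh`, `torch.relu`); the ReLU derivative at exactly 0 is set to 0 here by convention.

```python
import math

def sigmoid(x):
    """1 / (1 + exp(-x)), output in (0, 1); split by sign to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def tanh(x):
    """(1 - exp(-2x)) / (1 + exp(-2x)), output in (-1, 1)."""
    return math.tanh(x)

def relu(x):
    """max(x, 0)."""
    return max(x, 0.0)

# Derivatives, which backpropagation multiplies into the gradient
def d_sigmoid(x):
    s = sigmoid(x)
    return s * (1.0 - s)

def d_tanh(x):
    return 1.0 - math.tanh(x) ** 2

def d_relu(x):
    return 1.0 if x > 0 else 0.0  # convention: derivative 0 at x = 0

for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  tanh={tanh(x):+.4f}  relu={relu(x):.1f}")
```

The sigmoid derivative never exceeds 0.25 and both sigmoid and tanh flatten out for large |x|, whereas the ReLU derivative is exactly 1 for every positive input.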

Hyperparameters: the number of hidden layers and the size (number of hidden units) of each hidden layer.
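These two hyperparameters fix the shape of every weight matrix. A hypothetical helper that counts parameters shows how quickly the model grows; 784 inputs and 10 outputs match the 28×28 Fashion-MNIST images and 10 classes used in the code in this post.

```python
def layer_shapes(num_inputs, hidden_sizes, num_outputs):
    """(rows, cols) of each weight matrix for the given hidden-layer sizes."""
    sizes = [num_inputs, *hidden_sizes, num_outputs]
    return list(zip(sizes[:-1], sizes[1:]))

def count_params(num_inputs, hidden_sizes, num_outputs):
    # each layer has a weight matrix (n_in x n_out) plus a bias vector (n_out)
    return sum(n_in * n_out + n_out
               for n_in, n_out in layer_shapes(num_inputs, hidden_sizes, num_outputs))

print(layer_shapes(784, [256], 10))       # → [(784, 256), (256, 10)]
print(count_params(784, [256], 10))       # → 203530
print(count_params(784, [256, 128], 10))  # → 235146
```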


```python
import torch
from torch import nn
from d2l import torch as d2l

batch_size = 256
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

# One hidden layer with 256 hidden units
num_inputs, num_outputs, num_hiddens = 784, 10, 256

# What happens if the weights are all 0s or all 1s?
# Swap in the commented-out lines to try it.
W1 = nn.Parameter(torch.randn(num_inputs, num_hiddens, requires_grad=True))
# W1 = nn.Parameter(torch.zeros(num_inputs, num_hiddens, requires_grad=True))
# W1 = nn.Parameter(torch.ones(num_inputs, num_hiddens, requires_grad=True))
b1 = nn.Parameter(torch.zeros(num_hiddens, requires_grad=True))
W2 = nn.Parameter(torch.randn(num_hiddens, num_outputs, requires_grad=True))
# W2 = nn.Parameter(torch.zeros(num_hiddens, num_outputs, requires_grad=True))
# W2 = nn.Parameter(torch.ones(num_hiddens, num_outputs, requires_grad=True))
b2 = nn.Parameter(torch.zeros(num_outputs, requires_grad=True))
params = [W1, b1, W2, b2]

# ReLU activation function: elementwise max(X, 0)
def relu(X):
    a = torch.zeros_like(X)
    return torch.max(X, a)

# Multilayer perceptron model
def net(X):
    X = X.reshape((-1, num_inputs))  # flatten each 28x28 image into a 784-vector
    H = relu(X @ W1 + b1)            # hidden layer
    return H @ W2 + b2               # output layer (logits)

# Loss function (softmax + cross-entropy on the logits)
loss = nn.CrossEntropyLoss()

# Number of epochs and learning rate
num_epochs, lr = 3, 0.05

# Optimizer
updater = torch.optim.SGD(params, lr=lr)

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, updater)
```

What happens if W is initialized to all 0s or all 1s?

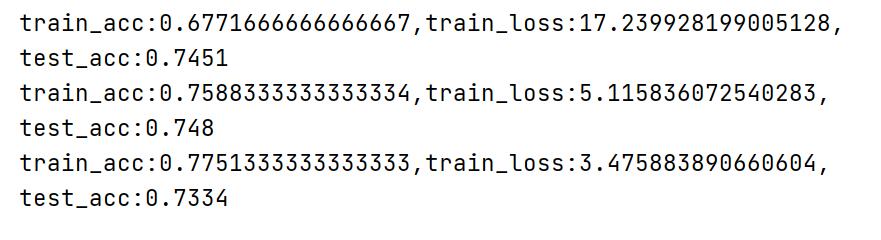

Random initialization


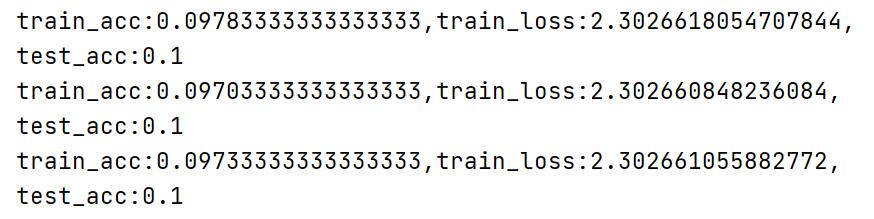

All zeros


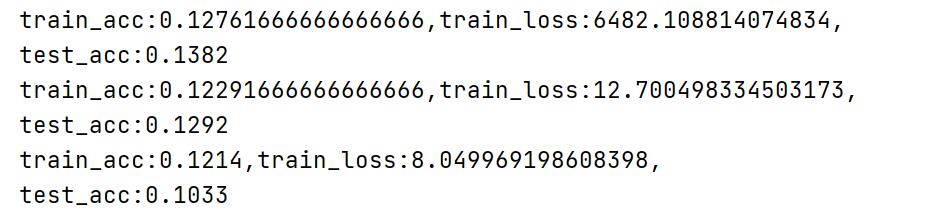

All ones

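A minimal NumPy sketch of what goes wrong with constant initialization, on toy random data rather than Fashion-MNIST. The sizes (8 inputs, 5 hidden units, 3 classes), the learning rate, the 20 full-batch gradient steps, and the helper names `sgd_steps` and `run` are all illustrative assumptions; backpropagation is written out by hand, with the ReLU derivative taken as 0 at z ≤ 0.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def softmax(o):
    e = np.exp(o - o.max(axis=1, keepdims=True))  # subtract row max for stability
    return e / e.sum(axis=1, keepdims=True)

def sgd_steps(W1, b1, W2, b2, X, y, lr=0.05, steps=20):
    """Full-batch gradient descent on mean softmax cross-entropy for a
    one-hidden-layer ReLU MLP, with hand-written backpropagation."""
    n = X.shape[0]
    for _ in range(steps):
        Z = X @ W1 + b1                 # hidden pre-activations
        H = relu(Z)                     # hidden activations
        P = softmax(H @ W2 + b2)        # class probabilities
        dO = P.copy()
        dO[np.arange(n), y] -= 1.0      # d(loss)/d(logits) = P - one_hot(y) ...
        dO /= n                         # ... averaged over the batch
        dW2, db2 = H.T @ dO, dO.sum(axis=0)
        dZ = (dO @ W2.T) * (Z > 0)      # ReLU derivative taken as 0 at z <= 0
        dW1, db1 = X.T @ dZ, dZ.sum(axis=0)
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return W1, b1, W2, b2

def run(init):
    """Train on toy data (8 features, 5 hidden units, 3 classes) from a constant init."""
    rng = np.random.default_rng(0)
    X = rng.random((32, 8))             # toy stand-in for flattened images
    y = rng.integers(0, 3, size=32)     # toy labels
    W1, b1 = init((8, 5)), np.zeros(5)
    W2, b2 = init((5, 3)), np.zeros(3)
    return sgd_steps(W1, b1, W2, b2, X, y)

for name, init in [("zeros", np.zeros), ("ones", np.ones)]:
    W1, b1, W2, b2 = run(init)
    identical = np.allclose(W1, W1[:, :1]) and np.allclose(W2, W2[:1, :])
    still_zero = np.allclose(W1, 0) and np.allclose(W2, 0)
    print(name, "| hidden units identical:", identical, "| W1, W2 still zero:", still_zero)
```

With all zeros, H = 0 and W2 = 0, so neither weight matrix ever receives a nonzero gradient and the output reduces to b2, the same for every input. With all ones, every hidden unit computes the same value and receives the same gradient, so the units stay copies of one another and the hidden layer behaves like a single unit. Random initialization such as `torch.randn` breaks this symmetry.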

Zhejiang Public Security Network Filing No. 33010602011771
