Downloading several thousand Sogou cell dictionaries

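    import sys
    import requests
    from bs4 import BeautifulSoup as BS

    def get_links(url):
        """Return (text, href) for every <a> on the page; [] on any failure."""
        links = []
        try:
            r = requests.get(url)
            r.raise_for_status()  # raise an exception on HTTP errors
            # find_all() returns [] when nothing matches; find() returns None
            links = BS(r.text, 'html.parser').find_all('a')
        except Exception:
            pass
        result = []
        for a in links:
            # A Tag has attributes such as .text, but HTML attributes like
            # href need get(); with the '' default a missing href yields ''
            # rather than None
            result.append((a.text, a.get('href', '')))
        return result

    B = 'https://pinyin.sogou.com'  # no trailing slash, so B + S has no '//'
    S = '/dict/detail/index/'
    T = '?rf=dictindex'
    if len(sys.argv) == 1:
        # No argument: list every category as "name id"
        for a in get_links(B + '/dict/'):
            if a[1].find(S) != -1:
                print(a[0], a[1].replace(S, '').replace(T, ''))
    else:
        # With a category id: print that category's download links
        for a in get_links(B + S + sys.argv[1] + T):
            if a[1].find('download_cell') != -1:
                print('https:' + a[1])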

I tried wget -r -A '*.scel' for a while without success, so I asked an AI to write link_extractor.py and then tweaked it.

    $ py le.py
    ...
    汽车词汇大全 15153
    歌手人名大全 20658
    热门电影大全 20652
    $ py le.py 15153
    ...
    https://pinyin.sogou.com/d/dict/download_cell.php?id=82331&name=斯柯达
    https://pinyin.sogou.com/d/dict/download_cell.php?id=93870&name=考啦维护
    https://pinyin.sogou.com/d/dict/download_cell.php?id=93866&name=考啦学车
    https://pinyin.sogou.com/d/dict/download_cell.php?id=93868&name=考啦陪驾

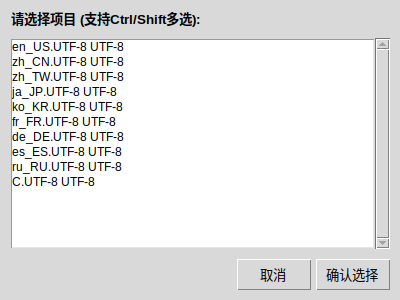

Just tell an AI to "write a program in Python with tkinter that lets me select one or more items from a list and returns their indices, the way dpkg-reconfigure locales does".

PySimpleGUI is unusable now. pip install pysimplegui finishes in a flash, but what it installs is a useless 16 KB stub.

    >>> import PySimpleGUI
    PySimpleGUI is now located on a private PyPI server. Please add to your pip command: -i https://PySimpleGUI.net/install
    The version you just installed should uninstalled:
    python -m pip uninstall PySimpleGUI
    python -m pip cache purge
    Then install the latest from the private server:
    python -m pip install --upgrade --extra-index-url https://PySimpleGUI.net/install PySimpleGUI

After following the instructions and bringing in the real thing, … first run … 99 US dollars …

With the -i (or --input-file) option, wget reads multiple URLs from a file and downloads them one after another.
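For comparison, here is a rough Python counterpart to wget -i, a minimal sketch rather than what I actually ran. The names `scel_filename`, `ascii_url` and `download_all`, the `<id>_<name>.scel` naming scheme and the output directory are all my own assumptions; the only thing taken from above is the shape of the download_cell.php URLs.

```python
import os
import urllib.request
from urllib.parse import urlsplit, parse_qs, quote

def scel_filename(url):
    """Build '<id>_<name>.scel' from a download_cell.php URL (assumed naming scheme)."""
    query = parse_qs(urlsplit(url).query)
    cell_id = query.get('id', ['unknown'])[0]
    name = query.get('name', ['unnamed'])[0].replace('/', '_')
    return f'{cell_id}_{name}.scel'

def ascii_url(url):
    """Percent-encode non-ASCII characters; urllib refuses raw Chinese in a URL."""
    return quote(url, safe=':/?&=%')

def download_all(list_path, out_dir='scel'):
    """Download every URL listed in list_path (one per line) into out_dir."""
    os.makedirs(out_dir, exist_ok=True)
    with open(list_path, encoding='utf-8') as f:
        urls = [line.strip() for line in f if line.strip()]
    for url in urls:
        req = urllib.request.Request(ascii_url(url),
                                     headers={'User-Agent': 'Mozilla/5.0'})
        try:
            with urllib.request.urlopen(req, timeout=30) as resp:
                data = resp.read()
        except OSError as e:  # URLError, HTTPError and timeouts are all OSError
            print('failed:', url, e)
            continue
        with open(os.path.join(out_dir, scel_filename(url)), 'wb') as out:
            out.write(data)
```

Calling `download_all('scel-url.txt')` would then walk the list; whether the server accepts such bare requests without the browser headers is something I have not checked.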

My ~/.wgetrc

    debug=off
    random_wait=off
    header=Connection: keep-alive
    header=sec-ch-ua: "Not/A)Brand";v="8", "Chromium";v="126"
    header=sec-ch-ua-mobile: ?0
    header=User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/126.0.6478.251 Safari/537.36
    header=sec-ch-ua-platform: "Linux"
    header=Sec-Fetch-Site: same-origin
    header=Sec-Fetch-Mode: no-cors
    #header=Accept-Encoding: gzip, deflate, br, zstd
    header=Accept-Language: zh-CN,zh-TW;q=0.9,zh;q=0.8,en-US;q=0.7,en;q=0.6

Copied from the 360 browser. There are many, many ways to capture these headers; one of them is to write the simplest possible httpd in Python and print them.
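If you want the Python script to send the same headers as wget, the `header=` lines can be turned into a dict. This is only a sketch: `wgetrc_headers` is a name I made up, and it handles just the simple `header=Name: value` form shown in my file, not everything wgetrc allows.

```python
def wgetrc_headers(text):
    """Collect 'header=Name: value' lines from wgetrc text into a dict.

    Blank lines, comments (#...) and settings other than header= are skipped.
    """
    headers = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith('#'):
            continue
        key, sep, value = line.partition('=')  # split at the first '=' only
        if not sep or key.strip().lower() != 'header':
            continue
        name, colon, val = value.partition(':')
        if colon:
            headers[name.strip()] = val.strip()
    return headers
```

The result can be passed as the `headers=` argument of `requests.get`, so the link extractor looks like the browser too.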

Addenda:

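- When the response is gzip-compressed, wget -r stops after downloading index.html, presumably because it cannot find links in the compressed page. The fix is to comment out Accept-Encoding: gzip, deflate, br, zstd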

- I added that line not to show off, but to download videos from Bilibili

- That failed shamefully. But with you-get I downloaded 1,477 episodes of 《四郎讲棋》. Besides being a classier and prettier program, it also has an API key

- The Python program failed to download every .scel: not all links were processed (new: scel-url.txt.7z)

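- I crawled the Sogou site with wget -r, grepped for download_cell and removed duplicates: scel-url.txt has 8,498 lines

- I only got 8,476 .scel files, 453 MB; 网络流行新词.scel was downloaded by hand

- 838 of them are actually .html, no idea why. After converting to txt the total is 303 MB; good enough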

- There is dross in there, such as 少年激霸弹 and 女王之刃第二季玉座的继承者 nv wang zhi ren di er ji yu zuo de ji cheng zhe…

- 战斗之魂·少年激霸弹 is a Japanese card-battle TV anime that aired in 2009.

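- 女皇之刃 王座的继承者 is the second season of the 《女皇之刃》 anime series. The premise is a contest for the throne held every four years; the contestants are all women, including humans, angels and monsters, and they settle by force who becomes queen. The anime's selling points are beautiful women warriors, hardcore fighting and a fantasy setting.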

- The old Python program could not convert 网络流行新词.scel, so I added handling for files that begin with b'\x40\x15\0\0\x45\x43\x53\x01\x01\0\0\0'

- Is taking the fruits of the whole country's netizens' labor for yourself really the spirit of the Internet?
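To see which downloads are real dictionaries and which are HTML pages in disguise, the first bytes of each file are enough. The following is a sketch under assumptions: the `\x45` ("ECS") header is the one quoted above, while the `\x44` ("DCS") header is what I assume the older, ordinary .scel files start with; `classify`, `classify_file` and `summarize` are names invented here.

```python
import os
from collections import Counter

SCEL_PREFIX = b'\x40\x15\x00\x00'

def classify(head):
    """Guess the file type from its leading bytes."""
    if head[:4] == SCEL_PREFIX:
        tag = head[4:7]
        if tag == b'\x44\x43\x53':  # b'DCS': assumed header of older files
            return 'scel-dcs'
        if tag == b'\x45\x43\x53':  # b'ECS': the header quoted in the list above
            return 'scel-ecs'
    # Skip an optional UTF-8 BOM and whitespace before looking for HTML markup
    text = head.lstrip(b'\xef\xbb\xbf \t\r\n').lower()
    if text.startswith((b'<!doctype', b'<html')):
        return 'html'
    return 'unknown'

def classify_file(path):
    """Classify one file by reading only its first 64 bytes."""
    with open(path, 'rb') as f:
        return classify(f.read(64))

def summarize(directory):
    """Count the regular files in a directory by detected type."""
    counts = Counter()
    for name in os.listdir(directory):
        path = os.path.join(directory, name)
        if os.path.isfile(path):
            counts[classify_file(path)] += 1
    return counts
```

Running `summarize` on the download directory should show how many of the files are HTML without opening any of them in an editor.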

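The minimal header-printing httpd mentioned above:

    from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer
    from threading import Thread

    class ReqHandler(SimpleHTTPRequestHandler):
        def do_GET(self):
            print(self.headers)      # dump the headers the browser sent
            self.send_response(200)  # reply, so the client is not left hanging
            self.send_header('Content-Length', '0')
            self.end_headers()

    class Server(ThreadingHTTPServer):
        # Must be a class attribute: the socket is bound inside __init__, so
        # setting it on the instance afterwards has no effect (Python 3.11+).
        # SO_REUSEPORT lets several sockets bind exactly the same IP and port;
        # the kernel spreads incoming connections across them by hash.
        # Quick restarts on the same port after a crash are already covered by
        # allow_reuse_address, which HTTPServer enables by default.
        allow_reuse_port = True

    def httpd_thread():
        httpd = Server(('', 8000), ReqHandler)
        print('Listening at', httpd.server_address[1])
        httpd.serve_forever()

    Thread(daemon=True, target=httpd_thread).start()
    try:
        while True:
            input()  # keep the main thread alive; Ctrl-C or Ctrl-D exits
    except BaseException:
        pass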

浙公网安备 33010602011771号