iOS Internals (7): Multithreading (Part 1)

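Basic Concepts

Processes and Threads

- Process: a process is an application that is currently running on the system.

- Thread: a process needs threads to execute its tasks, so every process has at least one thread.

- All tasks of a process (program) are executed on its threads.

- Within a single thread, tasks execute serially, one after another.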

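Processes vs. Threads

- A thread is the smallest unit that the CPU schedules and executes.

- A process is the unit to which the operating system allocates resources such as memory.

- One program can correspond to multiple processes, and one process can contain multiple threads, but it always has at least one.

- Threads within the same process share that process's resources.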
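The last point, that threads share their process's resources, can be sketched in plain C with pthreads (the layer this article later names as the basis of NSThread, GCD, and NSOperation). The names `shared_counter`, `add_many`, and `count_with_threads` are hypothetical and exist only for this illustration.

```c
#include <assert.h>
#include <pthread.h>

/* Both variables live in the process's address space, so every thread sees them. */
static long shared_counter = 0;
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

/* Thread entry point: increment the shared counter *(int *)arg times. */
static void *add_many(void *arg) {
    int times = *(int *)arg;
    for (int i = 0; i < times; i++) {
        pthread_mutex_lock(&counter_lock);   /* shared data needs synchronization */
        shared_counter++;
        pthread_mutex_unlock(&counter_lock);
    }
    return NULL;
}

/* Hypothetical helper: run nthreads workers and return the final counter value. */
long count_with_threads(int nthreads, int times) {
    pthread_t threads[16];
    assert(nthreads > 0 && nthreads <= 16);
    shared_counter = 0;
    for (int i = 0; i < nthreads; i++) {
        int rc = pthread_create(&threads[i], NULL, add_many, &times);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < nthreads; i++) {
        pthread_join(threads[i], NULL);      /* wait for each worker to finish */
    }
    return shared_counter;
}
```

Calling `count_with_threads(4, 10000)` has four threads update one variable. The mutex is what keeps the total exact, which is also a small preview of the extra design work that shared data brings.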

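Multithreading

- A process can start multiple threads, and each thread can execute a different task concurrently.

- On a single CPU core, only one thread is executing at any given moment.

- On a single core, concurrent execution of multiple threads is really the CPU switching rapidly among them.

- When the switching is fast enough, it creates the illusion that the threads run simultaneously. On multi-core devices, threads on different cores do run in parallel.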

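Advantages

- Can improve a program's execution efficiency when used appropriately

- Can improve resource utilization (CPU and memory) when used appropriately

Disadvantages

- Creating a thread has a cost

- Starting a large number of threads degrades the program's performance

- The more threads there are, the more overhead the CPU spends on scheduling them

- Program design becomes more complex, for example communication between threads and sharing data across threads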

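The Main Thread

- When an iOS app launches, it starts one thread by default, called the "main thread" or "UI thread"

- It displays and refreshes the UI

- It handles UI events (taps, scrolling, dragging, and so on)

- Do not put time-consuming operations on the main thread

Common Multithreading Options on iOS

NSThread, GCD, and NSOperation are all built on top of pthread.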

NSThread

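Checking and Getting Threads

// 1. Get the main thread

NSThread *mainThread = [NSThread mainThread];

// 2. Get the current thread

NSThread *currentThread = [NSThread currentThread];

// 3. Check for the main thread

// Class method: is the current thread the main thread?

BOOL isMainThreadA = [NSThread isMainThread];

// Instance method: is this thread object the main thread?

BOOL isMainThreadB = [currentThread isMainThread];

Creating Threads

// 1. Start the thread manually

// You hold the thread object, so you can configure it in detail

// object: the argument passed to the selector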

NSThread *threadA = [[NSThread alloc]initWithTarget:self selector:@selector(run:) object:@"ABC"];

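// Configure properties

threadA.name = @"Thread A";

// Set the priority: range 0.0 to 1.0, 1.0 is the highest, the default is 0.5

threadA.threadPriority = 1.0;

// Start the thread

[threadA start];

// 2. Create a thread that starts automatically

// The thread cannot be configured in any more detail

[NSThread detachNewThreadSelector:@selector(run:) toTarget:self withObject:@"detached thread"];

// 3. Start a background thread

[self performSelectorInBackground:@selector(run:) withObject:@"background thread"];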

Other Common Methods

// 1. Block (sleep) the current thread

[NSThread sleepForTimeInterval:2.0];

[NSThread sleepUntilDate:[NSDate dateWithTimeIntervalSinceNow:3.0]];

// 2. Switch back to the main thread

// waitUntilDone: whether the caller blocks until the selector has finished

[self performSelectorOnMainThread:@selector(showImage:) withObject:image waitUntilDone:YES];

[self.imageView performSelectorOnMainThread:@selector(setImage:) withObject:image waitUntilDone:YES];

// 3. Choose which thread runs the selector

[self performSelector:@selector(showImage:) onThread:[NSThread mainThread] withObject:image waitUntilDone:YES];

// 4. Exit the current thread

// Note: once a thread has exited, it cannot be restarted

[NSThread exit];

NSOperation

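Basic Use of NSOperation

// 1. Create operations

// Create an operation with alloc/init

NSInvocationOperation *op1 = [[NSInvocationOperation alloc]initWithTarget:self selector:@selector(download1) object:nil];

// Create an operation with a block

NSBlockOperation *op2 = [NSBlockOperation blockOperationWithBlock:^{

NSLog(@"1----%@",[NSThread currentThread]);

}];

// 2. Append a task

// Note: if an operation contains more than one block, additional threads are used and the blocks run concurrently

// Note: a given block does not necessarily run on a background thread; it may run on the main thread

[op2 addExecutionBlock:^{

NSLog(@"2---%@",[NSThread currentThread]);

}];

// 3. Start

[op1 start];

[op2 start];

// 4. Observe completion

// This block is called after the operation has finished

op2.completionBlock = ^{

NSLog(@"%@",[NSThread currentThread]);

};

// 5. Set a dependency (do this before the operations are started or added to a queue)

// Note: circular dependencies are not allowed

// Dependencies may span different queues

[op1 addDependency:op2];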

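Basic Use of Queues

Approach 1

// 1. Create an operation that wraps the task

NSInvocationOperation *op1 = [[NSInvocationOperation alloc]initWithTarget:self selector:@selector(download1) object:nil];

// 2. Create a queue

// A non-main queue can behave as either a concurrent or a serial queue

// By default, a non-main queue is concurrent

NSOperationQueue *queue = [[NSOperationQueue alloc] init];

// 3. Add the operation to the queue

[queue addOperation:op1]; // the queue calls [op1 start] internally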

Approach 2

// 1. Create the operations

NSBlockOperation *op1 = [NSBlockOperation blockOperationWithBlock:^{

NSLog(@"1----%@",[NSThread currentThread]);

}];

NSBlockOperation *op2 = [NSBlockOperation blockOperationWithBlock:^{

NSLog(@"2----%@",[NSThread currentThread]);

}];

// Append another block to op2

[op2 addExecutionBlock:^{

NSLog(@"3----%@",[NSThread currentThread]);

}];

// 2. Create a queue

NSOperationQueue *queue = [[NSOperationQueue alloc]init];

// 3. Add the operations to the queue

[queue addOperation:op1];

[queue addOperation:op2];

Approach 3

// 1. Create a queue

NSOperationQueue *queue = [[NSOperationQueue alloc]init];

// 2. Add a block to the queue directly (no explicit operation object)

[queue addOperationWithBlock:^{

NSLog(@"1----%@",[NSThread currentThread]);

}];

Approach 4

// 1. Create a custom operation

// LLOperation is a subclass of NSOperation

LLOperation *op1 = [[LLOperation alloc]init];

// 2. Inside LLOperation, override the main method

- (void)main {

NSLog(@"main---%@",[NSThread currentThread]);

}

// 3. Create a queue

NSOperationQueue *queue = [[NSOperationQueue alloc]init];

// 4. Add the operation to the queue

[queue addOperation:op1];

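Other Queue Features

NSOperationQueue *queue = [[NSOperationQueue alloc]init];

// 1. Set the maximum number of concurrent operations

/*

The maximum number of operations that may execute at the same time

Serial execution != only one thread is used (the operations are only kept in order)

maxConcurrentOperationCount > 1: the queue is concurrent

maxConcurrentOperationCount == 1: the queue is serial

maxConcurrentOperationCount == 0: no operations are executed

maxConcurrentOperationCount == -1: special value (the default); the system decides the limit

*/

queue.maxConcurrentOperationCount = 5;

// 2. Suspend (can be resumed)

// YES suspends the queue, NO resumes it

/*

Operations in a queue have states: finished | executing | waiting in the queue

An operation that is already executing cannot be suspended

*/

[queue setSuspended:YES];

// 3. Cancel (cannot be undone)

// Internally this calls cancel on every operation in the queue

[queue cancelAllOperations];

// 4. Get the main queue

// Operations run on the main thread; no new thread is started

NSOperationQueue *mainQueue = [NSOperationQueue mainQueue];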

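Summary:

- NSOperation is an abstract class and cannot wrap a task by itself; you must use one of its subclasses

- By default, calling start on an NSInvocationOperation does not start a new thread; the operation runs synchronously on the current thread

- An operation runs asynchronously only when it is added to an NSOperationQueue

- As soon as an NSBlockOperation wraps more than one block, the blocks run asynchronously

- Two operations must not depend on each other, because that creates a circular dependency

- Call - (BOOL)isCancelled regularly to check whether the operation has been cancelled, and respond to the cancellation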
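The cancellation point deserves a concrete picture: cancelling only sets a flag, and the running work has to poll that flag and stop on its own. Below is a minimal sketch of that pattern in plain C. `operation_t`, `operation_cancel`, and `operation_main` are hypothetical names, and the `cancel_after` parameter merely simulates an outside caller cancelling partway through.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for an operation that carries a "cancelled" flag. */
typedef struct {
    atomic_bool cancelled;
    int steps_done;
} operation_t;

void operation_init(operation_t *op) {
    assert(op != NULL);
    atomic_init(&op->cancelled, false);
    op->steps_done = 0;
}

/* Cancelling only sets the flag; it does not interrupt running work. */
void operation_cancel(operation_t *op) {
    atomic_store(&op->cancelled, true);
}

/* The operation's body: check the flag before each unit of work.
   cancel_after simulates an external cancel once that many steps are done
   (pass -1 to never cancel). Returns the number of completed steps. */
int operation_main(operation_t *op, int steps, int cancel_after) {
    for (int i = 0; i < steps; i++) {
        if (op->steps_done == cancel_after) {
            operation_cancel(op);            /* simulated outside caller */
        }
        if (atomic_load(&op->cancelled)) {
            break;                           /* respond to cancellation */
        }
        op->steps_done++;
    }
    return op->steps_done;
}
```

The more often the body checks the flag, the sooner it reacts; a body that never checks it runs to completion even after being cancelled.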

GCD

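GCD Queues

GCD queues fall into two main types:

- Concurrent queue (Concurrent Dispatch Queue)

  - Lets multiple tasks execute concurrently (GCD automatically uses multiple threads to run them)

  - Concurrency only takes effect with the asynchronous function dispatch_async

- Serial queue (Serial Dispatch Queue)

  - Executes tasks one after another (the next task starts only after the previous one finishes)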

// 1. Create a queue

/*

First argument: a C string used as the label

Second argument: the queue type

DISPATCH_QUEUE_CONCURRENT: concurrent

DISPATCH_QUEUE_SERIAL: serial

*/

// Concurrent queue

dispatch_queue_t queue = dispatch_queue_create("com.haha", DISPATCH_QUEUE_CONCURRENT);

// Serial queue

dispatch_queue_t queue = dispatch_queue_create("com.haha", DISPATCH_QUEUE_SERIAL);

// 2. Get a global concurrent queue

// First argument: the priority

dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0);

// 3. Get the main queue

dispatch_queue_t queue = dispatch_get_main_queue();

// 4. Asynchronous function

dispatch_async(queue, ^{

NSLog(@"download1----%@",[NSThread currentThread]);

});

// 5. Synchronous function

dispatch_sync(queue, ^{

NSLog(@"download2----%@",[NSThread currentThread]);

});

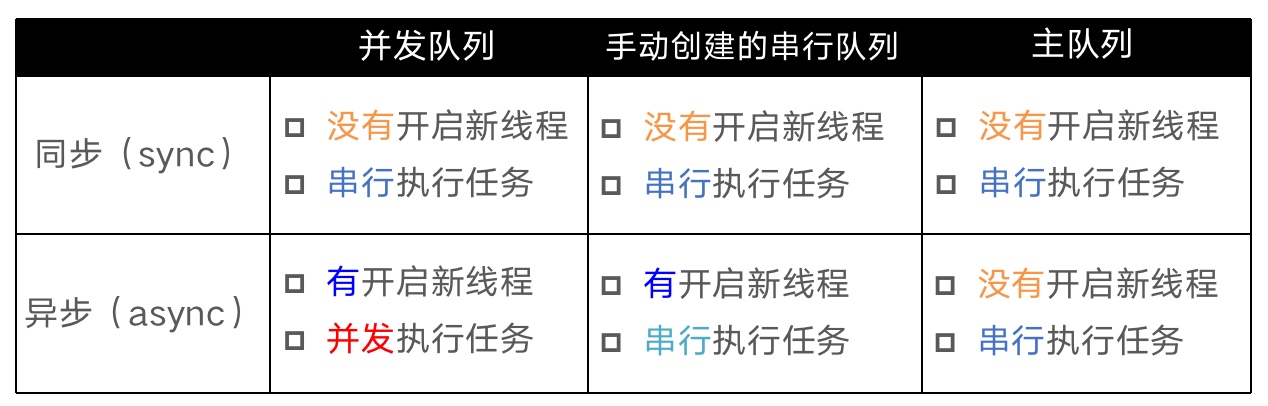

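Notes on sync, async, concurrent, and serial:

- Sync vs. async mainly determines whether a new thread can be started

  - Sync: the task runs on the current thread; no new thread can be started

  - Async: the task may run on a new thread; a new thread can be started, but is not always

- Concurrent vs. serial mainly determines how tasks are executed

  - Concurrent: multiple tasks execute concurrently

  - Serial: the next task starts only after the previous one finishes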
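To make the sync/async distinction tangible without GCD, here is a rough sketch in plain C with pthreads. It is an analogy only: `run_sync` and `run_async` are hypothetical helpers, and `run_async` always creates a thread, whereas GCD reuses threads from a pool that it manages itself.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>

typedef void (*task_fn)(void *ctxt);

/* "Sync": run the task on the calling thread and return when it is done. */
void run_sync(task_fn fn, void *ctxt) {
    fn(ctxt);
}

typedef struct { task_fn fn; void *ctxt; } async_call_t;

static void *async_trampoline(void *arg) {
    async_call_t *call = arg;
    call->fn(call->ctxt);
    return NULL;
}

/* "Async": hand the task to another thread and return immediately.
   The caller must keep *call alive and join the returned thread later. */
pthread_t run_async(async_call_t *call) {
    pthread_t tid;
    int rc = pthread_create(&tid, NULL, async_trampoline, call);
    assert(rc == 0);
    (void)rc;
    return tid;
}

/* Example task: record which thread executed it. */
static void record_thread(void *ctxt) {
    *(pthread_t *)ctxt = pthread_self();
}

/* True when a synchronously run task executed on the caller's thread. */
bool sync_runs_on_caller(void) {
    pthread_t ran_on;
    run_sync(record_thread, &ran_on);
    return pthread_equal(ran_on, pthread_self()) != 0;
}

/* True when an asynchronously run task executed on the caller's thread. */
bool async_runs_on_caller(void) {
    pthread_t ran_on;
    async_call_t call = { record_thread, &ran_on };
    pthread_t tid = run_async(&call);
    pthread_join(tid, NULL);
    return pthread_equal(ran_on, pthread_self()) != 0;
}
```

In this sketch `sync_runs_on_caller()` is true and `async_runs_on_caller()` is false, which mirrors the thread numbers printed by the GCD examples in this section.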

Synchronous dispatch to a serial queue

// With dispatch_sync, no new thread is started, whichever kind of queue is used

dispatch_queue_t queue = dispatch_queue_create("com.haha", DISPATCH_QUEUE_SERIAL);

// dispatch_queue_t queue = dispatch_queue_create("com.haha", DISPATCH_QUEUE_CONCURRENT);

// dispatch_queue_t queue = dispatch_get_main_queue();

dispatch_sync(queue, ^{

NSLog(@"%@", [NSThread currentThread]);

});

dispatch_sync(queue, ^{

NSLog(@"%@", [NSThread currentThread]);

});

// Output:

// <NSThread: 0x6000020198c0>{number = 1, name = main}

// <NSThread: 0x6000020191c0>{number = 1, name = main}

Asynchronous dispatch to a concurrent queue

// Concurrent queue

dispatch_queue_t queue = dispatch_queue_create("com.haha", DISPATCH_QUEUE_CONCURRENT);

dispatch_async(queue, ^{

NSLog(@"%@", [NSThread currentThread]);

});

dispatch_async(queue, ^{

NSLog(@"%@", [NSThread currentThread]);

});

// Output:

// <NSThread: 0x6000020198c0>{number = 4, name = (null)}

// <NSThread: 0x6000020191c0>{number = 5, name = (null)}

Asynchronous dispatch to a serial queue

// Serial queue

dispatch_queue_t queue = dispatch_queue_create("com.haha", DISPATCH_QUEUE_SERIAL);

dispatch_async(queue, ^{

NSLog(@"%@", [NSThread currentThread]);

});

dispatch_async(queue, ^{

NSLog(@"%@", [NSThread currentThread]);

});

// Output:

// <NSThread: 0x6000020198c0>{number = 5, name = (null)}

// <NSThread: 0x6000020191c0>{number = 5, name = (null)}

On the main queue, neither sync nor async dispatch starts a new thread

dispatch_queue_t queue = dispatch_get_main_queue();

dispatch_async(queue, ^{

NSLog(@"%@", [NSThread currentThread]);

});

// Output:

// <NSThread: 0x6000020198c0>{number = 1, name = main}

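However, using dispatch_sync to add a task to the serial queue that is currently executing blocks that queue and causes a deadlock.

To summarize the cases above: dispatch_sync never starts a new thread; dispatch_async uses new threads on a concurrent queue, one new thread on a serial queue you created, and no new thread on the main queue.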

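Differences between dispatch_get_global_queue and dispatch_queue_create

If we obtain both kinds of queue in code and print them, the global queue has the same address every time, while every queue returned by dispatch_queue_create is a different object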

dispatch_queue_t queue1 = dispatch_get_global_queue(0, 0);

dispatch_queue_t queue2 = dispatch_get_global_queue(0, 0);

dispatch_queue_t queue3 = dispatch_queue_create("queu3", DISPATCH_QUEUE_CONCURRENT);

dispatch_queue_t queue4 = dispatch_queue_create("queu4", DISPATCH_QUEUE_CONCURRENT);

dispatch_queue_t queue5 = dispatch_queue_create("queu5", DISPATCH_QUEUE_CONCURRENT);

NSLog(@"%p %p %p %p %p", queue1, queue2, queue3, queue4, queue5);

// Output: 0x10c5d8080 0x10c5d8080 0x6000037c3180 0x6000037c1580 0x6000037c3200

GCD Dispatch Groups

Approach 1

// 1. Get a queue

dispatch_queue_t queue = dispatch_get_global_queue(0, 0);

// 2. Create a group

dispatch_group_t group = dispatch_group_create();

// 3. Add tasks to the queue, tracked by the group

dispatch_group_async(group, queue, ^{

NSLog(@"1----%@",[NSThread currentThread]);

});

dispatch_group_async(group, queue, ^{

NSLog(@"2----%@",[NSThread currentThread]);

});

// 4. Get notified: the block below runs once every task in the group has finished

dispatch_group_notify(group, queue, ^{

NSLog(@"-------dispatch_group_notify-------");

});

Approach 2

// 1. Get a queue

dispatch_queue_t queue = dispatch_get_global_queue(0, 0);

// 2. Create a group

dispatch_group_t group = dispatch_group_create();

// 3. Enter the group: the asynchronous task that follows this call is tracked by the group

// dispatch_group_enter and dispatch_group_leave must always be used in pairs

dispatch_group_enter(group);

dispatch_async(queue, ^{

NSLog(@"1----%@",[NSThread currentThread]);

// Leave the group

dispatch_group_leave(group);

});

dispatch_group_enter(group);

dispatch_async(queue, ^{

NSLog(@"2----%@",[NSThread currentThread]);

// Leave the group

dispatch_group_leave(group);

});

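// Get notified when all tasks have left the group

// dispatch_group_notify is itself asynchronous and does not block the caller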

dispatch_group_notify(group, queue, ^{

NSLog(@"-------dispatch_group_notify-------");

});

Approach 3

// 1. Get a queue

dispatch_queue_t queue = dispatch_get_global_queue(0, 0);

// 2. Create a group

dispatch_group_t group = dispatch_group_create();

// 3. Add tasks to the queue, tracked by the group

dispatch_group_async(group, queue, ^{

NSLog(@"1----%@",[NSThread currentThread]);

});

dispatch_group_async(group, queue, ^{

NSLog(@"2----%@",[NSThread currentThread]);

});

// 4. Block the current thread

// The code after this call runs only after every task in the group has finished

dispatch_group_wait(group, DISPATCH_TIME_FOREVER);

NSLog(@"----end----");
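The enter/leave/wait behaviour shown in the three approaches can be pictured as a counter guarded by a lock. The following is a minimal sketch of that idea in C with pthreads; it is not how libdispatch implements groups, and `task_group_t`, `group_enter`, `group_leave`, `group_wait`, and `run_two_tasks` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical group: counts tasks that have entered but not yet left. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  all_done;
    int             outstanding;
} task_group_t;

void group_init(task_group_t *g) {
    pthread_mutex_init(&g->lock, NULL);
    pthread_cond_init(&g->all_done, NULL);
    g->outstanding = 0;
}

void group_enter(task_group_t *g) {
    pthread_mutex_lock(&g->lock);
    g->outstanding++;
    pthread_mutex_unlock(&g->lock);
}

void group_leave(task_group_t *g) {
    pthread_mutex_lock(&g->lock);
    assert(g->outstanding > 0);              /* every leave needs a prior enter */
    if (--g->outstanding == 0) {
        pthread_cond_broadcast(&g->all_done);
    }
    pthread_mutex_unlock(&g->lock);
}

/* Block the caller until every entered task has left. */
void group_wait(task_group_t *g) {
    pthread_mutex_lock(&g->lock);
    while (g->outstanding > 0) {
        pthread_cond_wait(&g->all_done, &g->lock);
    }
    pthread_mutex_unlock(&g->lock);
}

typedef struct { task_group_t *group; int *slot; int value; } job_t;

static void *job_main(void *arg) {
    job_t *job = arg;
    *job->slot = job->value;                 /* the "task" */
    group_leave(job->group);
    return NULL;
}

/* Demo: start two tasks, wait for the group, return the sum of their results. */
int run_two_tasks(void) {
    task_group_t group;
    int results[2] = { 0, 0 };
    pthread_t threads[2];
    group_init(&group);
    job_t jobs[2] = { { &group, &results[0], 1 }, { &group, &results[1], 2 } };
    for (int i = 0; i < 2; i++) {
        group_enter(&group);                 /* enter before the task starts */
        int rc = pthread_create(&threads[i], NULL, job_main, &jobs[i]);
        assert(rc == 0);
        (void)rc;
    }
    group_wait(&group);                      /* blocks until both have left */
    int sum = results[0] + results[1];
    for (int i = 0; i < 2; i++) {
        pthread_join(threads[i], NULL);
    }
    pthread_cond_destroy(&group.all_done);
    pthread_mutex_destroy(&group.lock);
    return sum;
}
```

The sketch also shows why enter and leave must be paired: one missing leave keeps the counter above zero and the waiter blocks forever, while an extra leave trips the assertion.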

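Other Common Functions

// 1. Ways to delay execution

// 1.1 performSelector:withObject:afterDelay:

[self performSelector:@selector(task) withObject:nil afterDelay:2.0];

// 1.2 NSTimer

// repeats: whether the timer fires repeatedly; NO gives a single delayed call

[NSTimer scheduledTimerWithTimeInterval:2.0 target:self selector:@selector(task) userInfo:nil repeats:NO];

// 1.3 dispatch_after

// The queue argument controls which thread runs the delayed block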

dispatch_queue_t queue = dispatch_get_global_queue(0, 0);

dispatch_after(dispatch_time(DISPATCH_TIME_NOW, (int64_t)(2.0 * NSEC_PER_SEC)), queue, ^{

NSLog(@"GCD----%@",[NSThread currentThread]);

});

// 2. One-time code

// Runs only once for the lifetime of the program

// onceToken records whether the block has already been executed

static dispatch_once_t onceToken;

dispatch_once(&onceToken, ^{

NSLog(@"---once----");

});

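// 3. Concurrent iteration

// Iterations run on multiple threads; dispatch_apply returns after all of them have finished

/*

First argument: the number of iterations

Second argument: the queue (a concurrent queue)

Third argument: the block, which receives the iteration index

*/

dispatch_apply(10, dispatch_get_global_queue(0, 0), ^(size_t index) {

NSLog(@"%zu---%@",index,[NSThread currentThread]);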

});

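// 4. Barrier function

// Barriers cannot be used with the global concurrent queues; use a queue created with dispatch_queue_create

// Tasks submitted after the barrier run only after the barrier block has finished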

dispatch_queue_t queue = dispatch_queue_create("download", DISPATCH_QUEUE_CONCURRENT);

dispatch_async(queue, ^{

NSLog(@"%@", [NSThread currentThread]);

});

dispatch_barrier_async(queue, ^{

NSLog(@"+++++++++++++++++++++++++++++");

});

dispatch_async(queue, ^{

NSLog(@"%@", [NSThread currentThread]);

});
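For comparison with the one-time code in item 2 above, POSIX offers the same run-exactly-once guarantee through `pthread_once`. This sketch shows only the pthread analogue, not how `dispatch_once` is implemented; `init_once` and `ensure_initialized` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>

/* Plays the role of the static onceToken: records whether init already ran. */
static pthread_once_t once_token = PTHREAD_ONCE_INIT;
static int init_calls = 0;

/* The one-time initializer. */
static void init_once(void) {
    init_calls++;
}

/* Safe to call any number of times, from any thread: init_once runs on the
   first call only. Returns how many times the initializer has run. */
int ensure_initialized(void) {
    int rc = pthread_once(&once_token, init_once);
    assert(rc == 0);
    (void)rc;
    return init_calls;
}
```

As with `dispatch_once`, the token must have static storage so that every caller checks the same state.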

Analyzing the Underlying Implementation

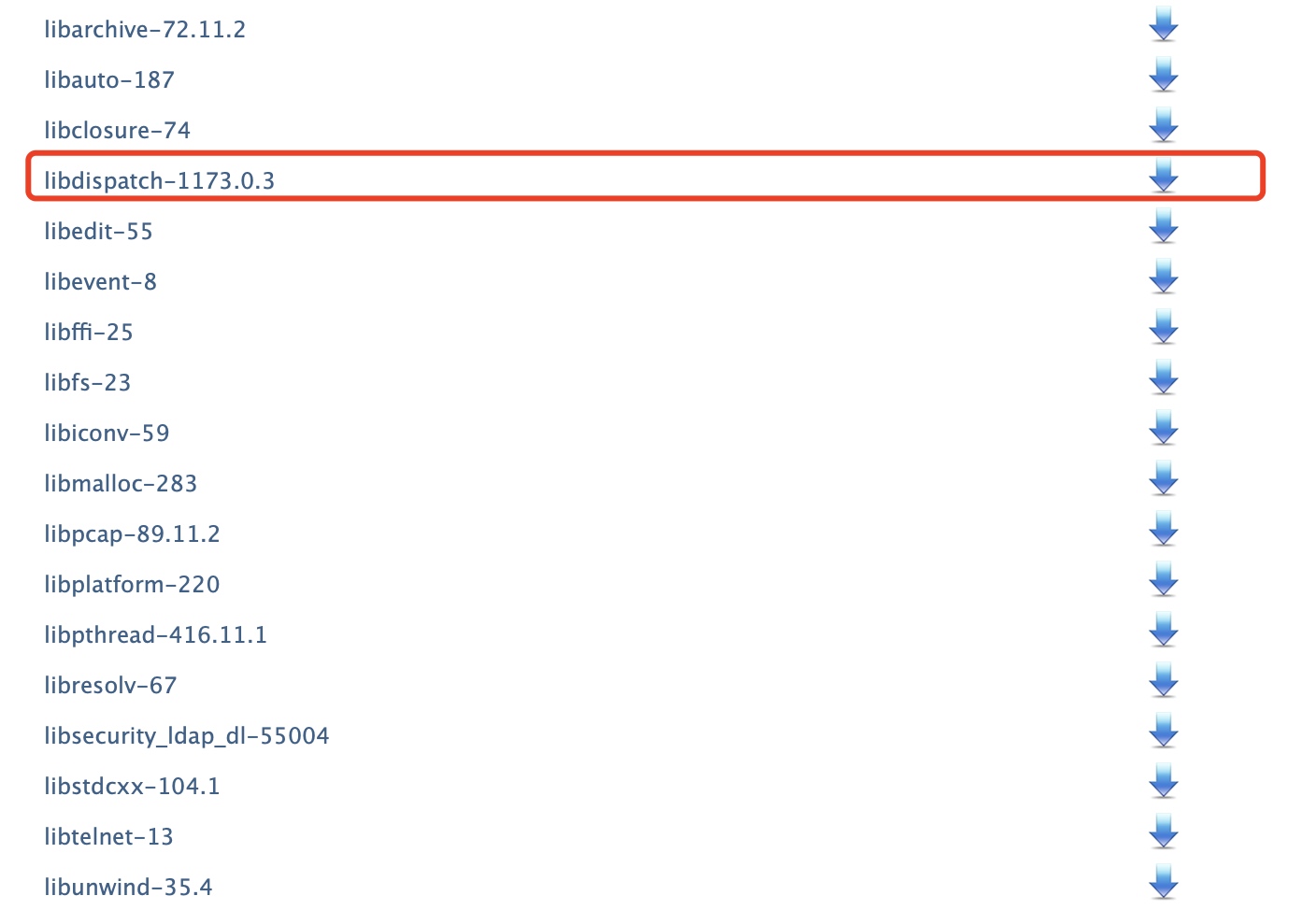

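Downloading the Source

We can analyze the internals using the source of libdispatch.dylib, the library that implements GCD.

Download page for libdispatch.dylib: https://opensource.apple.com/release/macos-1015.html

Find libdispatch-1173.0.3 on that page and download it.

Source Analysis

How dispatch_queue_create is implemented

Searching for dispatch_queue_create in queue.c leads to its implementation: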

dispatch_queue_t

dispatch_queue_create(const char *label, dispatch_queue_attr_t attr)

{

return _dispatch_lane_create_with_target(label, attr,

DISPATCH_TARGET_QUEUE_DEFAULT, true);

}

Next, step into _dispatch_lane_create_with_target:

DISPATCH_NOINLINE

static dispatch_queue_t

_dispatch_lane_create_with_target(const char *label, dispatch_queue_attr_t dqa,

dispatch_queue_t tq, bool legacy)

{

// Build dqai from the queue attribute

dispatch_queue_attr_info_t dqai = _dispatch_queue_attr_to_info(dqa);

//

// Step 1: Normalize arguments (qos, overcommit, tq)

dispatch_qos_t qos = dqai.dqai_qos;

#if !HAVE_PTHREAD_WORKQUEUE_QOS

if (qos == DISPATCH_QOS_USER_INTERACTIVE) {

dqai.dqai_qos = qos = DISPATCH_QOS_USER_INITIATED;

}

if (qos == DISPATCH_QOS_MAINTENANCE) {

dqai.dqai_qos = qos = DISPATCH_QOS_BACKGROUND;

}

#endif // !HAVE_PTHREAD_WORKQUEUE_QOS

_dispatch_queue_attr_overcommit_t overcommit = dqai.dqai_overcommit;

if (overcommit != _dispatch_queue_attr_overcommit_unspecified && tq) {

if (tq->do_targetq) {

DISPATCH_CLIENT_CRASH(tq, "Cannot specify both overcommit and "

"a non-global target queue");

}

}

if (tq && dx_type(tq) == DISPATCH_QUEUE_GLOBAL_ROOT_TYPE) {

// Handle discrepancies between attr and target queue, attributes win

if (overcommit == _dispatch_queue_attr_overcommit_unspecified) {

if (tq->dq_priority & DISPATCH_PRIORITY_FLAG_OVERCOMMIT) {

overcommit = _dispatch_queue_attr_overcommit_enabled;

} else {

overcommit = _dispatch_queue_attr_overcommit_disabled;

}

}

if (qos == DISPATCH_QOS_UNSPECIFIED) {

qos = _dispatch_priority_qos(tq->dq_priority);

}

tq = NULL;

} else if (tq && !tq->do_targetq) {

// target is a pthread or runloop root queue, setting QoS or overcommit

// is disallowed

if (overcommit != _dispatch_queue_attr_overcommit_unspecified) {

DISPATCH_CLIENT_CRASH(tq, "Cannot specify an overcommit attribute "

"and use this kind of target queue");

}

} else {

if (overcommit == _dispatch_queue_attr_overcommit_unspecified) {

// Serial queues default to overcommit!

overcommit = dqai.dqai_concurrent ?

_dispatch_queue_attr_overcommit_disabled :

_dispatch_queue_attr_overcommit_enabled;

}

}

if (!tq) {

tq = _dispatch_get_root_queue(

qos == DISPATCH_QOS_UNSPECIFIED ? DISPATCH_QOS_DEFAULT : qos,

overcommit == _dispatch_queue_attr_overcommit_enabled)->_as_dq;

if (unlikely(!tq)) {

DISPATCH_CLIENT_CRASH(qos, "Invalid queue attribute");

}

}

//

// Step 2: Initialize the queue

if (legacy) {

// if any of these attributes is specified, use non legacy classes

if (dqai.dqai_inactive || dqai.dqai_autorelease_frequency) {

legacy = false;

}

}

// Choose the vtable (queue class) depending on whether the queue is concurrent or serial

const void *vtable;

dispatch_queue_flags_t dqf = legacy ? DQF_MUTABLE : 0;

if (dqai.dqai_concurrent) {

vtable = DISPATCH_VTABLE(queue_concurrent);

} else {

vtable = DISPATCH_VTABLE(queue_serial);

}

switch (dqai.dqai_autorelease_frequency) {

case DISPATCH_AUTORELEASE_FREQUENCY_NEVER:

dqf |= DQF_AUTORELEASE_NEVER;

break;

case DISPATCH_AUTORELEASE_FREQUENCY_WORK_ITEM:

dqf |= DQF_AUTORELEASE_ALWAYS;

break;

}

if (label) {

const char *tmp = _dispatch_strdup_if_mutable(label);

if (tmp != label) {

dqf |= DQF_LABEL_NEEDS_FREE;

label = tmp;

}

}

// Allocate the queue object, then initialize it

dispatch_lane_t dq = _dispatch_object_alloc(vtable,

sizeof(struct dispatch_lane_s));

// dqai.dqai_concurrent decides the width: DISPATCH_QUEUE_WIDTH_MAX for a concurrent queue, 1 for a serial queue

_dispatch_queue_init(dq, dqf, dqai.dqai_concurrent ?

DISPATCH_QUEUE_WIDTH_MAX : 1, DISPATCH_QUEUE_ROLE_INNER |

(dqai.dqai_inactive ? DISPATCH_QUEUE_INACTIVE : 0));

// Store the queue's label

dq->dq_label = label;

dq->dq_priority = _dispatch_priority_make((dispatch_qos_t)dqai.dqai_qos,

dqai.dqai_relpri);

if (overcommit == _dispatch_queue_attr_overcommit_enabled) {

dq->dq_priority |= DISPATCH_PRIORITY_FLAG_OVERCOMMIT;

}

if (!dqai.dqai_inactive) {

_dispatch_queue_priority_inherit_from_target(dq, tq);

_dispatch_lane_inherit_wlh_from_target(dq, tq);

}

_dispatch_retain(tq);

dq->do_targetq = tq;

_dispatch_object_debug(dq, "%s", __func__);

return _dispatch_trace_queue_create(dq)._dq;

}

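_dispatch_trace_queue_create then calls, step by step, _dispatch_introspection_queue_create -> _dispatch_introspection_queue_create_hook -> dispatch_introspection_queue_get_info, and finally reaches _dispatch_introspection_lane_get_info, which fills in a template structure (dispatch_introspection_queue_s) with the queue's information: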

DISPATCH_ALWAYS_INLINE

static inline dispatch_introspection_queue_s

_dispatch_introspection_lane_get_info(dispatch_lane_class_t dqu)

{

dispatch_lane_t dq = dqu._dl;

bool global = _dispatch_object_is_global(dq);

uint64_t dq_state = os_atomic_load2o(dq, dq_state, relaxed);

dispatch_introspection_queue_s diq = {

.queue = dq->_as_dq,

.target_queue = dq->do_targetq,

.label = dq->dq_label,

.serialnum = dq->dq_serialnum,

.width = dq->dq_width,

.suspend_count = _dq_state_suspend_cnt(dq_state) + dq->dq_side_suspend_cnt,

.enqueued = _dq_state_is_enqueued(dq_state) && !global,

.barrier = _dq_state_is_in_barrier(dq_state) && !global,

.draining = (dq->dq_items_head == (void*)~0ul) ||

(!dq->dq_items_head && dq->dq_items_tail),

.global = global,

.main = dx_type(dq) == DISPATCH_QUEUE_MAIN_TYPE,

};

return diq;

}

_dispatch_lane_create_with_target, step by step

1. _dispatch_queue_attr_to_info is called with dqa and produces dqai, a dispatch_queue_attr_info_t that stores the queue's attribute information

dispatch_queue_attr_info_t

_dispatch_queue_attr_to_info(dispatch_queue_attr_t dqa)

{

dispatch_queue_attr_info_t dqai = { };

if (!dqa) return dqai;

#if DISPATCH_VARIANT_STATIC

// A NULL dqa (handled above) means the default, a serial queue

if (dqa == &_dispatch_queue_attr_concurrent) {

dqai.dqai_concurrent = true;

return dqai;

}

#endif

if (dqa < _dispatch_queue_attrs ||

dqa >= &_dispatch_queue_attrs[DISPATCH_QUEUE_ATTR_COUNT]) {

DISPATCH_CLIENT_CRASH(dqa->do_vtable, "Invalid queue attribute");

}

size_t idx = (size_t)(dqa - _dispatch_queue_attrs);

dqai.dqai_inactive = (idx % DISPATCH_QUEUE_ATTR_INACTIVE_COUNT);

idx /= DISPATCH_QUEUE_ATTR_INACTIVE_COUNT;

dqai.dqai_concurrent = !(idx % DISPATCH_QUEUE_ATTR_CONCURRENCY_COUNT);

idx /= DISPATCH_QUEUE_ATTR_CONCURRENCY_COUNT;

dqai.dqai_relpri = -(int)(idx % DISPATCH_QUEUE_ATTR_PRIO_COUNT);

idx /= DISPATCH_QUEUE_ATTR_PRIO_COUNT;

dqai.dqai_qos = idx % DISPATCH_QUEUE_ATTR_QOS_COUNT;

idx /= DISPATCH_QUEUE_ATTR_QOS_COUNT;

dqai.dqai_autorelease_frequency =

idx % DISPATCH_QUEUE_ATTR_AUTORELEASE_FREQUENCY_COUNT;

idx /= DISPATCH_QUEUE_ATTR_AUTORELEASE_FREQUENCY_COUNT;

dqai.dqai_overcommit = idx % DISPATCH_QUEUE_ATTR_OVERCOMMIT_COUNT;

idx /= DISPATCH_QUEUE_ATTR_OVERCOMMIT_COUNT;

return dqai;

}
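The chain of `%` and `/=` operations above is a mixed-radix decode: a single array index packs several small fields, and each field is peeled off from the least significant end. The sketch below reproduces the first three steps of that arithmetic with made-up field sizes. The `*_COUNT` values, `attr_info_t`, `attr_encode`, and `attr_decode` are assumptions for illustration, not libdispatch's real constants or API.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical field sizes, chosen only for this illustration. */
enum { INACTIVE_COUNT = 2, CONCURRENCY_COUNT = 2, PRIO_COUNT = 16 };

typedef struct {
    bool inactive;
    bool concurrent;
    int  relpri;      /* relative priority, 0 down to -(PRIO_COUNT - 1) */
} attr_info_t;

/* Pack the fields into one index, most significant field first. */
size_t attr_encode(attr_info_t info) {
    assert(info.relpri <= 0 && info.relpri > -PRIO_COUNT);
    size_t idx = (size_t)(-info.relpri);
    idx = idx * CONCURRENCY_COUNT + (info.concurrent ? 0 : 1);
    idx = idx * INACTIVE_COUNT + (info.inactive ? 1 : 0);
    return idx;
}

/* Peel the fields off again with the same modulo / divide steps as above. */
attr_info_t attr_decode(size_t idx) {
    attr_info_t info;
    info.inactive = (idx % INACTIVE_COUNT) != 0;
    idx /= INACTIVE_COUNT;
    info.concurrent = !(idx % CONCURRENCY_COUNT);   /* remainder 0 means concurrent */
    idx /= CONCURRENCY_COUNT;
    info.relpri = -(int)(idx % PRIO_COUNT);
    return info;
}
```

With these sizes, an active, concurrent attribute with relative priority -3 encodes to 3 * 2 * 2 = 12, and decoding 12 yields the same three fields again.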

2. The queue dq is allocated with _dispatch_object_alloc

void *

_dispatch_object_alloc(const void *vtable, size_t size)

{

#if OS_OBJECT_HAVE_OBJC1

const struct dispatch_object_vtable_s *_vtable = vtable;

dispatch_object_t dou;

dou._os_obj = _os_object_alloc_realized(_vtable->_os_obj_objc_isa, size);

dou._do->do_vtable = vtable;

return dou._do;

#else

return _os_object_alloc_realized(vtable, size);

#endif

}

Internally this calls _os_object_alloc_realized. The queue stores an isa pointer, so a queue is also an object:

inline _os_object_t

_os_object_alloc_realized(const void *cls, size_t size)

{

_os_object_t obj;

dispatch_assert(size >= sizeof(struct _os_object_s));

while (unlikely(!(obj = calloc(1u, size)))) {

_dispatch_temporary_resource_shortage();

}

obj->os_obj_isa = cls;

return obj;

}

3. _dispatch_queue_init sets the queue's properties

static inline dispatch_queue_class_t

_dispatch_queue_init(dispatch_queue_class_t dqu, dispatch_queue_flags_t dqf,

uint16_t width, uint64_t initial_state_bits)

{

uint64_t dq_state = DISPATCH_QUEUE_STATE_INIT_VALUE(width);

dispatch_queue_t dq = dqu._dq;

dispatch_assert((initial_state_bits & ~(DISPATCH_QUEUE_ROLE_MASK |

DISPATCH_QUEUE_INACTIVE)) == 0);

if (initial_state_bits & DISPATCH_QUEUE_INACTIVE) {

dq->do_ref_cnt += 2; // rdar://8181908 see _dispatch_lane_resume

if (dx_metatype(dq) == _DISPATCH_SOURCE_TYPE) {

dq->do_ref_cnt++; // released when DSF_DELETED is set

}

}

dq_state |= initial_state_bits;

dq->do_next = DISPATCH_OBJECT_LISTLESS;

dqf |= DQF_WIDTH(width);

os_atomic_store2o(dq, dq_atomic_flags, dqf, relaxed);

dq->dq_state = dq_state;

dq->dq_serialnum =

os_atomic_inc_orig(&_dispatch_queue_serial_numbers, relaxed);

return dqu;

}

Summary:

The analysis above can be summarized in the following diagram:

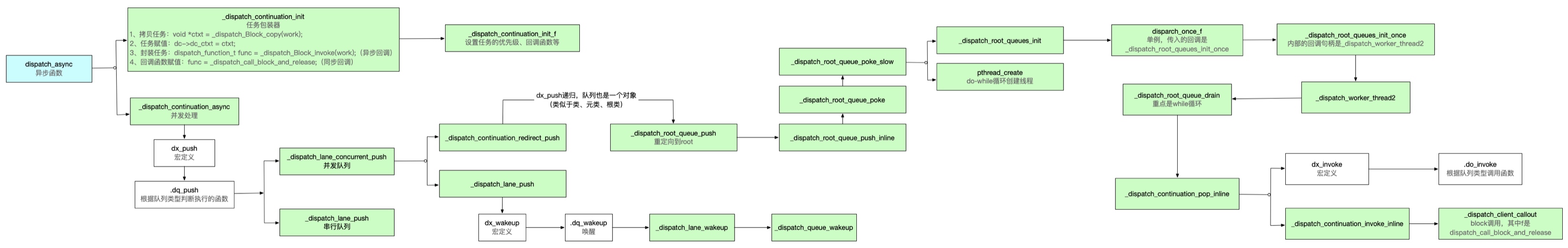

How dispatch_async is implemented

In queue.c, dispatch_async is implemented as follows:

void

dispatch_async(dispatch_queue_t dq, dispatch_block_t work)

{

dispatch_continuation_t dc = _dispatch_continuation_alloc();

uintptr_t dc_flags = DC_FLAG_CONSUME;

dispatch_qos_t qos;

// Wrap the task (block) in a continuation

qos = _dispatch_continuation_init(dc, dq, work, 0, dc_flags);

// Submit the continuation to the queue

_dispatch_continuation_async(dq, dc, qos, dc->dc_flags);

}

1. _dispatch_continuation_init wraps the task

DISPATCH_ALWAYS_INLINE

static inline dispatch_qos_t

_dispatch_continuation_init(dispatch_continuation_t dc,

dispatch_queue_class_t dqu, dispatch_block_t work,

dispatch_block_flags_t flags, uintptr_t dc_flags)

{

// Copy the block

void *ctxt = _dispatch_Block_copy(work);

dc_flags |= DC_FLAG_BLOCK | DC_FLAG_ALLOCATED;

if (unlikely(_dispatch_block_has_private_data(work))) {

dc->dc_flags = dc_flags;

dc->dc_ctxt = ctxt; // store the copied block as the context

// will initialize all fields but requires dc_flags & dc_ctxt to be set

return _dispatch_continuation_init_slow(dc, dqu, flags);

}

// Pick the function that will be called when the task runs

dispatch_function_t func = _dispatch_Block_invoke(work);

if (dc_flags & DC_FLAG_CONSUME) {

func = _dispatch_call_block_and_release;

}

return _dispatch_continuation_init_f(dc, dqu, ctxt, func, flags, dc_flags);

}

_dispatch_Block_invoke is a macro that extracts the block's invoke function pointer, which is how the task gets wrapped:

#define _dispatch_Block_invoke(bb) \

((dispatch_function_t)((struct Block_layout *)bb)->invoke)
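The wrapping step boils down to storing a function pointer together with a context pointer, so the work can be run later by whoever holds the wrapper. Here is a heavily simplified sketch of that idea in C. `continuation_t`, `continuation_create`, and `continuation_invoke_and_free` are hypothetical names, and the real dispatch_continuation_s carries many more fields (flags, priority, voucher).

```c
#include <assert.h>
#include <stdlib.h>

typedef void (*work_fn)(void *ctxt);

/* Hypothetical, much reduced continuation: what to call and with what context. */
typedef struct {
    work_fn func;
    void   *ctxt;
} continuation_t;

/* Wrap a function and its context so they can be executed later. */
continuation_t *continuation_create(work_fn func, void *ctxt) {
    continuation_t *c = malloc(sizeof *c);
    assert(c != NULL);
    c->func = func;
    c->ctxt = ctxt;
    return c;
}

/* Run the stored work once, then release the wrapper. */
void continuation_invoke_and_free(continuation_t *c) {
    c->func(c->ctxt);
    free(c);
}

/* Example task: add 10 to the int the context points to. */
static void add_ten(void *ctxt) {
    *(int *)ctxt += 10;
}

/* Demo: wrap add_ten with a context, invoke it, and return the result. */
int demo_continuation(int start) {
    int value = start;
    continuation_t *c = continuation_create(add_ten, &value);
    continuation_invoke_and_free(c);
    return value;
}
```

Separating "what to run" from "when and where to run it" is what allows a queue to hold the work item and execute it on a different thread later.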

2. _dispatch_continuation_async submits the task to the queue

DISPATCH_ALWAYS_INLINE

static inline void

_dispatch_continuation_async(dispatch_queue_class_t dqu,

dispatch_continuation_t dc, dispatch_qos_t qos, uintptr_t dc_flags)

{

#if DISPATCH_INTROSPECTION

if (!(dc_flags & DC_FLAG_NO_INTROSPECTION)) {

_dispatch_trace_item_push(dqu, dc); // trace logging

}

#else

(void)dc_flags;

#endif

return dx_push(dqu._dq, dc, qos);

}

dx_push is a macro:

#define dx_push(x, y, z) dx_vtable(x)->dq_push(x, y, z)

The dq_push it calls is looked up through the queue's vtable, so a different function runs depending on the type of the queue
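Dispatching through a per-type table of function pointers is a common C pattern. The sketch below shows the shape of it with two hypothetical queue kinds. `queue_vtable_t`, `qx_push`, `make_queue`, and the return codes are invented for this illustration and do not match libdispatch's actual types.

```c
#include <assert.h>
#include <stddef.h>

struct queue_s;

/* Hypothetical vtable: one push function per queue kind. */
typedef struct {
    const char *kind;
    int (*push)(struct queue_s *q, int item);
} queue_vtable_t;

typedef struct queue_s {
    const queue_vtable_t *vtable;   /* selected once, when the queue is created */
    int last_item;
} queue_t;

/* Same shape as dx_push: look up the function in the vtable, then call it. */
#define qx_push(q, item) ((q)->vtable->push((q), (item)))

/* Each kind gets its own implementation; the return value tags which one ran. */
static int serial_push(queue_t *q, int item)     { q->last_item = item; return 1; }
static int concurrent_push(queue_t *q, int item) { q->last_item = item; return 2; }

static const queue_vtable_t serial_vtable     = { "serial", serial_push };
static const queue_vtable_t concurrent_vtable = { "concurrent", concurrent_push };

/* Pick the vtable from the queue kind, as the creation code does with dqai_concurrent. */
queue_t make_queue(int concurrent) {
    queue_t q = { concurrent ? &concurrent_vtable : &serial_vtable, 0 };
    assert(q.vtable != NULL);
    return q;
}
```

The call site `qx_push(&q, 7)` is identical for both kinds of queue; which function actually runs was decided when the queue was created.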

Summary:

The analysis above can be summarized in the following diagram:

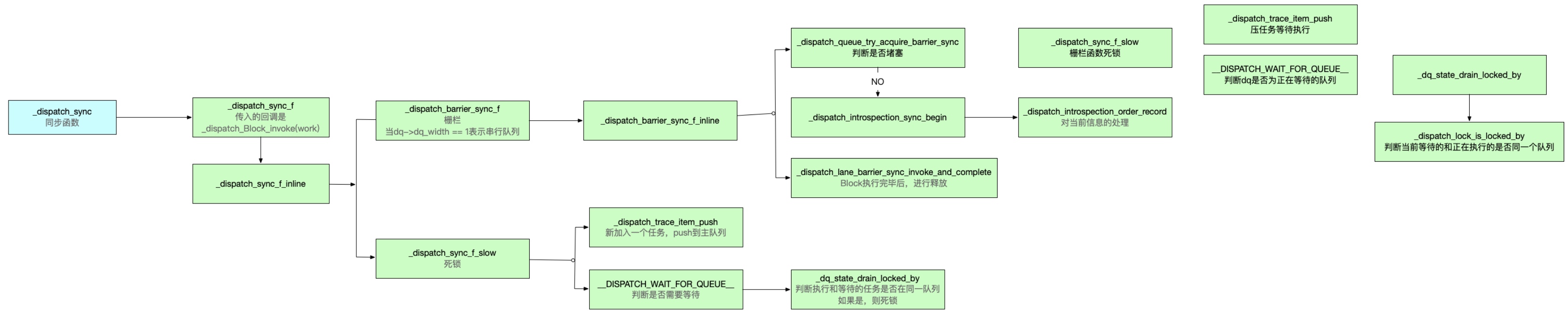

How dispatch_sync is implemented

In queue.c, dispatch_sync is implemented as follows:

void

dispatch_sync(dispatch_queue_t dq, dispatch_block_t work)

{

uintptr_t dc_flags = DC_FLAG_BLOCK;

if (unlikely(_dispatch_block_has_private_data(work))) {

return _dispatch_sync_block_with_privdata(dq, work, dc_flags);

}

_dispatch_sync_f(dq, work, _dispatch_Block_invoke(work), dc_flags);

}

This calls _dispatch_sync_f:

static void

_dispatch_sync_f(dispatch_queue_t dq, void *ctxt, dispatch_function_t func,

uintptr_t dc_flags)

{

_dispatch_sync_f_inline(dq, ctxt, func, dc_flags);

}

That in turn calls _dispatch_sync_f_inline, where a serial queue turns out to be handled by the barrier function:

static inline void

_dispatch_sync_f_inline(dispatch_queue_t dq, void *ctxt,

dispatch_function_t func, uintptr_t dc_flags)

{

if (likely(dq->dq_width == 1)) { // width 1 means a serial queue

return _dispatch_barrier_sync_f(dq, ctxt, func, dc_flags); // barrier function

}

if (unlikely(dx_metatype(dq) != _DISPATCH_LANE_TYPE)) {

DISPATCH_CLIENT_CRASH(0, "Queue type doesn't support dispatch_sync");

}

dispatch_lane_t dl = upcast(dq)._dl;

// Global concurrent queues and queues bound to non-dispatch threads

// always fall into the slow case, see DISPATCH_ROOT_QUEUE_STATE_INIT_VALUE

if (unlikely(!_dispatch_queue_try_reserve_sync_width(dl))) {

return _dispatch_sync_f_slow(dl, ctxt, func, 0, dl, dc_flags); // slow path: wait for the queue (where a deadlock can occur)

}

if (unlikely(dq->do_targetq->do_targetq)) {

return _dispatch_sync_recurse(dl, ctxt, func, dc_flags);

}

_dispatch_introspection_sync_begin(dl); // introspection bookkeeping

_dispatch_sync_invoke_and_complete(dl, ctxt, func DISPATCH_TRACE_ARG(

_dispatch_trace_item_sync_push_pop(dq, ctxt, func, dc_flags))); // invoke the block and complete

}

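In _dispatch_sync_f_slow, the caller blocks and waits for the queue; this is where the main queue in the earlier example ends up suspended: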

static void

_dispatch_sync_f_slow(dispatch_queue_class_t top_dqu, void *ctxt,

dispatch_function_t func, uintptr_t top_dc_flags,

dispatch_queue_class_t dqu, uintptr_t dc_flags)

{

dispatch_queue_t top_dq = top_dqu._dq;

dispatch_queue_t dq = dqu._dq;

if (unlikely(!dq->do_targetq)) {

return _dispatch_sync_function_invoke(dq, ctxt, func);

}

pthread_priority_t pp = _dispatch_get_priority();

struct dispatch_sync_context_s dsc = {

.dc_flags = DC_FLAG_SYNC_WAITER | dc_flags,

.dc_func = _dispatch_async_and_wait_invoke,

.dc_ctxt = &dsc,

.dc_other = top_dq,

.dc_priority = pp | _PTHREAD_PRIORITY_ENFORCE_FLAG,

.dc_voucher = _voucher_get(),

.dsc_func = func,

.dsc_ctxt = ctxt,

.dsc_waiter = _dispatch_tid_self(),

};

_dispatch_trace_item_push(top_dq, &dsc);

__DISPATCH_WAIT_FOR_QUEUE__(&dsc, dq);

if (dsc.dsc_func == NULL) {

dispatch_queue_t stop_dq = dsc.dc_other;

return _dispatch_sync_complete_recurse(top_dq, stop_dq, top_dc_flags);

}

_dispatch_introspection_sync_begin(top_dq);

_dispatch_trace_item_pop(top_dq, &dsc);

_dispatch_sync_invoke_and_complete_recurse(top_dq, ctxt, func,top_dc_flags

DISPATCH_TRACE_ARG(&dsc));

}

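Summary:

dispatch_sync calls _dispatch_sync_f and then _dispatch_sync_f_inline. A serial queue (dq_width == 1) goes through _dispatch_barrier_sync_f; other queues either invoke the block directly or fall into _dispatch_sync_f_slow, where the caller waits for the queue.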
