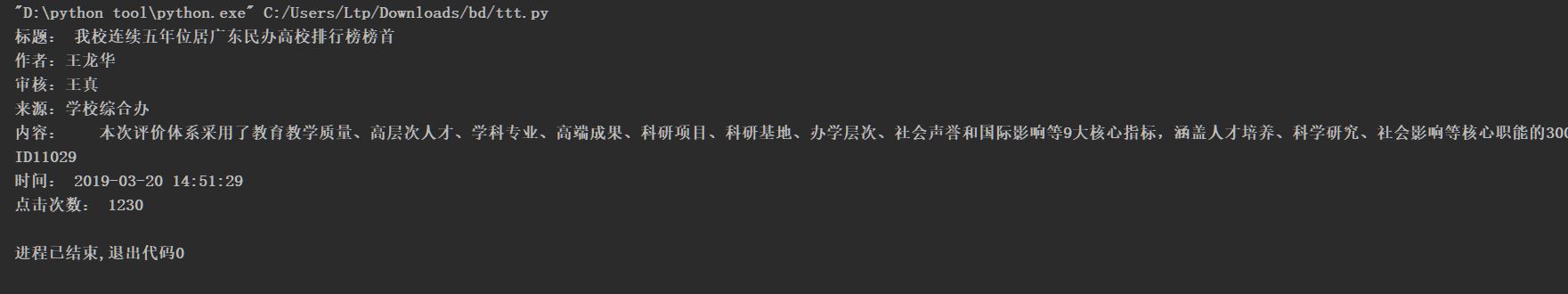

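Getting all the information for one news article

Given the link newsUrl of a news article, get all of that article's information:

Title, author, publishing unit, reviewer, source

Publish time: convert it to a datetime type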
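A minimal sketch of the datetime conversion, assuming the page shows the publish time as text in the form `YYYY-MM-DD HH:MM:SS`. The helper name `parse_publish_time` and the sample value are illustrative and not taken from the site.

```python
from datetime import datetime


def parse_publish_time(text):
    """Parse 'YYYY-MM-DD HH:MM:SS' text into a datetime (the format is an assumption)."""
    return datetime.strptime(text.strip(), '%Y-%m-%d %H:%M:%S')


# Hypothetical sample value, not copied from a real page.
published = parse_publish_time('2019-03-20 10:15:00')
print(published.year, published.month, published.day)
```

Unlike a plain string, the resulting datetime can be compared, sorted, or reformatted with `strftime`.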

Click count:

- newsUrl

- newsId (extracted with a regular expression, re)

- clickUrl (str.format(newsId))

- requests.get(clickUrl)

- newClick (extracted with string processing or a regular expression)

- int()
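The steps above can be sketched as three small helpers. This is only an illustration: the helper names are made up, and the shape of the response body (a number wrapped as `.html('N');`) is an assumption inferred from how the click count is normally cut out of the text. The network call is replaced by a hypothetical sample string so the sketch runs offline.

```python
import re

# URL template for the click-count address, with the news id left as a placeholder.
CLICK_URL = 'http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80'


def extract_news_id(news_url):
    """Return the digits that precede '.html' at the end of the news URL."""
    match = re.search(r'/(\d+)\.html$', news_url)
    if match is None:
        raise ValueError('no news id found in ' + news_url)
    return match.group(1)


def build_click_url(news_id):
    """Fill the news id into the click-count URL template."""
    return CLICK_URL.format(news_id)


def parse_click_count(response_text):
    """Return the last number wrapped as .html('N') in the response, as an int."""
    counts = re.findall(r"\.html\('(\d+)'\)", response_text)
    if not counts:
        raise ValueError('no click count found')
    return int(counts[-1])


news_url = 'http://news.gzcc.cn/html/2019/xiaoyuanxinwen_0320/11029.html'
news_id = extract_news_id(news_url)
click_url = build_click_url(news_id)
# Hypothetical response body; a real one would come from requests.get(click_url).text
sample = "$('#hits').html('123');"
print(news_id, click_url, parse_click_count(sample))
```

Keeping the parsing separate from the HTTP request makes each step easy to check on its own.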

Wrap the whole process in a simple, clear function.

Try crawling a web page that interests you.

import re
from datetime import datetime

import requests
from bs4 import BeautifulSoup

url = 'http://news.gzcc.cn/html/2019/xiaoyuanxinwen_0320/11029.html'


def pross(url):
    """Fetch one news page and print all of its details."""
    res = requests.get(url)
    res.encoding = 'UTF-8'
    soup = BeautifulSoup(res.text, 'html.parser')

    title = soup.select('.show-title')[0].text  # title
    info = soup.select('.show-info')[0].text.split()  # whitespace-separated fields of the info bar
    author = info[2]  # author
    inspector = info[3]  # reviewer
    source = info[4]  # source
    content = soup.select('.show-content p')[0].text  # content (first paragraph only)
    newsid = artsid(url)  # news id
    pubtime = times(soup)  # publish time, as a datetime
    clicktime = artfreq(newsid)  # click count, as an int

    listt = ['Title: ' + title, author, inspector, source, 'Content: ' + content,
             'ID: ' + newsid, 'Time: ' + str(pubtime), 'Clicks: ' + str(clicktime)]
    for item in listt:
        print(item)


def times(soup):
    """Return the publish time as a datetime."""
    msg = soup.select('.show-info')[0].text
    # The first field is "label:date"; accept either an ASCII or a full-width colon.
    msgdate = re.split('[::]', msg.split()[0], maxsplit=1)[1]
    msgtime = msg.split()[1]
    # Assumes the page shows the time as YYYY-MM-DD HH:MM:SS.
    return datetime.strptime(msgdate + ' ' + msgtime, '%Y-%m-%d %H:%M:%S')


def artsid(url):
    """Extract the news id (the digits before '.html') from the URL."""
    return re.search(r'/(\d+)\.html$', url).group(1)


def artfreq(newsid):
    """Fetch the click count for the given news id and return it as an int."""
    clickurl = 'http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80'.format(newsid)
    res = requests.get(clickurl)
    # The count is the text inside the last .html('...'); call in the response.
    click = res.text.split('.html')[-1].lstrip("('").rstrip("');")
    return int(click)


pross(url)
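Indexing the `.show-info` text by position, as the code above does, depends on the fields always appearing in the same order. One possible alternative is to read the fields by label. The sketch below assumes the info bar is a run of `label:value` pairs separated by whitespace; the helper `parse_info`, the pattern `FIELD`, and the sample text (including the names in it) are hypothetical.

```python
import re

# A field looks like "label:value". Labels contain no digits, so the colons
# inside a time such as 10:15:00 are not mistaken for the start of a new field.
# Both the ASCII and the full-width colon are accepted after a label.
FIELD = re.compile(r'([^\W\d]+)[::](.*?)(?=\s+[^\W\d]+[::]|\s*$)')


def parse_info(text):
    """Return a dict mapping each label to its value."""
    normalized = ' '.join(text.split())  # collapse newlines and repeated spaces
    return {m.group(1): m.group(2).strip() for m in FIELD.finditer(normalized)}


# Hypothetical sample text, not copied from a real page.
sample = '发布时间:2019-03-20 10:15:00 作者:张三 审核:李四 来源:学校办公室'
info = parse_info(sample)
print(info['作者'], info['发布时间'])
```

With a dict, a missing field shows up as a missing key instead of silently shifting every later value by one position.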

