Using MCP in LangChain with OpenAI and Tongyi Qianwen

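1. Install the dependencies

```shell
pip install langchain
pip install langchain-core
pip install langgraph
pip install langchain_mcp_tools
pip install websockets
# The script below loads .env through python-dotenv
pip install python-dotenv

# Required for OpenAI models
pip install langchain-openai

# Required for Tongyi Qianwen (DashScope) models
pip install --upgrade --quiet langchain-community dashscope
```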
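To confirm the installs worked before running the agent, a small stdlib-only check like the one below can help. It is a sketch: the list holds the *import* names I assume correspond to the pip packages above (for example `dotenv` for python-dotenv and `langchain_mcp_tools` for langchain_mcp_tools), and `missing_modules` is a hypothetical helper, not part of any library.

```python
import importlib.util

# Import names (not pip names) of the packages used in this article.
# The pip-name -> import-name mapping is an assumption.
REQUIRED_MODULES = [
    "langchain",
    "langchain_core",
    "langgraph",
    "langchain_mcp_tools",
    "websockets",
    "dotenv",
    "langchain_openai",      # only needed for OpenAI models
    "langchain_community",   # only needed for Tongyi Qianwen
    "dashscope",             # only needed for Tongyi Qianwen
]


def missing_modules(names):
    """Return the top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are installed.")
```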

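2. Install the npx and uvx commands

Install Node.js and Python on the local machine. npx (from Node.js/npm) and uvx (from uv) download and launch the MCP server packages locally.

```shell
# npx ships with npm 5.2 and later, so this is only needed on older npm versions
npm install -g npx

# Installs uv, which also provides the uvx command
pip install uv
```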

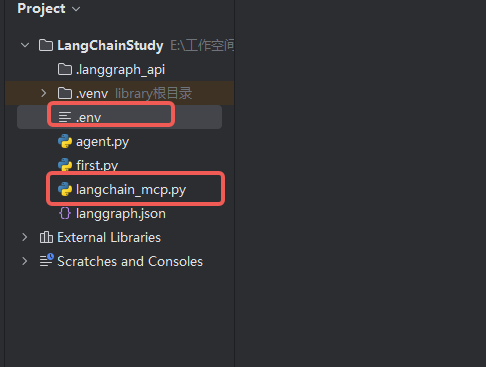
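Because every MCP server in the configuration is started through `npx` or `uvx`, it is worth checking that both are on `PATH` from the same environment PyCharm uses. The following is a minimal sketch using `shutil.which` (which also resolves `npx.cmd` on Windows); `missing_commands` is a hypothetical helper name.

```python
import shutil

# Commands the MCP configuration in this article launches servers with
# (node is included because npx runs on it).
REQUIRED_COMMANDS = ["node", "npx", "uvx"]


def missing_commands(commands):
    """Return the commands that cannot be found on PATH."""
    return [cmd for cmd in commands if shutil.which(cmd) is None]


if __name__ == "__main__":
    missing = missing_commands(REQUIRED_COMMANDS)
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("node, npx and uvx are available.")
```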

3. Directory structure

The project is developed in the PyCharm IDE.

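4. Environment variables

Put the following in a `.env` file in the project; the script loads it with `load_dotenv(find_dotenv())`.

```
OPENAI_API_KEY="your OpenAI API key (I obtained mine through the third-party proxy below)"
OPENAI_BASE_URL="https://api.closeai-proxy.xyz/v1"
DASHSCOPE_API_KEY="your Tongyi Qianwen (DashScope) API key"
```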
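A missing key usually only shows up as an authentication error on the first question. A sketch of an up-front check is below; the provider names `"openai"` and `"tongyi"` and the `missing_env` helper are hypothetical, and `OPENAI_BASE_URL` is treated as optional because it is only needed when going through a proxy. Call it after `load_dotenv(find_dotenv())` so the values from `.env` are visible; run standalone, it only sees the current process environment.

```python
import os

# Hypothetical mapping from model provider to the variables from .env
# that must be non-empty. OPENAI_BASE_URL is optional (proxy only).
REQUIRED_ENV = {
    "openai": ["OPENAI_API_KEY"],
    "tongyi": ["DASHSCOPE_API_KEY"],
}


def missing_env(provider, environ=None):
    """Return required variables for `provider` that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in REQUIRED_ENV[provider] if not environ.get(name)]


if __name__ == "__main__":
    for provider in REQUIRED_ENV:
        missing = missing_env(provider)
        status = "ok" if not missing else "missing " + ", ".join(missing)
        print(f"{provider}: {status}")
```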

5. Python code

```python
import asyncio
import sys

# Third-party dependencies
try:
    from dotenv import load_dotenv, find_dotenv
    from langchain_core.messages import HumanMessage
    from langchain_openai import ChatOpenAI
    from langchain_community.chat_models import ChatTongyi
    from langgraph.prebuilt import create_react_agent
    from langchain_mcp_tools import convert_mcp_to_langchain_tools
except ImportError as e:
    print(f'\nError: Required package not found: {e}')
    print('Please ensure all required packages are installed\n')
    sys.exit(1)


async def run() -> None:
    # Set OPENAI_API_KEY (OpenAI) and/or DASHSCOPE_API_KEY (Tongyi Qianwen) in .env
    _ = load_dotenv(find_dotenv())

    # Initialized up front so the finally block works even if the MCP servers fail to start
    cleanup = None
    try:
        # Tool configurations from MCP server marketplaces. Six servers are configured
        # below; add or remove entries as needed. Each server's JSON config comes from
        # its listing in an MCP marketplace (search online).
        mcp_configs = {
            'filesystem': {
                'command': 'npx',
                'args': [
                    '-y',
                    '@modelcontextprotocol/server-filesystem',
                    '.'  # path to a directory to allow access to
                ]
            },
            'fetch': {
                'command': 'uvx',
                'args': [
                    'mcp-server-fetch'
                ]
            },
            'weather': {
                'command': 'npx',
                'args': [
                    '-y',
                    '@h1deya/mcp-server-weather'
                ]
            },
            "amap-maps": {
                "command": "npx",
                "args": [
                    "-y",
                    "@amap/amap-maps-mcp-server"
                ],
                "env": {
                    "AMAP_MAPS_API_KEY": "your AMap (Gaode Maps) developer API key"
                }
            },
            "tavily-mcp": {
                "command": "npx",
                "args": [
                    "-y",
                    "tavily-mcp"
                ],
                "env": {
                    "TAVILY_API_KEY": "your Tavily search API key"
                },
            },
            "mysql": {
                "type": "stdio",
                "command": "uvx",
                "args": [
                    "--from",
                    "mysql-mcp-server",
                    "mysql_mcp_server"
                ],
                "env": {
                    "MYSQL_HOST": "database host/IP",
                    "MYSQL_PORT": "database port",
                    "MYSQL_USER": "username",
                    "MYSQL_PASSWORD": "password",
                    "MYSQL_DATABASE": "database name"
                }
            },
        }

        tools, cleanup = await convert_mcp_to_langchain_tools(mcp_configs)

        # To use an OpenAI model:
        # llm = ChatOpenAI(model="gpt-4o", temperature=0)

        # To use Tongyi Qianwen:
        llm = ChatTongyi(temperature=0)

        agent = create_react_agent(llm, tools)

        while True:
            # input() blocks, so run it in a worker thread to keep the event loop free
            query = await asyncio.to_thread(
                input, "Enter your question ('退出', 'exit' or 'quit' to finish): "
            )
            if query.strip().lower() in ['退出', 'exit', 'quit']:
                print("Exiting...")
                break
            if not query.strip():
                print("The question cannot be empty, please try again.")
                continue

            print('Question:', query)
            messages = [HumanMessage(content=query)]
            try:
                result = await agent.ainvoke({'messages': messages})
                # the last message should be an AIMessage
                response = result['messages'][-1].content
                print('--- Model output start ---')
                print(response)
                print('--- Model output end ---')
            except Exception as e:
                print(f"Error while processing the request: {e}")
            print('--------------------')  # separator between Q&A rounds
    finally:
        if cleanup:
            await cleanup()
        # Short pause on Windows before the event loop shuts down
        if sys.platform == "win32":
            await asyncio.sleep(0.25)
        print('------- Cleanup done, exiting -------')


def main() -> None:
    asyncio.run(run())


if __name__ == '__main__':
    main()
```
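The configuration above hardcodes API keys and database credentials as placeholder strings. One option is to read them from the same `.env`/process environment instead, so secrets stay out of the source file and a missing one fails at startup. The sketch below assumes that approach; `env_from_os` and `amap_config` are hypothetical helpers, and `amap_config` simply rebuilds the `amap-maps` entry shown above.

```python
import os


def env_from_os(names, environ=None):
    """Build an MCP server "env" dict by reading each name from the environment.

    Raises KeyError for the first variable that is unset, so a missing key
    fails at startup instead of reaching the server as a placeholder.
    """
    environ = os.environ if environ is None else environ
    result = {}
    for name in names:
        if name not in environ:
            raise KeyError(f"environment variable {name} is not set")
        result[name] = environ[name]
    return result


def amap_config(environ=None):
    """The amap-maps entry from the configuration, with its key taken from the environment."""
    return {
        "command": "npx",
        "args": ["-y", "@amap/amap-maps-mcp-server"],
        "env": env_from_os(["AMAP_MAPS_API_KEY"], environ),
    }
```

With `AMAP_MAPS_API_KEY` added to `.env`, `mcp_configs["amap-maps"] = amap_config()` after `load_dotenv(find_dotenv())` would replace the hardcoded entry; the same pattern applies to the Tavily and MySQL variables.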

浙公网安备 33010602011771号
