【大数据Spark_SparkCore系列_4】Spark的JdbcRDD的使用

一、什么是JdbcRDD

下面一段话来源于package org.apache.spark.rdd.JdbcRDD的源码:

简单来说就是可以在一个connection上执行SQL并且获取返回值的RDD。

// TODO: Expose a jdbcRDD function in SparkContext and mark this as semi-private /** * An RDD that executes a SQL query on a JDBC connection and reads results. * For usage example, see test case JdbcRDDSuite. * * @param getConnection a function that returns an open Connection. * The RDD takes care of closing the connection. * @param sql the text of the query. * The query must contain two ? placeholders for parameters used to partition the results. * For example, * {{{ * select title, author from books where ? <= id and id <= ? * }}} * @param lowerBound the minimum value of the first placeholder * @param upperBound the maximum value of the second placeholder * The lower and upper bounds are inclusive. * @param numPartitions the number of partitions. * Given a lowerBound of 1, an upperBound of 20, and a numPartitions of 2, * the query would be executed twice, once with (1, 10) and once with (11, 20) * @param mapRow a function from a ResultSet to a single row of the desired result type(s). * This should only call getInt, getString, etc; the RDD takes care of calling next. * The default maps a ResultSet to an array of Object. */ class JdbcRDD[T: ClassTag]( sc: SparkContext, getConnection: () => Connection, sql: String, lowerBound: Long, upperBound: Long, numPartitions: Int, mapRow: (ResultSet) => T = JdbcRDD.resultSetToObjectArray _) extends RDD[T](sc, Nil) with Logging

二、JdbcRDD的使用

使用JdbcRDD执行SQL查询MySQL数据库并且打印结果:

package lesson0613

import java.sql.{Connection, DriverManager}

import org.apache.spark.rdd.JdbcRDD

import org.apache.spark.{SparkConf, SparkContext}

object MyJdbcRDDDemo {

val connection = () => {

//Class.forName("oracle.jdbc.OracleDriver").newInstance()

Class.forName("com.mysql.jdbc.Driver").newInstance()

DriverManager.getConnection("jdbc:mysql://10.30.3.104:3306/Interfaces", "root", "2561304") //插入mysql数据库

}

def main(args: Array[String]): Unit = {

//创建spacrkconf对象

val conf = new SparkConf().setAppName("My WebCount Demo").setMaster("local")

//创建sparkcontext

val sc = new SparkContext(conf)

//结果:10号部门,工资大于2000的员工姓名和薪水

val oracleRDD = new JdbcRDD(sc, connection, "select * from t_result where sucess between 5 and ? and fail>?", 10, 1, 1, r => {

val col1 = r.getString(1)//保存第1列

val col2 = r.getInt(2)//保存第2列

val col3 = r.getInt(3)//保存第3列

val col4 = r.getInt(4)//保存第4列

(col1, col2, col3, col4)//输出格式1-4列的数组

})

val result = oracleRDD.collect()

println(result.toBuffer)

sc.stop()

}

}

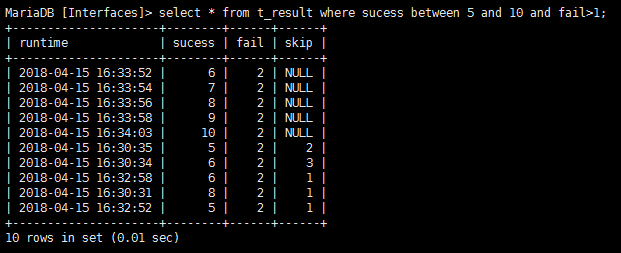

执行的结果如下(忽略具体的数据,因为表的内容是无相关的数据):

原始数据库查询:

代码输出:

ArrayBuffer((2018-04-15 16:33:52.0,6,2,0), (2018-04-15 16:33:54.0,7,2,0), (2018-04-15 16:33:56.0,8,2,0), (2018-04-15 16:33:58.0,9,2,0), (2018-04-15 16:34:03.0,10,2,0), (2018-04-15 16:30:35.0,5,2,2)。。。。

三、JdbcRDD参数说明和分析

由JdbcRDD的源码(段落一)可以看出JdbcRDD的参数很多,详细解释如下:

JdbcRDD参数说明:

|

参数名称 |

类型 |

说明 |

|

sc |

org.apache.spark.SparkContext |

Spark Context对象 |

|

getConnection |

scala.Function0[java.sql.Connection] |

得到一个数据库Connection |

|

sql |

scala.Predef.String |

执行的SQL语句 |

|

lowerBound |

scala.Long |

下边界值,即:SQL的第一个参数 |

|

upperBound |

scala.Long |

上边界值,即:SQL的第二个参数 |

|

numPartitions |

scala.Int |

分区的个数,即:启动多少个Executor |

|

mapRow |

scala.Function1[java.sql.ResultSet, T] |

得到的结果集 |

JdbcRDD的缺点:从上面的参数说明可以看出,JdbcRDD有以下两个缺点:

1.执行的SQL必须有两个参数,并类型都是Long

2.得到的结果是ResultSet,即:只支持select操作

浙公网安备 33010602011771号

浙公网安备 33010602011771号