Introduction to Convolutional Neural Networks

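Basic Theory of Multi-Layer Convolutional Networks

A convolutional neural network (CNN) is a feed-forward neural network whose artificial neurons respond only to the units inside a local receptive field, which makes it perform very well on large-scale image processing. It is built from alternating convolutional layers and pooling layers.

For the basics of multi-layer convolutional networks, see the blog post below.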

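Characteristics of Convolutional Neural Networks

Drawbacks of Fully Connected Networks

- Too many weight parameters:

In a fully connected layer, a layer of $m$ neurons followed by a layer of $n$ neurons needs $m \times n$ weights. For a $1000 \times 1000$ pixel image there are $10^6$ input neurons; if the next layer is the same size, the two layers are joined by $w = 10^{12}$ weights. A network with that many parameters is practically impossible to train.

- Vanishing gradients:

Once a fully connected network has too many layers, it runs into the vanishing gradient problem.

- Spatial correlation is destroyed:

In image-like data, a pixel is strongly correlated with its neighbors above, below, left, and right. A fully connected layer flattens the data first, so it tends to ignore this spatial correlation, or to force a connection between two pixels that have nothing to do with each other.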
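To make the first and third drawbacks concrete, here is a small pure-Python sketch. The helper names `dense_weight_count` and `flat_index` are made up for illustration, and row-major flattening is an assumption about how the image is unrolled.

```python
def dense_weight_count(m, n):
    """Number of weights between a layer of m neurons and a layer of n neurons."""
    return m * n

def flat_index(row, col, width):
    """Position of pixel (row, col) after row-major flattening (assumed layout)."""
    return row * width + col

side = 1000
pixels = side * side

# Fully connected: every input pixel connects to every neuron of the next layer
weights = dense_weight_count(pixels, pixels)

# Two vertically adjacent pixels end up `side` positions apart once flattened
gap = flat_index(1, 0, side) - flat_index(0, 0, side)

print(weights, gap)
```

The first number is the $10^{12}$ from the text; the second shows that pixels that touch in the image are far apart in the flattened vector.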

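Advantages of CNNs

To address the drawbacks above, CNNs make the following improvements:

- Local receptive fields:

Full connectivity uses too many parameters and also destroys spatial correlation, so each neuron is connected only to a local region instead. If every neuron is connected to a $10 \times 10$ patch of pixels, the count becomes $w = 100 \times 10^6 = 10^8$, four orders of magnitude fewer than the fully connected case. This is where convolution comes from.

- Weight sharing:

Local connectivity cuts the parameter count by several orders of magnitude, but it is still large. If we further assume that the weights $w$ of every $10 \times 10$ patch are identical, so that all $10^6$ neurons share the same 100 weights, the final number of weight parameters drops to just 100!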

- 多核卷积

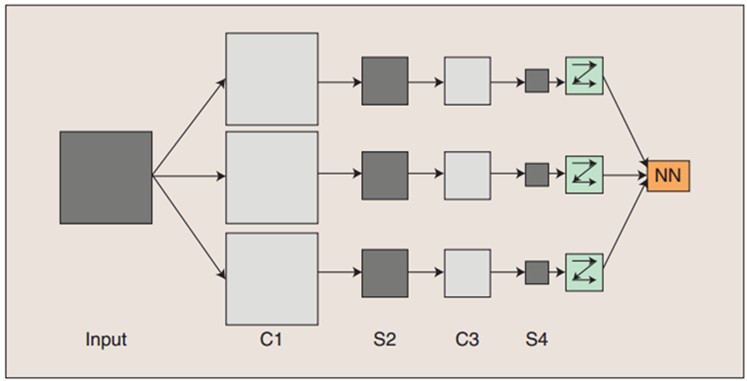

虽然说权值共享大幅度的减少了参数,对于计算是好事,但是对于模型的学习来说,得到的结果就不一定好,毕竟100个参数能学到的东西也是很有限的。自然而然的也就想到了多用“几套权值”来学习输入的特征,这样既可以保证参数在可以控制的范围内,也可以从不懂的角度来理解输入数据。 每一套$w$学习到的神经元结果组成了一个特征图,从而实现特征的提取。这不同“套”的权值又叫做卷积核(Filter),而通过卷积核提取的结果又叫特征图(featuremap)。不同的卷积核能学习到不同的特征,以图片为例,有些能学习到轮廓,有些能学习到颜色,有些能学习到边角等等。如下:

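- Downsampling:

Each feature map still produces a lot of output, and it may also contain noise. Downsampling reduces the amount of output while keeping the important information.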
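The parameter counts quoted in this list can be reproduced with a few lines of Python. This is only a sketch: the function names are illustrative, and the 32 kernels in the last line are a hypothetical choice, not a number taken from the discussion above.

```python
PATCH = 10 * 10       # weights seen by one neuron with a 10x10 receptive field
NEURONS = 10 ** 6     # one neuron per pixel of a 1000x1000 image

def locally_connected_weights(neurons=NEURONS, patch=PATCH):
    """Local receptive fields only: every neuron owns its own patch of weights."""
    return neurons * patch

def shared_weights(patch=PATCH, num_kernels=1):
    """Weight sharing: all neurons of a feature map reuse the same kernel."""
    return patch * num_kernels

print(locally_connected_weights())       # local receptive fields
print(shared_weights())                  # one shared kernel
print(shared_weights(num_kernels=32))    # hypothetical: 32 kernels
```

Even with several kernels, the count stays many orders of magnitude below the locally connected case, which is why adding kernels is cheap.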

直观的认识卷积网络

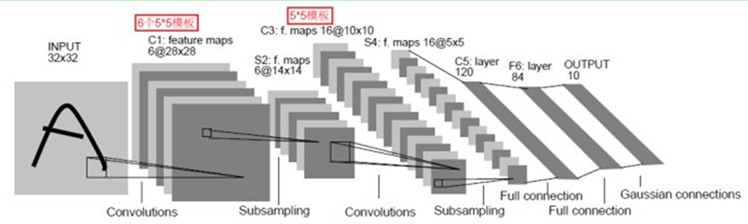

以LeNet-5 为例子来直观的理解CNN的工作方式

值得注意的一点是其中sampling 和 pooling会在不同的CNN资料中出现,这都是采样的叫法。

卷积网络的符号表示为:

进一步理解如何卷积

理论上认识卷积网络

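Convolution Formulas

Convolution in Mathematics

Continuous case

1D convolution: $w(x) * f(x) = \int_{-\infty}^{\infty} w(u) f(x-u)\,{\rm d}u$

2D convolution: $w(x, y) * f(x, y) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} w(u, v) f(x-u, y-v)\,{\rm d}u\,{\rm d}v$

Discrete case

$w(x, y) * f(x, y) = \sum_{u}\sum_{v} w(u, v) f(x-u, y-v)$

When the kernel is symmetric about the origin, with $u$ ranging over $[-a, a]$ and $v$ over $[-b, b]$:

$w(x, y) * f(x, y) = \sum_{u=-a}^{a}\sum_{v=-b}^{b} w(u, v) f(x-u, y-v)$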
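The discrete sum is easiest to see in one dimension. Below is a minimal pure-Python sketch of the 1D discrete analogue of the formulas above, assuming both sequences are finite and zero outside their index range. The name `conv1d` is just for illustration.

```python
def conv1d(w, f):
    """Full discrete convolution: out[x] = sum over u of w[u] * f[x - u]."""
    out = [0.0] * (len(w) + len(f) - 1)
    for x in range(len(out)):
        for u in range(len(w)):
            # f is treated as zero outside its valid index range
            if 0 <= x - u < len(f):
                out[x] += w[u] * f[x - u]
    return out

result = conv1d([1, 2, 3], [0, 1, 0.5])
print(result)  # [0.0, 1.0, 2.5, 4.0, 1.5]
```

Swapping the two arguments gives the same result, since convolution is commutative.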

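Convolution in ML:

2D convolution formula

$a_{i,j} = f\left(\sum_m \sum_n w_{m,n}\, x_{i-m, j-n} + w_b\right)$

3D (multi-channel) convolution formula

$a_{i,j} = f\left(\sum_d \sum_m \sum_n w_{d,m,n}\, x_{d, i-m, j-n} + w_b\right)$

Here $f$ is the activation function, $w_b$ is the bias, and $d$ indexes the input channels.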
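As a rough illustration of the layer formula, the sketch below computes one feature map from a multi-channel input in pure Python. It makes two assumptions that are not in the text: the kernel slides over the input without being flipped (indexing $x_{d,i+m,j+n}$, the cross-correlation convention that libraries such as TensorFlow use), and no padding is applied. `conv_layer` and `relu` are illustrative names.

```python
def relu(z):
    return max(0.0, z)

def conv_layer(x, w, bias, activation=relu):
    """x: [depth][height][width], w: [depth][kh][kw]; returns one feature map.

    Assumption: the kernel is slid without flipping and without padding.
    """
    depth, height, width = len(x), len(x[0]), len(x[0][0])
    kh, kw = len(w[0]), len(w[0][0])
    out = []
    for i in range(height - kh + 1):
        row = []
        for j in range(width - kw + 1):
            total = bias
            for d in range(depth):          # sum over channels
                for m in range(kh):         # sum over kernel rows
                    for n in range(kw):     # sum over kernel columns
                        total += w[d][m][n] * x[d][i + m][j + n]
            row.append(activation(total))
        out.append(row)
    return out

x = [[[1, 2, 3],
      [4, 5, 6],
      [7, 8, 9]]]        # one channel, 3x3
w = [[[-1, 0],
      [0, 1]]]           # one channel, 2x2
feature_map = conv_layer(x, w, bias=0.5)
print(feature_map)  # [[4.5, 4.5], [4.5, 4.5]]
```

With a strongly negative bias the weighted sum drops below zero and ReLU clips every output to 0.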

Feature map size

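From the animated 2D example earlier, a natural question is: after convolution, how large is the feature map, and how is it computed? The answer depends on the filter size and on the stride with which the window moves across the image. In the figure above: image size: $5 \times 5$, filter size: $3 \times 3$, stride (step): 1.

The resulting size is: $((5-3)/1 + 1) \times ((5-3)/1 + 1) = 3 \times 3$

Sometimes the input size and the filter's stride do not fit together evenly. The fix is to pad the input with $n$ rings of zeros on all sides so that the filter can slide correctly; this is written $padding=n$.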

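Finally, the general formula for the feature map size is:

Width: $W_2 = (W_1 - F + 2P)/S + 1$

Height: $H_2 = (H_1 - F + 2P)/S + 1$

where $W_1$ and $H_1$ are the input width and height, $F$ is the filter size, $S$ is the stride, and $P$ is the padding.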
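The size formula is easy to check in code. The helper below is a minimal sketch. The name `feature_map_size` is made up, and rounding down when the stride does not divide evenly is an assumption, since the formula itself does not cover that case.

```python
def feature_map_size(in_size, filter_size, stride=1, padding=0):
    """Output size along one dimension: (W1 - F + 2P) / S + 1."""
    span = in_size - filter_size + 2 * padding
    if span < 0:
        raise ValueError("filter is larger than the padded input")
    # Assumption: round down when the stride does not divide the span evenly
    return span // stride + 1

print(feature_map_size(5, 3, stride=1))              # the 5x5 / 3x3 example
print(feature_map_size(28, 5, stride=1, padding=2))  # padding keeps the size
```

The second call shows how two rings of zero padding let a 5x5 filter keep a 28-pixel side unchanged.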

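Defining the Convolution Formula

$C_{s,t} = \sum_{m=0}^{m_a - 1}\sum_{n=0}^{n_a - 1} W_{m,n} X_{s-m, t-n}, \quad \text{s.t. } 0 \leq s < m_a + m_b - 1 \text{ and } 0 \leq t < n_a + n_b - 1$

where $m_a$ and $n_a$ are the numbers of rows and columns of $W$,

and $m_b$ and $n_b$ are the numbers of rows and columns of $X$.

In matrix form: $C = W * X$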

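Note that some references talk about "rotating by 180 degrees". This is because the indices $s-m, t-n$ run from large to small during the multiplication; in matrix terms, this is the same as rotating $W$ by 180 degrees and then computing the cross-correlation with $X$.

A brief explanation: the convolution $C_{st} = AB$ and the cross-correlation $D = BA$ can be converted into each other.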
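The 180-degree remark can be checked numerically. The sketch below implements the definition of $C_{s,t}$ directly, then compares it with a cross-correlation that uses the rotated kernel. It is pure Python, treats $X$ as zero outside its bounds, and all function names are illustrative.

```python
def conv2d_full(W, X):
    """C[s][t] = sum_m sum_n W[m][n] * X[s-m][t-n], with X zero outside its bounds."""
    ma, na = len(W), len(W[0])
    mb, nb = len(X), len(X[0])
    C = [[0] * (na + nb - 1) for _ in range(ma + mb - 1)]
    for s in range(ma + mb - 1):
        for t in range(na + nb - 1):
            for m in range(ma):
                for n in range(na):
                    if 0 <= s - m < mb and 0 <= t - n < nb:
                        C[s][t] += W[m][n] * X[s - m][t - n]
    return C

def rotate180(W):
    """Reverse the row order and each row, i.e. rotate the kernel by 180 degrees."""
    return [row[::-1] for row in W[::-1]]

def cross_correlate_full(K, X):
    """Slide K over zero-padded X without flipping it."""
    ka, kb = len(K), len(K[0])
    mb, nb = len(X), len(X[0])
    D = [[0] * (kb + nb - 1) for _ in range(ka + mb - 1)]
    for s in range(ka + mb - 1):
        for t in range(kb + nb - 1):
            for m in range(ka):
                for n in range(kb):
                    r, c = s + m - (ka - 1), t + n - (kb - 1)
                    if 0 <= r < mb and 0 <= c < nb:
                        D[s][t] += K[m][n] * X[r][c]
    return D

W = [[1, 2], [3, 4]]
X = [[1, 0], [0, 1]]
C = conv2d_full(W, X)
print(C)  # [[1, 2, 0], [3, 5, 2], [0, 3, 4]]
print(C == cross_correlate_full(rotate180(W), X))  # True
```

The output has $m_a + m_b - 1$ rows and $n_a + n_b - 1$ columns, matching the index range in the definition.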

Pooling and Fully Connected Layers

See the figure above.
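For readers who want something more concrete than the figure, here is a minimal pure-Python sketch of 2x2 max pooling with stride 2. It assumes no padding, and the name `max_pool` and the sample values are made up for illustration.

```python
def max_pool(feature_map, size=2, stride=2):
    """Keep the largest value of each size x size window (no padding)."""
    height, width = len(feature_map), len(feature_map[0])
    pooled = []
    for i in range(0, height - size + 1, stride):
        row = []
        for j in range(0, width - size + 1, stride):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled

fm = [[1, 3, 2, 4],
      [5, 6, 1, 2],
      [7, 2, 9, 0],
      [1, 8, 3, 4]]
pooled = max_pool(fm)
print(pooled)  # [[6, 4], [8, 9]]
```

A 4x4 map shrinks to 2x2, and each output keeps only the strongest response of its window.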

Backpropagation

Omitted here. I can cover it later if there is interest.

ReLU and sigmoid

Two activation functions:
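For reference, the standard definitions are $\mathrm{ReLU}(x) = \max(0, x)$ and $\mathrm{sigmoid}(x) = 1 / (1 + e^{-x})$. A minimal pure-Python sketch follows. The two-branch form of `sigmoid` is a choice made here to avoid overflow for very negative inputs.

```python
import math

def relu(x):
    """Pass positive values through, clip negatives to zero."""
    return max(0.0, x)

def sigmoid(x):
    """Squash x into (0, 1); the two branches avoid overflow in exp()."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

print(relu(-2.0), relu(3.0))
print(sigmoid(0.0))
```

ReLU has a gradient of exactly 0 for negative inputs, which is why the bias initialization matters later in the code.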

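Code Implementation

With some understanding of the theory in place, we can now move on to programming. We use the deep learning library TensorFlow (the code below targets the TensorFlow 1.x API), but you could also use other libraries such as Caffe, Theano, Deeplearning4j, Torch, Keras...

First, import the relevant TensorFlow packages.

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

Building a Multi-Layer Convolutional Network

The handwritten digit recognizer in the previous note reached only about 91% accuracy, which is not very high. Here we use a convolutional neural network to improve on that, and the accuracy will be considerably higher.

To create this model we need a large number of weights and biases. The weights should be initialized with a small amount of noise to break symmetry and avoid zero gradients. Because we use ReLU neurons, it is also good practice to initialize the biases with a small positive value, to avoid neurons whose output is stuck at 0 ("dead neurons").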

Next, load the MNIST data. It has already been preprocessed and is stored as NumPy arrays, so it can be used directly.

mnist = input_data.read_data_sets('./data/MNIST_data/', one_hot=True)

Extracting ./data/MNIST_data/train-images-idx3-ubyte.gz

Extracting ./data/MNIST_data/train-labels-idx1-ubyte.gz

Extracting ./data/MNIST_data/t10k-images-idx3-ubyte.gz

Extracting ./data/MNIST_data/t10k-labels-idx1-ubyte.gz

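Because TensorFlow works by building a computation graph, the structure of the computation has to be defined before TensorFlow can run it.

So we first define the inputs using placeholders: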

x = tf.placeholder(tf.float32, shape=[None, 784])

y_ = tf.placeholder(tf.float32, shape=[None, 10])

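A craftsman must first sharpen his tools. Before starting on the convolutional network, we define two helper functions for initializing the weights and biases.

def weight_variable(shape):
    # Small truncated-normal noise breaks the symmetry between neurons
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)

def bias_variable(shape):
    # A slightly positive bias keeps ReLU units active at the start
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)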
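To see what a truncated normal initializer does, here is a pure-Python sketch. It imitates the documented behavior of `tf.truncated_normal`, where values more than two standard deviations from the mean are dropped and re-drawn. It is not TensorFlow's implementation, and the function name and the `seed` parameter are assumptions made for this example.

```python
import random

def truncated_normal(count, stddev=0.1, mean=0.0, seed=None):
    """Draw `count` Gaussian samples, re-drawing any beyond 2 standard deviations."""
    rng = random.Random(seed)
    samples = []
    while len(samples) < count:
        value = rng.gauss(mean, stddev)
        if abs(value - mean) <= 2 * stddev:
            samples.append(value)
    return samples

weights = truncated_normal(1000, stddev=0.1, seed=0)
print(min(weights), max(weights))
```

With `stddev=0.1`, every initial weight lies in [-0.2, 0.2], so no neuron starts with an extreme weight.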

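Convolution and Pooling

TensorFlow gives us a lot of flexibility in convolution and pooling operations. How do we handle the boundaries? What stride should we use? In this example we always choose the vanilla version. Our convolutions use a stride of 1 and are zero-padded (padding='SAME') so that the output is the same size as the input. Our pooling is plain max pooling over 2x2 blocks. To keep the code cleaner, we wrap these operations in functions.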

def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
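With `padding='SAME'`, TensorFlow sizes the output as ceil(input / stride), independent of the filter size. The sketch below traces a 28-pixel side through two conv + pool stages under that rule. `same_output_size` is an illustrative helper, not a TensorFlow function.

```python
def same_output_size(in_size, stride):
    """Output size under SAME padding: ceil(in_size / stride), in integer arithmetic."""
    return -(-in_size // stride)

size = 28
trace = [size]
for _ in range(2):
    size = same_output_size(size, 1)  # convolution, stride 1: size unchanged
    size = same_output_size(size, 2)  # 2x2 max pooling, stride 2: size halved
    trace.append(size)

print(trace)  # [28, 14, 7]
```

This is where the 7x7 spatial size used by the fully connected layer comes from.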

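First Convolutional Layer

With the convolution helpers defined, we can implement the first layer of the CNN. It consists of a convolution followed by max pooling. The convolution computes 32 features for each 5x5 patch, so its weight tensor has shape [5, 5, 1, 32]: the first two dimensions are the patch size, the next is the number of input channels, and the last is the number of output channels. There is also a bias for each output channel.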

W_conv1 = weight_variable([5, 5, 1, 32])

b_conv1 = bias_variable([32])

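# To apply the layer, reshape x into a 4-D tensor: the 2nd and 3rd dimensions are the image width and height,
# and the last dimension is the number of color channels (1 here for grayscale; it would be 3 for an RGB image).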

x_image = tf.reshape(x, [-1, 28, 28, 1])

h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)

h_pool1 = max_pool_2x2(h_conv1)

Second Convolutional Layer

To build a deeper network, we stack several layers of this type. The second layer computes 64 features for each 5x5 patch.

W_conv2 = weight_variable([5, 5, 32, 64])

b_conv2 = bias_variable([64])

h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)

h_pool2 = max_pool_2x2(h_conv2)

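Densely Connected Layer

Now that the image size has been reduced to 7x7, we add a fully connected layer with 1024 neurons to process the entire image. We reshape the tensor from the pooling layer into a batch of vectors, multiply by a weight matrix, add a bias, and apply ReLU.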

W_fc1 = weight_variable([7*7*64, 1024])

b_fc1 = bias_variable([1024])

h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])

h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)

Dropout

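To reduce overfitting, we apply dropout before the output layer. We create a placeholder for the probability that a neuron's output is kept during dropout. This lets us turn dropout on during training and off during testing. Besides masking neuron outputs, TensorFlow's tf.nn.dropout op automatically handles scaling the outputs, so dropout works without any additional scaling.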

keep_prob = tf.placeholder(tf.float32)

h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob=keep_prob)
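The automatic scaling mentioned above is often called inverted dropout: each kept output is divided by `keep_prob`, so the expected value stays the same. A minimal pure-Python sketch of the idea follows. It is not TensorFlow's implementation, and the `rng` parameter is an assumption added to make the example repeatable.

```python
import random

def dropout(values, keep_prob, rng=random):
    """Keep each value with probability keep_prob and scale survivors by 1/keep_prob."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]

activations = [1.0, 2.0, 3.0, 4.0]
train_out = dropout(activations, keep_prob=0.5, rng=random.Random(0))
test_out = dropout(activations, keep_prob=1.0)

print(train_out)  # each entry is either 0.0 or twice the input
print(test_out)   # unchanged: dropout is effectively off
```

Feeding `keep_prob: 1.0` at evaluation time, as the training code does, therefore switches dropout off without changing the graph.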

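Output Layer

Finally, we add a softmax layer. The code below computes the logits; the softmax itself is applied inside the loss function during training.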

W_fc2 = weight_variable([1024, 10])

b_fc2 = bias_variable([10])

y_conv = tf.matmul(h_fc1_drop, W_fc2) + b_fc2
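It can be instructive to count how many trainable parameters this network has. The sketch below multiplies out the variable shapes used in the code above. The dictionary and the `num_params` helper exist only for this illustration.

```python
from functools import reduce
from operator import mul

# Shapes copied from the weight_variable / bias_variable calls above
shapes = {
    "W_conv1": [5, 5, 1, 32],      "b_conv1": [32],
    "W_conv2": [5, 5, 32, 64],     "b_conv2": [64],
    "W_fc1":   [7 * 7 * 64, 1024], "b_fc1":   [1024],
    "W_fc2":   [1024, 10],         "b_fc2":   [10],
}

def num_params(shape):
    """Number of scalars in a tensor of the given shape."""
    return reduce(mul, shape, 1)

counts = {name: num_params(shape) for name, shape in shapes.items()}
total = sum(counts.values())

for name, count in counts.items():
    print(name, count)
print("total", total)  # total 3274634
```

Nearly all of the parameters sit in the first fully connected layer, while the two convolutional layers together account for only about 52 thousand, which is the weight-sharing argument from the theory section in action.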

Training the Model

Next we define the loss function and train the model.

cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y_, logits=y_conv))

train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)

correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y_, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
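To make the loss and accuracy definitions less opaque, here is a pure-Python sketch of what they compute for one-hot labels: a softmax over the logits, the cross-entropy against the label, and the fraction of examples whose argmax matches. It mirrors the math rather than TensorFlow's implementation, and all names and sample values are illustrative.

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(labels, logits):
    """-sum(y * log(softmax(logits))) for a single example."""
    probs = softmax(logits)
    return -sum(y * math.log(p) for y, p in zip(labels, probs) if y > 0)

def argmax(values):
    return max(range(len(values)), key=values.__getitem__)

def batch_accuracy(batch_labels, batch_logits):
    """Fraction of examples whose predicted class matches the label."""
    hits = sum(argmax(l) == argmax(z) for l, z in zip(batch_labels, batch_logits))
    return hits / len(batch_labels)

loss = cross_entropy([1, 0], [0.0, 0.0])
acc = batch_accuracy([[1, 0], [0, 1]], [[2.0, 1.0], [3.0, 0.5]])
print(loss, acc)
```

With two equal logits the loss is $\ln 2$, the value expected from a classifier that guesses uniformly between two classes.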

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for i in range(20000):
        batch = mnist.train.next_batch(50)
        if i % 100 == 0:
            # Report accuracy on the current batch with dropout disabled
            train_accuracy = accuracy.eval(feed_dict={x: batch[0], y_: batch[1], keep_prob: 1.0})
            print('step %d, training accuracy %g' % (i, train_accuracy))
        # One optimization step with 50% dropout
        train_step.run(feed_dict={x: batch[0], y_: batch[1], keep_prob: 0.5})

    # Evaluate on the full test set after training
    print('test accuracy %g' % accuracy.eval(feed_dict={
        x: mnist.test.images, y_: mnist.test.labels, keep_prob: 1.0}))

step 0, training accuracy 0.12

step 100, training accuracy 0.8

step 200, training accuracy 0.94

step 300, training accuracy 0.86

step 400, training accuracy 0.98

step 500, training accuracy 0.92

step 600, training accuracy 0.92

step 700, training accuracy 0.9

step 800, training accuracy 0.94

step 900, training accuracy 0.9

step 1000, training accuracy 0.96

step 1100, training accuracy 0.92

step 1200, training accuracy 0.94

step 1300, training accuracy 0.94

step 1400, training accuracy 0.94

step 1500, training accuracy 1

step 1600, training accuracy 0.94

step 1700, training accuracy 0.98

step 1800, training accuracy 0.98

step 1900, training accuracy 1

step 2000, training accuracy 0.88

step 2100, training accuracy 0.96

step 2200, training accuracy 0.98

step 2300, training accuracy 1

step 2400, training accuracy 0.96

step 2500, training accuracy 0.96

step 2600, training accuracy 1

step 2700, training accuracy 0.98

step 2800, training accuracy 1

step 2900, training accuracy 1

step 3000, training accuracy 0.98

step 3100, training accuracy 0.98

step 3200, training accuracy 1

step 3300, training accuracy 1

step 3400, training accuracy 1

step 3500, training accuracy 1

step 3600, training accuracy 1

step 3700, training accuracy 1

step 3800, training accuracy 0.98

step 3900, training accuracy 0.98

step 4000, training accuracy 0.98

step 4100, training accuracy 1

step 4200, training accuracy 0.98

step 4300, training accuracy 1

step 4400, training accuracy 1

step 4500, training accuracy 0.98

step 4600, training accuracy 1

step 4700, training accuracy 0.98

step 4800, training accuracy 0.98

step 4900, training accuracy 0.94

step 5000, training accuracy 0.96

step 5100, training accuracy 1

step 5200, training accuracy 1

step 5300, training accuracy 1

step 5400, training accuracy 1

step 5500, training accuracy 1

step 5600, training accuracy 1

step 5700, training accuracy 0.98

step 5800, training accuracy 1

step 5900, training accuracy 1

step 6000, training accuracy 0.98

step 6100, training accuracy 1

step 6200, training accuracy 0.98

step 6300, training accuracy 1

step 6400, training accuracy 1

step 6500, training accuracy 1

step 6600, training accuracy 0.98

step 6700, training accuracy 1

step 6800, training accuracy 0.98

step 6900, training accuracy 1

step 7000, training accuracy 1

step 7100, training accuracy 1

step 7200, training accuracy 1

step 7300, training accuracy 1

step 7400, training accuracy 1

step 7500, training accuracy 1

step 7600, training accuracy 1

step 7700, training accuracy 1

step 7800, training accuracy 1

step 7900, training accuracy 1

step 8000, training accuracy 1

step 8100, training accuracy 1

step 8200, training accuracy 1

step 8300, training accuracy 1

step 8400, training accuracy 1

step 8500, training accuracy 1

step 8600, training accuracy 1

step 8700, training accuracy 1

step 8800, training accuracy 1

step 8900, training accuracy 0.96

step 9000, training accuracy 1

step 9100, training accuracy 1

step 9200, training accuracy 0.98

step 9300, training accuracy 1

step 9400, training accuracy 0.98

step 9500, training accuracy 1

step 9600, training accuracy 1

step 9700, training accuracy 0.98

step 9800, training accuracy 0.98

step 9900, training accuracy 1

step 10000, training accuracy 1

step 10100, training accuracy 1

step 10200, training accuracy 1

step 10300, training accuracy 1

step 10400, training accuracy 1

step 10500, training accuracy 1

step 10600, training accuracy 1

step 10700, training accuracy 1

step 10800, training accuracy 0.98

step 10900, training accuracy 1

step 11000, training accuracy 1

step 11100, training accuracy 1

step 11200, training accuracy 1

step 11300, training accuracy 1

step 11400, training accuracy 1

step 11500, training accuracy 0.98

step 11600, training accuracy 1

step 11700, training accuracy 1

step 11800, training accuracy 1

step 11900, training accuracy 1

step 12000, training accuracy 0.98

step 12100, training accuracy 0.98

step 12200, training accuracy 1

step 12300, training accuracy 1

step 12400, training accuracy 1

step 12500, training accuracy 1

step 12600, training accuracy 1

step 12700, training accuracy 1

step 12800, training accuracy 1

step 12900, training accuracy 1

step 13000, training accuracy 0.98

step 13100, training accuracy 1

step 13200, training accuracy 1

step 13300, training accuracy 1

step 13400, training accuracy 0.98

step 13500, training accuracy 1

step 13600, training accuracy 0.98

step 13700, training accuracy 1

step 13800, training accuracy 1

step 13900, training accuracy 1

step 14000, training accuracy 1

step 14100, training accuracy 1

step 14200, training accuracy 1

step 14300, training accuracy 1

step 14400, training accuracy 1

step 14500, training accuracy 1

step 14600, training accuracy 1

step 14700, training accuracy 1

step 14800, training accuracy 1

step 14900, training accuracy 1

step 15000, training accuracy 1

step 15100, training accuracy 1

step 15200, training accuracy 1

step 15300, training accuracy 1

step 15400, training accuracy 1

step 15500, training accuracy 1

step 15600, training accuracy 1

step 15700, training accuracy 1

step 15800, training accuracy 1

step 15900, training accuracy 0.98

step 16000, training accuracy 1

step 16100, training accuracy 1

step 16200, training accuracy 1

step 16300, training accuracy 1

step 16400, training accuracy 1

step 16500, training accuracy 1

step 16600, training accuracy 1

step 16700, training accuracy 1

step 16800, training accuracy 1

step 16900, training accuracy 1

step 17000, training accuracy 1

step 17100, training accuracy 1

step 17200, training accuracy 1

step 17300, training accuracy 1

step 17400, training accuracy 1

step 17500, training accuracy 1

step 17600, training accuracy 1

step 17700, training accuracy 1

step 17800, training accuracy 1

step 17900, training accuracy 1

step 18000, training accuracy 1

step 18100, training accuracy 1

step 18200, training accuracy 1

step 18300, training accuracy 1

step 18400, training accuracy 1

step 18500, training accuracy 1

step 18600, training accuracy 1

step 18700, training accuracy 1

step 18800, training accuracy 1

step 18900, training accuracy 1

step 19000, training accuracy 1

step 19100, training accuracy 1

step 19200, training accuracy 1

step 19300, training accuracy 1

step 19400, training accuracy 1

step 19500, training accuracy 1

step 19600, training accuracy 1

step 19700, training accuracy 1

step 19800, training accuracy 0.98

step 19900, training accuracy 1

test accuracy 0.9922

well done!
