[Hadoop Cluster] Part 3: Adding the Tez and Spark Engines to Hive

Selecting the Hive execution engine with a set command

set hive.execution.engine=spark;
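The same statement can be generated from the shell when the engine should be chosen per invocation. The following is a minimal sketch: `engine_stmt` is a hypothetical helper, not part of Hive. It only builds the `set` statement and rejects anything other than the three values Hive accepts for this property (mr, tez, spark).

```shell
# Hypothetical helper: print the HiveQL statement that selects an execution engine.
# Hive accepts mr, tez and spark for hive.execution.engine.
engine_stmt() {
  case "$1" in
    mr|tez|spark) printf 'set hive.execution.engine=%s;\n' "$1" ;;
    *) echo "unsupported engine: $1" >&2; return 1 ;;
  esac
}
```

For example, `hive -e "$(engine_stmt tez) select count(*) from some_table;"` would run a single query on Tez, assuming a table named some_table exists.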

I. Adding Tez

1. Extract the package locally

mkdir /opt/module/tez

tar -zxvf /opt/software/tez-0.10.1-SNAPSHOT-minimal.tar.gz -C /opt/module/tez

2. Create a directory on HDFS and upload the tarball

hadoop fs -mkdir /tez

hadoop fs -put /opt/software/tez-0.10.1-SNAPSHOT.tar.gz /tez

3. Create tez-site.xml in the Hadoop configuration directory

vim $HADOOP_HOME/etc/hadoop/tez-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>tez.lib.uris</name>

<value>${fs.defaultFS}/tez/tez-0.10.1-SNAPSHOT.tar.gz</value>

</property>

<property>

<name>tez.use.cluster.hadoop-libs</name>

<value>true</value>

</property>

<property>

<name>tez.am.resource.memory.mb</name>

<value>1024</value>

</property>

<property>

<name>tez.am.resource.cpu.vcores</name>

<value>1</value>

</property>

<property>

<name>tez.container.max.java.heap.fraction</name>

<value>0.4</value>

</property>

<property>

<name>tez.task.resource.memory.mb</name>

<value>1024</value>

</property>

<property>

<name>tez.task.resource.cpu.vcores</name>

<value>1</value>

</property>

</configuration>
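After editing, it can help to read a value back out of the file to confirm it was saved as intended. The sketch below is an assumption-laden convenience, not a Hadoop tool: `get_site_prop` is a hypothetical helper that relies on each `<name>` and `<value>` element sitting on its own line, as in the file above.

```shell
# Hypothetical helper: print the <value> that follows a given <name>
# in a Hadoop-style *-site.xml.
# Assumes one <name> or <value> element per line; it is not a real XML parser.
# Usage: get_site_prop FILE PROPERTY_NAME
get_site_prop() {
  awk -v key="$2" '
    /<name>/ { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n); hit = (n == key) }
    /<value>/ && hit { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v); print v; exit }
  ' "$1"
}
```

For example, `get_site_prop "$HADOOP_HOME/etc/hadoop/tez-site.xml" tez.am.resource.memory.mb` should print the value configured above.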

Then add a shell profile for Tez:

vim $HADOOP_HOME/etc/hadoop/shellprofile.d/tez.sh

hadoop_add_profile tez

function _tez_hadoop_classpath

{

hadoop_add_classpath "$HADOOP_HOME/etc/hadoop" after

hadoop_add_classpath "/opt/module/tez/*" after

hadoop_add_classpath "/opt/module/tez/lib/*" after

}
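To check that the profile is picked up, the Hadoop classpath can be filtered for Tez entries. This is a small sketch: `tez_classpath_entries` is a hypothetical helper that reads a colon-separated classpath on standard input.

```shell
# Hypothetical helper: keep only the classpath entries that mention tez, one per line.
# Reads a colon-separated classpath on stdin.
tez_classpath_entries() {
  tr ':' '\n' | grep -i 'tez'
}
```

Running `hadoop classpath | tez_classpath_entries` should then list the two /opt/module/tez paths added by the profile, if it was loaded.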

4. Add the following to Hive's hive-site.xml

<property>

<name>hive.execution.engine</name>

<value>tez</value>

</property>

<property>

<name>hive.tez.container.size</name>

<value>1024</value>

</property>

Resolve the logging conflict by removing the duplicate SLF4J binding shipped with Tez

rm /opt/module/tez/lib/slf4j-log4j12-1.7.10.jar
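The version in the jar name may differ between Tez builds, so it can be safer to look the file up before deleting it. A minimal sketch, where `find_slf4j_bindings` is a hypothetical helper and the default directory is the one used in this guide:

```shell
# Hypothetical helper: list SLF4J log4j binding jars directly inside a directory.
# Defaults to the Tez lib directory used in this guide.
find_slf4j_bindings() {
  find "${1:-/opt/module/tez/lib}" -maxdepth 1 -name 'slf4j-log4j12-*.jar'
}
```

Calling `find_slf4j_bindings` with no argument prints whatever binding jar is present under /opt/module/tez/lib, which is the file to pass to `rm`.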

Problem:

Current usage: 303.3 MB of 1 GB physical memory used; 2.7 GB of 2.1 GB virtual memory used. Killing container.

Fix: disable the virtual memory check in yarn-site.xml

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>
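The 2.1 GB limit in the error message is consistent with YARN's default yarn.nodemanager.vmem-pmem-ratio of 2.1 applied to a 1 GB container. The arithmetic can be sketched as follows; `vmem_limit_mb` is a hypothetical helper written only for illustration.

```shell
# Hypothetical helper: virtual-memory ceiling of a container in MB.
# ceiling = physical allocation (MB) x vmem-pmem ratio
# 2.1 is YARN's default for yarn.nodemanager.vmem-pmem-ratio.
# Usage: vmem_limit_mb PHYSICAL_MB [RATIO]
vmem_limit_mb() {
  awk -v pmem="$1" -v ratio="${2:-2.1}" 'BEGIN { printf "%.1f\n", pmem * ratio }'
}

vmem_limit_mb 1024   # prints 2150.4, i.e. roughly the 2.1 GB in the message
```

Raising yarn.nodemanager.vmem-pmem-ratio is an alternative to switching the check off entirely, if keeping some virtual memory limit is preferred.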

II. Adding the Spark engine

1. Extract the package locally

tar -zxvf spark-3.0.0-bin-hadoop3.2.tgz -C /opt/module/

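Set the environment variables (the SPARK_HOME below assumes the extracted spark-3.0.0-bin-hadoop3.2 directory has been renamed to /opt/module/spark)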

sudo vim /etc/profile.d/my_env.sh

# SPARK_HOME

export SPARK_HOME=/opt/module/spark

export PATH=$PATH:$SPARK_HOME/bin

2. Create Spark's default configuration in the Hive conf directory

vim /opt/module/hive/conf/spark-defaults.conf

spark.master yarn

spark.eventLog.enabled true

spark.eventLog.dir hdfs://hadoop102:8020/spark-history

spark.executor.memory 1g

spark.driver.memory 1g
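With event logging enabled, Spark expects the directory named in spark.eventLog.dir to exist already, so it is worth creating it on HDFS before the first query. The sketch below reads the path from the configuration file instead of repeating it by hand. `spark_conf_get` is a hypothetical helper and assumes one whitespace-separated "key value" pair per line.

```shell
# Hypothetical helper: print the value of one key from a spark-defaults.conf-style file.
# Assumes one whitespace-separated "key value" pair per line.
# Usage: spark_conf_get FILE KEY
spark_conf_get() {
  awk -v key="$2" '$1 == key { print $2; exit }' "$1"
}
```

A possible use is `hadoop fs -mkdir -p "$(spark_conf_get /opt/module/hive/conf/spark-defaults.conf spark.eventLog.dir)"`, which creates the history directory if it is missing.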

Add the following to hive-site.xml

<!-- Location of the Spark dependencies (note: port 8020 must match the NameNode port) -->

<property>

<name>spark.yarn.jars</name>

<value>hdfs://hadoop102:8020/spark-jars/*</value>

</property>

<!-- Hive execution engine -->

<property>

<name>hive.execution.engine</name>

<value>spark</value>

</property>

3. Upload the jars from the "without-hadoop" Spark build to HDFS

tar -zxvf /opt/software/spark-3.0.0-bin-without-hadoop.tgz

hadoop fs -mkdir /spark-jars

hadoop fs -put spark-3.0.0-bin-without-hadoop/jars/* /spark-jars
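A quick way to confirm the upload is to compare the number of local jars with the number of files now in /spark-jars. This is a rough sketch, and `count_local_jars` is a hypothetical helper.

```shell
# Hypothetical helper: count the .jar files directly inside a local directory.
count_local_jars() {
  find "$1" -maxdepth 1 -name '*.jar' -type f | wc -l | tr -d ' '
}
```

The result of `count_local_jars spark-3.0.0-bin-without-hadoop/jars` can be compared with the FILE_COUNT column (the second one) printed by `hadoop fs -count /spark-jars`.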

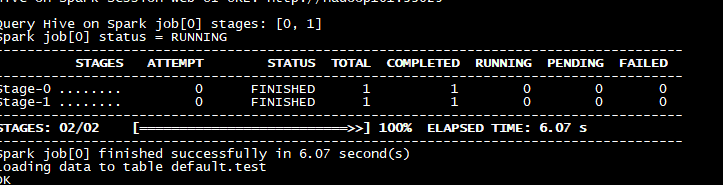

Final result:

The query ran successfully.
