大二下学期团队项目(爬取豆瓣电影)

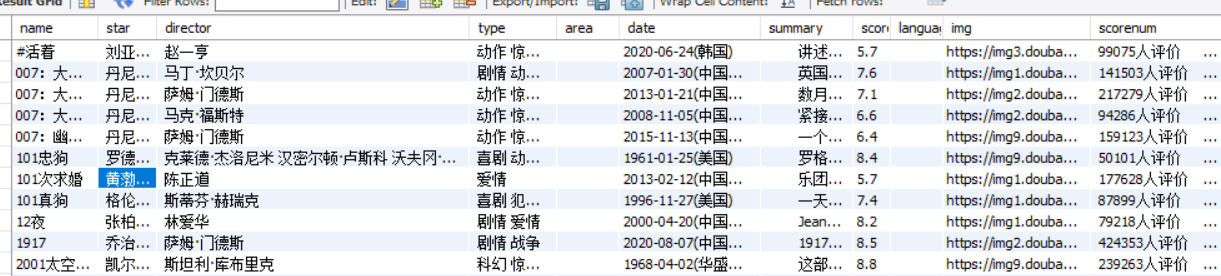

今日爬取了豆瓣的一部分电影信息,大约2000个:

import string import time import traceback import pymysql import requests import re from lxml import etree import random from bs4 import BeautifulSoup from flask import json def get_conn(): """ :return: 连接,游标192.168.1.102 """ # 创建连接 conn = pymysql.connect(host="*", user="root", password="root", db="*", charset="utf8") # 创建游标 cursor = conn.cursor() # 执行完毕返回的结果集默认以元组显示 return conn, cursor def close_conn(conn, cursor): if cursor: cursor.close() if conn: conn.close() def query(sql,*args): """ 封装通用查询 :param sql: :param args: :return: 返回查询结果以((),(),)形式 """ conn,cursor = get_conn(); cursor.execute(sql) res=cursor.fetchall() close_conn(conn,cursor) return res def get_tencent_data(): #豆瓣的网址 url_bean = 'https://movie.douban.com/j/new_search_subjects?sort=T&range=0,10&tags=%E7%94%B5%E5%BD%B1&start=' headers = { 'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.90 Safari/537.36', } a=1 num=0 cursor = None conn = None conn, cursor = get_conn() while a<=100: num_str='%d'%num num=num+20 a=a+1; # 获取豆瓣页面电影数据 r = requests.get(url_bean + num_str, headers=headers) res_bean = json.loads(r.text); data_bean = res_bean["data"] print(f"{time.asctime()}开始插入数据",(a-1)) #循环遍历电影数据 try: for i in data_bean: #分配数据 score = i["rate"] director = i["directors"] # [] director_str = "" for j in director: director_str = director_str + " " + j name = i["title"] img = i["cover"] star = i["casts"] # [] star_str = "" for j in star: star_str = star_str + " " + j # 分配数据 # 获取电影详细数据的网址 url_details = i["url"] r = requests.get(url_details, headers=headers) soup_bean = BeautifulSoup(r.text,"lxml") #获取详细数据 span = soup_bean.find_all("span", {"property": "v:genre"}) type = "" for i in span: type = type + " " + i.text span = soup_bean.find_all("span", {"property": "v:runtime"}) timelen = span[0].text span = soup_bean.find_all("span", {"property": "v:initialReleaseDate"}) date = span[0].text span = soup_bean.find("a", {"class", "rating_people"}) scorenum = span.text span = soup_bean.find("span", {"property": "v:summary"}) summary = span.text.replace(" ", "")#将空格去掉 # 获取详细数据 sql = "insert into test_bean values(%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)" cursor.execute(sql, [name, star_str, director_str, type, "", date, summary, score, "", img, scorenum, timelen]) conn.commit() # 提交事务 update delete insert操作 //*[@id="info"]/text()[2] except: traceback.print_exc() print(f"{time.asctime()}插入数据完毕",(a-1))#循环了几次 close_conn(conn, cursor) print(f"{time.asctime()}所有数据插入完毕") if __name__ == "__main__": get_tencent_data()

浙公网安备 33010602011771号

浙公网安备 33010602011771号