转 percona-toolkit的安装和使用

###sample

percona-toolkit 3.0.13 简单安装记录

环境:

centos6.x

mysql:8.0.17

https://blog.csdn.net/weixin_30405421/article/details/99660363

# 清除yum缓存

yum clean all

yum -y install perl-DBI

yum -y install perl-DBD-MySQL

yum -y install perl-IO-Socket-SSL.noarch

yum -y install perl-Time-HiRes

yum -y install perl-TermReadKey

yum -y install perl-ExtUtils-MakeMaker

->方法1

cd /opt/

wget https://www.percona.com/downloads/percona-toolkit/3.0.13/binary/tarball/percona-toolkit-3.0.13_x86_64.tar.gz

tar -zxf percona-toolkit-3.0.13_x86_64.tar.gz

cd /usr/local/

ln -s /opt/percona-toolkit-3.0.13 ptools

ln -s /opt/percona-toolkit-3.0.13/bin/* /usr/bin/

->方法2:

rpm -ivh *.rpm

检查是否安装成功

which pt-query-digest

转载于:https://www.cnblogs.com/bjx2020/p/11357705.html

注意:如果是在windows ,就需要到以下网站下载 wget.exe 文件即可,(wget 支持断点续传)

https://eternallybored.org/misc/wget/

####

percona-toolkit的安装和使用

percona-toolkit简介

percona-toolkit是一组高级命令行工具的集合,用来执行各种通过手工执行非常复杂和麻烦的mysql和系统任务,这些任务包括:

-

检查master和slave数据的一致性

-

有效地对记录进行归档

-

查找重复的索引

-

对服务器信息进行汇总

-

分析来自日志和tcpdump的查询

-

当系统出问题的时候收集重要的系统信息

percona-toolkit工具包安装

#wget https://www.percona.com/downloads/percona-toolkit/2.2.20/deb/percona-toolkit_2.2.20-1.tar.gz

#tar zxf percona-toolkit_2.2.20-1.tar.gz

#cd percona-toolkit-2.2.20/

#perl Makefile.PL 报错如下:

[root@b0d81312ea9e percona-toolkit-2.2.20]# perl Makefile.PL

Can't locate ExtUtils/MakeMaker.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 .) at Makefile.PL line 1.

BEGIN failed--compilation aborted at Makefile.PL line 1.

该错误解决办法:

#yum install perl-ExtUtils-CBuilder perl-ExtUtils-MakeMaker -y

问题解决,下面我继续安装

#perl Makefile.PL

#make && make install

安装成功!

---------------------------------------------------------------------------------------------------------

下面我使用percona-toolkit中的pt-online-schema-change来给一个表增加字段

pt-online-schema-change用处:

ALTER tables without locking them.修改过程中不会造成读写阻塞,用于给大表添加字段。

实现原理:

如果表有外键,除非使用 –alter-foreign-keys-method 指定特定的值,否则工具不予执行。

1 创建一个和你要执行 alter 操作的表一样的空表结构。

2 执行表结构修改,然后从原表中的数据到copy到 表结构修改后的表,

3 在原表上创建触发器将 copy 数据的过程中,在原表的更新操作 更新到新表.

注意:如果表中已经定义了触发器这个工具就不能工作了。

4 copy 完成以后,用rename table 新表代替原表,默认删除原表。

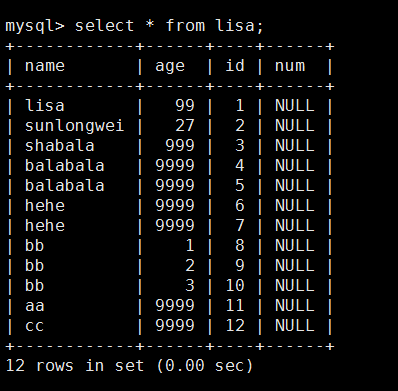

# pt-online-schema-change --alter "ADD COLUMN num INT" D=sls,t=lisa

//给我mysql中的sls数据库的lisa表增加一个num字段 D:指定数据库 t:指定表

报错如下:

Can't locate Time/HiRes.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 .) at /usr/local/bin/pt-online-schema-change line 3727.

BEGIN failed--compilation aborted at /usr/local/bin/pt-online-schema-change line 3727.

该错误的意思是:系统没有安装Perl的Time组件

该错误解决办法:

# yum -y install perl-Time*

解决问题后再次添加字段:

# pt-online-schema-change --alter "ADD COLUMN num INT" D=sls,t=lisa

再次报错如下:

Operation, tries, wait:

analyze_table, 10, 1

copy_rows, 10, 0.25

create_triggers, 10, 1

drop_triggers, 10, 1

swap_tables, 10, 1

update_foreign_keys, 10, 1

Exiting without altering `sls`.`lisa` because neither --dry-run nor --execute was specified. Please read the tool's documentation carefully before using this tool.

解决办法:

# pt-online-schema-change --alter "ADD COLUMN num INT" D=sls,t=lisa --user=sls --socket=/tmp/mysql.sock --password=122541 --execute

报错如下:

You do not have the PROCESS privilege at /usr/local/bin/pt-online-schema-change line 4330.

错误原因:没有给sls用户授权

解决办法

mysql>grant all privileges on *.* to sls@localhost identified by "122541";

mysql> flush privileges;

解决问题后再次添加字段:

# pt-online-schema-change --alter "ADD COLUMN num INT" D=sls,t=lisa --user=sls --socket=/tmp/mysql.sock --password=122541 --execute

报错如下:

No slaves found. See --recursion-method if host b0d81312ea9e has slaves.

Not checking slave lag because no slaves were found and --check-slave-lag was not specified.

Operation, tries, wait:

analyze_table, 10, 1

copy_rows, 10, 0.25

create_triggers, 10, 1

drop_triggers, 10, 1

swap_tables, 10, 1

update_foreign_keys, 10, 1

Altering `sls`.`lisa`...

Creating new table...

Created new table sls._lisa_new OK.

Altering new table...

Altered `sls`.`_lisa_new` OK.

2016-12-24T08:54:54 Dropping new table...

2016-12-24T08:54:54 Dropped new table OK.

`sls`.`lisa` was not altered.

The new table `sls`.`_lisa_new` does not have a PRIMARY KEY or a unique index which is required for the DELETE trigger.

错误原因:没有给lisa表创建主键

解决办法:

mysql> alter table lisa add id int not null primary key auto_increment;

//id列必须是lisa表中不存在的列。存在会报错。

解决问题后再次添加字段:

# pt-online-schema-change --alter "ADD COLUMN num INT" D=sls,t=lisa --user=sls --socket=/tmp/mysql.sock --password=122541 --execute

成功了!!!

No slaves found. See --recursion-method if host b0d81312ea9e has slaves.

Not checking slave lag because no slaves were found and --check-slave-lag was not specified.

Operation, tries, wait:

analyze_table, 10, 1

copy_rows, 10, 0.25

create_triggers, 10, 1

drop_triggers, 10, 1

swap_tables, 10, 1

update_foreign_keys, 10, 1

Altering `sls`.`lisa`...

Creating new table...

Created new table sls._lisa_new OK.

Altering new table...

Altered `sls`.`_lisa_new` OK.

2016-12-24T09:09:15 Creating triggers...

2016-12-24T09:09:15 Created triggers OK.

2016-12-24T09:09:15 Copying approximately 12 rows...

2016-12-24T09:09:15 Copied rows OK.

2016-12-24T09:09:15 Analyzing new table...

2016-12-24T09:09:15 Swapping tables...

2016-12-24T09:09:15 Swapped original and new tables OK.

2016-12-24T09:09:15 Dropping old table...

2016-12-24T09:09:15 Dropped old table `sls`.`_lisa_old` OK.

2016-12-24T09:09:15 Dropping triggers...

2016-12-24T09:09:15 Dropped triggers OK.

Successfully altered `sls`.`lisa`.

成功了!!!

终于成功了!!我已经爱上我自己!!哈哈哈

#####sample

-》 https://my.oschina.net/sansom/blog/164085

-》https://www.cnblogs.com/shengdimaya/p/7063204.html

pt-query-digest(percona toolkit)小解

-》 https://yq.aliyun.com/articles/505021

pt-query-digest --filter 使用方法

tar percona-toolkit-2.2.4.tar.gz

cd percona-toolkit-2.2.4

perl Makefile.PL

make && make install

pt-query-digest --user=myuser --password=111111 --review h=192.168.6.20,D=myawr,t=myawr_query_review --history h=192.168.6.20,D=myawr,t=myawr_query_review_history --no-report --limit=100% --filter=" \$event->{add_column} = length(\$event->{arg}) and \$event->{hostid}=7" /usr/local/mysql/data/mysql-6-slow.log

--user=myuser(传送到指定数据的用户)

--password=111111(传送到指定数据的用户的密码)

--review h=192.168.6.20(传送到指定远程指定的数据库)

D=myawr(数据库名)

t=myawr_query_review(表名)

--history (传送到远程数据库历史数据表)

h=192.168.6.20(传送到指定远程指定的数据库)

D=myawr(数据库名)

t=myawr_query_review_history(表名)

CREATE TABLE query_review (

checksum BIGINT UNSIGNED NOT NULL PRIMARY KEY,

fingerprint TEXT NOT NULL,

sample TEXT NOT NULL,

first_seen DATETIME,

last_seen DATETIME,

reviewed_by VARCHAR(20),

reviewed_on DATETIME,

comments TEXT

)历史表创建脚本:

CREATE TABLE query_review_history (

checksum BIGINT UNSIGNED NOT NULL,

sample TEXT NOT NULL,

ts_min DATETIME,

ts_max DATETIME,

ts_cnt FLOAT,

Query_time_sum FLOAT,

Query_time_min FLOAT,

Query_time_max FLOAT,

Query_time_pct_95 FLOAT,

Query_time_stddev FLOAT,

Query_time_median FLOAT,

Lock_time_sum FLOAT,

Lock_time_min FLOAT,

Lock_time_max FLOAT,

Lock_time_pct_95 FLOAT,

Lock_time_stddev FLOAT,

Lock_time_median FLOAT,

Rows_sent_sum FLOAT,

Rows_sent_min FLOAT,

Rows_sent_max FLOAT,

Rows_sent_pct_95 FLOAT,

Rows_sent_stddev FLOAT,

Rows_sent_median FLOAT,

Rows_examined_sum FLOAT,

Rows_examined_min FLOAT,

Rows_examined_max FLOAT,

Rows_examined_pct_95 FLOAT,

Rows_examined_stddev FLOAT,

Rows_examined_median FLOAT,

-- Percona extended slowlog attributes

-- http://www.percona.com/docs/wiki/patches:slow_extended

Rows_affected_sum FLOAT,

Rows_affected_min FLOAT,

Rows_affected_max FLOAT,

Rows_affected_pct_95 FLOAT,

Rows_affected_stddev FLOAT,

Rows_affected_median FLOAT,

Rows_read_sum FLOAT,

Rows_read_min FLOAT,

Rows_read_max FLOAT,

Rows_read_pct_95 FLOAT,

Rows_read_stddev FLOAT,

Rows_read_median FLOAT,

Merge_passes_sum FLOAT,

Merge_passes_min FLOAT,

Merge_passes_max FLOAT,

Merge_passes_pct_95 FLOAT,

Merge_passes_stddev FLOAT,

Merge_passes_median FLOAT,

InnoDB_IO_r_ops_min FLOAT,

InnoDB_IO_r_ops_max FLOAT,

InnoDB_IO_r_ops_pct_95 FLOAT,

InnoDB_IO_r_ops_stddev FLOAT,

InnoDB_IO_r_ops_median FLOAT,

InnoDB_IO_r_bytes_min FLOAT,

InnoDB_IO_r_bytes_max FLOAT,

InnoDB_IO_r_bytes_pct_95 FLOAT,

InnoDB_IO_r_bytes_stddev FLOAT,

InnoDB_IO_r_bytes_median FLOAT,

InnoDB_IO_r_wait_min FLOAT,

InnoDB_IO_r_wait_max FLOAT,

InnoDB_IO_r_wait_pct_95 FLOAT,

InnoDB_IO_r_wait_stddev FLOAT,

InnoDB_IO_r_wait_median FLOAT,

InnoDB_rec_lock_wait_min FLOAT,

InnoDB_rec_lock_wait_max FLOAT,

InnoDB_rec_lock_wait_pct_95 FLOAT,

InnoDB_rec_lock_wait_stddev FLOAT,

InnoDB_rec_lock_wait_median FLOAT,

InnoDB_queue_wait_min FLOAT,

InnoDB_queue_wait_max FLOAT,

InnoDB_queue_wait_pct_95 FLOAT,

InnoDB_queue_wait_stddev FLOAT,

InnoDB_queue_wait_median FLOAT,

InnoDB_pages_distinct_min FLOAT,

InnoDB_pages_distinct_max FLOAT,

InnoDB_pages_distinct_pct_95 FLOAT,

InnoDB_pages_distinct_stddev FLOAT,

InnoDB_pages_distinct_median FLOAT,

-- Boolean (Yes/No) attributes. Only the cnt and sum are needed for these.

-- cnt is how many times is attribute was recorded and sum is how many of

-- those times the value was Yes. Therefore sum/cnt * 100 = % of recorded

-- times that the value was Yes.

QC_Hit_cnt FLOAT,

QC_Hit_sum FLOAT,

Full_scan_cnt FLOAT,

Full_scan_sum FLOAT,

Full_join_cnt FLOAT,

Full_join_sum FLOAT,

Tmp_table_cnt FLOAT,

Tmp_table_sum FLOAT,

Tmp_table_on_disk_cnt FLOAT,

Tmp_table_on_disk_sum FLOAT,

Filesort_cnt FLOAT,

Filesort_sum FLOAT,

Filesort_on_disk_cnt FLOAT,

Filesort_on_disk_sum FLOAT,

PRIMARY KEY(checksum, ts_min, ts_max)

);

####sample 1

2.配置慢查询

被监控机主要有以下几个步骤:mysql基础配置--依赖软件安装---脚本配置--定时任务。

mysql基础配置:

在mysql命令行中执行以下命令:

set global slow_query_log=on;

set global long_query_time=1;#设置记录查询超过多长时间的sql,根据自己情况定

set global slow_query_log_file='/opt/lampp/var/mysql/';#设置mysql慢查询日志路径

断开当前连接然后重新连接进入命令行查询(缓存):

show variables like '%slow%';

show variables like '%long_query_time%';作者:dancingking

链接:https://www.jianshu.com/p/ff62001d9f1c

来源:简书

著作权归作者所有。商业转载请联系作者获得授权,非商业转载请注明出处。

开启慢查询日志,可以让MySQL记录下查询超过指定时间的语句,通过定位分析性能的瓶颈,才能更好的优化数据库系统的性能。

2.设置mysql慢查询

方法一:全局变量设置(临时生效)

将 slow_query_log 全局变量设置为“ON”状态

mysql> set global slow_query_log='ON';

设置慢查询日志存放的位置

mysql> set global slow_query_log_file='/usr/local/mysql/data/slow.log';

查询超过1秒就记录

mysql> set global long_query_time=1;

方法二:配置文件设置(永久生效)

修改配置文件my.cnf,在[mysqld]下方加入:

[mysqld] slow_query_log = ON slow_query_log_file = /usr/local/mysql/data/slow.log long_query_time = 1

重启MySQL服务

service mysqld restart

3.查看mysql慢查询状态

show variables like '%slow%'; #查看慢查询的开启状态和慢查询日志的存储路径

show variables like '%log_output%'; #查看当前慢查询日志输出的格式,可以是FILE(存储在数数据库的数据文件中的hostname.log),也可以是TABLE(存储在数据库中的mysql.general_log)

show variables like 'long%'; #查看慢查询阈值,当查询时间多于设定的阈值时,记录日志

select sleep(2); #插入一条数据,数据大于“long_query_time”(1秒)时(不包含1秒),会把日志保存到慢查询日志中

show global status like '%Slow_queries%'; #查看慢查询的个数,根据个数是否新增来判断测试结果

4.mysql慢查询日志分析工具

mysqldumpslow

浙公网安备 33010602011771号

浙公网安备 33010602011771号