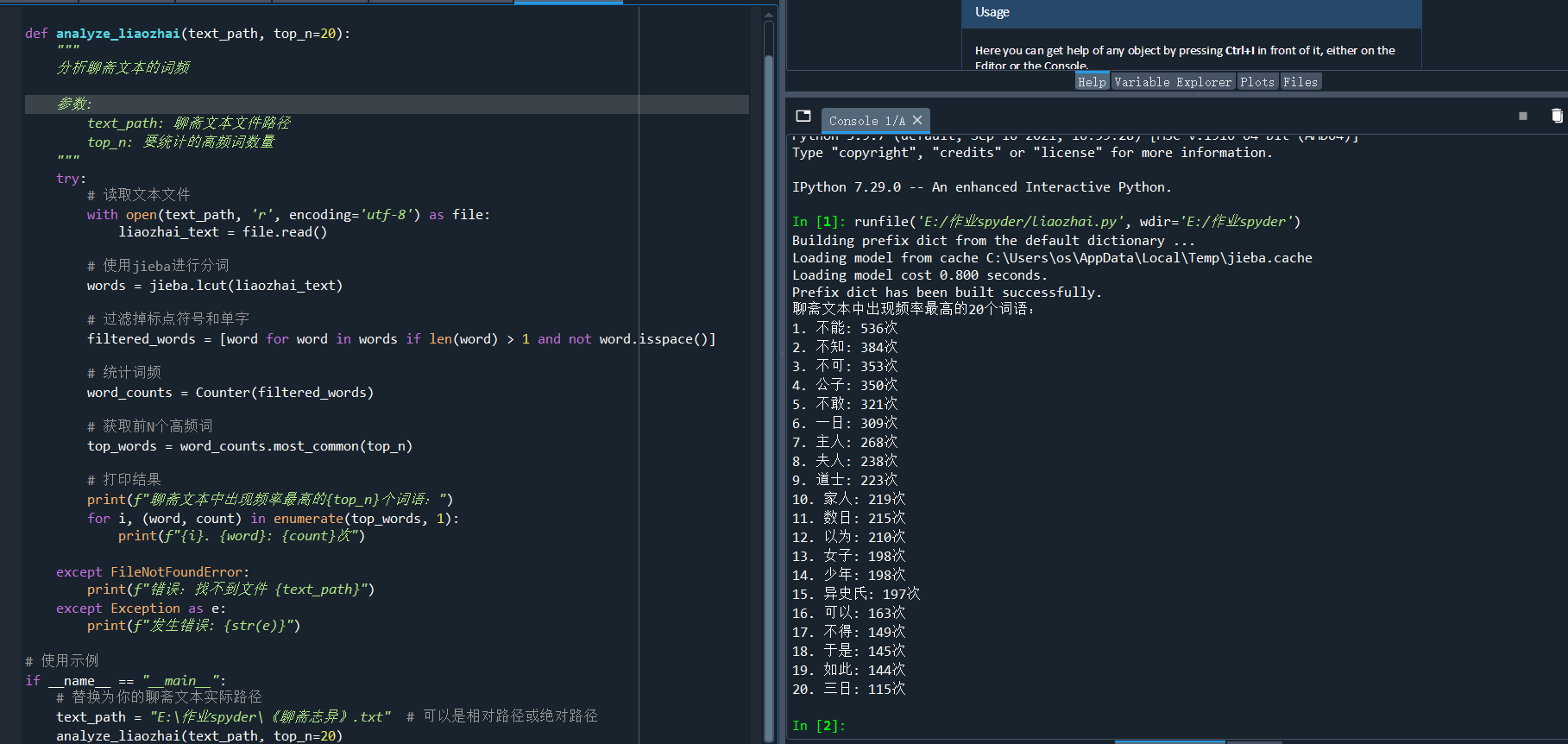

聊斋相关的分词出现次数前20

import jieba

from collections import Counter

def analyze_liaozhai(text_path, top_n=20):

"""

分析聊斋文本的词频

参数:

text_path: 聊斋文本文件路径

top_n: 要统计的高频词数量

"""

try:

# 读取文本文件

with open(text_path, 'r', encoding='utf-8') as file:

liaozhai_text = file.read()

# 使用jieba进行分词

words = jieba.lcut(liaozhai_text)

# 过滤掉标点符号和单字

filtered_words = [word for word in words if len(word) > 1 and not word.isspace()]

# 统计词频

word_counts = Counter(filtered_words)

# 获取前N个高频词

top_words = word_counts.most_common(top_n)

# 打印结果

print(f"聊斋文本中出现频率最高的{top_n}个词语:")

for i, (word, count) in enumerate(top_words, 1):

print(f"{i}. {word}: {count}次")

except FileNotFoundError:

print(f"错误:找不到文件 {text_path}")

except Exception as e:

print(f"发生错误: {str(e)}")

使用示例

if name == "main":

# 替换为你的聊斋文本实际路径

text_path = "E:\作业spyder\《聊斋志异》.txt" # 可以是相对路径或绝对路径

analyze_liaozhai(text_path, top_n=20)

浙公网安备 33010602011771号

浙公网安备 33010602011771号