Deploying OperatorHub Offline in an OpenShift 4.2 Environment

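By default, OperatorHub on an OCP 4 cluster installed in a disconnected environment has no content. For the full offline procedure, see the official documentation: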

https://docs.openshift.com/container-platform/4.2/operators/olm-restricted-networks.html

Here I use amq-streams as an example to record the offline deployment process. How to import Operators in bulk is still under investigation.

- Query the API to list all packages

    $ curl 'https://quay.io/cnr/api/v1/packages?namespace=redhat-operators' > packages.txt

- Build the amq-streams release URL and curl it to get the digest needed for the download

    bash-4.2$ curl https://quay.io/cnr/api/v1/packages/redhat-operators/amq-streams/4.0.0
    [{"content":{"digest":"091168d8d6f9511404ffa1d69502c84144e1a83ceb5503e8c556f69a1af66a1e","mediaType":"application/vnd.cnr.package.helm.v0.tar+gzip","size":89497,"urls":[]},"created_at":"2019-10-24T09:09:26","digest":"sha256:d0707e2a688e64907ff4d287c5f95a35b45b08121f8a7d556b9f130840e39052","mediaType":"application/vnd.cnr.package-manifest.helm.v0.json","metadata":null,"package":"redhat-operators/amq-streams","release":"4.0.0"}]
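The blob URL used in the next step is the package path plus the `content.digest` value from this response. As a rough sketch of how that could be scripted, the function below reads the release JSON on stdin and prints the blob URL. The name `blob_url` is hypothetical, and the `sed` pattern assumes the compact JSON shape shown above; a real JSON parser would be more robust.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original procedure).
# Reads the release JSON on stdin and prints the blob download URL.
# Assumes the compact JSON shape shown above: a "content" object whose
# first field is a 64-character hex "digest".
blob_url() {
  local package="$1"   # e.g. redhat-operators/amq-streams
  local digest
  digest=$(sed -n 's/.*"content":{"digest":"\([0-9a-f]\{64\}\)".*/\1/p')
  # Fail if no digest could be extracted from the input
  [ -n "$digest" ] || return 1
  printf 'https://quay.io/cnr/api/v1/packages/%s/blobs/sha256/%s\n' "$package" "$digest"
}
```

It could be used as `curl -s https://quay.io/cnr/api/v1/packages/redhat-operators/amq-streams/4.0.0 | blob_url redhat-operators/amq-streams`.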

- Save the Operator content as a tar.gz archive

    curl -XGET https://quay.io/cnr/api/v1/packages/redhat-operators/amq-streams/blobs/sha256/091168d8d6f9511404ffa1d69502c84144e1a83ceb5503e8c556f69a1af66a1e \
        -o amq-streams.tar.gz

Create a directory and extract the archive into it

    $ mkdir -p manifests/
    $ tar -xf amq-streams.tar.gz -C manifests/

- After renaming, check the layout with tree

    [root@localhost ~]# tree manifests/
    manifests/
    └── amq-streams
        ├── 1.0.0
        │   ├── amq-streams-kafkaconnect.crd.yaml
        │   ├── amq-streams-kafkaconnects2i.crd.yaml
        │   ├── amq-streams-kafka.crd.yaml
        │   ├── amq-streams-kafkamirrormaker.crd.yaml
        │   ├── amq-streams-kafkatopic.crd.yaml
        │   ├── amq-streams-kafkauser.crd.yaml
        │   └── amq-streams.v1.0.0.clusterserviceversion.yaml
        ├── 1.1.0
        │   ├── amq-streams-kafkaconnect.crd.yaml
        │   ├── amq-streams-kafkaconnects2i.crd.yaml
        │   ├── amq-streams-kafka.crd.yaml
        │   ├── amq-streams-kafkamirrormaker.crd.yaml
        │   ├── amq-streams-kafkatopic.crd.yaml
        │   ├── amq-streams-kafkauser.crd.yaml
        │   └── amq-streams.v1.1.0.clusterserviceversion.yaml
        ├── 1.2.0
        │   ├── amq-streams-kafkabridges.crd.yaml
        │   ├── amq-streams-kafkaconnects2is.crd.yaml
        │   ├── amq-streams-kafkaconnects.crd.yaml
        │   ├── amq-streams-kafkamirrormakers.crd.yaml
        │   ├── amq-streams-kafkas.crd.yaml
        │   ├── amq-streams-kafkatopics.crd.yaml
        │   ├── amq-streams-kafkausers.crd.yaml
        │   └── amq-streams.v1.2.0.clusterserviceversion.yaml
        ├── 1.3.0
        │   ├── amq-streams-kafkabridges.crd.yaml
        │   ├── amq-streams-kafkaconnects2is.crd.yaml
        │   ├── amq-streams-kafkaconnects.crd.yaml
        │   ├── amq-streams-kafkamirrormakers.crd.yaml
        │   ├── amq-streams-kafkas.crd.yaml
        │   ├── amq-streams-kafkatopics.crd.yaml
        │   ├── amq-streams-kafkausers.crd.yaml
        │   └── amq-streams.v1.3.0.clusterserviceversion.yaml
        └── amq-streams.package.yaml

Take a look at package.yaml

    [root@localhost ~]# cat manifests/amq-streams/amq-streams.package.yaml
    packageName: amq-streams
    channels:
    - name: stable
      currentCSV: amqstreams.v1.3.0

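The current CSV points to the 1.3.0 directory, so go into 1.3.0 and update amq-streams.v1.3.0.clusterserviceversion.yaml. Specifically, the values to change are the ones in the excerpt below (shown in bold in the original post).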

          labels:
            name: amq-streams-cluster-operator
            strimzi.io/kind: cluster-operator
        spec:
          serviceAccountName: strimzi-cluster-operator
          containers:
          - name: cluster-operator
            image: registry.redhat.ren/amq7/amq-streams-operator:1.3.0
            args:
            - /opt/strimzi/bin/cluster_operator_run.sh
            env:
            - name: STRIMZI_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.annotations['olm.targetNamespaces']
            - name: STRIMZI_FULL_RECONCILIATION_INTERVAL_MS
              value: "120000"
            - name: STRIMZI_OPERATION_TIMEOUT_MS
              value: "300000"
            - name: STRIMZI_DEFAULT_ZOOKEEPER_IMAGE
              value: registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0
            - name: STRIMZI_DEFAULT_TLS_SIDECAR_ENTITY_OPERATOR_IMAGE
              value: registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0
            - name: STRIMZI_DEFAULT_TLS_SIDECAR_KAFKA_IMAGE
              value: registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0
            - name: STRIMZI_DEFAULT_TLS_SIDECAR_ZOOKEEPER_IMAGE
              value: registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0
            - name: STRIMZI_DEFAULT_KAFKA_EXPORTER_IMAGE
              value: registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0
            - name: STRIMZI_KAFKA_IMAGES
              value: |
                2.2.1=registry.redhat.io/amq7/amq-streams-kafka-22:1.3.0
                2.3.0=registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0
            - name: STRIMZI_KAFKA_CONNECT_IMAGES
              value: |
                2.2.1=registry.redhat.io/amq7/amq-streams-kafka-22:1.3.0

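Downloading the images and pushing them to your own registry is not covered here.

Because I only changed the operator image above and did not change or import the images listed after it, image pulls failed later on. Enough said.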
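One way to avoid leaving some image references untouched is to rewrite every `registry.redhat.io` reference in the CSV in a single pass. The sketch below is only an illustration: the function names are hypothetical, and it assumes every image has been mirrored to the private registry under the same repository path and tag, which the original steps do not confirm.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of the original procedure).

# List every distinct registry.redhat.io image referenced in a manifest,
# which is also the list of images that would need to be mirrored.
list_upstream_images() {
  grep -o 'registry\.redhat\.io/[^[:space:]"]*' "$1" | sort -u
}

# Print the manifest with registry.redhat.io replaced by the mirror host.
# Assumption: the mirror keeps the same repository paths and tags.
rewrite_registry() {
  local file="$1" mirror="$2"
  sed "s#registry\.redhat\.io/#${mirror}/#g" "$file"
}
```

For example, `rewrite_registry amq-streams.v1.3.0.clusterserviceversion.yaml registry.redhat.ren > csv.new` writes the rewritten CSV to a new file that can be reviewed before replacing the original.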

- Create a custom-registry.Dockerfile

    [root@helper operator]# cat custom-registry.Dockerfile
    FROM registry.redhat.io/openshift4/ose-operator-registry:v4.2.0 AS builder
    COPY manifests manifests
    RUN /bin/initializer -o ./bundles.db;sleep 20
    #FROM scratch
    #COPY --from=builder /registry/bundles.db /bundles.db
    #COPY --from=builder /bin/registry-server /registry-server
    #COPY --from=builder /bin/grpc_health_probe /bin/grpc_health_probe
    EXPOSE 50051
    ENTRYPOINT ["/bin/registry-server"]
    CMD ["--database", "/registry/bundles.db"]

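If you follow the official documentation, there are several big pitfalls at this step:

- After initializer runs, the build complains that bundles.db cannot be found. In practice a sleep is needed after it runs so that the file gets written.

- The bundles.db location is wrong: it is under /registry, not /build.

- The registry-server location is wrong: it is under /bin, not /build/bin/.

- With FROM scratch, the resulting image cannot run because of a format problem.

This cost me more than three hours.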

- Disable the default OperatorHub sources

    $ oc patch OperatorHub cluster --type json \
        -p '[{"op": "add", "path": "/spec/disableAllDefaultSources", "value": true}]'

- Build and push the image

    podman build -f custom-registry.Dockerfile -t registry.redhat.ren/ocp4/custom-registry

    podman push registry.redhat.ren/ocp4/custom-registry

- Create a my-operator-catalog.yaml file

    [root@helper operator]# cat my-operator-catalog.yaml
    apiVersion: operators.coreos.com/v1alpha1
    kind: CatalogSource
    metadata:
      name: my-operator-catalog
      namespace: openshift-marketplace
    spec:
      displayName: My Operator Catalog
      sourceType: grpc
      image: registry.redhat.ren/ocp4/custom-registry:latest

Then create the catalog source:

    oc create -f my-operator-catalog.yaml

If everything is working, you should see:

    [root@helper operator]# oc get pods -n openshift-marketplace
    NAME                                    READY   STATUS    RESTARTS   AGE
    marketplace-operator-5c846b89cb-k5827   1/1     Running   1          2d4h
    my-operator-catalog-8jt25               1/1     Running   0          4m53s

    [root@helper operator]# oc get catalogsource -n openshift-marketplace
    NAME                  DISPLAY               TYPE   PUBLISHER   AGE
    my-operator-catalog   My Operator Catalog   grpc               5m5s

    [root@helper operator]# oc get packagemanifest -n openshift-marketplace
    NAME          CATALOG               AGE
    amq-streams   My Operator Catalog   5m11s

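I ran into a problem partway through. It turned out that the nodes could not resolve registry.redhat.ren, the external image registry address, so I added it to /etc/hosts on each node by hand.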
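Since the hosts entry has to be added on every node, a small idempotent helper can make the step repeatable. This is only a sketch: the function name `add_host_entry` is hypothetical, and the IP address in the usage note is a placeholder from the documentation range, not the real registry address.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original procedure).
# Appends "IP HOSTNAME" to a hosts file unless the hostname already
# appears in it. The third argument defaults to /etc/hosts.
add_host_entry() {
  local ip="$1" name="$2" hosts_file="${3:-/etc/hosts}"
  # -F treats the hostname as a literal string, -w requires a whole-word match.
  # Note: a commented-out line containing the hostname also counts as present.
  if grep -qwF -- "$name" "$hosts_file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}
```

Run as root on a node, a call such as `add_host_entry 192.0.2.10 registry.redhat.ren` would add the entry once and do nothing on later runs.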

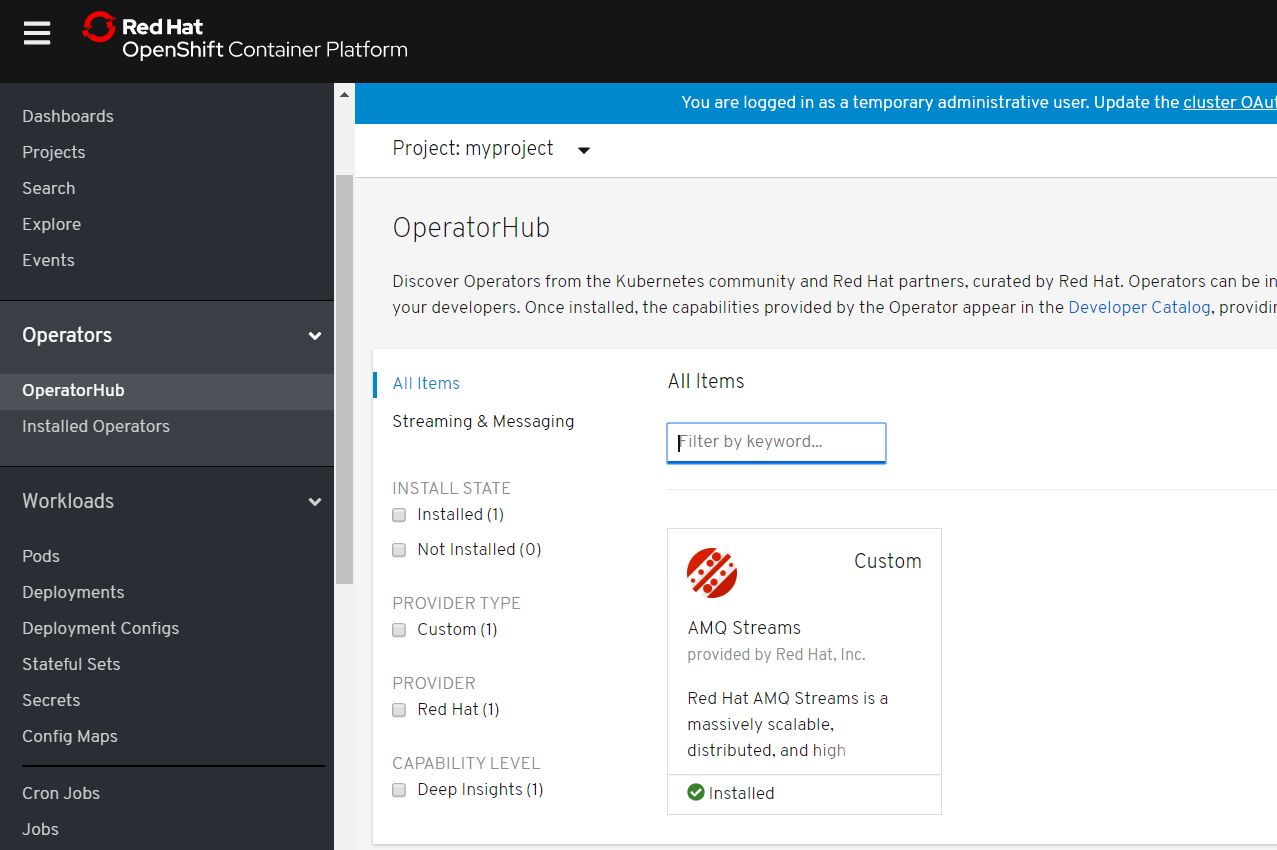

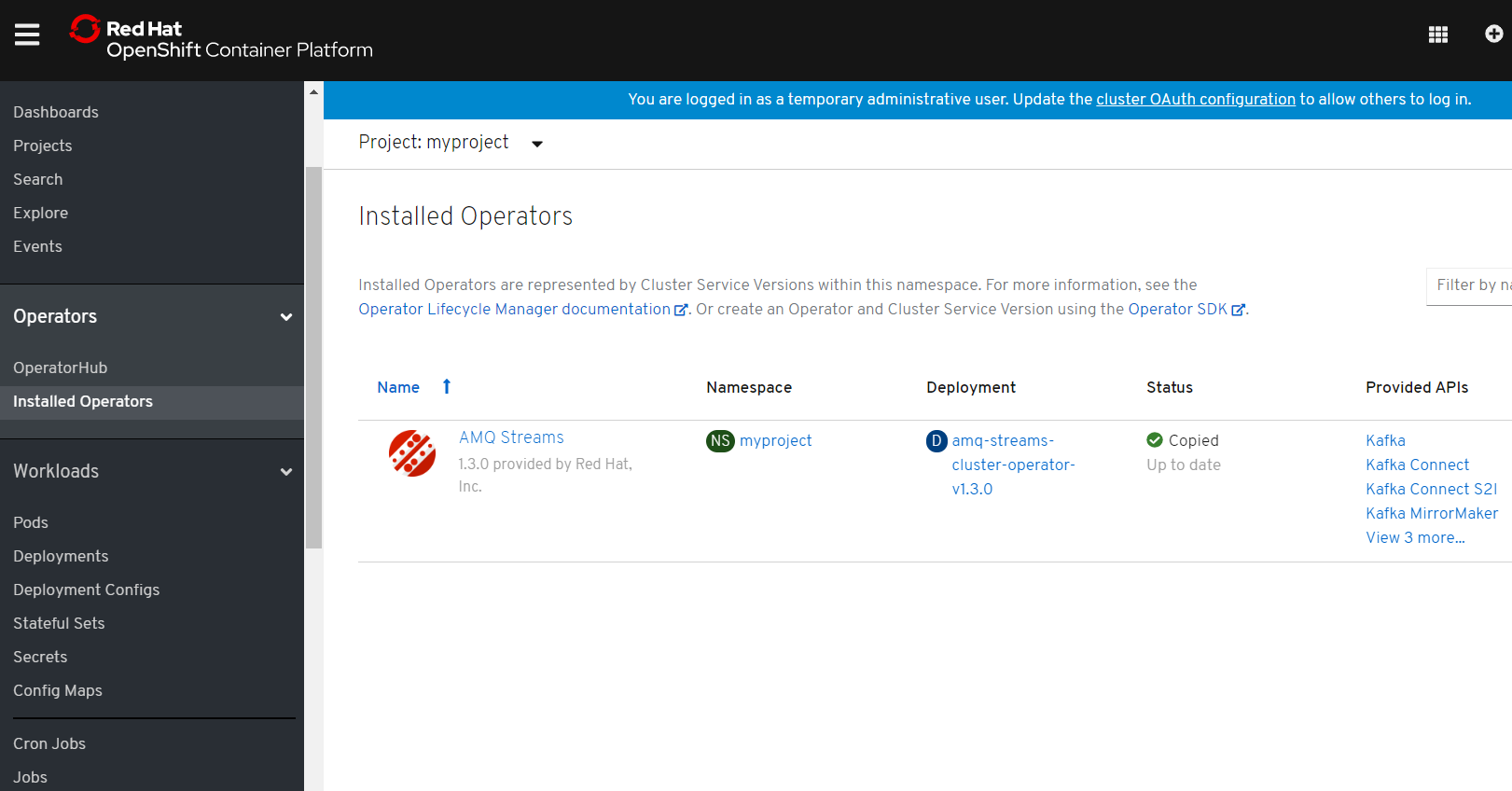

- Switch to the OpenShift web console.

Create a new project and install the Operator into it

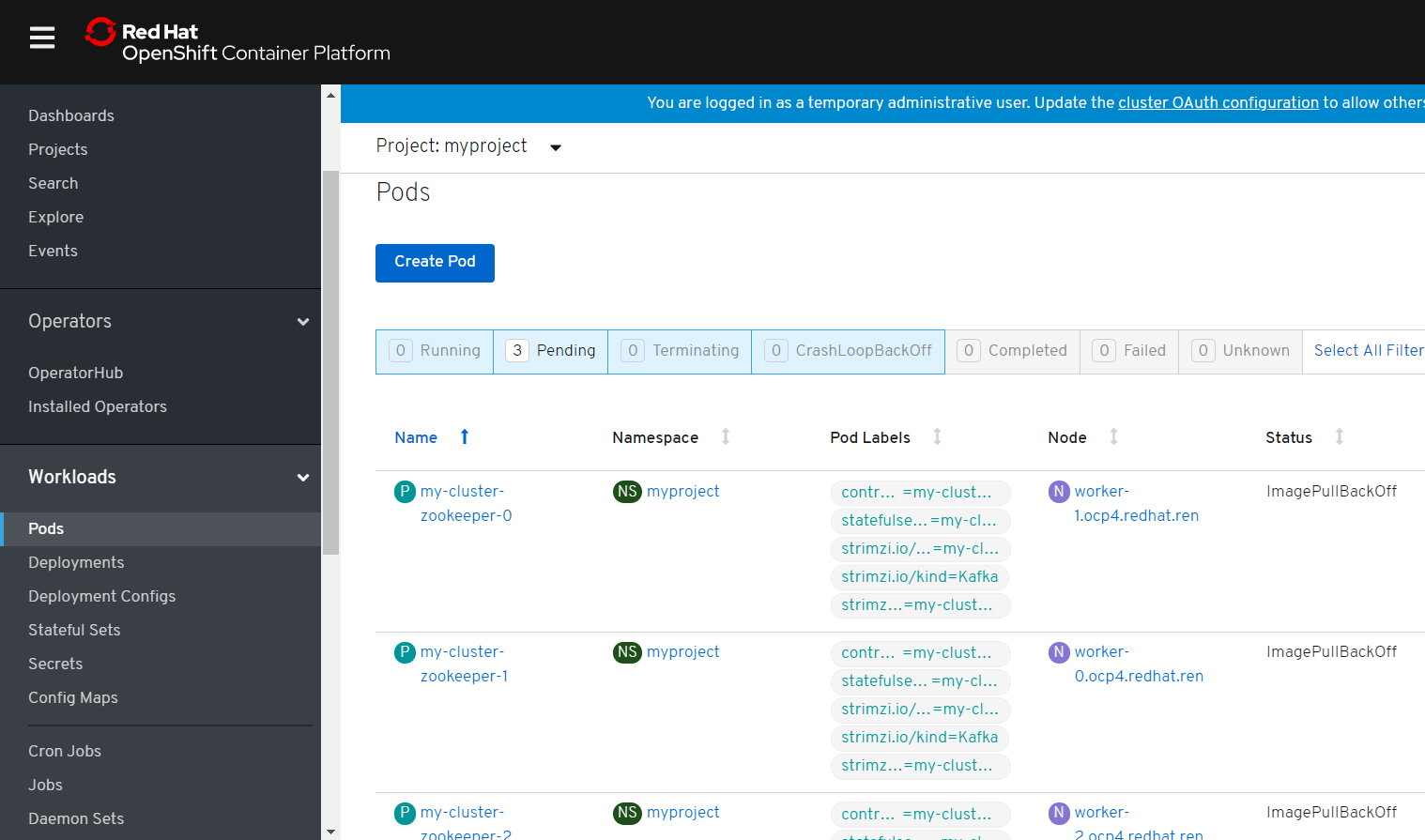

Then create a Kafka cluster and check the Pods. Because I had not mirrored the images, they all ended up in ImagePullBackOff... :-(

All done!
