flume+elasticsearch

Our project's log server runs on flume + elasticsearch.

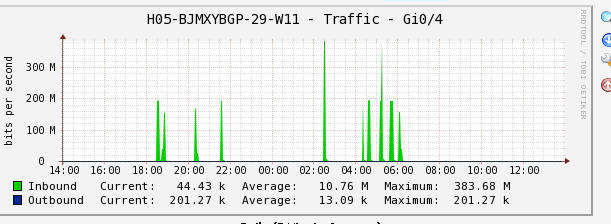

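However, our ops guy told me that the server frequently sends out large bursts of packets, pushing traffic over the quota, and asked whether my program was at fault. I thumped my chest and said: definitely not. He said the server might have been attacked and told me to switch to another one.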

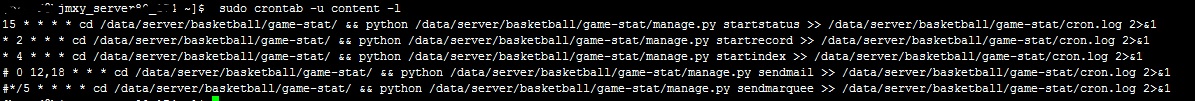

I took a look at the background cron tasks.

It did not look like the cron tasks were the cause.

So I switched to a new server and copied the original configuration over unchanged.

But when starting flume, netty kept failing with "Failed to create a selector." It turned out that the open file limit of the login user was too small, so I quadrupled it:

sudo sh -c "ulimit -n 4096 && exec su $brand"
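To confirm what limit a process actually sees, before and after a change like the one above, here is a minimal sketch using Python's standard `resource` module (Unix only). The helper names `nofile_limit` and `is_sufficient` are made up for illustration, and the 4096 threshold is simply the value from the command above.

```python
import resource

def nofile_limit():
    """Return the (soft, hard) open-file limits of the current process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)

def is_sufficient(soft, required=4096):
    """True if the soft limit allows at least `required` descriptors."""
    # RLIM_INFINITY means "no limit", which always suffices.
    return soft == resource.RLIM_INFINITY or soft >= required

soft, hard = nofile_limit()
print(f"soft={soft} hard={hard} enough={is_sufficient(soft)}")
```

Note that this reports the limit of the Python process itself. The flume process only gets the raised limit if it is started from the shell in which `ulimit -n` was applied.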

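That ran for a few more days. Then the ops guy told me again: the log server frequently sends out large bursts of packets, pushing traffic over the quota.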

This time I read flume.log carefully and found this error repeated many times, mainly for channels c2 and c98:

    24 Mar 2015 22:58:42,781 WARN [New I/O worker #59] (org.apache.flume.source.AvroSource.append:350) - Avro source r2: Unable to process event. Exception follows.
    org.apache.flume.ChannelException: Unable to put event on required channel: org.apache.flume.channel.MemoryChannel{name: c98}
        at org.apache.flume.channel.ChannelProcessor.processEvent(ChannelProcessor.java:275)
        at org.apache.flume.source.AvroSource.append(AvroSource.java:348)
        at sun.reflect.GeneratedMethodAccessor9.invoke(Unknown Source)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.avro.ipc.specific.SpecificResponder.respond(SpecificResponder.java:88)
        at org.apache.avro.ipc.Responder.respond(Responder.java:149)
        at org.apache.avro.ipc.NettyServer$NettyServerAvroHandler.messageReceived(NettyServer.java:188)
        at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)
        at org.apache.avro.ipc.NettyServer$NettyServerAvroHandler.handleUpstream(NettyServer.java:173)
        at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:558)
        at org.jboss.netty.channel.DefaultChannelPipeline$DefaultChannelHandlerContext.sendUpstream(DefaultChannelPipeline.java:786)
        at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:296)
        at org.jboss.netty.handler.codec.frame.FrameDecoder.unfoldAndFireMessageReceived(FrameDecoder.java:458)
        at org.jboss.netty.handler.codec.frame.FrameDecoder.callDecode(FrameDecoder.java:439)
        at org.jboss.netty.handler.codec.frame.FrameDecoder.messageReceived(FrameDecoder.java:303)
        at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)
        at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:558)
        at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:553)
        at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:268)
        at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:255)
        at org.jboss.netty.channel.socket.nio.NioWorker.read(NioWorker.java:84)
        at org.jboss.netty.channel.socket.nio.AbstractNioWorker.processSelectedKeys(AbstractNioWorker.java:471)
        at org.jboss.netty.channel.socket.nio.AbstractNioWorker.run(AbstractNioWorker.java:332)
        at org.jboss.netty.channel.socket.nio.NioWorker.run(NioWorker.java:35)
        at org.jboss.netty.util.ThreadRenamingRunnable.run(ThreadRenamingRunnable.java:102)
        at org.jboss.netty.util.internal.DeadLockProofWorker$1.run(DeadLockProofWorker.java:42)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
        at java.lang.Thread.run(Thread.java:745)
    Caused by: org.apache.flume.ChannelFullException: Space for commit to queue couldn't be acquired. Sinks are likely not keeping up with sources, or the buffer size is too tight
        at org.apache.flume.channel.MemoryChannel$MemoryTransaction.doCommit(MemoryChannel.java:130)
        at org.apache.flume.channel.BasicTransactionSemantics.commit(BasicTransactionSemantics.java:151)
        at org.apache.flume.channel.ChannelProcessor.processEvent(ChannelProcessor.java:267)
        ... 29 more
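To see which channels are affected and how often, the log can be tallied by channel name. This is a rough sketch: it assumes the `Unable to put event on required channel: ...{name: X}` wording shown in the excerpt above, the function name `count_full_channels` is made up, and the `c2` line in the sample is hypothetical (the excerpt only shows `c98`).

```python
import re
from collections import Counter

# Captures the channel name from lines such as:
#   ... Unable to put event on required channel: org.apache.flume.channel.MemoryChannel{name: c98}
CHANNEL_RE = re.compile(
    r"Unable to put event on required channel: \S+\{name: (\w+)\}"
)

def count_full_channels(lines):
    """Count 'unable to put event' errors per channel name."""
    counts = Counter()
    for line in lines:
        match = CHANNEL_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts

prefix = ("org.apache.flume.ChannelException: Unable to put event on "
          "required channel: org.apache.flume.channel.MemoryChannel")
sample = [
    prefix + "{name: c98}",
    prefix + "{name: c2}",   # hypothetical line, for illustration only
    prefix + "{name: c98}",
]
print(count_full_channels(sample).most_common())  # [('c98', 2), ('c2', 1)]
```

For a real log, an open file object can be passed in place of `sample`, since the function only iterates over lines.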

My guess is that the cause is this channel configuration, where capacity is too small:

    agent.channels.c98.type = memory
    # capacity is too small
    agent.channels.c98.capacity = 1000
    agent.channels.c98.transactionCapacity = 100

So I raised the capacity of c2 and c98.
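The exception message says the sinks are likely not keeping up with the sources, or the buffer is too tight. A toy model shows what a larger capacity can and cannot do in the first case. This is not Flume's implementation, only a sketch of a bounded queue; the function name and the rates (100 events in, 90 out per tick) are invented for illustration.

```python
def ticks_until_full(capacity, in_rate, out_rate, max_ticks=100_000):
    """Toy model of a bounded channel.

    Each tick the source tries to add `in_rate` events, then the sink
    removes up to `out_rate`. Returns the tick at which a put is first
    rejected, or None if that never happens within `max_ticks`.
    """
    size = 0
    for tick in range(1, max_ticks + 1):
        if size + in_rate > capacity:
            return tick  # this put would be rejected: channel full
        size += in_rate
        size = max(0, size - out_rate)
    return None

# The sink is slightly slower than the source: the channel still fills,
# a larger capacity only delays it.
print(ticks_until_full(1000, in_rate=100, out_rate=90))   # 92
print(ticks_until_full(10000, in_rate=100, out_rate=90))  # 992

# The sink is faster than the source: the channel never fills.
print(ticks_until_full(1000, in_rate=90, out_rate=100))   # None
```

In this model, a tenfold capacity postpones the first rejection roughly tenfold but does not prevent it. If the sink is persistently slower than the source, the same error would be expected to return eventually.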

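Things work normally again. My guess is that before long the ops guy will come find me again:

The log server frequently sends out large bursts of packets, pushing traffic over the quota.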

A reminder to my dim-witted self >_<~

