学习了对数据的储存,感觉还不够深入,昨天开始对储存数据进行提取、整合和图像化显示。实例还是喜马拉雅Fm,算是对之前数据爬取之后的补充。

明确需要解决的问题

1,蕊希电台全部作品的进行储存 --scrapy爬取:作品id(trackid),作品名称(title),播放量playCount

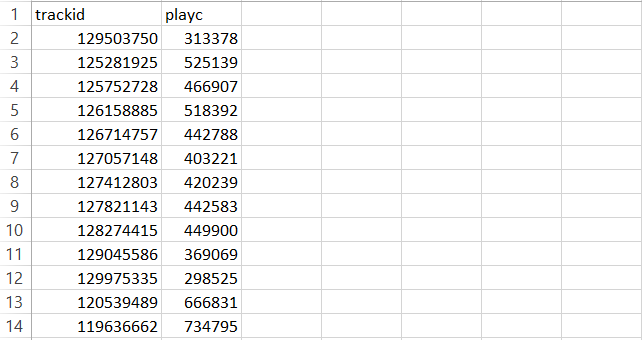

2,储存的数据进行提取,整合 --pandas运用:提取出trackid,playCount;对播放量进行排序,找出最高播放量(palyCount)的作品

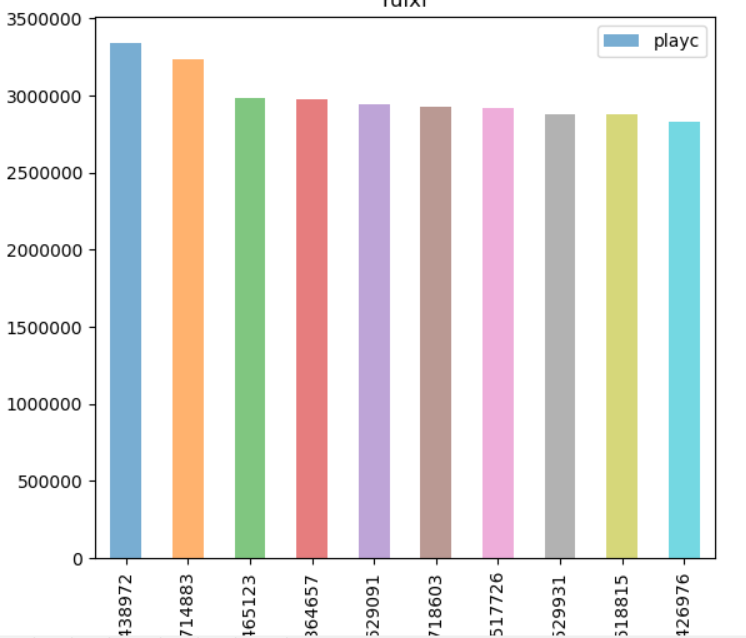

3.整合的数据图像化显示 --matplotlib图像化,清楚的查看哪些作品最受欢迎:trackid作为x轴,播放量(playCount)作为y轴

三、给大家看下成果

3.1_蕊希电台所有作品数(369)

3.2_全部储存到mongoDB数据库

3.3_导出csv文件:mongoexport -d ruixi -c ruixi -f trackid,playc --csv -o Desktop\ruixi.csv

3.4_图像化显示

二、items.py,middlewares.py就不讲了,可以看我之前的博客;重点说一下其他3个文件

2.1_爬虫文件:spiders/ruixi.py

# -*- coding: utf-8 -*- import scrapy from Ruixi.items import RuixiItem import json from Ruixi.settings import USER_AGENT import re class RuixiSpider(scrapy.Spider): name = 'ruixi' allowed_domains = ['www.ximalaya.com'] start_urls = ['https://www.ximalaya.com/revision/track/trackPageInfo?trackId=129503750'] def parse(self, response): ruixi = RuixiItem() #使用json,提取需要文件 ruixi['trackid'] = json.loads(response.body)['data']['trackInfo']['trackId'] ruixi['title'] = json.loads(response.body)['data']['trackInfo']['title'] ruixi['playc'] = json.loads(response.body)['data']['trackInfo']["playCount"] yield ruixi #对当前页面的trackid进行提取,生成新的url,跳转至下一链接,继续提取 for each_item in json.loads(response.body)['data']["moreTracks"]: each_trackid = each_item['trackId'] new_url = 'https://www.ximalaya.com/revision/track/trackPageInfo?trackId=' + str(each_trackid) yield scrapy.Request(new_url,callback=self.parse)

2.2_管道文件配置:pipelines.py

# -*- coding: utf-8 -*- # Define your item pipelines here # # Don't forget to add your pipeline to the ITEM_PIPELINES setting # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html import scrapy import pymongo from scrapy.item import Item from scrapy.exceptions import DropItem import codecs import json from openpyxl import Workbook #储存之前,进行去重处理 class DuplterPipeline(): def __init__(self): self.set = set() def process_item(self,item,spider): name = item['trackid'] if name in self.set(): raise DropItem('Dupelicate the items is%s' % item) self.set.add(name) return item class RuixiPipeline(object): def process_item(self, item, spider): return item #存储到mongodb中 class MongoDBPipeline(object): @classmethod def from_crawler(cls,crawler): cls.DB_URL = crawler.settings.get("MONGO_DB_URL",'mongodb://localhost:27017/') cls.DB_NAME = crawler.settings.get("MONGO_DB_NAME",'scrapy_data') return cls() def open_spider(self,spider): self.client = pymongo.MongoClient(self.DB_URL) self.db = self.client[self.DB_NAME] def close_spider(self,spider): self.client.close() def process_item(self,item,spider): collection = self.db[spider.name] post = dict(item) if isinstance(item,Item) else item collection.insert(post) return item #储存至.Json文件 class JsonPipeline(object): def __init__(self): self.file = codecs.open('data_cn.json', 'wb', encoding='gb2312') def process_item(self, item, spider): line = json.dumps(dict(item)) + '\n' self.file.write(line.decode("unicode_escape")) return item #储存至.xlsx文件 class XlsxPipeline(object): # 设置工序一 def __init__(self): self.wb = Workbook() self.ws = self.wb.active def process_item(self, item, spider): # 工序具体内容 line = [item['trackid'], item['title'], item['playc']] # 把数据中每一项整理出来 self.ws.append(line) # 将数据以行的形式添加到xlsx中 self.wb.save('ruixi.xlsx') # 保存xlsx文件 return item

2.3_设置文件:settings.py

MONGO_DB_URL = 'mongodb://localhost:27017/' MONGO_DB_NAME = 'ruixi' FEED_EXPORT_ENCODING = 'utf-8' USER_AGENT =[ #设置浏览器的User_agent "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1", "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6", "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5", "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24", "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24" ] FEED_EXPORT_FIELDS = ['trackid','title','playc'] ROBOTSTXT_OBEY = False CONCURRENT_REQUESTS = 10 DOWNLOAD_DELAY = 0.5 COOKIES_ENABLED = False

# Crawled (400) <GET https://www.cnblogs.com/eilinge/> (referer: None) DEFAULT_REQUEST_HEADERS =

{

'User-Agent': random.choice(USER_AGENT),

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

'Accept-Language': 'en',

} DOWNLOADER_MIDDLEWARES =

{ 'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware':543, 'Ruixi.middlewares.RuixiSpiderMiddleware': 144, } ITEM_PIPELINES =

{ 'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware':1, 'Ruixi.pipelines.DuplterPipeline': 290, 'Ruixi.pipelines.MongoDBPipeline': 300, 'Ruixi.pipelines.JsonPipeline':301, 'Ruixi.pipelines.XlsxPipeline':302, }

2.4_生成报表

#-*- coding:utf-8 -*- import matplotlib as mpl import numpy as np import pandas as pd import matplotlib.pyplot as plt import pdb df = pd.read_csv("ruixi.csv") df1= df.sort_values(by='playc',ascending=False)

df2 = df1.iloc[:10,:]

df2.plot(kind='bar',x='trackid',y='playc',alpha=0.6)

plt.xlabel("trackId")

plt.ylabel("playc")

plt.title("ruixi")

plt.show()

浙公网安备 33010602011771号

浙公网安备 33010602011771号