Installing the ELK Logging Stack

1. Environment Preparation

Node: 172.26.8.146

JDK: 1.8

There is only one machine, so every component runs in single-node mode. Filebeat collects the logs on the client side.

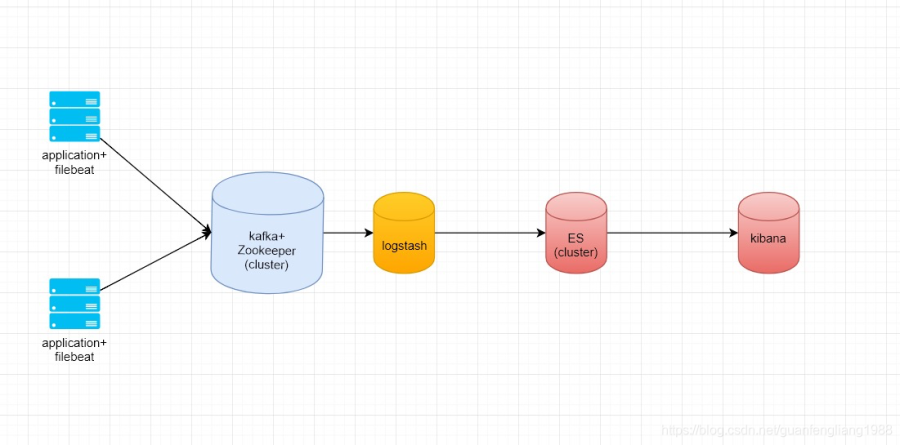

拓扑图:

1.1 Check for a JDK

Check whether a JDK is already installed:

java -version

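Elasticsearch and Logstash require at least Java 8. (The Elasticsearch 7.6.1 tarball also bundles its own JDK, which it uses when JAVA_HOME is not set; Logstash 7.6.1 does not bundle one, so a system JDK is still needed.)

Option 1: install OpenJDK with yum

su -c "yum install java-1.8.0-openjdk"

Option 2: install the Oracle JDK rpm

Download the rpm package from Oracle's official site:

https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

rpm -ivh jdk-8u181-linux-x64.rpm

When installed from the rpm package, no environment variables need to be configured.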
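If you want to script the check above, the quoted version string printed by `java -version` can be reduced to a major version and compared with 8. A minimal sketch; `java_major_version` and `installed_java_version` are hypothetical helpers, not part of any JDK tooling:

```shell
#!/usr/bin/env bash
# Hypothetical helper: reduce a Java version string to its major version,
# e.g. "1.8.0_181" -> 8 (legacy 1.x scheme), "11.0.2" -> 11, "17" -> 17.
java_major_version() {
  ver="$1"
  case "$ver" in
    1.*) ver="${ver#1.}" ;;   # legacy scheme: 1.8.x means Java 8
  esac
  echo "${ver%%[._-]*}"        # keep everything before the first separator
}

# Hypothetical helper: print the quoted version from `java -version`
# (prints nothing if java is not installed).
installed_java_version() {
  java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}'
}

# Usage on the target host:
#   v=$(installed_java_version)
#   if [ -n "$v" ] && [ "$(java_major_version "$v")" -ge 8 ]; then echo "Java OK: $v"; fi
```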

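1.2 Raise system limits

Elasticsearch runs bootstrap checks at startup, and because network.host is bound to a non-loopback address below, a failed check stops the node. Raise the open-file limit:

vi /etc/security/limits.conf

* soft nofile 65536

* hard nofile 131072

Raise the maximum number of memory map areas and apply it immediately:

echo 'vm.max_map_count=262144' >> /etc/sysctl.conf

sysctl -p

Raise the process/thread limit to at least 4096, the minimum Elasticsearch checks for (on CentOS 7 the file is 20-nproc.conf instead):

vi /etc/security/limits.d/90-nproc.conf

* soft nproc 4096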
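The limits.conf values only apply to new login sessions, so it is worth confirming what the es user actually gets. A sketch that compares each value with the minimum from Elasticsearch's bootstrap checks (65535 file descriptors, 4096 threads, vm.max_map_count 262144); `check_min` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare a current limit with a required minimum.
# "unlimited" always passes; returns 1 and prints FAIL otherwise.
check_min() {
  name="$1"; actual="$2"; min="$3"
  if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$min" ]; then
    echo "OK   $name=$actual (>= $min)"
  else
    echo "FAIL $name=$actual (need >= $min)"
    return 1
  fi
}

# Usage, in a fresh login session as the es user (e.g. `su - es`):
#   check_min nofile        "$(ulimit -n)"                  65535
#   check_min nproc         "$(ulimit -u)"                  4096
#   check_min max_map_count "$(sysctl -n vm.max_map_count)" 262144
```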

2. Install Elasticsearch

2.1 Download and extract Elasticsearch

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.6.1-linux-x86_64.tar.gz

useradd es

tar -zxvf elasticsearch-7.6.1-linux-x86_64.tar.gz

mkdir -p /data/es

mv elasticsearch-7.6.1 /data/es/es-elk

chown -R es:es /data/es
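`mv` renames a directory only when the target does not exist yet; if /data/es/es-elk already exists, the files land one level deeper (/data/es/es-elk/elasticsearch-7.6.1) and the paths used below no longer match. A sketch of a layout check; `es_home_ok` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if DIR looks like an extracted Elasticsearch
# home, i.e. bin/elasticsearch and config/elasticsearch.yml sit directly under it.
es_home_ok() {
  dir="$1"
  [ -f "$dir/bin/elasticsearch" ] && [ -f "$dir/config/elasticsearch.yml" ]
}

# Usage:
#   es_home_ok /data/es/es-elk || echo "unexpected layout under /data/es/es-elk" >&2
```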

2.2 Edit the configuration file

vim /data/es/es-elk/config/elasticsearch.yml

cluster.name: elk

node.name: n1

network.host: 0.0.0.0

http.port: 9210

# transport.port replaces the deprecated transport.tcp.port in 7.x

transport.port: 9310

cluster.initial_master_nodes: ["n1"]

# Raise the maximum number of shards per node (default: 1000)

cluster.max_shards_per_node: 10000

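# Disable the disk-usage watermarks (85% low, 90% high, 95% flood stage by default), which otherwise restrict shard allocation and finally make indices read-only as the disk fills up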

cluster.routing.allocation.disk.threshold_enabled: false

http.cors.enabled: true

http.cors.allow-origin: "*"
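Instead of editing the file in vim, the same settings can be written non-interactively, which helps when provisioning the node repeatedly. A sketch assuming the target file may be overwritten (back it up first); `write_es_config` is a hypothetical helper, and it uses `transport.port`, the 7.x name of the transport port setting:

```shell
#!/usr/bin/env bash
# Hypothetical helper: overwrite TARGET with the single-node settings from this guide.
write_es_config() {
  target="$1"
  cat > "$target" <<'EOF'
cluster.name: elk
node.name: n1
network.host: 0.0.0.0
http.port: 9210
transport.port: 9310
cluster.initial_master_nodes: ["n1"]
# Raise the per-node shard limit (default: 1000)
cluster.max_shards_per_node: 10000
# Disable the disk-usage watermarks
cluster.routing.allocation.disk.threshold_enabled: false
http.cors.enabled: true
http.cors.allow-origin: "*"
EOF
}

# Usage (as root, then hand the file back to the es user):
#   cp /data/es/es-elk/config/elasticsearch.yml /data/es/es-elk/config/elasticsearch.yml.bak
#   write_es_config /data/es/es-elk/config/elasticsearch.yml
#   chown es:es /data/es/es-elk/config/elasticsearch.yml
```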

Set the JVM heap size (-Xms and -Xmx should be equal):

vim /data/es/es-elk/config/jvm.options

-Xms4g

-Xmx4g
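The Elasticsearch documentation recommends giving the heap no more than 50% of physical RAM (the rest serves the filesystem cache) and staying below the roughly 32 GB threshold for compressed object pointers; by that rule, 4g suits a host with at least 8 GB of RAM. A sketch of the rule; `es_heap_gb` is a hypothetical helper, and the 31 GB cap is a conservative assumption:

```shell
#!/usr/bin/env bash
# Hypothetical helper: suggest a heap size in GB from total RAM in GB,
# using half of RAM, at least 1 GB, capped at 31 GB (assumed safe below ~32 GB).
es_heap_gb() {
  half=$(( $1 / 2 ))
  if [ "$half" -lt 1 ]; then half=1; fi
  if [ "$half" -gt 31 ]; then half=31; fi
  echo "$half"
}

# Usage: read total RAM from /proc/meminfo (kB) and print the jvm.options lines.
#   total_gb=$(awk '/^MemTotal:/ {print int($2 / 1024 / 1024)}' /proc/meminfo)
#   h=$(es_heap_gb "$total_gb"); printf -- '-Xms%sg\n-Xmx%sg\n' "$h" "$h"
```

Note that MemTotal reports slightly less than the installed RAM, so an 8 GB machine may come out at 3g with this rounding.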

2.3 Start Elasticsearch

Elasticsearch refuses to run as root, so start it as the es user:

sudo -u es /data/es/es-elk/bin/elasticsearch -d

2.4 Verify that Elasticsearch is running

curl -XGET localhost:9210/_cat
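A response from `_cat` shows that the HTTP port answers. To wait until the cluster is usable, poll `_cluster/health` until it reports green or yellow; a single node normally shows yellow once an index has replicas, because replicas cannot be placed on the same node as their primary. A sketch assuming curl is installed and Elasticsearch listens on localhost:9210; `health_status` and `wait_for_es` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "status" value (green/yellow/red) from a
# _cluster/health JSON body, or nothing if the body has no status.
health_status() {
  printf '%s\n' "$1" | sed -n 's/.*"status":"\([a-z]*\)".*/\1/p'
}

# Hypothetical helper: poll every 2 seconds until the cluster is green or
# yellow, giving up after TRIES attempts (default 30, about one minute).
wait_for_es() {
  url="${1:-http://localhost:9210/_cluster/health}"
  tries="${2:-30}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    status=$(health_status "$(curl -s "$url")")
    case "$status" in
      green|yellow) echo "cluster status: $status"; return 0 ;;
    esac
    i=$((i + 1))
    sleep 2
  done
  echo "Elasticsearch not ready at $url" >&2
  return 1
}

# Usage:
#   wait_for_es && curl -s 'localhost:9210/_cat/health?v'
```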

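3. Install Kibana

3.1 Download and install

wget https://artifacts.elastic.co/downloads/kibana/kibana-7.6.1-linux-x86_64.tar.gz

tar xf kibana-7.6.1-linux-x86_64.tar.gz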

mv kibana-7.6.1-linux-x86_64 /usr/local/kibana

3.2 Edit the Kibana configuration

vim /usr/local/kibana/config/kibana.yml

i18n.locale: "zh-CN"

server.port: 8013

server.host: "0.0.0.0"

elasticsearch.hosts: ["http://127.0.0.1:9210"]

logging.dest: /tmp/kibana.log

3.3 Start Kibana

nohup /usr/local/kibana/bin/kibana --allow-root &
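Started with nohup, Kibana gives no feedback on the terminal and can take a while before it listens on its port. A bash-only check using the /dev/tcp redirection; `wait_for_port` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed once HOST:PORT accepts a TCP connection,
# checking once per second for up to TIMEOUT seconds (default 60).
# Relies on bash's /dev/tcp redirection.
wait_for_port() {
  host="$1"; port="$2"; timeout="${3:-60}"
  i=0
  while [ "$i" -lt "$timeout" ]; do
    # Opening /dev/tcp/HOST/PORT in a subshell attempts a TCP connect.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Usage: on failure, look at the log file configured in kibana.yml.
#   wait_for_port 127.0.0.1 8013 120 && echo "Kibana is listening" || tail -n 20 /tmp/kibana.log
```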

4. Install Logstash

4.1 Download and install

wget https://artifacts.elastic.co/downloads/logstash/logstash-7.6.1.tar.gz

tar xf logstash-7.6.1.tar.gz

mv logstash-7.6.1 /usr/local/logstash

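4.2 Edit the configuration

vim /usr/local/logstash/config/logstash.yml

path.config: /usr/local/logstash/conf.d

Create the pipeline directory, which the tarball does not include:

mkdir -p /usr/local/logstash/conf.d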

5. Install Kafka

5.1 Download and install

wget https://archive.apache.org/dist/kafka/3.3.1/kafka_2.13-3.3.1.tgz

tar xf kafka_2.13-3.3.1.tgz

mv kafka_2.13-3.3.1 /usr/local/kafka

5.2 Edit the configuration

vim /usr/local/kafka/config/server.properties

broker.id=0

listeners=PLAINTEXT://172.26.8.146:9092

advertised.listeners=PLAINTEXT://172.26.8.146:9092

log.dirs=/data/kafka/kafka-logs

Create the log directory:

mkdir -p /data/kafka/kafka-logs
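Filebeat and Logstash connect to whatever address the broker advertises, so a wrong IP in `advertised.listeners` only surfaces later as connection errors on the clients. A sketch that reads the value back from server.properties and compares it with the node IP (172.26.8.146 in this guide); `prop_get` and `check_advertised` are hypothetical helpers, and the parser only handles simple key=value lines:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of KEY from a .properties file.
# Only exact "key=value" lines count; commented lines never match the key;
# the last assignment wins.
prop_get() {
  awk -F= -v k="$2" '$1 == k { sub(/^[^=]*=/, ""); v = $0 } END { print v }' "$1"
}

# Hypothetical helper: fail if advertised.listeners does not point at NODE_IP.
check_advertised() {
  adv=$(prop_get "$1" advertised.listeners)
  case "$adv" in
    *"//$2:"*) echo "OK: $adv" ;;
    *) echo "MISMATCH: advertised.listeners=$adv, expected host $2" >&2; return 1 ;;
  esac
}

# Usage:
#   check_advertised /usr/local/kafka/config/server.properties 172.26.8.146
```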

5.3 Start Kafka (ZooKeeper first, then the broker)

/usr/local/kafka/bin/zookeeper-server-start.sh -daemon /usr/local/kafka/config/zookeeper.properties

/usr/local/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties

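6. Install Filebeat

6.1 Download and install

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.6.1-x86_64.rpm

rpm -ivh filebeat-7.6.1-x86_64.rpm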

6.2 Edit the configuration

This example collects /var/log/messages:

vim /etc/filebeat/filebeat.yml

name: 172.16.26.146

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/messages
  tags: ["dev_messages"]
  fields:
    log_topic: dev_messages-log
  # close_inactive and scan_frequency are per-input options
  close_inactive: 5m
  scan_frequency: 30s
  ignore_older: 6h

# Drop fields that are not needed
#fields: [ "beat", "input", "offset", "prospector", "source" ]
processors:
  - drop_fields:
      fields: [ "beat", "input", "offset", "prospector", "source" ]
      #fields: [ "offset", "fields", "agent", "@version","ecs","log","beat", "input", "offset", "prospector", "source" ]

output.kafka:
  hosts: ["172.26.8.146:9092"]
  topic: '%{[fields.log_topic]}'
  worker: 4
  compression: gzip
  max_message_bytes: 10000000
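The Filebeat documentation requires `ignore_older` to be greater than `close_inactive` (6h versus 5m here). When tuning these values, a quick check could look like this; `dur_to_sec` and `check_ignore_older` are hypothetical helpers that only understand a single s, m, or h unit:

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a single-unit duration (30s, 5m, 6h) to seconds.
dur_to_sec() {
  n="${1%[smh]}"
  case "$1" in
    *s) echo "$n" ;;
    *m) echo $(( n * 60 )) ;;
    *h) echo $(( n * 3600 )) ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: succeed if IGNORE_OLDER is strictly greater than CLOSE_INACTIVE.
check_ignore_older() {
  [ "$(dur_to_sec "$1")" -gt "$(dur_to_sec "$2")" ]
}

# Usage:
#   check_ignore_older 6h 5m && echo "ignore_older > close_inactive"
```

Filebeat's own `filebeat test config` and `filebeat test output` subcommands validate the whole file and the Kafka connection.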

6.3 Start Filebeat

systemctl start filebeat

systemctl enable filebeat

7. Logstash pipeline configuration

vim /usr/local/logstash/conf.d/logstash-agent.conf

input {
  kafka {
    bootstrap_servers => "172.26.8.146:9092"
    topics => [ 'dev_messages-log', 'dev_mysql-log', 'dev_nginx-log' ]
    decorate_events => true
    consumer_threads => 2
    codec => json
  }
}

filter {
  mutate {
    remove_field => [ "offset", "fields", "agent", "@version", "ecs", "log", "beat", "input", "prospector", "source" ]
    rename => { "[host][name]" => "host" }
  }
}

output {
  # Events tagged dev_messages by Filebeat go to a daily index
  if "dev_messages" in [tags] {
    elasticsearch {
      hosts => [ "localhost:9210" ]
      index => "dev_messages-%{+YYYY-MM-dd}"
    }
  }
}
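The `%{+YYYY-MM-dd}` suffix is formatted from each event's @timestamp in UTC, so the index rolls over at 00:00 UTC (for example, 08:00 local time on a host set to UTC+8). A sketch that computes the index name for a given moment, assuming GNU date; `daily_index` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print PREFIX-YYYY-MM-DD for EPOCH seconds (default: now),
# using the UTC date, as the Logstash %{+YYYY-MM-dd} pattern does.
daily_index() {
  prefix="$1"
  epoch="${2:-$(date +%s)}"
  echo "${prefix}-$(date -u -d "@$epoch" +%Y-%m-%d)"   # GNU date syntax
}

# Usage: check that today's index exists and is receiving documents.
#   curl -s "localhost:9210/_cat/indices/$(daily_index dev_messages)?v"
```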

Test the configuration syntax:

/usr/local/logstash/bin/logstash -f /usr/local/logstash/conf.d/logstash-agent.conf -t

Start Logstash:

nohup /usr/local/logstash/bin/logstash -f /usr/local/logstash/conf.d/logstash-agent.conf >/dev/null 2>&1 &

8. Log in to Kibana

http://172.26.8.146:8013

浙公网安备 33010602011771号
