ConcurrentHashMap: Principles and Source Code

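The JDK 1.8 implementation drops the Segment concept of JDK 1.7. It is built directly on a Node array plus linked lists and red-black trees, and it uses synchronized and CAS for concurrency control. Overall it looks like an optimized, thread-safe HashMap. A Segment class still exists in JDK 1.8, but its fields have been stripped down and it is kept only for serialization compatibility with older versions.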

    ConcurrentHashMap<String, String> hashMap = new ConcurrentHashMap<>();

Analysis of the put operation: hashMap.put("key", "value");

put method: stores a key and a value

    // put inserts a key and a value into the map
    public V put(K key, V value) {
        return putVal(key, value, false);
    }

    // onlyIfAbsent: if true, only put when the key is absent (never overwrite an existing value)
    final V putVal(K key, V value, boolean onlyIfAbsent) {
        if (key == null || value == null) throw new NullPointerException();
        // Compute the hash
        int hash = spread(key.hashCode());
        int binCount = 0;
        for (Node<K,V>[] tab = table;;) {
            Node<K,V> f; int n, i, fh;
            if (tab == null || (n = tab.length) == 0)
                // Initialize the table (lazily, on the first insert)
                tab = initTable();
            else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {
                // The bin at index i of table (the array) is empty: CAS a new Node directly into it
                if (casTabAt(tab, i, null,
                             new Node<K,V>(hash, key, value, null)))
                    break;                   // no lock when adding to empty bin
            }
            else if ((fh = f.hash) == MOVED)
                // A head hash of MOVED means this bin holds a ForwardingNode: the table is
                // being resized, so this thread helps with the transfer
                tab = helpTransfer(tab, f);
            else {
                V oldVal = null;
                // Lock the head node of this bin
                synchronized (f) {
                    // Re-check that f is still the head node
                    if (tabAt(tab, i) == f) {
                        if (fh >= 0) {
                            // Ordinary linked list
                            binCount = 1;
                            for (Node<K,V> e = f;; ++binCount) {
                                K ek;
                                // The key already exists
                                if (e.hash == hash &&
                                    ((ek = e.key) == key ||
                                     (ek != null && key.equals(ek)))) {
                                    oldVal = e.val;
                                    // On a duplicate key, overwrite the value unless onlyIfAbsent
                                    if (!onlyIfAbsent)
                                        e.val = value;
                                    break;
                                }
                                Node<K,V> pred = e;
                                // Follow e.next to the tail of the list and append a new Node
                                if ((e = e.next) == null) {
                                    pred.next = new Node<K,V>(hash, key,
                                                              value, null);
                                    break;
                                }
                            }
                        }
                        // Red-black tree
                        else if (f instanceof TreeBin) {
                            Node<K,V> p;
                            binCount = 2;
                            // Insert into the red-black tree
                            if ((p = ((TreeBin<K,V>)f).putTreeVal(hash, key,
                                                           value)) != null) {
                                oldVal = p.val;
                                if (!onlyIfAbsent)
                                    p.val = value;
                            }
                        }
                    }
                }
                if (binCount != 0) {
                    if (binCount >= TREEIFY_THRESHOLD)
                        // TREEIFY_THRESHOLD is 8: once the list holds 8 or more nodes,
                        // treeifyBin is called. It converts the list to a red-black tree only
                        // if the table length is at least MIN_TREEIFY_CAPACITY (64);
                        // otherwise it resizes the table instead
                        treeifyBin(tab, i);
                    if (oldVal != null)
                        return oldVal;
                    break;
                }
            }
        }
        // Increment the element count and check whether a resize is needed
        addCount(1L, binCount);
        return null;
    }
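The putVal contract can also be seen from the caller's side. This sketch uses only the public ConcurrentHashMap API:

- put returns the previous value, or null for a new key.
- putIfAbsent goes through putVal with onlyIfAbsent = true, so it never overwrites.
- A null value is rejected by the first check in putVal.

The class name PutDemo is only for illustration.

```java
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical demo class: records what put/putIfAbsent return, mirroring putVal's contract.
final class PutDemo {
    static String run() {
        ConcurrentHashMap<String, String> map = new ConcurrentHashMap<>();
        StringBuilder sb = new StringBuilder();
        sb.append(map.put("key", "value")).append(',');        // new key: returns null
        sb.append(map.put("key", "value2")).append(',');       // duplicate key, overwritten: old value
        sb.append(map.putIfAbsent("key", "value3")).append(','); // onlyIfAbsent: no overwrite
        sb.append(map.get("key")).append(',');                 // still "value2"
        try {
            map.put("nullValue", null);                         // putVal rejects null values
            sb.append("accepted");
        } catch (NullPointerException e) {
            sb.append("NPE");
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

Running main prints `null,value,value2,value2,NPE`.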

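spread method: distributes key hashes evenly across the bins

    // Spread keys evenly across the bins of the map's array
    static final int spread(int h) {
        // HASH_BITS is 0x7fffffff (Integer.MAX_VALUE), i.e. 0111 1111 1111 1111 1111 1111 1111 1111.
        // The XOR folds the high 16 bits into the low 16; the mask clears the sign bit, because
        // negative hashes are reserved for special nodes such as MOVED (-1)
        return (h ^ (h >>> 16)) & HASH_BITS;
    }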
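A quick way to see why spread() matters is to compare bin indexes with and without it. The sketch below copies the JDK 8 spread() formula into a hypothetical SpreadDemo class. In a 16-slot table, hashes that differ only in their high 16 bits all collide in bin 0 unless those bits are folded down.

```java
// Hypothetical demo class; spread() and HASH_BITS are copied from JDK 8 ConcurrentHashMap.
final class SpreadDemo {
    static final int HASH_BITS = 0x7fffffff; // every bit except the sign bit

    // Fold the high 16 bits into the low 16, then clear the sign bit
    static int spread(int h) {
        return (h ^ (h >>> 16)) & HASH_BITS;
    }

    // Bin index for a table of length n (n must be a power of two)
    static int index(int hash, int n) {
        return (n - 1) & hash;
    }

    public static void main(String[] args) {
        int n = 16;
        // These hashes differ only above bit 15, so without spreading they all land in bin 0
        for (int h : new int[] {0x10000, 0x20000, 0x30000}) {
            System.out.println(index(h, n) + " -> " + index(spread(h), n));
        }
        // Negative hash codes become non-negative, leaving negative values for MOVED and friends
        System.out.println(spread(-1) >= 0);
    }
}
```

main prints `0 -> 1`, `0 -> 2`, `0 -> 3` and then `true`.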

initTable method: initializes the map's array

    // Initialize the map's array
    private final Node<K,V>[] initTable() {
        Node<K,V>[] tab; int sc;
        while ((tab = table) == null || tab.length == 0) {
            if ((sc = sizeCtl) < 0)
                // sizeCtl is volatile, so this read sees other threads' writes. A negative value
                // means another thread has already run the CAS in the else-if below,
                // U.compareAndSwapInt(this, SIZECTL, sc, -1), and set sizeCtl to -1;
                // give up the CPU time slice
                Thread.yield();
            else if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {
                try {
                    if ((tab = table) == null || tab.length == 0) {
                        int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                        @SuppressWarnings("unchecked")
                        Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];
                        table = tab = nt;
                        sc = n - (n >>> 2); // with the default n = 16, sc = 12 (0.75 * n)
                    }
                } finally {
                    sizeCtl = sc; // initialization done; sizeCtl now holds the resize threshold
                }
                break;
            }
        }
        return tab;
    }
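The initTable() protocol can be reproduced without Unsafe. It reads sizeCtl, CASes it to -1 to win the right to initialize, re-checks, and then publishes the threshold in finally. The sketch below is a hypothetical LazyTable. It uses java.util.concurrent.atomic.AtomicInteger in place of U.compareAndSwapInt and a plain Object[] in place of Node<K,V>[]. It is meant to show the control flow, not to stand in for the JDK code.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical class: mirrors the initTable() protocol with AtomicInteger instead of Unsafe.
final class LazyTable {
    static final int DEFAULT_CAPACITY = 16;

    private volatile Object[] table;
    // 0 = not initialized (use default), -1 = initializing, > 0 = resize threshold
    final AtomicInteger sizeCtl = new AtomicInteger(0);

    Object[] initTable() {
        Object[] tab;
        while ((tab = table) == null || tab.length == 0) {
            int sc = sizeCtl.get();
            if (sc < 0) {
                Thread.yield();                      // another thread won the CAS; back off
            } else if (sizeCtl.compareAndSet(sc, -1)) {
                try {
                    if ((tab = table) == null || tab.length == 0) { // re-check after winning
                        int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                        tab = new Object[n];
                        table = tab;
                        sc = n - (n >>> 2);         // threshold = 0.75 * n
                    }
                } finally {
                    sizeCtl.set(sc);                // publish the threshold
                }
                break;
            }
        }
        return tab;
    }

    public static void main(String[] args) throws InterruptedException {
        LazyTable t = new LazyTable();
        Object[][] seen = new Object[4][];
        Thread[] threads = new Thread[4];
        for (int k = 0; k < threads.length; k++) {
            final int id = k;
            threads[k] = new Thread(() -> seen[id] = t.initTable());
            threads[k].start();
        }
        for (Thread th : threads) th.join();
        boolean same = true;
        for (Object[] s : seen) same &= (s == seen[0]);
        System.out.println(same + " " + seen[0].length + " " + t.sizeCtl.get());
    }
}
```

Threads that lose the CAS only yield and re-read table, so exactly one thread allocates the array. With the default capacity, main prints `true 16 12`.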

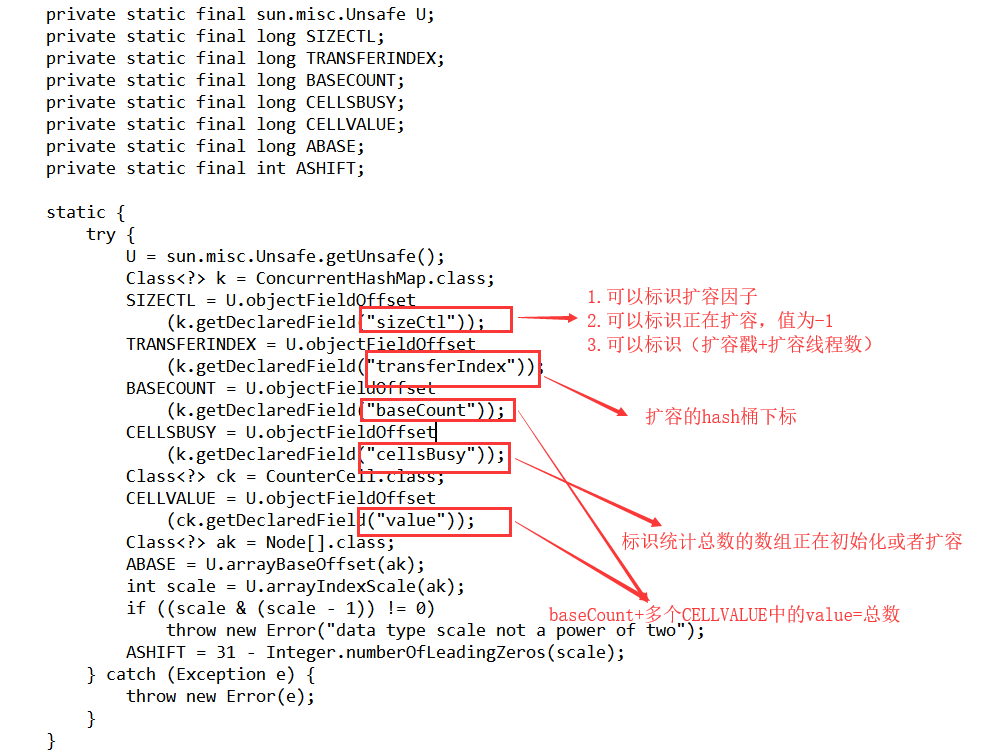

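The U.compareAndSwapInt(this, SIZECTL, sc, -1) call in initTable() is a CAS that operates directly on memory. U is the sun.misc.Unsafe instance provided by the JDK, and SIZECTL is the memory offset of the sizeCtl field. The end of the ConcurrentHashMap source (see the image) consists of similar Unsafe code, which looks up the field offsets these CAS calls use.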
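Application code normally cannot use sun.misc.Unsafe this way. The same idea, a CAS on a volatile int field that is located once up front, is available through the public java.util.concurrent.atomic.AtomicIntegerFieldUpdater. The class and method names below are hypothetical.

```java
import java.util.concurrent.atomic.AtomicIntegerFieldUpdater;

// Hypothetical holder class: a CAS on a volatile int field through a public API, analogous
// to U.compareAndSwapInt(this, SIZECTL, sc, -1) on a precomputed field offset.
final class CasFieldDemo {
    volatile int sizeCtl; // AtomicIntegerFieldUpdater requires a volatile int field

    // Resolved once, much like the static lookup of the SIZECTL offset
    static final AtomicIntegerFieldUpdater<CasFieldDemo> SIZECTL =
            AtomicIntegerFieldUpdater.newUpdater(CasFieldDemo.class, "sizeCtl");

    boolean tryClaim() {
        int sc = sizeCtl;
        // Only one caller can move sizeCtl from a non-negative value to -1
        return sc >= 0 && SIZECTL.compareAndSet(this, sc, -1);
    }

    public static void main(String[] args) {
        CasFieldDemo d = new CasFieldDemo();
        System.out.println(d.tryClaim()); // first claim succeeds: 0 -> -1
        System.out.println(d.tryClaim()); // second claim fails: already -1
    }
}
```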

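addCount method: (1) updates the map's element count; (2) triggers a resize

    // Two parts: first add x to the element count, then check whether to resize
    private final void addCount(long x, int check) {
        CounterCell[] as; long b, s;
        if ((as = counterCells) != null ||
            // Try a single CAS on baseCount
            !U.compareAndSwapLong(this, BASECOUNT, b = baseCount, s = b + x)) {
            CounterCell a; long v; int m;
            boolean uncontended = true; // no contention observed yet
            if (as == null || (m = as.length - 1) < 0 ||
                (a = as[ThreadLocalRandom.getProbe() & m]) == null ||
                // Try a single CAS adding x to this cell's value; if it fails,
                // uncontended becomes false (there is contention)
                !(uncontended =
                  U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))) {
                // Add to the count the slow way (when as == null || (m = as.length - 1) < 0,
                // counterCells may also have to be created)
                fullAddCount(x, uncontended);
                return;
            }
            if (check <= 1)
                return;
            // Compute the total
            s = sumCount();
        }
        // Resize
        if (check >= 0) { // check is binCount; a value >= 0 means the resize check is needed
            Node<K,V>[] tab, nt; int n, sc;
            // The total is not needed for its own sake: the CAS above or fullAddCount has
            // already updated the count. s = sumCount() is called repeatedly only to drive
            // the condition below (the count has reached the threshold in sizeCtl)
            while (s >= (long)(sc = sizeCtl) && (tab = table) != null &&
                   (n = tab.length) < MAXIMUM_CAPACITY) {
                int rs = resizeStamp(n); // 32795 for n = 16; the decimal value means nothing, only its bits matter
                if (sc < 0) { // sc < 0, i.e. sizeCtl < 0: another thread is already resizing
                    // (sc >>> RESIZE_STAMP_SHIFT) != rs: the stamp in the high RESIZE_STAMP_BITS
                    //   bits of sc differs from rs, so this is not the same resize
                    // sc == rs + 1: the resize has finished
                    // sc == rs + MAX_RESIZERS: the number of helper threads has hit the maximum
                    //   (these two comparisons are known to be faulty in JDK 8: they were meant to
                    //   compare against (rs << RESIZE_STAMP_SHIFT) + 1 and + MAX_RESIZERS, and later
                    //   JDK releases fixed them)
                    // (nt = nextTable) == null: the resize has ended
                    // transferIndex <= 0: all transfer work has been claimed; no bins are left to migrate
                    if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||
                        sc == rs + MAX_RESIZERS || (nt = nextTable) == null ||
                        transferIndex <= 0)
                        break;
                    // Join the resize as one more thread
                    if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1))
                        transfer(tab, nt);
                }
                // No resize is running, so this thread starts one. rs is positive, and
                // rs << RESIZE_STAMP_SHIFT makes sc negative; +2 means one thread is resizing.
                // For n = 16: rs = 0000 0000 0000 0000 1000 0000 0001 1011
                // High 16 bits of sc: resize stamp (determined by n);
                // low 16 bits: number of resizing threads + 1
                else if (U.compareAndSwapInt(this, SIZECTL, sc,
                                             (rs << RESIZE_STAMP_SHIFT) + 2))
                    transfer(tab, null);
                s = sumCount();
            }
        }
    }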
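The resize-stamp arithmetic in addCount is easier to follow with concrete numbers. This sketch copies resizeStamp() and the two constants from JDK 8 and adds a hypothetical describe() helper that decodes a sizeCtl value. For n = 16 it reproduces the 32795 and the bit pattern given in the comments above.

```java
// Hypothetical demo class; resizeStamp() and the constants are copied from JDK 8.
final class ResizeStampDemo {
    static final int RESIZE_STAMP_BITS = 16;
    static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;

    // Same formula as ConcurrentHashMap.resizeStamp(int n)
    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));
    }

    // Hypothetical helper: classify a sizeCtl value
    static String describe(int sc) {
        if (sc == -1) return "initializing";
        if (sc < 0) return "resizing: stamp=" + (sc >>> RESIZE_STAMP_SHIFT)
                + ", threads=" + ((sc & 0xFFFF) - 1); // low 16 bits = threads + 1
        return "threshold/capacity=" + sc;
    }

    public static void main(String[] args) {
        int rs = resizeStamp(16);
        System.out.println(rs + " " + Integer.toBinaryString(rs));
        int sc = (rs << RESIZE_STAMP_SHIFT) + 2; // value set by the first resizing thread
        System.out.println(describe(sc));
        System.out.println(describe(sc + 1));    // after one helper joins
        System.out.println(describe(12));
    }
}
```

main prints `32795 1000000000011011`, then `resizing: stamp=32795, threads=1`, `resizing: stamp=32795, threads=2` and `threshold/capacity=12`. Because the stamp depends on n (resizeStamp(32) is 32794), a thread holding an outdated table length can tell it is looking at a different resize.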

fullAddCount method: the slow path that updates the map's count after the fast-path CAS (directly on baseCount, or on a counter cell) fails

    private final void fullAddCount(long x, boolean wasUncontended) {
        int h;
        if ((h = ThreadLocalRandom.getProbe()) == 0) {
            ThreadLocalRandom.localInit();
            h = ThreadLocalRandom.getProbe(); // get this thread's probe (a per-thread random hash)
            wasUncontended = true;
        }
        boolean collide = false; // flag: the last slot tried was non-empty
        for (;;) {
            CounterCell[] as; CounterCell a; int n; long v;
            // counterCells is non-null: it has already been initialized
            // (initialization happens in the "Initialize table" else-if further down)
            if ((as = counterCells) != null && (n = as.length) > 0) {
                // The slot as[(n - 1) & h] is empty
                if ((a = as[(n - 1) & h]) == null) {
                    if (cellsBusy == 0) {
                        CounterCell r = new CounterCell(x);
                        // Set cellsBusy via CAS so no other thread modifies counterCells concurrently
                        if (cellsBusy == 0 &&
                            U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {
                            boolean created = false;
                            try {
                                CounterCell[] rs; int m, j;
                                if ((rs = counterCells) != null &&
                                    (m = rs.length) > 0 &&
                                    rs[j = (m - 1) & h] == null) {
                                    rs[j] = r;
                                    created = true;
                                }
                            } finally {
                                cellsBusy = 0;
                            }
                            if (created)
                                break;
                            continue; // Slot is now non-empty
                        }
                    }
                    collide = false;
                }
                // The CAS in the calling addCount failed, and the probe value is non-zero
                else if (!wasUncontended)  // CAS already known to fail
                    wasUncontended = true; // Continue after rehash
                // Try one CAS adding x to this cell's value
                else if (U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))
                    break;
                else if (counterCells != as || n >= NCPU)
                    collide = false; // At max size or stale
                else if (!collide)
                    // collide tells the thread that the next iteration may consider growing counterCells
                    collide = true;
                // Reaching this branch means the CounterCell array is too small for the contention,
                // so first set cellsBusy to mark that the array is being grown
                else if (cellsBusy == 0 &&
                         U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {
                    try {
                        if (counterCells == as) { // Expand table unless stale
                            // n << 1 doubles the size
                            CounterCell[] rs = new CounterCell[n << 1];
                            for (int i = 0; i < n; ++i)
                                rs[i] = as[i];
                            counterCells = rs;
                        }
                    } finally {
                        cellsBusy = 0;
                    }
                    collide = false;
                    continue; // Retry with expanded table
                }
                h = ThreadLocalRandom.advanceProbe(h);
            }
            else if (cellsBusy == 0 && counterCells == as &&
                     U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {
                // Initialize table: create counterCells
                boolean init = false;
                try {
                    if (counterCells == as) {
                        // Start with 2 cells
                        CounterCell[] rs = new CounterCell[2];
                        rs[h & 1] = new CounterCell(x);
                        counterCells = rs;
                        init = true;
                    }
                } finally {
                    cellsBusy = 0;
                }
                if (init)
                    break;
            }
            // Try one CAS adding x directly to baseCount
            else if (U.compareAndSwapLong(this, BASECOUNT, v = baseCount, v + x))
                break; // Fall back on using base
        }
    }

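The map's own CounterCell[] counterCells array can also grow (doubling each time) when contention between threads is heavy. It stops growing once its length reaches NCPU. cellsBusy acts as a spin-lock flag while counterCells is being created, filled or resized.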
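The baseCount + counterCells scheme is a striped counter. Uncontended updates hit one field, contended updates are spread over several cells, and the total is the sum of all of them. The sketch below is a hypothetical, much simplified StripedCounter. It uses a fixed number of cells, no cellsBusy lock, and a random cell index instead of the per-thread probe that the JDK uses. In application code, java.util.concurrent.atomic.LongAdder implements the same idea.

```java
import java.util.concurrent.ThreadLocalRandom;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicLongArray;

// Hypothetical, simplified striped counter in the spirit of baseCount + counterCells.
// Simplifications: fixed number of cells (no growth, no cellsBusy lock) and a random
// cell index instead of ThreadLocalRandom's per-thread probe.
final class StripedCounter {
    private final AtomicLong baseCount = new AtomicLong();
    private final AtomicLongArray cells;

    StripedCounter(int nCells) {
        cells = new AtomicLongArray(nCells);
    }

    void add(long x) {
        long b = baseCount.get();
        if (baseCount.compareAndSet(b, b + x))
            return;                                  // uncontended: a single CAS on the base
        int i = ThreadLocalRandom.current().nextInt(cells.length());
        cells.getAndAdd(i, x);                       // contended: spread the update over cells
    }

    // Like sumCount(): base plus every cell; only a snapshot while updates are in flight
    long sum() {
        long s = baseCount.get();
        for (int i = 0; i < cells.length(); i++)
            s += cells.get(i);
        return s;
    }

    public static void main(String[] args) throws InterruptedException {
        StripedCounter c = new StripedCounter(4);
        Thread[] ts = new Thread[8];
        for (int k = 0; k < ts.length; k++) {
            ts[k] = new Thread(() -> { for (int j = 0; j < 10_000; j++) c.add(1); });
            ts[k].start();
        }
        for (Thread t : ts) t.join();
        System.out.println(c.sum());
    }
}
```

Every add lands atomically in either the base or one cell, so after all threads are joined main prints exactly `80000`. That matches the reasoning in addCount that the count is already correct before sumCount() is called.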

transfer method: resizing

    // Resize
    private final void transfer(Node<K,V>[] tab, Node<K,V>[] nextTab) {
        int n = tab.length, stride;
        // Take (n >>> 3, i.e. n / 8) and divide it by the number of CPU cores; if the result is
        // below 16, use 16. The goal is to give every CPU the same number of bins so that the
        // migration work stays balanced. For small tables, one CPU (one thread) handles 16 bins
        // by default, so a table of length 16 is resized by a single thread. With one CPU, stride is n
        if ((stride = (NCPU > 1) ? (n >>> 3) / NCPU : n) < MIN_TRANSFER_STRIDE)
            stride = MIN_TRANSFER_STRIDE; // subdivide range
        if (nextTab == null) {            // initiating
            try {
                // Double the length of the map's array
                @SuppressWarnings("unchecked")
                Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];
                nextTab = nt;
            } catch (Throwable ex) {      // try to cope with OOME
                sizeCtl = Integer.MAX_VALUE;
                return;
            }
            nextTable = nextTab;
            transferIndex = n;
        }
        int nextn = nextTab.length;
        // Create a fwd (ForwardingNode) that marks a bin as migrated. Its hash is -1 (MOVED),
        // which is exactly what the MOVED check in putVal looks for. It acts as a placeholder:
        // once the bin at index i of the old array has been migrated, fwd is placed at i to
        // tell other threads that this bin has already been processed
        ForwardingNode<K,V> fwd = new ForwardingNode<K,V>(nextTab);
        boolean advance = true;
        boolean finishing = false; // to ensure sweep before committing nextTab
        for (int i = 0, bound = 0;;) {
            // f is the head node of the current bin, fh is its hash
            Node<K,V> f; int fh;
            // This while loop determines the range of bins the current thread migrates
            while (advance) {
                int nextIndex, nextBound;
                if (--i >= bound || finishing)
                    advance = false;
                // transferIndex: every bin below this index has not been claimed yet
                // (ranges are handed out from the top of the table downwards)
                else if ((nextIndex = transferIndex) <= 0) {
                    i = -1;
                    advance = false;
                }
                else if (U.compareAndSwapInt
                         (this, TRANSFERINDEX, nextIndex,
                          nextBound = (nextIndex > stride ?
                                       nextIndex - stride : 0))) {
                    // Claim the range [bound, nextIndex - 1] for this thread
                    bound = nextBound;
                    i = nextIndex - 1;
                    advance = false;
                }
            }
            if (i < 0 || i >= n || i + n >= nextn) {
                int sc;
                if (finishing) {                    // resize complete
                    nextTable = null;               // reset nextTable to null
                    table = nextTab;                // publish the new array as table
                    sizeCtl = (n << 1) - (n >>> 1); // new threshold: 0.75 * 2n
                    return;
                }
                // sc - 1: one thread has finished its share of the resize
                if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {
                    // sc was initialized to (rs << RESIZE_STAMP_SHIFT) + 2; every helper thread
                    // increments sizeCtl by 1 before calling transfer and decrements it by 1 here
                    if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)
                        return;
                    // (sc - 2) == resizeStamp(n) << RESIZE_STAMP_SHIFT: this is the last
                    // thread, so the resize is finishing
                    finishing = advance = true;
                    i = n; // recheck before commit
                }
            }
            else if ((f = tabAt(tab, i)) == null)
                // Empty bin: CAS fwd into it so other threads know it has been handled
                advance = casTabAt(tab, i, null, fwd);
            else if ((fh = f.hash) == MOVED)
                advance = true; // already processed
            else {
                // Actually migrate this bin
                synchronized (f) {
                    if (tabAt(tab, i) == f) {
                        Node<K,V> ln, hn;
                        if (fh >= 0) {
                            // Linked list: decide whether each node goes to the low or the high bin.
                            // fh & n picks the deciding bit: with n = 16, a node at index 5 can only
                            // end up at index 5 or 21; indexes 0-15 are "low", the rest are "high"
                            int runBit = fh & n;
                            Node<K,V> lastRun = f;
                            // Find lastRun, the start of the trailing run of nodes that all go to
                            // the same side; that run is reused as-is instead of being copied
                            for (Node<K,V> p = f.next; p != null; p = p.next) {
                                int b = p.hash & n;
                                if (b != runBit) {
                                    runBit = b;
                                    lastRun = p;
                                }
                            }
                            if (runBit == 0) {
                                ln = lastRun;
                                hn = null;
                            }
                            else {
                                hn = lastRun;
                                ln = null;
                            }
                            for (Node<K,V> p = f; p != lastRun; p = p.next) {
                                int ph = p.hash; K pk = p.key; V pv = p.val;
                                // Low/high split on ph (the hash): with n = 16, the old index is
                                // ph & 1111, and ph & 10000 (16) tests the fifth bit from the right,
                                // which decides the new index (i if 0, i + n if 1)
                                if ((ph & n) == 0)
                                    ln = new Node<K,V>(ph, pk, pv, ln);
                                else
                                    hn = new Node<K,V>(ph, pk, pv, hn);
                            }
                            setTabAt(nextTab, i, ln);     // low list: same index
                            setTabAt(nextTab, i + n, hn); // high list: index i + n
                            setTabAt(tab, i, fwd);
                            advance = true;
                        }
                        else if (f instanceof TreeBin) {
                            // Red-black tree: split the TreeNodes (walked through their next links)
                            // into a low list and a high list using the same h & n test
                            TreeBin<K,V> t = (TreeBin<K,V>)f;
                            TreeNode<K,V> lo = null, loTail = null;
                            TreeNode<K,V> hi = null, hiTail = null;
                            int lc = 0, hc = 0;
                            for (Node<K,V> e = t.first; e != null; e = e.next) {
                                int h = e.hash;
                                TreeNode<K,V> p = new TreeNode<K,V>
                                    (h, e.key, e.val, null, null);
                                if ((h & n) == 0) {
                                    if ((p.prev = loTail) == null)
                                        lo = p;
                                    else
                                        loTail.next = p;
                                    loTail = p;
                                    ++lc;
                                }
                                else {
                                    if ((p.prev = hiTail) == null)
                                        hi = p;
                                    else
                                        hiTail.next = p;
                                    hiTail = p;
                                    ++hc;
                                }
                            }
                            // For each half: with at most UNTREEIFY_THRESHOLD (6) nodes, turn it back
                            // into a plain linked list; otherwise build a new TreeBin (or reuse t when
                            // the other half is empty)
                            ln = (lc <= UNTREEIFY_THRESHOLD) ? untreeify(lo) :
                                (hc != 0) ? new TreeBin<K,V>(lo) : t;
                            hn = (hc <= UNTREEIFY_THRESHOLD) ? untreeify(hi) :
                                (lc != 0) ? new TreeBin<K,V>(hi) : t;
                            setTabAt(nextTab, i, ln);     // low half: same index
                            setTabAt(nextTab, i + n, hn); // high half: index i + n
                            setTabAt(tab, i, fwd);
                            advance = true;
                        }
                    }
                }
            }
        }
    }
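The low/high split in the linked-list branch of transfer() can be run in isolation. The sketch below copies that loop onto a hypothetical minimal Node class that holds only a hash, a key and a next pointer. It then splits one bin of a 16-slot table.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class; split() copies the lastRun loop from JDK 8 transfer().
final class SplitDemo {
    static final class Node {
        final int hash; final String key; final Node next;
        Node(int hash, String key, Node next) { this.hash = hash; this.key = key; this.next = next; }
    }

    // Splits the chain in bin i of a table of length n into the low list (stays at i)
    // and the high list (moves to i + n)
    static Node[] split(Node f, int n) {
        int runBit = f.hash & n;
        Node lastRun = f;
        for (Node p = f.next; p != null; p = p.next) {
            int b = p.hash & n;
            if (b != runBit) { runBit = b; lastRun = p; }
        }
        Node ln = (runBit == 0) ? lastRun : null;      // the trailing run is reused, not copied
        Node hn = (runBit == 0) ? null : lastRun;
        for (Node p = f; p != lastRun; p = p.next) {   // copy (prepend) the nodes before lastRun
            if ((p.hash & n) == 0) ln = new Node(p.hash, p.key, ln);
            else hn = new Node(p.hash, p.key, hn);
        }
        return new Node[] {ln, hn};
    }

    static List<Integer> hashes(Node head) {
        List<Integer> out = new ArrayList<>();
        for (Node p = head; p != null; p = p.next) out.add(p.hash);
        return out;
    }

    public static void main(String[] args) {
        // All four hashes map to bin 5 when n = 16; bit 16 decides bin 5 or 21 after doubling
        Node chain = new Node(5, "a", new Node(21, "b", new Node(37, "c", new Node(53, "d", null))));
        Node[] parts = split(chain, 16);
        System.out.println("bin 5:  " + hashes(parts[0]));
        System.out.println("bin 21: " + hashes(parts[1]));
    }
}
```

For the chain 5 → 21 → 37 → 53, lastRun is the final node (53), so only the first three nodes are copied. main prints `bin 5:  [37, 5]` and `bin 21: [21, 53]`. Copied nodes are prepended, which is why the low list comes out reversed.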

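Design strengths of ConcurrentHashMap

1. The element count is spread over an array (baseCount plus CounterCell[]), so concurrent inserts rarely contend on a single counter.

2. Concurrent resizing: several threads can migrate data in parallel.

3. The resizeStamp design: the high bits identify a particular resize (unique per table length), and the low bits count the threads resizing in parallel.

4. The low/high list split avoids rehashing: each node's new index is either i or i + n, decided by a single bit, which improves efficiency.

5. The sizeCtl design, which encodes three states:
   - -1 while the table is being initialized;
   - another negative value while a resize is in progress (resize stamp plus thread count);
   - a non-negative value holding the initial capacity before initialization, or the resize threshold afterwards.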
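Point 2 rests on how transfer() hands out work. The sketch below copies the stride formula and the CAS on transferIndex from JDK 8 into a hypothetical, single-threaded StrideDemo. It only records which ranges get claimed and does not move any bins.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical simulation of how transfer() divides the table among threads.
final class StrideDemo {
    static final int MIN_TRANSFER_STRIDE = 16;

    // Same formula as in transfer(): (n / 8) / ncpu, at least 16; n itself on a single CPU
    static int stride(int n, int ncpu) {
        int s = (ncpu > 1) ? (n >>> 3) / ncpu : n;
        return (s < MIN_TRANSFER_STRIDE) ? MIN_TRANSFER_STRIDE : s;
    }

    // Each successful CAS claims [nextBound, nextIndex - 1]; ranges are claimed from high to low
    static List<String> claimAll(int n, int ncpu) {
        AtomicInteger transferIndex = new AtomicInteger(n);
        int stride = stride(n, ncpu);
        List<String> ranges = new ArrayList<>();
        int nextIndex;
        while ((nextIndex = transferIndex.get()) > 0) {
            int nextBound = (nextIndex > stride) ? nextIndex - stride : 0;
            if (transferIndex.compareAndSet(nextIndex, nextBound))
                ranges.add("[" + nextBound + ", " + (nextIndex - 1) + "]");
        }
        return ranges;
    }

    public static void main(String[] args) {
        System.out.println(stride(16, 8));   // small tables fall back to the minimum stride
        System.out.println(stride(1024, 4)); // (1024 / 8) / 4
        System.out.println(claimAll(64, 8));
    }
}
```

main prints `16`, `32` and `[[48, 63], [32, 47], [16, 31], [0, 15]]`. For a 64-slot table on 8 CPUs the stride is the minimum of 16, so up to four threads could each take one range, starting from the top of the table.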

=========================================================================================================================================

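I am just a simple pebble. I never meant to stir up great waves, nor do I wish to drift with the current.

Everyone is small. Work hard at being yourself, don't waste your time, and be true to yourself. Whether or not you reach the goal, once you have chosen to set out, press on bravely.

I can't guarantee that everything here is correct, but all of it has been carefully thought through and weighed again and again, to the best of my ability.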

Zhejiang Public Security Network Filing No. 33010602011771
