【NLP】How to Generate Embeddings?

How to represent words.

0.

Naive representation: one-hot vectors

Dimension: |all words|

(too large, and unable to express semantic similarity)
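A minimal sketch of why one-hot vectors cannot express similarity, assuming a toy three-word vocabulary (the words and the helper names `one_hot` and `dot` are made up for illustration):

```python
# Toy vocabulary; the words are illustrative assumptions, not from the notes above.
vocab = ["cat", "dog", "car"]

def one_hot(word, vocab):
    """Return a list with a single 1 at the word's index in vocab."""
    return [1 if w == word else 0 for w in vocab]

def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

cat, dog = one_hot("cat", vocab), one_hot("dog", vocab)
print(len(cat))       # the dimension equals the vocabulary size
print(dot(cat, dog))  # 0: any two distinct words are orthogonal
print(dot(cat, cat))  # 1
```

Every pair of different words gets an inner product of 0, so "cat" is no closer to "dog" than to "car".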

Idea: produce dense vector representations based on the context/use of words.

There are three main approaches:

1.

Count-based methods

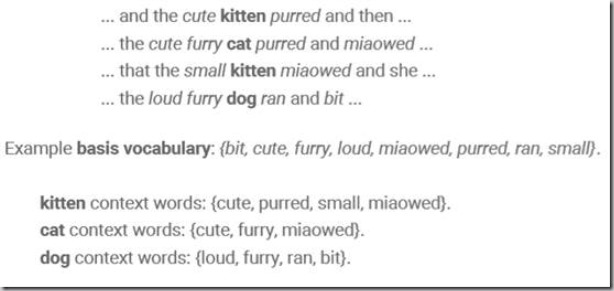

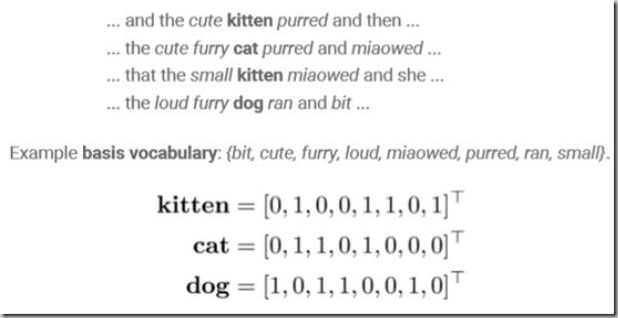

(1) Define a basis vocabulary C of context words, with fewer entries than the full vocabulary (excluding words such as: the, a, of…)

(2) Define a word window size W

(3) Count the basis vocabulary words occurring within W words to the left or right of each instance of a target word in the corpus

(4) Form a vector representation of the target word based on these counts

Example:

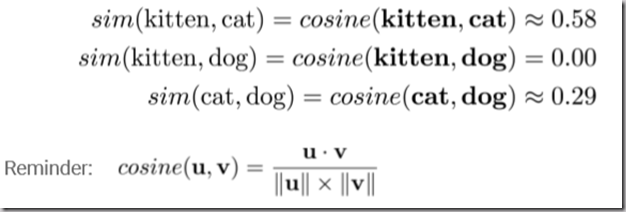

We can calculate the similarity of two words using inner product or cosine.

For instance.
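Steps (1) to (4) and the cosine comparison can be sketched as follows. The corpus sentence, the basis vocabulary, the window size and the function names are all assumptions chosen for illustration:

```python
import math
from collections import Counter

def cooccurrence_vector(target, tokens, basis, window):
    """Count basis words within `window` tokens left or right of each target occurrence."""
    counts = Counter()
    for i, tok in enumerate(tokens):
        if tok != target:
            continue
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i and tokens[j] in basis:
                counts[tokens[j]] += 1
    return [counts[b] for b in basis]

def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return sum(a * b for a, b in zip(u, v)) / norm if norm else 0.0

tokens = "the cat chased the mouse and the dog chased the cat".split()
basis = ["chased", "mouse", "dog"]   # basis vocabulary C, with "the" and "and" left out
W = 3                                # window size

cat_vec = cooccurrence_vector("cat", tokens, basis, W)
dog_vec = cooccurrence_vector("dog", tokens, basis, W)
print(cat_vec, dog_vec)
print(cosine(cat_vec, dog_vec))
```

On a real corpus the counts are usually reweighted (for example with TF-IDF or PMI) before comparison; the raw counts here only keep the sketch short.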

2.

Neural Embedding Models (Main Idea)

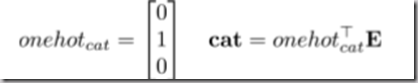

The goal is to generate an embedding matrix E in R^(|all words| × |context words|), which looks like:

Rows are word vectors.

We can retrieve a given word's vector by multiplying its one-hot vector with E.
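A small sketch of that lookup, assuming a made-up 3 × 2 matrix `E` and hypothetical helpers `one_hot` and `vec_mat`. Multiplying a one-hot row vector by E simply selects one row:

```python
# Toy embedding matrix E: one row per vocabulary word; the values are made up.
E = [
    [0.1, 0.3],   # word 0
    [0.7, 0.2],   # word 1
    [0.5, 0.9],   # word 2
]

def one_hot(index, size):
    """One-hot row vector of length `size` with a 1.0 at `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]

def vec_mat(x, M):
    """Row vector x (length n) times matrix M (n x d) gives a length-d vector."""
    return [sum(x[i] * M[i][j] for i in range(len(M))) for j in range(len(M[0]))]

looked_up = vec_mat(one_hot(1, len(E)), E)
print(looked_up == E[1])  # the product selects row 1 of E
```

In practice the multiplication is replaced by direct row indexing, which gives the same result without touching the zero entries.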

One generic idea behind embedding learning:

(1) Collect instances t_i ∈ inst(t) of a word t in the vocabulary V

(2) For each instance, collect its context words c(t_i) (e.g. a k-word window)

(3) Define some score function score(t_i, c(t_i), θ, E) with an upper bound on its output

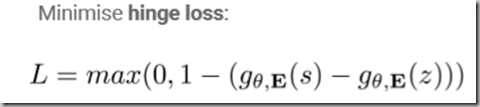

(4) Define a loss

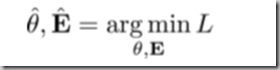

(5) Estimate:

(6) Use the estimated E as the embedding matrix

Note:

The scoring function estimates whether a sentence (or the target word together with its context) is something people would normally say, so the higher the score, the more likely the sentence is.

3.

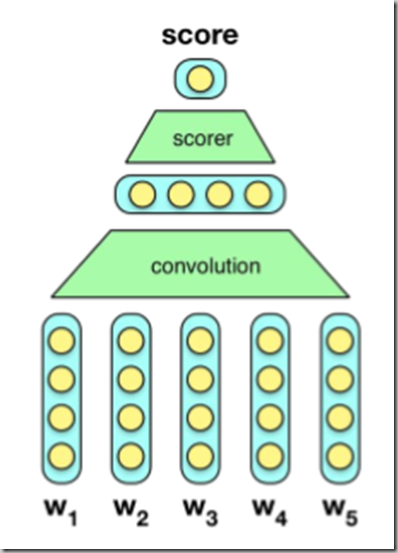

C&W

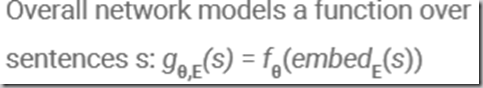

First, we embed all the words in a sentence with E.

Then the sentence (w1, w2, w3, w4, w5) goes through a convolution layer (possibly just a simple connection layer).

Then it goes through a simple MLP.

Then it goes through the 'scorer' layer, which outputs the final score.

Minimize the loss function(!), and use the parameter matrix of the input layer and ..
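A rough forward-pass sketch of this kind of scorer, not a reproduction of the original model. It rests on several assumptions: the "connection" step is taken to be plain concatenation of the five word vectors, the MLP is collapsed to a single tanh layer, the weights are random, and the loss is assumed to be a pairwise hinge loss that compares a true window with a corrupted one (the loss in the figure above is not reproduced here). All names and sizes are hypothetical.

```python
import math
import random

random.seed(0)
vocab = ["the", "cat", "sat", "on", "mat", "banana"]
idx = {w: i for i, w in enumerate(vocab)}
dim, window, hidden = 4, 5, 8   # illustrative sizes

def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

E = rand_matrix(len(vocab), dim)          # embedding matrix, one row per word
W1 = rand_matrix(hidden, window * dim)    # hidden-layer weights (assumed single layer)
w2 = [random.uniform(-0.5, 0.5) for _ in range(hidden)]  # scorer weights

def score(words):
    """Embed the window, concatenate, apply one tanh layer, then a linear scorer."""
    x = [v for w in words for v in E[idx[w]]]
    h = [math.tanh(sum(wij * xj for wij, xj in zip(row, x))) for row in W1]
    return sum(a * b for a, b in zip(w2, h))

def hinge_loss(true_window, corrupt_window):
    """Zero once the true window outscores the corrupted one by a margin of 1."""
    return max(0.0, 1.0 - score(true_window) + score(corrupt_window))

true_window = ["the", "cat", "sat", "on", "mat"]
corrupt_window = ["the", "cat", "banana", "on", "mat"]  # middle word replaced
loss = hinge_loss(true_window, corrupt_window)
print(loss)
```

Training would lower this loss by gradient descent over E, W1 and w2; only the forward computation is shown. Because tanh is bounded, the score is bounded too, which matches the requirement that the score function have an upper bound.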

4. Word2Vec

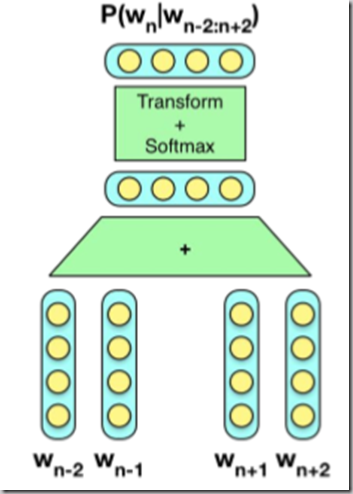

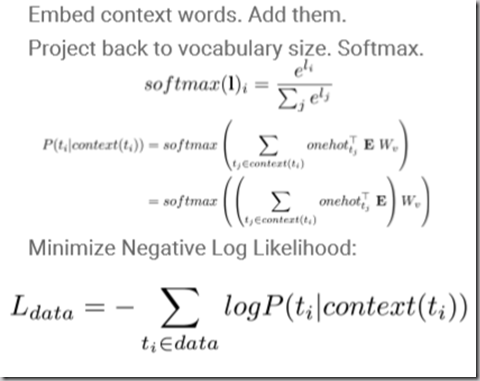

1) CBOW (continuous bag of words)

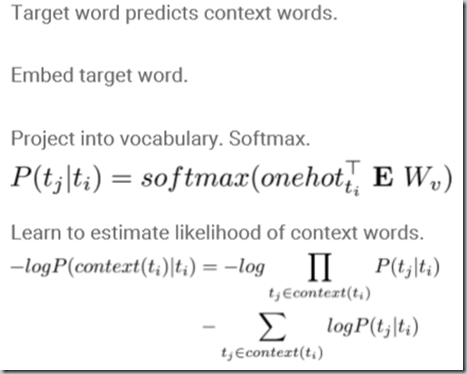
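A minimal forward-pass sketch of the CBOW idea (predict the center word from its surrounding context), assuming a plain softmax over a toy vocabulary with random vectors. Practical word2vec training usually replaces the full softmax with negative sampling or a hierarchical softmax; the names `E_in`, `E_out` and `cbow_probs` are hypothetical.

```python
import math
import random

random.seed(0)
vocab = ["the", "cat", "sat", "on", "mat"]
idx = {w: i for i, w in enumerate(vocab)}
dim = 3   # illustrative embedding size

E_in = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]   # context (input) vectors
E_out = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]  # target (output) vectors

def cbow_probs(context):
    """Average the context vectors, score every vocabulary word, apply softmax."""
    h = [sum(E_in[idx[w]][d] for w in context) / len(context) for d in range(dim)]
    scores = [sum(a * b for a, b in zip(h, row)) for row in E_out]
    m = max(scores)                           # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

probs = cbow_probs(["the", "cat", "on", "mat"])   # context around the target "sat"
loss = -math.log(probs[idx["sat"]])               # negative log-likelihood of the true target
print(probs, loss)
```

Averaging discards word order within the window, which is where the "bag of words" in the name comes from.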

2) Skip-gram:
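Skip-gram reverses the direction of CBOW: each center word is used to predict the words around it. A small sketch of how the (center, context) training pairs could be extracted, with a hypothetical helper `skipgram_pairs` and a toy three-word sentence:

```python
def skipgram_pairs(tokens, window):
    """Return (center, context) pairs for every token and each neighbour within `window`."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

pairs = skipgram_pairs(["the", "cat", "sat"], window=1)
print(pairs)
# [('the', 'cat'), ('cat', 'the'), ('cat', 'sat'), ('sat', 'cat')]
```

Each pair becomes one training example in which the model scores the context word given the center word.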
