NGINX in a Microservice Architecture

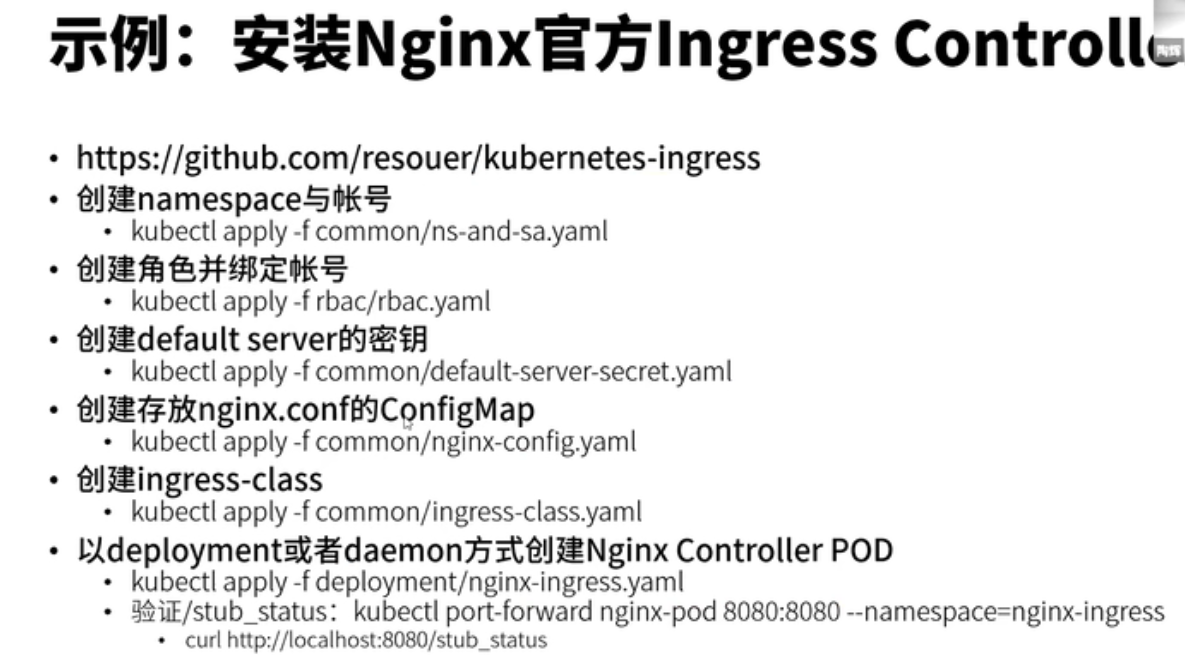

    root@ubuntu:~/kubernetes-ingress/deployments/common# ls
    crds  default-server-secret.yaml  ingress-class.yaml  nginx-config.yaml  ns-and-sa.yaml
    root@ubuntu:~/kubernetes-ingress/deployments/common# kubectl apply -f ns-and-sa.yaml
    namespace/nginx-ingress created
    serviceaccount/nginx-ingress created
    root@ubuntu:~/kubernetes-ingress/deployments/common# cd ..
    root@ubuntu:~/kubernetes-ingress/deployments# kubectl apply -f rbac/rbac.yaml
    clusterrole.rbac.authorization.k8s.io/nginx-ingress created
    clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress created
    root@ubuntu:~/kubernetes-ingress/deployments# kubectl apply -f common/default-server-secret.yaml
    secret/default-server-secret created
    root@ubuntu:~/kubernetes-ingress/deployments# kubectl apply -f common/nginx-config.yaml
    configmap/nginx-config created
    root@ubuntu:~/kubernetes-ingress/deployments# kubectl apply -f common/ingress-class.yaml
    error: unable to recognize "common/ingress-class.yaml": no matches for kind "IngressClass" in version "networking.k8s.io/v1"

    root@ubuntu:~/kubernetes-ingress/deployments# cat common/ingress-class.yaml
    apiVersion: networking.k8s.io/v1
    kind: IngressClass
    metadata:
      name: nginx
    #  annotations:
    #    ingressclass.kubernetes.io/is-default-class: "true"
    spec:
      controller: nginx.org/ingress-controller

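Change the apiVersion to networking.k8s.io/v1beta1. This API server rejects IngressClass in networking.k8s.io/v1 but accepts v1beta1, which points to Kubernetes 1.18: IngressClass first appeared in 1.18 as v1beta1, and the networking.k8s.io/v1 Ingress and IngressClass APIs arrived in 1.19.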
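A rough rule of thumb for which apiVersion an API server of a given minor version accepts, sketched in Python. The cut-offs are from memory of the Kubernetes deprecation guide: IngressClass was added as v1beta1 in 1.18, and Ingress and IngressClass went GA as networking.k8s.io/v1 in 1.19. Confirm them with kubectl version and kubectl api-versions on your own cluster. The function names are hypothetical helpers, not part of any tool.

```python
from typing import Optional


def ingress_api_version(minor: int) -> str:
    """apiVersion for an Ingress on a Kubernetes 1.<minor> API server (rule-of-thumb sketch)."""
    if minor >= 19:
        return "networking.k8s.io/v1"       # GA in 1.19
    if minor >= 14:
        return "networking.k8s.io/v1beta1"  # what the 1.18-era cluster in these notes needs
    return "extensions/v1beta1"


def ingressclass_api_version(minor: int) -> Optional[str]:
    """apiVersion for an IngressClass, or None when the kind does not exist yet."""
    if minor >= 19:
        return "networking.k8s.io/v1"
    if minor == 18:
        return "networking.k8s.io/v1beta1"  # IngressClass first appeared in 1.18
    return None


# The errors above (v1 rejected, v1beta1 IngressClass accepted) fit minor == 18.
print(ingress_api_version(18), ingressclass_api_version(18))
```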

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl apply -f common/ingress-class.yaml
    ingressclass.networking.k8s.io/nginx created
    root@ubuntu:~/kubernetes-ingress/deployments# cat common/ingress-class.yaml
    apiVersion: networking.k8s.io/v1beta1
    kind: IngressClass
    metadata:
      name: nginx
    #  annotations:
    #    ingressclass.kubernetes.io/is-default-class: "true"
    spec:
      controller: nginx.org/ingress-controller

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl create -f deployment/nginx-ingress.yaml
    deployment.apps/nginx-ingress created
    root@ubuntu:~/kubernetes-ingress/deployments# kubectl get svc -A
    NAMESPACE       NAME                                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
    default         apache-svc                           ClusterIP   10.111.63.105    <none>        80/TCP                       20d
    default         coffee-svc                           ClusterIP   10.109.121.61    <none>        80/TCP                       8m12s
    default         kubernetes                           ClusterIP   10.96.0.1        <none>        443/TCP                      55d
    default         nginx-svc                            ClusterIP   10.103.182.145   <none>        80/TCP                       20d
    default         tea-svc                              ClusterIP   10.105.105.208   <none>        80/TCP                       8m12s
    default         web2                                 ClusterIP   10.99.87.66      <none>        8097/TCP                     20d
    default         web3                                 ClusterIP   10.107.70.171    <none>        8097/TCP                     20d
    ingress-nginx   ingress-nginx-controller             NodePort    10.105.207.185   <none>        80:31679/TCP,443:32432/TCP   15h
    ingress-nginx   ingress-nginx-controller-admission   ClusterIP   10.101.64.30     <none>        443/TCP                      15h
    kube-system     kube-dns                             ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP,9153/TCP       55d
    ns-calico1      nodeport-svc                         NodePort    10.109.58.6      <none>        3000:30090/TCP               33d

    root@ubuntu:~/kubernetes-ingress# kubectl get svc -o wide -A
    NAMESPACE     NAME           TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE   SELECTOR
    default       apache-svc     ClusterIP   10.111.63.105    <none>        80/TCP                   20d   app=apache-app
    default       coffee-svc     ClusterIP   10.109.121.61    <none>        80/TCP                   60m   app=coffee
    default       kubernetes     ClusterIP   10.96.0.1        <none>        443/TCP                  55d   <none>
    default       nginx-svc      ClusterIP   10.103.182.145   <none>        80/TCP                   20d   app=nginx-app
    default       tea-svc        ClusterIP   10.105.105.208   <none>        80/TCP                   60m   app=tea
    default       web2           ClusterIP   10.99.87.66      <none>        8097/TCP                 20d   run=web2
    default       web3           ClusterIP   10.107.70.171    <none>        8097/TCP                 20d   run=web3
    kube-system   kube-dns       ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP,9153/TCP   55d   k8s-app=kube-dns
    ns-calico1    nodeport-svc   NodePort    10.109.58.6      <none>        3000:30090/TCP           34d   app=calico1-nginx
    root@ubuntu:~/kubernetes-ingress# kubectl get pod -o wide -n nginx-ingress
    NAME                             READY   STATUS    RESTARTS   AGE     IP             NODE    NOMINATED NODE   READINESS GATES
    nginx-ingress-85d86d7d6d-nwkcx   0/1     Running   0          5m15s   10.244.41.14   cloud   <none>           <none>

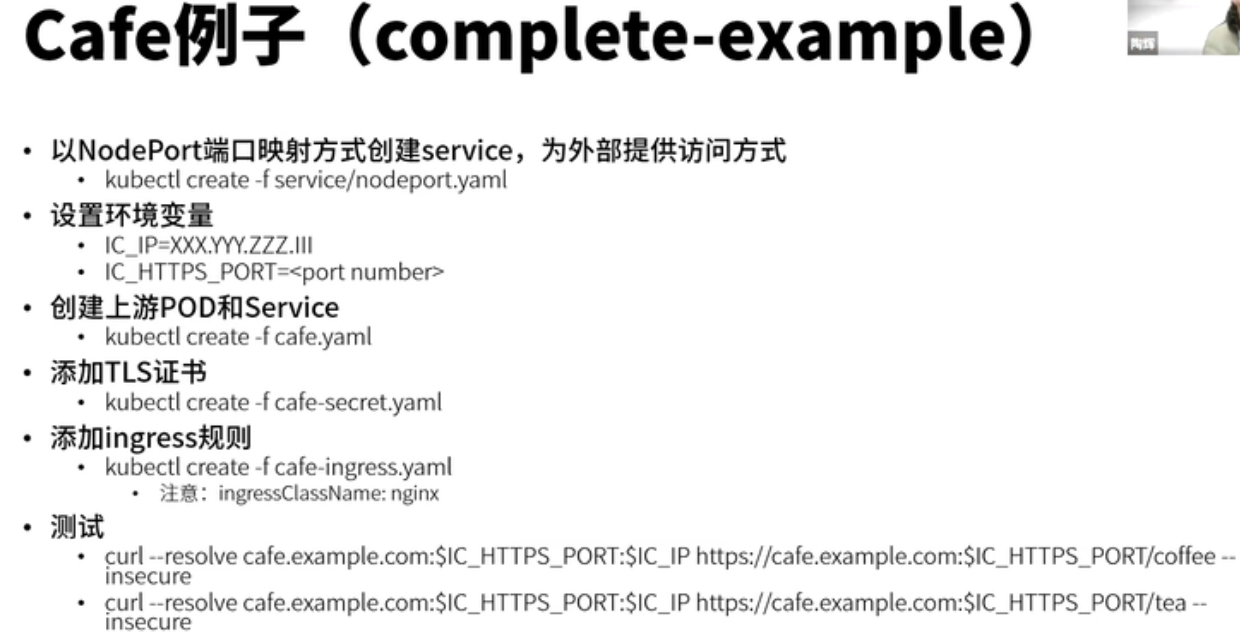

    root@ubuntu:~/kubernetes-ingress# cd examples/complete-example/
    root@ubuntu:~/kubernetes-ingress/examples/complete-example# ls
    cafe-ingress.yaml  cafe-secret.yaml  cafe.yaml  dashboard.png  README.md
    root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl create -f cafe.yaml
    deployment.apps/coffee created
    service/coffee-svc created
    deployment.apps/tea created
    service/tea-svc created
    root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl create -f cafe-secret.yaml
    secret/cafe-secret created
    root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl create -f cafe-ingress.yaml
    error: unable to recognize "cafe-ingress.yaml": no matches for kind "Ingress" in version "networking.k8s.io/v1"
    root@ubuntu:~/kubernetes-ingress/examples/complete-example# vi cafe-ingress.yaml

Changing only the apiVersion to networking.k8s.io/v1beta1 is not enough. The backends still use the v1 "service" field, which v1beta1 does not know:

    root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl create -f cafe-ingress.yaml
    error: error validating "cafe-ingress.yaml": error validating data: [ValidationError(Ingress.spec.rules[0].http.paths[0].backend): unknown field "service" in io.k8s.api.networking.v1beta1.IngressBackend, ValidationError(Ingress.spec.rules[0].http.paths[1].backend): unknown field "service" in io.k8s.api.networking.v1beta1.IngressBackend]; if you choose to ignore these errors, turn validation off with --validate=false

    root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl explain Ingress
    KIND:     Ingress
    VERSION:  extensions/v1beta1

    DESCRIPTION:
         Ingress is a collection of rules that allow inbound connections to reach the endpoints defined by a backend. An Ingress can be configured to give services externally-reachable urls, load balance traffic, terminate SSL, offer name based virtual hosting etc. DEPRECATED - This group version of Ingress is deprecated by networking.k8s.io/v1beta1 Ingress. See the release notes for more information.

    FIELDS:
       apiVersion   <string>
         APIVersion defines the versioned schema of this representation of an object. Servers should convert recognized schemas to the latest internal value, and may reject unrecognized values. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

       kind   <string>
         Kind is a string value representing the REST resource this object represents. Servers may infer this from the endpoint the client submits requests to. Cannot be updated. In CamelCase. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

       metadata   <Object>
         Standard object's metadata. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

       spec   <Object>
         Spec is the desired state of the Ingress. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

       status   <Object>
         Status is the current state of the Ingress. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

Rewrite each backend in the v1beta1 form. Use serviceName/servicePort instead of service.name/service.port.number:

    root@ubuntu:~/kubernetes-ingress/examples/complete-example# cat cafe-ingress.yaml
    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: cafe-ingress
    spec:
      ingressClassName: nginx
      tls:
      - hosts:
        - cafe.example.com
        secretName: cafe-secret
      rules:
      - host: cafe.example.com
        http:
          paths:
          - path: /tea
            pathType: Prefix
            backend:
              serviceName: tea-svc
              servicePort: 80
          - path: /coffee
            pathType: Prefix
            backend:
              serviceName: coffee-svc
              servicePort: 80
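The manual edit above can be sketched as a small converter over a parsed manifest (a plain dict, for example from a YAML loader). It assumes service backends only. Resource backends are not handled, and would raise KeyError. It also assumes v1's rename of spec.backend to spec.defaultBackend. The function names are hypothetical.

```python
import copy


def backend_v1_to_v1beta1(backend: dict) -> dict:
    """Rewrite a networking.k8s.io/v1 IngressBackend into the v1beta1 shape.

    v1:      {"service": {"name": "tea-svc", "port": {"number": 80}}}
    v1beta1: {"serviceName": "tea-svc", "servicePort": 80}
    """
    service = backend["service"]
    port = service["port"]
    # A v1 port is given either by number or by name; v1beta1 servicePort accepts both.
    service_port = port["number"] if "number" in port else port["name"]
    return {"serviceName": service["name"], "servicePort": service_port}


def ingress_v1_to_v1beta1(ingress: dict) -> dict:
    """Return a copy of a v1 Ingress manifest rewritten for networking.k8s.io/v1beta1."""
    out = copy.deepcopy(ingress)
    out["apiVersion"] = "networking.k8s.io/v1beta1"
    spec = out.get("spec", {})
    # v1 calls the catch-all backend defaultBackend; v1beta1 calls it backend.
    if "defaultBackend" in spec:
        spec["backend"] = backend_v1_to_v1beta1(spec.pop("defaultBackend"))
    for rule in spec.get("rules", []):
        for path in rule.get("http", {}).get("paths", []):
            path["backend"] = backend_v1_to_v1beta1(path["backend"])
    return out


# Usage: one path of the original (v1) cafe-ingress.
cafe_v1 = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "cafe-ingress"},
    "spec": {"rules": [{"host": "cafe.example.com", "http": {"paths": [
        {"path": "/tea", "pathType": "Prefix",
         "backend": {"service": {"name": "tea-svc", "port": {"number": 80}}}},
    ]}}]},
}
cafe_v1beta1 = ingress_v1_to_v1beta1(cafe_v1)
print(cafe_v1beta1["spec"]["rules"][0]["http"]["paths"][0]["backend"])
```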

    root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl create -f cafe-ingress.yaml
    ingress.networking.k8s.io/cafe-ingress created

The controller's namespace is nginx-ingress:

    root@ubuntu:~/kubernetes-ingress# kubectl port-forward nginx-ingress-85d86d7d6d-nwkcx 9999:443 -n nginx-ingress
    Forwarding from 127.0.0.1:9999 -> 443
    Forwarding from [::1]:9999 -> 443
    Handling connection for 9999
    Handling connection for 9999
    E0826 12:02:46.764087 2648505 portforward.go:400] an error occurred forwarding 9999 -> 443: error forwarding port 443 to pod 240494b7e3176e2d9db2cb5f8266fd16690542d2f4c05a7c95618ebf1547f48b, uid : exit status 1: 2021/08/26 12:02:46 socat[2270844] E write(5, 0xaaaaf4faeec0, 5): Broken pipe
    Handling connection for 9999

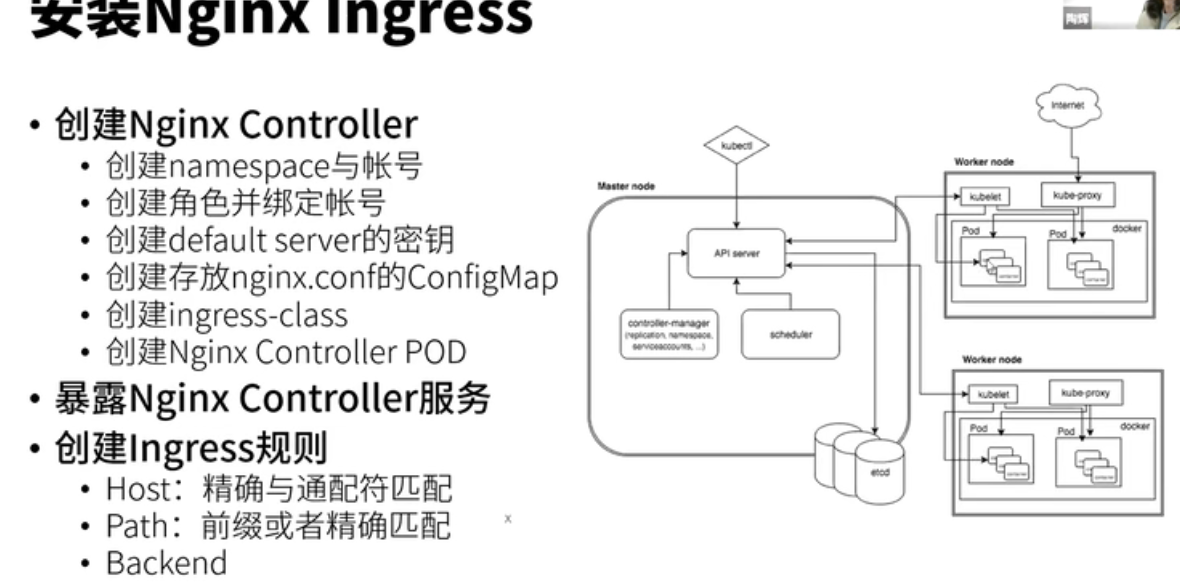

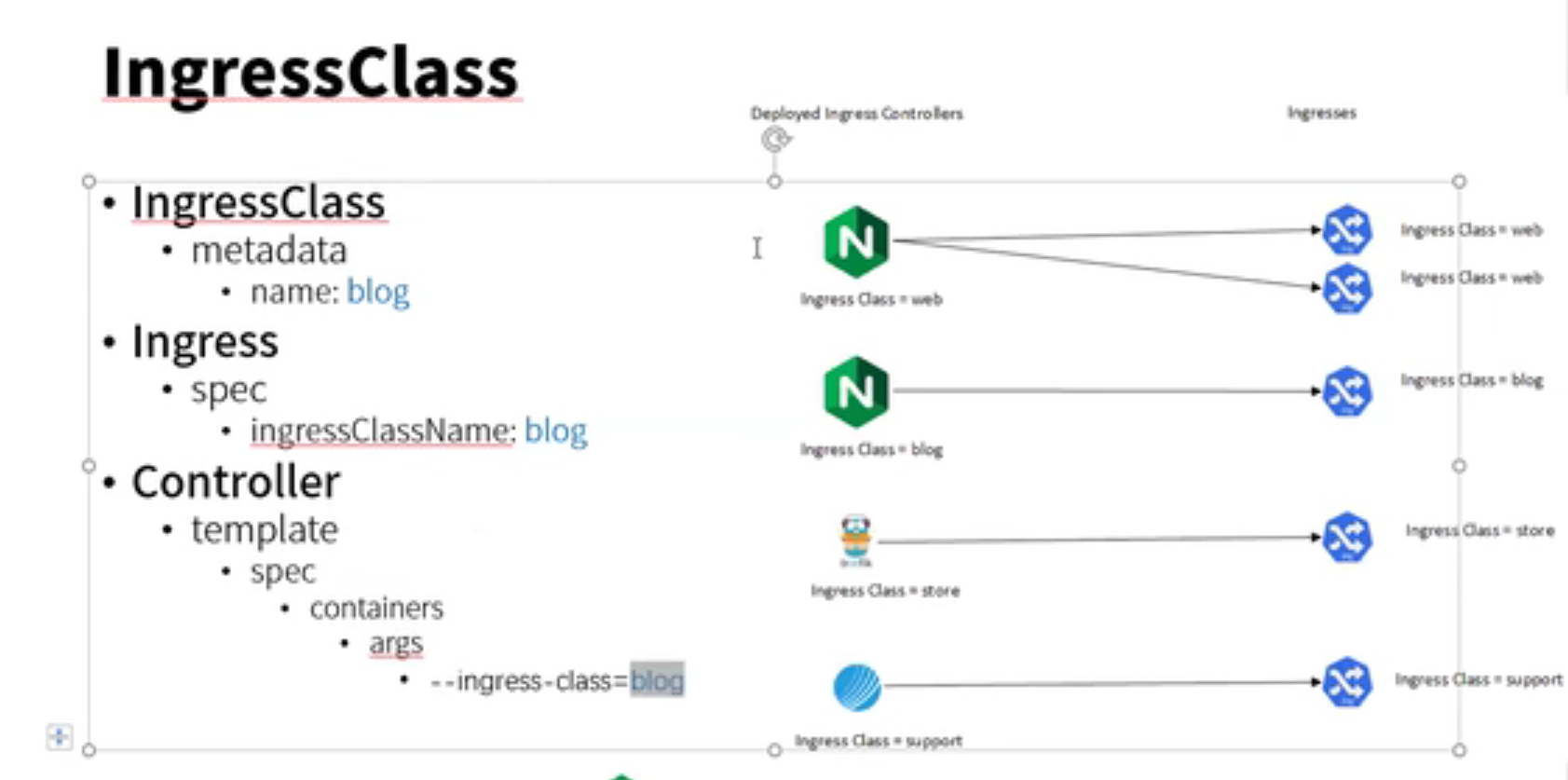

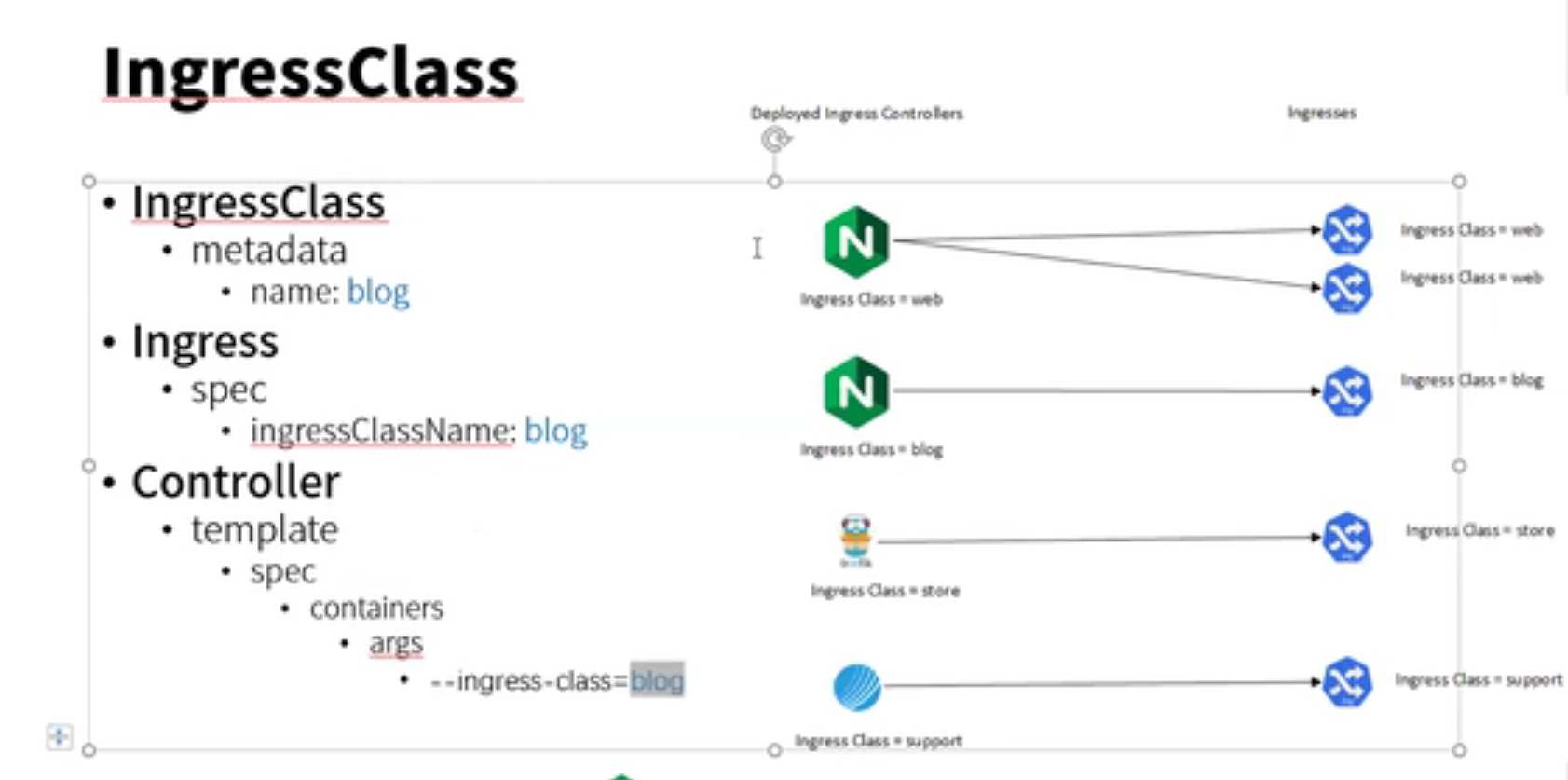

How to deploy two NGINX Ingress controllers side by side (each one needs its own IngressClass name)

    root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl get ingressclass
    NAME    CONTROLLER                     PARAMETERS   AGE
    nginx   nginx.org/ingress-controller   <none>       3h35m

    root@ubuntu:~/kubernetes-ingress/deployments/common# cat ingress-class.yaml
    apiVersion: networking.k8s.io/v1beta1
    kind: IngressClass
    metadata:
      name: nginx
    #  annotations:
    #    ingressclass.kubernetes.io/is-default-class: "true"
    spec:
      controller: nginx.org/ingress-controller

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl get ing -o wide -A
    NAMESPACE   NAME             CLASS    HOSTS                                    ADDRESS          PORTS     AGE
    default     cafe-ingress     nginx    cafe.example.com                                          80, 443   162m
    default     example-ingress  <none>   ubuntu.com                               10.105.207.185   80        20d
    default     micro-ingress    <none>   nginx.mydomain.com,apache.mydomain.com   10.105.207.185   80        20d
    default     web-ingress      <none>   web.mydomain.com                         10.105.207.185   80        20d
    default     web-ingress-lb   <none>   web3.mydomain.com,web2.mydomain.com      10.105.207.185   80        20d

Rename the IngressClass from nginx to nginx-org:

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl create -f common/ingress-class.yaml
    ingressclass.networking.k8s.io/nginx-org created
    root@ubuntu:~/kubernetes-ingress/deployments# kubectl get ingressclass
    NAME        CONTROLLER                     PARAMETERS   AGE
    nginx-org   nginx.org/ingress-controller   <none>       12s
    root@ubuntu:~/kubernetes-ingress/deployments# cat common/ingress-class.yaml
    apiVersion: networking.k8s.io/v1beta1
    kind: IngressClass
    metadata:
      name: nginx-org
    #  annotations:
    #    ingressclass.kubernetes.io/is-default-class: "true"
    spec:
      controller: nginx.org/ingress-controller

Then point cafe-ingress at the new class (spec.ingressClassName: nginx-org):

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl get ing -o wide -A
    NAMESPACE   NAME             CLASS       HOSTS                                    ADDRESS          PORTS     AGE
    default     cafe-ingress     nginx-org   cafe.example.com                                          80, 443   167m
    default     example-ingress  <none>      ubuntu.com                               10.105.207.185   80        20d
    default     micro-ingress    <none>      nginx.mydomain.com,apache.mydomain.com   10.105.207.185   80        20d
    default     web-ingress      <none>      web.mydomain.com                         10.105.207.185   80        20d
    default     web-ingress-lb   <none>      web3.mydomain.com,web2.mydomain.com      10.105.207.185   80        20d
    root@ubuntu:~/kubernetes-ingress/deployments# kubectl edit ing cafe-ingress

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl exec -it nginx-ingress-85d86d7d6d-nwkcx -n nginx-ingress -- /bin/bash
    nginx@nginx-ingress-85d86d7d6d-nwkcx:/$ cd /etc/nginx/conf.d/
    nginx@nginx-ingress-85d86d7d6d-nwkcx:/etc/nginx/conf.d$ ls
    nginx@nginx-ingress-85d86d7d6d-nwkcx:/etc/nginx/conf.d$

Surprisingly, conf.d is empty: no configuration has been generated for any Ingress.

    nginx@nginx-ingress-85d86d7d6d-nwkcx:/$ cat /etc/nginx/nginx.conf | grep include
    include /etc/nginx/mime.types;
    include /etc/nginx/config-version.conf;
    include /etc/nginx/conf.d/*.conf;
    include /etc/nginx/stream-conf.d/*.conf;

    root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl get pod,svc,secret,ingress -n nginx-ingress -o wide
    NAME                                 READY   STATUS    RESTARTS   AGE     IP             NODE    NOMINATED NODE   READINESS GATES
    pod/nginx-ingress-85d86d7d6d-nwkcx   0/1     Running   0          3h16m   10.244.41.14   cloud   <none>           <none>

    NAME                               TYPE                                  DATA   AGE
    secret/default-server-secret       kubernetes.io/tls                     2      4h23m
    secret/default-token-9226g         kubernetes.io/service-account-token   3      4h23m
    secret/nginx-ingress-token-xnkwn   kubernetes.io/service-account-token   3      4h23m
    root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl get pod | grep coffe
    coffee-5f56ff9788-plfcq   1/1   Running   0   3h3m
    coffee-5f56ff9788-zs2f7   1/1   Running   0   3h3m
    root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl get pod | grep tea
    tea-69c99ff568-hdcbl   1/1   Running   0   3h3m
    tea-69c99ff568-p59d6   1/1   Running   0   3h3m
    tea-69c99ff568-tm9q6   1/1   Running   0   3h3m

kubectl logs nginx-ingress-85d86d7d6d-nwkcx -n nginx-ingress

    erverroutes.k8s.nginx.org)
    E0826 07:03:43.188312 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.21.2/tools/cache/reflector.go:167: Failed to watch *v1.Policy: failed to list *v1.Policy: the server could not find the requested resource (get policies.k8s.nginx.org)
    E0826 07:03:48.360767 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.21.2/tools/cache/reflector.go:167: Failed to watch *v1alpha1.TransportServer: failed to list *v1alpha1.TransportServer: the server could not find the requested resource (get transportservers.k8s.nginx.org)
    E0826 07:04:14.214771 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.21.2/tools/cache/reflector.go:167: Failed to watch *v1.Policy: failed to list *v1.Policy: the server could not find the requested resource (get policies.k8s.nginx.org)
    E0826 07:04:17.201091 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.21.2/tools/cache/reflector.go:167: Failed to watch *v1.VirtualServer: failed to list *v1.VirtualServer: the server could not find the requested resource (get virtualservers.k8s.nginx.org)
    E0826 07:04:33.115149 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.21.2/tools/cache/reflector.go:167: Failed to watch *v1.VirtualServerRoute: failed to list *v1.VirtualServerRoute: the server could not find the requested resource (get virtualserverroutes.k8s.nginx.org)
    E0826 07:04:41.914552 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.21.2/tools/cache/reflector.go:167: Failed to watch *v1alpha1.TransportServer: failed to list *v1alpha1.TransportServer: the server could not find the requested resource (get transportservers.k8s.nginx.org)
    E0826 07:04:54.294521 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.21.2/tools/cache/reflector.go:167: Failed to watch *v1.Policy: failed to list *v1.Policy: the server could not find the requested resource (get policies.k8s.nginx.org)
    E0826 07:04:54.660443 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.21.2/tools/cache/reflector.go:167: Failed to watch *v1.VirtualServer: failed to list *v1.VirtualServer: the server could not find the requested resource (get virtualservers.k8s.nginx.org)
    E0826 07:05:25.632495 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.21.2/tools/cache/reflector.go:167: Failed to watch *v1alpha1.TransportServer: failed to list *v1alpha1.TransportServer: the server could not find the requested resource (get transportservers.k8s.nginx.org)
    E0826 07:05:32.079676 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.21.2/tools/cache/reflector.go:167: Failed to watch *v1.VirtualServerRoute: failed to list *v1.VirtualServerRoute: the server could not find the requested resource (get virtual

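The controller keeps trying to watch its custom resources (VirtualServer, VirtualServerRoute, TransportServer, Policy), but their CRDs were never installed. Install them (run from deployments/common, which contains the crds/ directory):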

kubectl apply -f crds/k8s.nginx.org_virtualservers.yaml

kubectl apply -f crds/k8s.nginx.org_virtualserverroutes.yaml

kubectl apply -f crds/k8s.nginx.org_transportservers.yaml

kubectl apply -f crds/k8s.nginx.org_policies.yaml

kubectl apply -f crds/k8s.nginx.org_globalconfigurations.yaml
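The mapping from those log errors to the CRD files can be sketched in Python. Each "(get <plural>.k8s.nginx.org)" error names a resource whose manifest is, going by the file names above, crds/k8s.nginx.org_<plural>.yaml. The regex and the helper are hypothetical, and the file-name pattern is inferred only from the five commands above. GlobalConfiguration does not show up in the captured log excerpt, so it would not be derived from it.

```python
import re
from typing import List

# Matches the tail of the reflector errors above, e.g.
# "the server could not find the requested resource (get policies.k8s.nginx.org)"
_MISSING = re.compile(
    r"could\s+not\s+find\s+the\s+requested\s+resource\s+\(get\s+([a-z]+)\.k8s\.nginx\.org\)"
)


def missing_crd_files(log_text: str) -> List[str]:
    """Map 'resource not found' errors to the crds/k8s.nginx.org_<plural>.yaml files to apply."""
    plurals = sorted(set(_MISSING.findall(log_text)))
    return [f"crds/k8s.nginx.org_{plural}.yaml" for plural in plurals]


sample = (
    "Failed to watch *v1.Policy: failed to list *v1.Policy: the server could not find "
    "the requested resource (get policies.k8s.nginx.org) "
    "E0826 07:04:17.201091 1 reflector.go:138] ... the server could not find the requested "
    "resource (get virtualservers.k8s.nginx.org)"
)
for path in missing_crd_files(sample):
    print(f"kubectl apply -f {path}")
```

To run it on real output, feed it the text of kubectl logs for the controller pod.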

Configuration files are now generated, but there is none for cafe-ingress:

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl exec -it nginx-ingress-85d86d7d6d-nwkcx -n nginx-ingress -- ls /etc/nginx/conf.d/
    default-example-ingress.conf  default-web-ingress-lb.conf  default-micro-ingress.conf  default-web-ingress.conf

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl exec -it nginx-ingress-85d86d7d6d-nwkcx -n nginx-ingress -- cat /etc/nginx/conf.d/default-micro-ingress.conf
    # configuration for default/micro-ingress
    upstream default-micro-ingress-apache.mydomain.com-apache-svc-80 {
        zone default-micro-ingress-apache.mydomain.com-apache-svc-80 256k;
        random two least_conn;
        server 10.244.243.197:80 max_fails=1 fail_timeout=10s max_conns=0;
        server 10.244.41.61:80 max_fails=1 fail_timeout=10s max_conns=0;
    }
    upstream default-micro-ingress-nginx.mydomain.com-nginx-svc-80 {
        zone default-micro-ingress-nginx.mydomain.com-nginx-svc-80 256k;
        random two least_conn;
        server 10.244.243.195:80 max_fails=1 fail_timeout=10s max_conns=0;
        server 10.244.41.58:80 max_fails=1 fail_timeout=10s max_conns=0;
    }
    server {
        listen 80;
        server_tokens on;
        server_name nginx.mydomain.com;
        set $resource_type "ingress";
        set $resource_name "micro-ingress";
        set $resource_namespace "default";
        location / {
            set $service "nginx-svc";
            proxy_http_version 1.1;
            proxy_connect_timeout 60s;
            proxy_read_timeout 60s;
            proxy_send_timeout 60s;
            client_max_body_size 1m;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Host $host;
            proxy_set_header X-Forwarded-Port $server_port;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_buffering on;
            proxy_pass http://default-micro-ingress-nginx.mydomain.com-nginx-svc-80;
        }
    }
    server {
        listen 80;
        server_tokens on;
        server_name apache.mydomain.com;
        set $resource_type "ingress";
        set $resource_name "micro-ingress";
        set $resource_namespace "default";
        location / {
            set $service "apache-svc";
            proxy_http_version 1.1;
            proxy_connect_timeout 60s;
            proxy_read_timeout 60s;
            proxy_send_timeout 60s;
            client_max_body_size 1m;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Host $host;
            proxy_set_header X-Forwarded-Port $server_port;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_buffering on;
            proxy_pass http://default-micro-ingress-apache.mydomain.com-apache-svc-80;
        }
    }

    nginx@nginx-ingress-85d86d7d6d-nwkcx:/$ ./nginx-ingress -h
    Usage of ./nginx-ingress:
      -alsologtostderr
            log to standard error as well as files
      -default-server-tls-secret string
            A Secret with a TLS certificate and key for TLS termination of the default server. Format: <namespace>/<name>. If not set, than the certificate and key in the file "/etc/nginx/secrets/default" are used. If "/etc/nginx/secrets/default" doesn't exist, the Ingress Controller will configure NGINX to reject TLS connections to the default server. If a secret is set, but the Ingress controller is not able to fetch it from Kubernetes API or it is not set and the Ingress Controller fails to read the file "/etc/nginx/secrets/default", the Ingress controller will fail to start.
      -enable-app-protect
            Enable support for NGINX App Protect. Requires -nginx-plus.
      -enable-custom-resources
            Enable custom resources (default true)
      -enable-internal-routes
            Enable support for internal routes with NGINX Service Mesh. Requires -spire-agent-address and -nginx-plus. Is for use with NGINX Service Mesh only.
      -enable-latency-metrics
            Enable collection of latency metrics for upstreams. Requires -enable-prometheus-metrics
      -enable-leader-election
            Enable Leader election to avoid multiple replicas of the controller reporting the status of Ingress, VirtualServer and VirtualServerRoute resources -- only one replica will report status (default true). See -report-ingress-status flag. (default true)
      -enable-preview-policies
            Enable preview policies
      -enable-prometheus-metrics
            Enable exposing NGINX or NGINX Plus metrics in the Prometheus format
      -enable-snippets
            Enable custom NGINX configuration snippets in VirtualServer, VirtualServerRoute and TransportServer resources.
      -enable-tls-passthrough
            Enable TLS Passthrough on port 443. Requires -enable-custom-resources
      -external-service string
            Specifies the name of the service with the type LoadBalancer through which the Ingress controller pods are exposed externally. The external address of the service is used when reporting the status of Ingress, VirtualServer and VirtualServerRoute resources. For Ingress resources only: Requires -report-ingress-status.
      -global-configuration string
            The namespace/name of the GlobalConfiguration resource for global configuration of the Ingress Controller. Requires -enable-custom-resources. Format: <namespace>/<name>
      -health-status
            Add a location based on the value of health-status-uri to the default server. The location responds with the 200 status code for any request. Useful for external health-checking of the Ingress controller
      -health-status-uri string
            Sets the URI of health status location in the default server. Requires -health-status (default "/nginx-health")
      -ingress-class string
            A class of the Ingress controller.

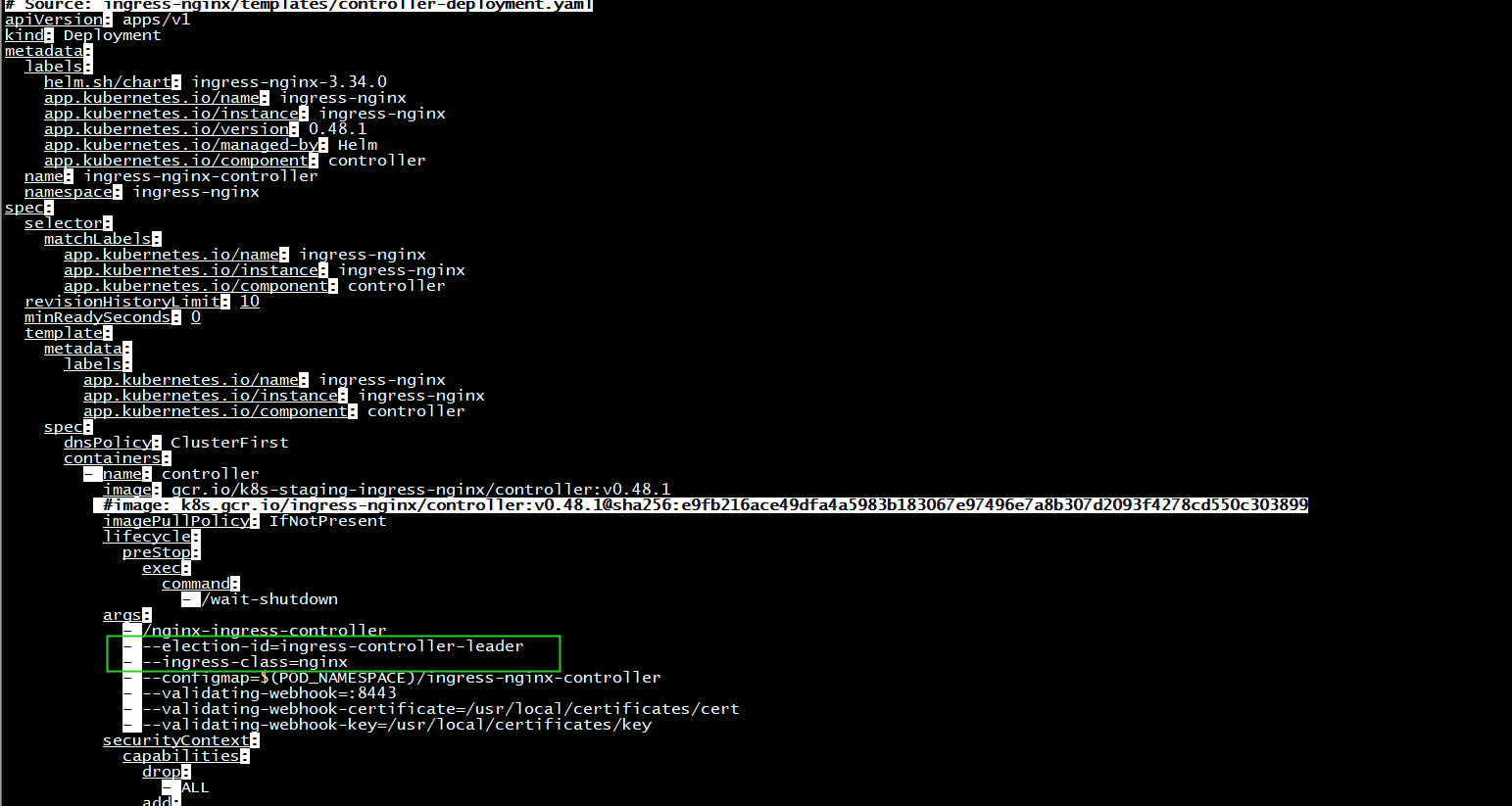

Change the controller's startup arguments by adding -ingress-class=nginx-org:

    args:
      - -nginx-configmaps=$(POD_NAMESPACE)/nginx-config
      - -default-server-tls-secret=$(POD_NAMESPACE)/default-server-secret
      - -ingress-class=nginx-org
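The $(POD_NAMESPACE) references in these args are expanded by Kubernetes from the container's environment. The ps output further below shows the expanded result, nginx-ingress/nginx-config. Here is a minimal Python sketch of that expansion, assuming the documented rules: $(NAME) is replaced when NAME is defined, an undefined reference is left as-is, and $$ escapes a dollar sign. POD_NAMESPACE is presumably set in deployment/nginx-ingress.yaml, e.g. through the Downward API. That file is not shown here, so the value below is an assumption. The function name is hypothetical.

```python
def expand_k8s_vars(arg: str, env: dict) -> str:
    """Expand $(VAR) references the way Kubernetes does for container command/args (sketch).

    - $(NAME) is replaced by env[NAME] when NAME is defined,
    - an undefined $(NAME) is left untouched,
    - $$ collapses to a single $, so $$(NAME) yields the literal text $(NAME).
    """
    out = []
    i = 0
    while i < len(arg):
        if arg[i] == "$" and i + 1 < len(arg):
            nxt = arg[i + 1]
            if nxt == "$":  # escaped dollar sign
                out.append("$")
                i += 2
                continue
            if nxt == "(":
                end = arg.find(")", i + 2)
                if end != -1:  # well-formed $(NAME)
                    name = arg[i + 2:end]
                    out.append(env.get(name, arg[i:end + 1]))
                    i = end + 1
                    continue
        out.append(arg[i])  # ordinary character (or a lone/unclosed "$")
        i += 1
    return "".join(out)


args = [
    "-nginx-configmaps=$(POD_NAMESPACE)/nginx-config",
    "-default-server-tls-secret=$(POD_NAMESPACE)/default-server-secret",
    "-ingress-class=nginx-org",
]
env = {"POD_NAMESPACE": "nginx-ingress"}  # assumed: the namespace the pod runs in
print([expand_k8s_vars(a, env) for a in args])
```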

After the restart (new pod nginx-ingress-6f7d9bdb87-twhxg), the controller renders only cafe-ingress:

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl exec -it nginx-ingress-6f7d9bdb87-twhxg -n nginx-ingress -- ls /etc/nginx/conf.d/
    default-cafe-ingress.conf
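A simplified Python model of the class filtering behind these two results: a controller started with -ingress-class=X renders only the Ingresses whose class is X. This is not the controller's real code, and several parts are assumptions. The older Ingresses are assumed to carry the legacy kubernetes.io/ingress.class: nginx annotation, which the CLASS column (spec.ingressClassName) does not show. The annotation is assumed to take precedence over spec.ingressClassName. Ingresses with no class at all are ignored, although real controllers may treat them differently (for example via a default IngressClass).

```python
from typing import List, Optional

LEGACY_ANNOTATION = "kubernetes.io/ingress.class"


def ingress_class_of(ingress: dict) -> Optional[str]:
    """Class requested by an Ingress: legacy annotation first (assumed precedence), then spec.ingressClassName."""
    annotations = ingress.get("metadata", {}).get("annotations") or {}
    if LEGACY_ANNOTATION in annotations:
        return annotations[LEGACY_ANNOTATION]
    return ingress.get("spec", {}).get("ingressClassName")


def handled_by(controller_class: str, ingresses: List[dict]) -> List[str]:
    """Names of the Ingresses a controller started with -ingress-class=<controller_class> would render."""
    return [
        ing["metadata"]["name"]
        for ing in ingresses
        if ingress_class_of(ing) == controller_class
    ]


ingresses = [
    {"metadata": {"name": "cafe-ingress"}, "spec": {"ingressClassName": "nginx-org"}},
    # Hypothetical: assumes micro-ingress uses the legacy annotation.
    {"metadata": {"name": "micro-ingress", "annotations": {LEGACY_ANNOTATION: "nginx"}}, "spec": {}},
]
print(handled_by("nginx", ingresses))      # default class, before the change
print(handled_by("nginx-org", ingresses))  # after -ingress-class=nginx-org
```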

The controller pod is now Ready (1/1). Requests sent to the pod IP without the cafe.example.com host name fall through to the default server and get 404. With --resolve, curl connects to the pod IP but uses cafe.example.com as the host name, and the request reaches a tea pod:

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl get svc -A
    NAMESPACE     NAME           TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE
    default       apache-svc     ClusterIP   10.111.63.105    <none>        80/TCP                   20d
    default       coffee-svc     ClusterIP   10.101.87.73     <none>        80/TCP                   3h46m
    default       kubernetes     ClusterIP   10.96.0.1        <none>        443/TCP                  55d
    default       nginx-svc      ClusterIP   10.103.182.145   <none>        80/TCP                   20d
    default       tea-svc        ClusterIP   10.103.138.254   <none>        80/TCP                   3h46m
    default       web2           ClusterIP   10.99.87.66      <none>        8097/TCP                 20d
    default       web3           ClusterIP   10.107.70.171    <none>        8097/TCP                 20d
    kube-system   kube-dns       ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP,9153/TCP   55d
    ns-calico1    nodeport-svc   NodePort    10.109.58.6      <none>        3000:30090/TCP           34d
    root@ubuntu:~/kubernetes-ingress/deployments# kubectl get pod -n nginx-ingress -o wide
    NAME                             READY   STATUS    RESTARTS   AGE     IP             NODE    NOMINATED NODE   READINESS GATES
    nginx-ingress-6f7d9bdb87-twhxg   1/1     Running   0          8m29s   10.244.41.21   cloud   <none>           <none>
    root@ubuntu:~/kubernetes-ingress/deployments# curl https://10.244.41.21:443/coffee --insecure
    <html>
    <head><title>404 Not Found</title></head>
    <body>
    <center><h1>404 Not Found</h1></center>
    <hr><center>nginx/1.21.0</center>
    </body>
    </html>
    root@ubuntu:~/kubernetes-ingress/deployments# curl https://10.244.41.21:443/tea --insecure
    <html>
    <head><title>404 Not Found</title></head>
    <body>
    <center><h1>404 Not Found</h1></center>
    <hr><center>nginx/1.21.0</center>
    </body>
    </html>
    root@ubuntu:~/kubernetes-ingress/deployments# curl https://10.244.41.21:443--insecure
    curl: (3) Port number ended with '-'
    root@ubuntu:~/kubernetes-ingress/deployments# curl https://10.244.41.21:443 --insecure
    <html>
    <head><title>404 Not Found</title></head>
    <body>
    <center><h1>404 Not Found</h1></center>
    <hr><center>nginx/1.21.0</center>
    </body>
    </html>
    root@ubuntu:~/kubernetes-ingress/deployments# curl --resolve cafe.example.com:443:10.244.41.21 https://cafe.example.com:443/tea --insecure
    Server address: 10.244.41.15:8080
    Server name: tea-69c99ff568-p59d6
    Date: 26/Aug/2021:07:51:22 +0000
    URI: /tea
    Request ID: 68a8e864e4cc43b7f3896da25312e68c

    root@ubuntu:~/kubernetes-ingress/deployments# curl --resolve cafe.example.com:443:10.244.41.21 https://cafe.example.com:443/coffee --insecure
    Server address: 10.244.243.252:8080
    Server name: coffee-5f56ff9788-plfcq
    Date: 26/Aug/2021:09:19:50 +0000
    URI: /coffee
    Request ID: 60e7be5ff2fd71f2b1ec8452a8705242

The Server address in each response is the IP of the pod that answered. For example, 10.244.243.252 is coffee-5f56ff9788-plfcq:

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl get pod -A -o wide | grep -E 'coffee|tea'
    default   coffee-5f56ff9788-plfcq   1/1   Running   0   5h20m   10.244.243.252   ubuntu   <none>   <none>
    default   coffee-5f56ff9788-zs2f7   1/1   Running   0   5h20m   10.244.41.19     cloud    <none>   <none>
    default   tea-69c99ff568-hdcbl      1/1   Running   0   5h20m   10.244.41.17     cloud    <none>   <none>
    default   tea-69c99ff568-p59d6      1/1   Running   0   5h20m   10.244.41.15     cloud    <none>   <none>
    default   tea-69c99ff568-tm9q6      1/1   Running   0   5h20m   10.244.41.18     cloud    <none>   <none>

--resolve only applies to the curl invocation it is passed to; it does not create a DNS entry:

    root@ubuntu:~/kubernetes-ingress/deployments# curl --resolve cafe.example.com:443:10.244.41.21
    curl: no URL specified!
    curl: try 'curl --help' or 'curl --manual' for more information
    root@ubuntu:~/kubernetes-ingress/deployments# curl https://cafe.example.com:443/coffee --insecure
    curl: (6) Could not resolve host: cafe.example.com

    root@ubuntu:~/kubernetes-ingress/deployments# curl --resolve cafe.example.com:443:10.244.41.21 https://cafe.example.com:443/coffee --insecure -v
    * Added cafe.example.com:443:10.244.41.21 to DNS cache
    * Hostname cafe.example.com was found in DNS cache
    * Trying 10.244.41.21...
    * TCP_NODELAY set
    * Connected to cafe.example.com (10.244.41.21) port 443 (#0)
    * ALPN, offering h2
    * ALPN, offering http/1.1
    * successfully set certificate verify locations:
    * CAfile: /etc/ssl/certs/ca-certificates.crt
      CApath: /etc/ssl/certs
    * TLSv1.3 (OUT), TLS handshake, Client hello (1):
    * TLSv1.3 (IN), TLS handshake, Server hello (2):
    * TLSv1.2 (IN), TLS handshake, Certificate (11):
    * TLSv1.2 (IN), TLS handshake, Server key exchange (12):
    * TLSv1.2 (IN), TLS handshake, Server finished (14):
    * TLSv1.2 (OUT), TLS handshake, Client key exchange (16):
    * TLSv1.2 (OUT), TLS change cipher, Client hello (1):
    * TLSv1.2 (OUT), TLS handshake, Finished (20):
    * TLSv1.2 (IN), TLS handshake, Finished (20):
    * SSL connection using TLSv1.2 / ECDHE-RSA-AES256-GCM-SHA384
    * ALPN, server accepted to use http/1.1
    * Server certificate:
    *  subject: C=US; ST=CA; O=Internet Widgits Pty Ltd; CN=cafe.example.com
    *  start date: Sep 12 16:15:35 2018 GMT
    *  expire date: Sep 11 16:15:35 2023 GMT
    *  issuer: C=US; ST=CA; O=Internet Widgits Pty Ltd; CN=cafe.example.com
    *  SSL certificate verify result: self signed certificate (18), continuing anyway.
    > GET /coffee HTTP/1.1
    > Host: cafe.example.com
    > User-Agent: curl/7.58.0
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < Server: nginx/1.21.0
    < Date: Thu, 26 Aug 2021 09:24:20 GMT
    < Content-Type: text/plain
    < Content-Length: 164
    < Connection: keep-alive
    < Expires: Thu, 26 Aug 2021 09:24:19 GMT
    < Cache-Control: no-cache
    <
    Server address: 10.244.243.252:8080
    Server name: coffee-5f56ff9788-plfcq
    Date: 26/Aug/2021:09:24:20 +0000
    URI: /coffee
    Request ID: e1d17cb44eb89b94e8efe178344f5dd8
    * Connection #0 to host cafe.example.com left intact

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl get pod -A -o wide | grep ingress
    nginx-ingress   nginx-ingress-6f7d9bdb87-twhxg   1/1   Running   0   109m   10.244.41.21   cloud   <none>   <none>

Setting only the Host header also works. Because the URL contains an IP address, curl sends no SNI, so TLS is terminated with the default server's certificate (CN=NGINXIngressController) instead of cafe-secret (CN=cafe.example.com). The HTTP request is still routed by its Host header to coffee:

    root@ubuntu:~/kubernetes-ingress/deployments# curl -H "Host: cafe.example.com" https://10.244.41.21:443/coffee --insecure -v
    * Trying 10.244.41.21...
    * TCP_NODELAY set
    * Connected to 10.244.41.21 (10.244.41.21) port 443 (#0)
    * ALPN, offering h2
    * ALPN, offering http/1.1
    * successfully set certificate verify locations:
    * CAfile: /etc/ssl/certs/ca-certificates.crt
      CApath: /etc/ssl/certs
    * TLSv1.3 (OUT), TLS handshake, Client hello (1):
    * TLSv1.3 (IN), TLS handshake, Server hello (2):
    * TLSv1.2 (IN), TLS handshake, Certificate (11):
    * TLSv1.2 (IN), TLS handshake, Server key exchange (12):
    * TLSv1.2 (IN), TLS handshake, Server finished (14):
    * TLSv1.2 (OUT), TLS handshake, Client key exchange (16):
    * TLSv1.2 (OUT), TLS change cipher, Client hello (1):
    * TLSv1.2 (OUT), TLS handshake, Finished (20):
    * TLSv1.2 (IN), TLS handshake, Finished (20):
    * SSL connection using TLSv1.2 / ECDHE-RSA-AES256-GCM-SHA384
    * ALPN, server accepted to use http/1.1
    * Server certificate:
    *  subject: CN=NGINXIngressController
    *  start date: Sep 12 18:03:35 2018 GMT
    *  expire date: Sep 11 18:03:35 2023 GMT
    *  issuer: CN=NGINXIngressController
    *  SSL certificate verify result: self signed certificate (18), continuing anyway.
    > GET /coffee HTTP/1.1
    > Host: cafe.example.com
    > User-Agent: curl/7.58.0
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < Server: nginx/1.21.0
    < Date: Thu, 26 Aug 2021 09:26:59 GMT
    < Content-Type: text/plain
    < Content-Length: 164
    < Connection: keep-alive
    < Expires: Thu, 26 Aug 2021 09:26:58 GMT
    < Cache-Control: no-cache
    <
    Server address: 10.244.243.252:8080
    Server name: coffee-5f56ff9788-plfcq
    Date: 26/Aug/2021:09:26:59 +0000
    URI: /coffee
    Request ID: f7b3794d0f1a25443bb4f8d6526dadfd
    * Connection #0 to host 10.244.41.21 left intact

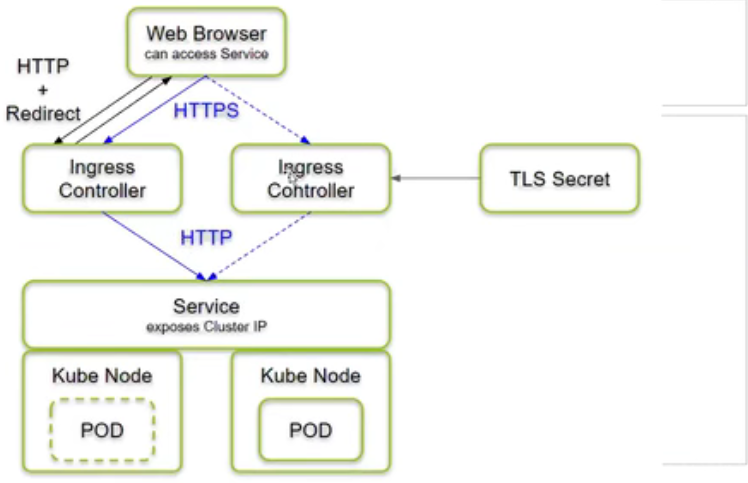

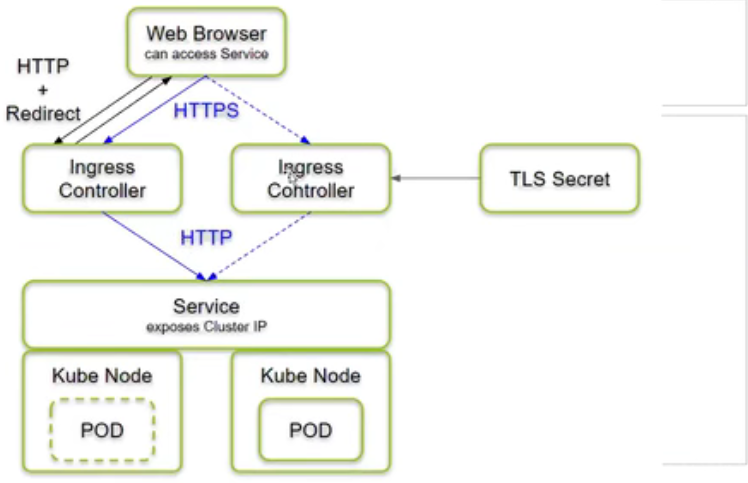

An HTTP request is matched first on the host name cafe.example.com (the server block's server_name), then on the URI /coffee (the location).
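That two-step lookup can be sketched in Python with a routing table copied from cafe-ingress. It is a simplification: it handles only exact server names and plain prefix locations, where the longest matching prefix wins. It ignores regex and exact-match (=) locations, wildcard server names and IPv6 literals. The names ROUTES and route are hypothetical. A None result stands for "no service": either the default server (the 404s above) or a host with no matching location.

```python
from typing import Dict, Optional

# host -> {path prefix -> service}, taken from cafe-ingress
ROUTES: Dict[str, Dict[str, str]] = {
    "cafe.example.com": {"/tea": "tea-svc", "/coffee": "coffee-svc"},
}


def route(host: str, uri: str, routes: Dict[str, Dict[str, str]] = ROUTES) -> Optional[str]:
    """Pick the backend service: match the host (server_name) first, then the longest prefix (location)."""
    host = host.split(":", 1)[0].lower()  # drop an optional :port from the Host header
    locations = routes.get(host)
    if locations is None:
        return None  # no server block for this host -> default server
    prefixes = [p for p in locations if uri.startswith(p)]
    if not prefixes:
        return None  # host matched, but no location does
    return locations[max(prefixes, key=len)]


print(route("cafe.example.com", "/coffee"))  # routed like the --resolve request
print(route("10.244.41.21", "/coffee"))      # like curl to the bare pod IP
```

Note that a prefix location such as /tea also matches /teapot. That is plain prefix-match behaviour, which the Prefix pathType in the Ingress narrows by matching on path segments.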

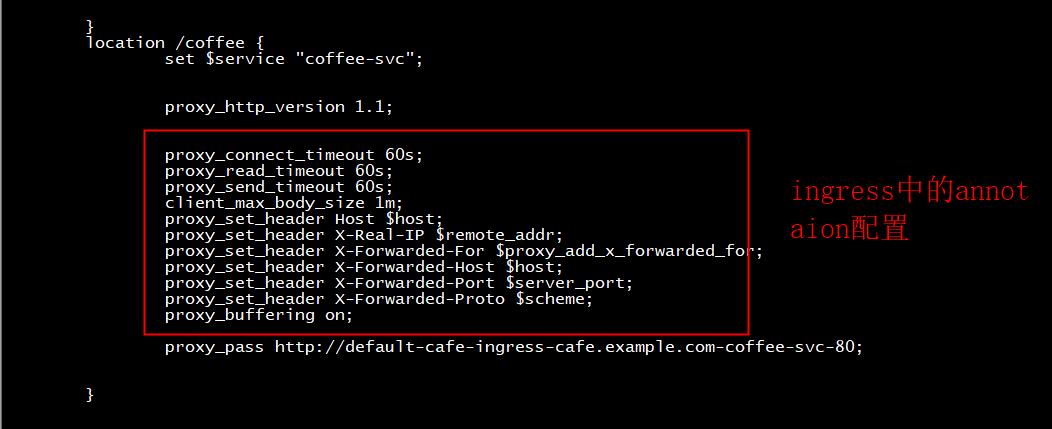

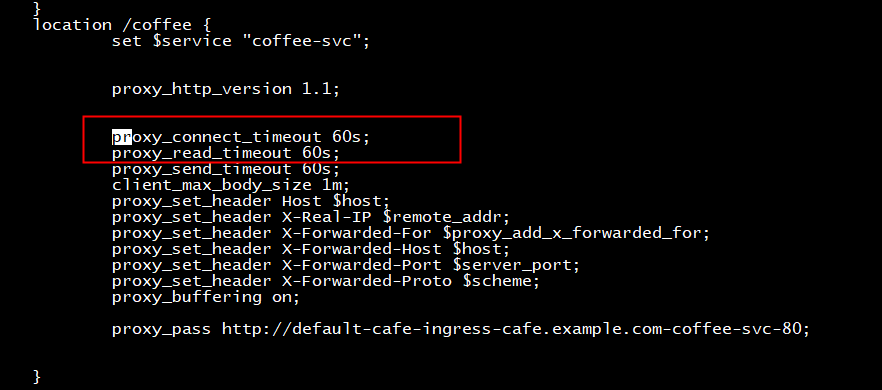

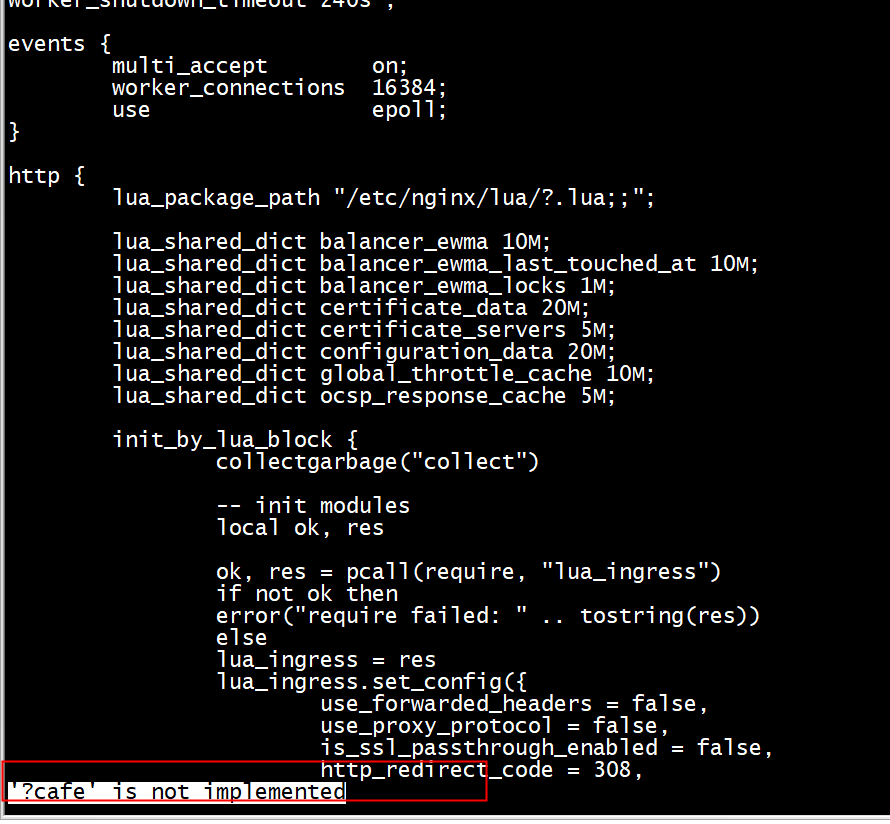

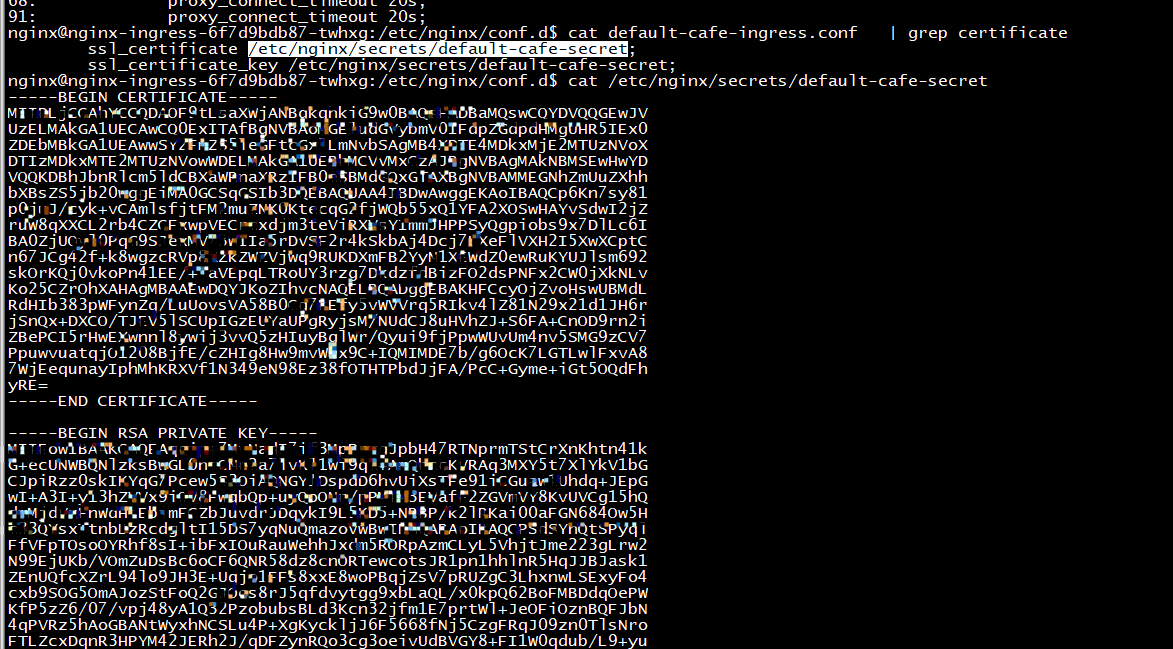

    root@ubuntu:~/kubernetes-ingress/deployments# kubectl exec -it nginx-ingress-6f7d9bdb87-twhxg -n nginx-ingress -- cat /etc/nginx/conf.d/default-cafe-ingress.conf
    # configuration for default/cafe-ingress
    upstream default-cafe-ingress-cafe.example.com-coffee-svc-80 {
        zone default-cafe-ingress-cafe.example.com-coffee-svc-80 256k;
        random two least_conn;
        server 10.244.243.252:8080 max_fails=1 fail_timeout=10s max_conns=0;
        server 10.244.41.19:8080 max_fails=1 fail_timeout=10s max_conns=0;
    }
    upstream default-cafe-ingress-cafe.example.com-tea-svc-80 {
        zone default-cafe-ingress-cafe.example.com-tea-svc-80 256k;
        random two least_conn;
        server 10.244.41.15:8080 max_fails=1 fail_timeout=10s max_conns=0;
        server 10.244.41.17:8080 max_fails=1 fail_timeout=10s max_conns=0;
        server 10.244.41.18:8080 max_fails=1 fail_timeout=10s max_conns=0;
    }
    server {
        listen 80;
        listen 443 ssl;
        ssl_certificate /etc/nginx/secrets/default-cafe-secret;
        ssl_certificate_key /etc/nginx/secrets/default-cafe-secret;
        server_tokens on;
        server_name cafe.example.com;
        set $resource_type "ingress";
        set $resource_name "cafe-ingress";
        set $resource_namespace "default";
        if ($scheme = http) {
            return 301 https://$host:443$request_uri;
        }
        location /tea {
            set $service "tea-svc";
            proxy_http_version 1.1;
            proxy_connect_timeout 60s;
            proxy_read_timeout 60s;
            proxy_send_timeout 60s;
            client_max_body_size 1m;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Host $host;
            proxy_set_header X-Forwarded-Port $server_port;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_buffering on;
            proxy_pass http://default-cafe-ingress-cafe.example.com-tea-svc-80;
        }
        location /coffee {
            set $service "coffee-svc";
            proxy_http_version 1.1;
            proxy_connect_timeout 60s;
            proxy_read_timeout 60s;
            proxy_send_timeout 60s;
            client_max_body_size 1m;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Host $host;
            proxy_set_header X-Forwarded-Port $server_port;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_buffering on;
            proxy_pass http://default-cafe-ingress-cafe.example.com-coffee-svc-80;
        }
    }
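The upstreams list the pod endpoints directly (10.244.41.15:8080 and so on, matching the tea pod IPs above) rather than the tea-svc ClusterIP. They are balanced with "random two least_conn", which NGINX documents as picking two servers at random and then choosing between them by least connections. A minimal Python sketch of that choice, assuming equal weights and ignoring max_fails/fail_timeout; the connection counts are made up for illustration:

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Peer:
    address: str
    active_connections: int = 0


def pick_random_two_least_conn(peers: List[Peer], rng: random.Random) -> Peer:
    """'random two least_conn': sample two distinct peers, keep the less busy one (sketch)."""
    if len(peers) == 1:
        return peers[0]
    a, b = rng.sample(peers, 2)
    return a if a.active_connections <= b.active_connections else b


# Tea endpoints from the generated config; the active connection counts are hypothetical.
tea = [Peer("10.244.41.15:8080", 4), Peer("10.244.41.17:8080", 1), Peer("10.244.41.18:8080", 9)]
rng = random.Random(42)
picks = [pick_random_two_least_conn(tea, rng).address for _ in range(1000)]
print({addr: picks.count(addr) for addr in sorted(set(picks))})
```

Because two distinct peers are compared, the busiest peer is never chosen, while no single counter has to be scanned across all peers. That is the usual argument for this "power of two choices" scheme.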

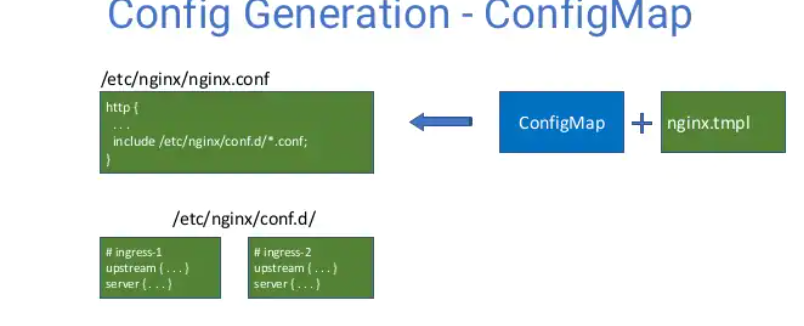

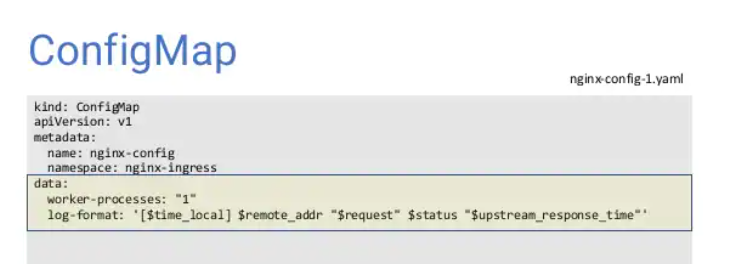

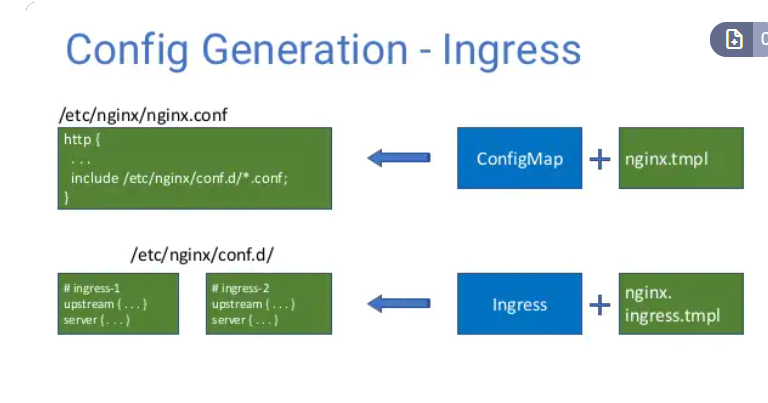

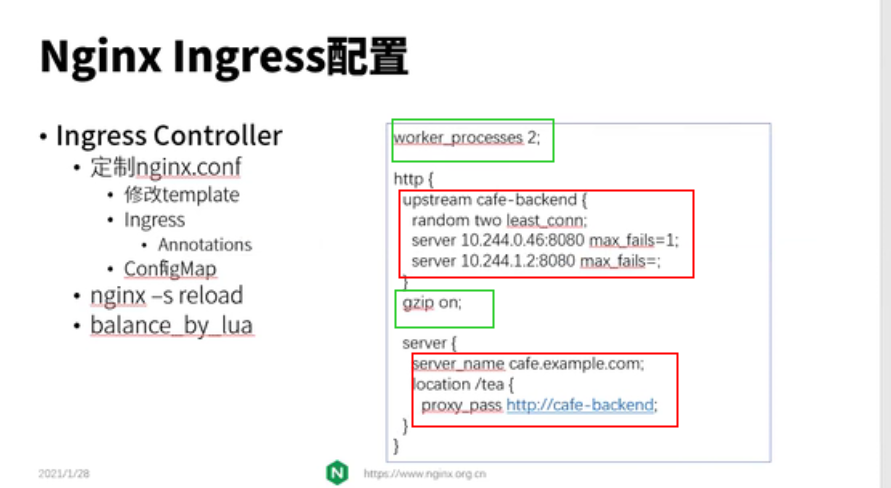

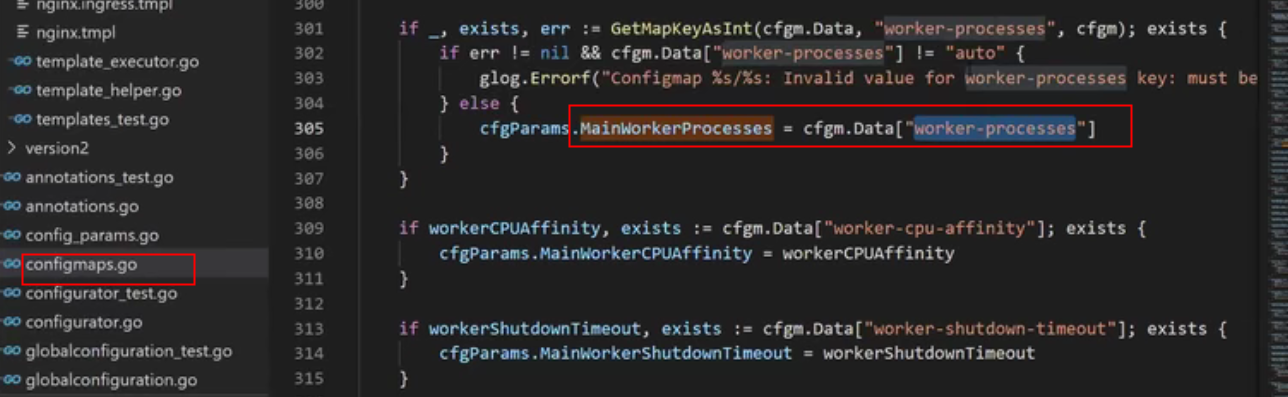

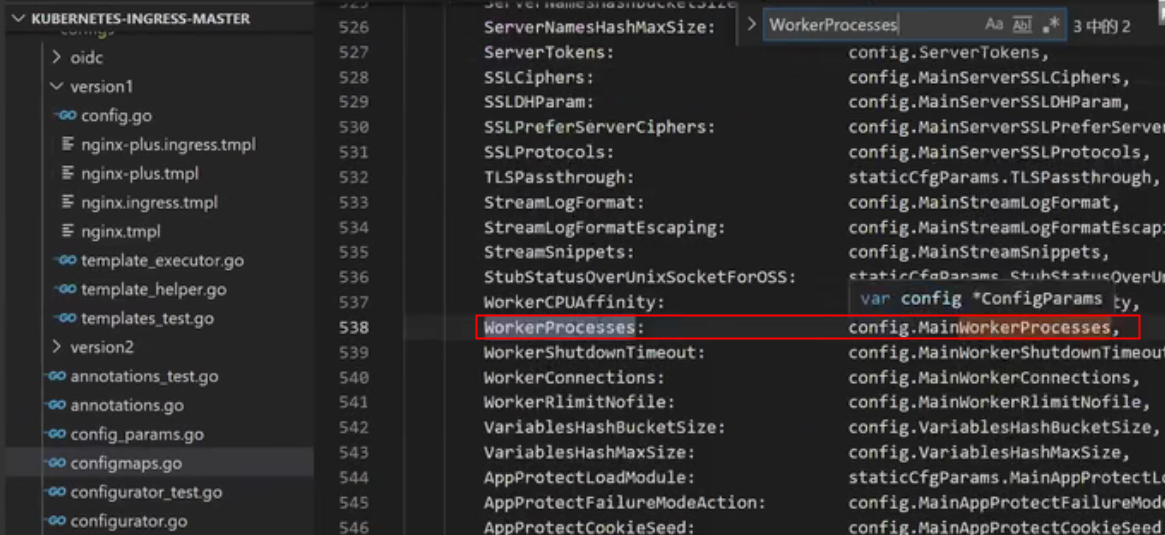

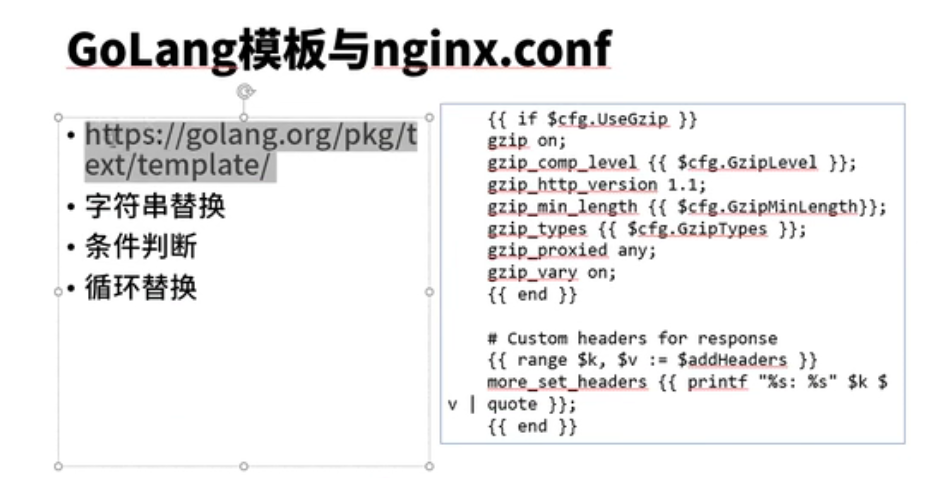

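The ConfigMap corresponds to the global configuration (shown in green).

The Ingress resource (including its annotations) corresponds to the local, per-Ingress configuration.

Both the ConfigMap and the Ingress are turned into NGINX configuration through Go templates (nginx.tmpl and nginx.ingress.tmpl, which sit in the root directory of the controller image).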
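The global/local layering can be sketched in Python, with string.Template standing in for the Go text/template engine the controller actually uses. This is an illustration under assumptions: only two keys are modelled, with the 60s default seen in the generated config. Annotations are assumed to be named nginx.org/<same key as in the ConfigMap>, which holds for proxy-connect-timeout but is not a general rule. The template text is a heavily trimmed stand-in for nginx.ingress.tmpl.

```python
from string import Template

# Defaults for two ConfigMap keys (60s matches the generated config above).
DEFAULTS = {"proxy-connect-timeout": "60s", "proxy-read-timeout": "60s"}
ANNOTATION_PREFIX = "nginx.org/"

# Trimmed stand-in for one location in nginx.ingress.tmpl.
LOCATION = Template(
    "location $path {\n"
    "    proxy_connect_timeout $proxy_connect_timeout;\n"
    "    proxy_read_timeout $proxy_read_timeout;\n"
    "    proxy_pass http://$upstream;\n"
    "}\n"
)


def effective_settings(configmap_data: dict, annotations: dict) -> dict:
    """Global ConfigMap values, overridden per Ingress by nginx.org/<key> annotations."""
    settings = dict(DEFAULTS)
    settings.update(configmap_data or {})           # global layer
    for key, value in (annotations or {}).items():  # local layer
        if key.startswith(ANNOTATION_PREFIX):
            name = key[len(ANNOTATION_PREFIX):]
            if name in settings:
                settings[name] = value
    return settings


def render_location(path: str, upstream: str, settings: dict) -> str:
    return LOCATION.substitute(
        path=path,
        upstream=upstream,
        proxy_connect_timeout=settings["proxy-connect-timeout"],
        proxy_read_timeout=settings["proxy-read-timeout"],
    )


s = effective_settings({}, {"nginx.org/proxy-connect-timeout": "20s"})
print(render_location("/coffee", "default-cafe-ingress-cafe.example.com-coffee-svc-80", s))
```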

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: cafe-ingress
    spec:
      ingressClassName: nginx-org
      tls:
      - hosts:
        - cafe.example.com
        secretName: cafe-secret
      rules:
      - host: cafe.example.com
        http:
          paths:
          - path: /tea
            pathType: Prefix
            backend:
              serviceName: tea-svc
              servicePort: 80
          - path: /coffee
            pathType: Prefix
            backend:
              serviceName: coffee-svc
              servicePort: 80

Kubernetes official documentation:

The official NGINX ConfigMap:

    root@ubuntu:~/kubernetes-ingress# cat deployments/common/nginx-config.yaml
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: nginx-config
      namespace: nginx-ingress
    data:

    root@ubuntu:~/kubernetes-ingress# kubectl exec -it nginx-ingress-6f7d9bdb87-twhxg -n nginx-ingress -- /bin/bash
    nginx@nginx-ingress-6f7d9bdb87-twhxg:/$ ls
    bin  boot  dev  docker-entrypoint.d  docker-entrypoint.sh  etc  home  lib  media  mnt  nginx-ingress  nginx.ingress.tmpl  nginx.tmpl  nginx.transportserver.tmpl  nginx.virtualserver.tmpl  opt  proc  root  run  sbin  srv  sys  tmp  usr  var
    nginx@nginx-ingress-6f7d9bdb87-twhxg:/$ cd /etc/nginx/
    nginx@nginx-ingress-6f7d9bdb87-twhxg:/etc/nginx$ ls
    conf.d  config-version.conf  fastcgi_params  mime.types  modules  nginx.conf  scgi_params  secrets  stream-conf.d  uwsgi_params
    nginx@nginx-ingress-6f7d9bdb87-twhxg:/etc/nginx$ cat nginx.conf | grep worker
    worker_processes auto;
    worker_connections 1024;

Changing worker-processes triggers an NGINX reload:
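Changing `worker-processes` changes the main `nginx.conf` itself (`worker_processes auto;` becomes `worker_processes 1;` in the output below), so the controller must re-render the file and reload NGINX. A simplified Python sketch of that "render, compare, reload if changed" check follows. The template string and function names are hypothetical stand-ins for the controller's Go template (`nginx.tmpl`) and reload logic, and nothing here actually invokes nginx.

```python
from string import Template

# Hypothetical, heavily trimmed stand-in for the main nginx.conf template.
MAIN_TEMPLATE = Template(
    "worker_processes $worker_processes;\n"
    "events {\n"
    "    worker_connections $worker_connections;\n"
    "}\n"
)


def render_main_conf(configmap_data):
    """Render the main config from ConfigMap data, falling back to defaults."""
    return MAIN_TEMPLATE.substitute(
        worker_processes=configmap_data.get("worker-processes", "auto"),
        worker_connections=configmap_data.get("worker-connections", "1024"),
    )


def needs_reload(old_conf, configmap_data):
    """Return (changed, new_conf); a real controller would write the file and reload if changed."""
    new_conf = render_main_conf(configmap_data)
    return new_conf != old_conf, new_conf
```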

root@ubuntu:~/kubernetes-ingress# kubectl apply -f deployments/common/nginx-config.yaml configmap/nginx-config configured root@ubuntu:~/kubernetes-ingress# kubectl exec -it nginx-ingress-6f7d9bdb87-twhxg -n nginx-ingress -- /bin/bash nginx@nginx-ingress-6f7d9bdb87-twhxg:/$ cat /etc/nginx/nginx.conf | grep worker worker_processes 1; worker_connections 1024; nginx@nginx-ingress-6f7d9bdb87-twhxg:/$ exit exit root@ubuntu:~/kubernetes-ingress# cat deployments/common/nginx-config.yaml kind: ConfigMap apiVersion: v1 metadata: name: nginx-config namespace: nginx-ingress data: worker-processes: "1" root@ubuntu:~/kubernetes-ingress#

root@cloud:~# ps -elf | grep nginx | grep 2609105 4 S systemd+ 2609105 2609088 0 80 0 - 187147 futex_ Aug26 ? 00:13:31 /nginx-ingress -nginx-configmaps=nginx-ingress/nginx-config -default-server-tls-secret=nginx-ingress/default-server-secret -ingress-class=nginx-org 4 S systemd+ 2609154 2609105 0 80 0 - 2449 sigsus Aug26 ? 00:00:01 nginx: master process /usr/sbin/nginx root@cloud:~# ps -elf | grep nginx | grep 2609154 4 S systemd+ 2609154 2609105 0 80 0 - 2449 sigsus Aug26 ? 00:00:01 nginx: master process /usr/sbin/nginx 1 S systemd+ 3294377 2609154 0 80 0 - 2454 ep_pol 15:47 ? 00:00:00 nginx: worker process root@cloud:~#

Modifying the Ingress (adding an annotation):

root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl apply -f cafe-ingress.yaml Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply ingress.networking.k8s.io/cafe-ingress configured root@ubuntu:~/kubernetes-ingress/examples/complete-example# cat cafe-ingress.yaml apiVersion: networking.k8s.io/v1beta1 kind: Ingress metadata: name: cafe-ingress annotations: nginx.org/proxy-connect-timeout: "20s" spec: ingressClassName: nginx-org tls: - hosts: - cafe.example.com secretName: cafe-secret rules: - host: cafe.example.com http: paths: - path: /tea pathType: Prefix backend: serviceName: tea-svc servicePort: 80 - path: /coffee pathType: Prefix backend: serviceName: coffee-svc servicePort: 80

location /coffee { set $service "coffee-svc"; proxy_http_version 1.1; proxy_connect_timeout 20s; proxy_read_timeout 60s; proxy_send_timeout 60s; client_max_body_size 1m; proxy_set_header Host $host; proxy_set_header X-Real-IP $remote_addr; proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for; proxy_set_header X-Forwarded-Host $host; proxy_set_header X-Forwarded-Port $server_port; proxy_set_header X-Forwarded-Proto $scheme; proxy_buffering on; proxy_pass http://default-cafe-ingress-cafe.example.com-coffee-svc-80; } } nginx@nginx-ingress-6f7d9bdb87-twhxg:/etc/nginx/conf.d$ cat default-cafe-ingress.conf | grep proxy_connect_timeout proxy_connect_timeout 20s; proxy_connect_timeout 20s; nginx@nginx-ingress-6f7d9bdb87-twhxg:/etc/nginx/conf.d$ cat default-cafe-ingress.conf | grep -n proxy_connect_timeout 68: proxy_connect_timeout 20s; 91: proxy_connect_timeout 20s; nginx@nginx-ingress-6f7d9bdb87-twhxg:/etc/nginx/conf.d$
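`grep -n` shows `proxy_connect_timeout 20s;` twice (lines 68 and 91), once per `location`, because an Ingress annotation applies to every path of that Ingress. The Python sketch below checks this per location instead of by line number. The parser is a naive assumption: it only handles flat `location <path> { ... }` blocks like the ones generated here, not general NGINX syntax (for example, not `location = /x`).

```python
import re


def directive_per_location(conf_text, directive):
    """Return {location_path: value} for `directive` inside each `location` block.
    Naive: assumes no nested braces inside location blocks."""
    result = {}
    for m in re.finditer(r"location\s+(\S+)\s*\{([^{}]*)\}", conf_text):
        path, body = m.group(1), m.group(2)
        d = re.search(rf"\b{re.escape(directive)}\s+([^;]+);", body)
        if d:
            result[path] = d.group(1).strip()
    return result


# Usage: feed it the text of default-cafe-ingress.conf.
sample = (
    'location /tea { set $service "tea-svc"; proxy_connect_timeout 20s; proxy_read_timeout 60s; } '
    'location /coffee { set $service "coffee-svc"; proxy_connect_timeout 20s; }'
)
timeouts = directive_per_location(sample, "proxy_connect_timeout")
```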

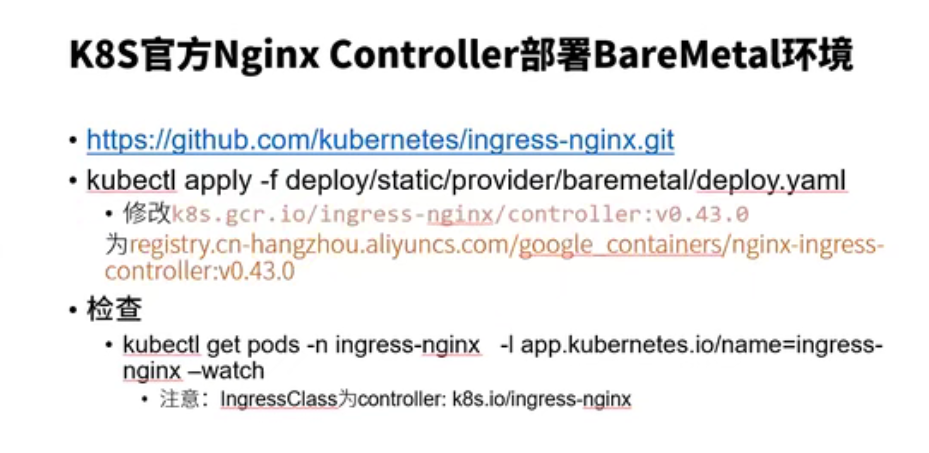

deploy/static/provider/baremetal/deploy.yaml

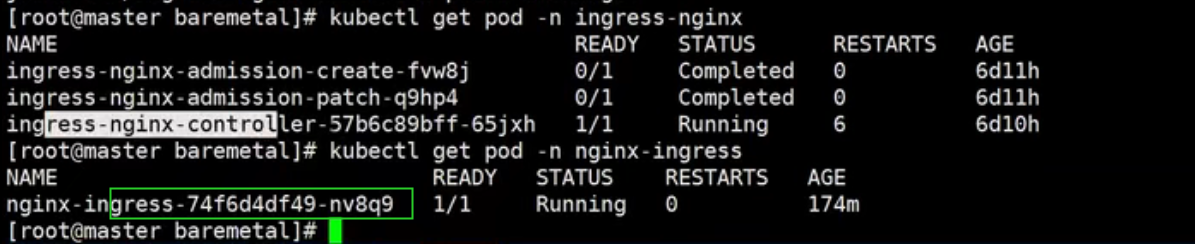

root@ubuntu:~# kubectl get pod -n ingress-nginx NAME READY STATUS RESTARTS AGE ingress-nginx-admission-create-vgn6m 0/1 Completed 0 16h ingress-nginx-admission-patch-7m6c8 0/1 Completed 1 16h ingress-nginx-controller-558d6d646f-r6smp 1/1 Running 0 16h root@ubuntu:~# kubectl get pod -n nginx-ingress NAME READY STATUS RESTARTS AGE nginx-ingress-6f7d9bdb87-twhxg 1/1 Running 0 19h root@ubuntu:~#

root@ubuntu:~/nginx_ingress/ingress-nginx# ls bin charts code-of-conduct.md controller.v0.48.1.tar docs hack internal mkdocs.yml OWNERS_ALIASES rootfs stable.txt version build cloudbuild.yaml CONTRIBUTING.md deploy go.mod images LICENSE nginx-ingress-alpine README.md SECURITY_CONTACTS TAG Changelog.md cmd controller.v0.48.1.debug.tar deployment.v0.44.0.yaml go.sum ingress-nginx-build Makefile OWNERS RELEASE.md SECURITY.md test root@ubuntu:~/nginx_ingress/ingress-nginx# kubectl create -f deploy/static/provider/baremetal/deploy.yaml namespace/ingress-nginx created serviceaccount/ingress-nginx created configmap/ingress-nginx-controller created clusterrole.rbac.authorization.k8s.io/ingress-nginx created clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created role.rbac.authorization.k8s.io/ingress-nginx created rolebinding.rbac.authorization.k8s.io/ingress-nginx created service/ingress-nginx-controller-admission created service/ingress-nginx-controller created deployment.apps/ingress-nginx-controller created validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created serviceaccount/ingress-nginx-admission created clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created role.rbac.authorization.k8s.io/ingress-nginx-admission created rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created job.batch/ingress-nginx-admission-create created job.batch/ingress-nginx-admission-patch created root@ubuntu:~/nginx_ingress/ingress-nginx# kubectl get pods -n ingress-nginx NAME READY STATUS RESTARTS AGE ingress-nginx-admission-create-vgn6m 0/1 Completed 0 18s ingress-nginx-admission-patch-7m6c8 0/1 Completed 1 18s ingress-nginx-controller-558d6d646f-r6smp 0/1 Running 0 18s root@ubuntu:~/nginx_ingress/ingress-nginx# kubectl get pods -n nginx-ingress NAME READY STATUS RESTARTS AGE nginx-ingress-6f7d9bdb87-twhxg 1/1 Running 0 147m 
root@ubuntu:~/nginx_ingress/ingress-nginx# kubectl get ing -A NAMESPACE NAME CLASS HOSTS ADDRESS PORTS AGE default cafe-ingress nginx-org cafe.example.com 80, 443 172m default example-ingress <none> ubuntu.com 80 20d default micro-ingress <none> nginx.mydomain.com,apache.mydomain.com 80 20d default web-ingress <none> web.mydomain.com 80 20d default web-ingress-lb <none> web3.mydomain.com,web2.mydomain.com 80 20d root@ubuntu:~/nginx_ingress/ingress-nginx#

root@ubuntu:~/kubernetes-ingress/deployments# curl -H "Host: nginx.mydomain.com" https://10.244.41.23:443/nginx --insecure -v * Trying 10.244.41.23... * TCP_NODELAY set * Connected to 10.244.41.23 (10.244.41.23) port 443 (#0) * ALPN, offering h2 * ALPN, offering http/1.1 * successfully set certificate verify locations: * CAfile: /etc/ssl/certs/ca-certificates.crt CApath: /etc/ssl/certs * TLSv1.3 (OUT), TLS handshake, Client hello (1): * TLSv1.3 (IN), TLS handshake, Server hello (2): * TLSv1.3 (IN), TLS Unknown, Certificate Status (22): * TLSv1.3 (IN), TLS handshake, Unknown (8): * TLSv1.3 (IN), TLS Unknown, Certificate Status (22): * TLSv1.3 (IN), TLS handshake, Certificate (11): * TLSv1.3 (IN), TLS Unknown, Certificate Status (22): * TLSv1.3 (IN), TLS handshake, CERT verify (15): * TLSv1.3 (IN), TLS Unknown, Certificate Status (22): * TLSv1.3 (IN), TLS handshake, Finished (20): * TLSv1.3 (OUT), TLS change cipher, Client hello (1): * TLSv1.3 (OUT), TLS Unknown, Certificate Status (22): * TLSv1.3 (OUT), TLS handshake, Finished (20): * SSL connection using TLSv1.3 / TLS_AES_256_GCM_SHA384 * ALPN, server accepted to use h2 * Server certificate: * subject: O=Acme Co; CN=Kubernetes Ingress Controller Fake Certificate * start date: Aug 26 10:06:15 2021 GMT * expire date: Aug 26 10:06:15 2022 GMT * issuer: O=Acme Co; CN=Kubernetes Ingress Controller Fake Certificate * SSL certificate verify result: unable to get local issuer certificate (20), continuing anyway. 
* Using HTTP2, server supports multi-use * Connection state changed (HTTP/2 confirmed) * Copying HTTP/2 data in stream buffer to connection buffer after upgrade: len=0 * TLSv1.3 (OUT), TLS Unknown, Unknown (23): * TLSv1.3 (OUT), TLS Unknown, Unknown (23): * TLSv1.3 (OUT), TLS Unknown, Unknown (23): * Using Stream ID: 1 (easy handle 0xaaaad8f55af0) * TLSv1.3 (OUT), TLS Unknown, Unknown (23): > GET /nginx HTTP/2 > Host: nginx.mydomain.com > User-Agent: curl/7.58.0 > Accept: */* > * TLSv1.3 (IN), TLS Unknown, Certificate Status (22): * TLSv1.3 (IN), TLS handshake, Newsession Ticket (4): * TLSv1.3 (IN), TLS Unknown, Certificate Status (22): * TLSv1.3 (IN), TLS handshake, Newsession Ticket (4): * TLSv1.3 (IN), TLS Unknown, Unknown (23): * Connection state changed (MAX_CONCURRENT_STREAMS updated)! * TLSv1.3 (OUT), TLS Unknown, Unknown (23): * TLSv1.3 (IN), TLS Unknown, Unknown (23): < HTTP/2 404 < date: Thu, 26 Aug 2021 10:09:09 GMT < content-type: text/html < content-length: 153 < strict-transport-security: max-age=15724800; includeSubDomains < <html> <head><title>404 Not Found</title></head> <body> <center><h1>404 Not Found</h1></center> <hr><center>nginx/1.21.1</center> </body> </html> * TLSv1.3 (IN), TLS Unknown, Unknown (23): * Connection #0 to host 10.244.41.23 left intact

root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl create -f cafe-k8s-ingress.yaml ingress.networking.k8s.io/cafe-k8s-ingress created root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl get ing -A NAMESPACE NAME CLASS HOSTS ADDRESS PORTS AGE default cafe-ingress nginx-org cafe.example.com 80, 443 3h46m default cafe-k8s-ingress nginx cafe.example.com 80, 443 10s default example-ingress <none> ubuntu.com 10.10.16.47 80 20d default micro-ingress <none> nginx.mydomain.com,apache.mydomain.com 10.10.16.47 80 20d default web-ingress <none> web.mydomain.com 10.10.16.47 80 20d default web-ingress-lb <none> web3.mydomain.com,web2.mydomain.com 10.10.16.47 80 20d root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl get ingressclass -A NAME CONTROLLER PARAMETERS AGE nginx-org nginx.org/ingress-controller <none> 4h17m root@ubuntu:~/kubernetes-ingress/examples/complete-example#

Surprisingly, there is only one IngressClass.

Creating an IngressClass:

root@ubuntu:~/kubernetes-ingress/deployments/common# kubectl create -f ingress-k8s-class.yaml ingressclass.networking.k8s.io/nginx created root@ubuntu:~/kubernetes-ingress/deployments/common# cat ingress-k8s-class.yaml apiVersion: networking.k8s.io/v1beta1 kind: IngressClass metadata: name: nginx # annotations: # ingressclass.kubernetes.io/is-default-class: "true" spec: controller: kubernetes.io/ingress-controller root@ubuntu:~/kubernetes-ingress/deployments/common# kubectl get ingressclass NAME CONTROLLER PARAMETERS AGE nginx kubernetes.io/ingress-controller <none> 87s nginx-org nginx.org/ingress-controller <none> 4h33m root@ubuntu:~/kubernetes-ingress/deployments/common#
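The NGINX Inc controller runs with `-ingress-class=nginx-org` (see the `ps` output above), and `cafe-k8s-ingress` (class `nginx`) was not served until an IngressClass named `nginx` existed. Meanwhile the `<none>`-class Ingresses were still picked up by ingress-nginx. The Python sketch below models only what this post observed. It assumes a controller handles an Ingress when `spec.ingressClassName` equals its configured class and that IngressClass exists. The `watch_without_class` flag is hypothetical and stands in for however a given controller version treats classless Ingresses. Real controllers also look at the legacy `kubernetes.io/ingress.class` annotation and the default-class annotation, which this sketch ignores.

```python
def handled_ingresses(ingresses, ingress_classes, my_class, watch_without_class=False):
    """Names of Ingresses picked up by a controller configured with class `my_class`.

    ingresses: list of {"name": ..., "ingressClassName": ... (optional)}
    ingress_classes: {IngressClass name: spec.controller}
    """
    handled = []
    for ing in ingresses:
        cls = ing.get("ingressClassName")
        if cls is None:
            if watch_without_class:  # classless Ingresses, if this controller accepts them
                handled.append(ing["name"])
        elif cls == my_class and cls in ingress_classes:
            handled.append(ing["name"])
    return handled


ingresses = [
    {"name": "cafe-ingress", "ingressClassName": "nginx-org"},
    {"name": "cafe-k8s-ingress", "ingressClassName": "nginx"},
    {"name": "web-ingress"},
]
before = {"nginx-org": "nginx.org/ingress-controller"}
after = dict(before, nginx="kubernetes.io/ingress-controller")
```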

root@ubuntu:~/kubernetes-ingress/examples/complete-example# vi cafe-k8s-ingress.yaml apiVersion: networking.k8s.io/v1beta1 kind: Ingress metadata: name: cafe-k8s-ingress spec: ingressClassName: nginx tls: - hosts: - cafe.example.com secretName: cafe-secret rules: - host: cafe.example.com http: paths: - path: /tea pathType: Prefix backend: serviceName: tea-svc servicePort: 80 - path: /coffee pathType: Prefix backend: serviceName: coffee-svc servicePort: 80 ~

Not reachable: curl -H "Host: cafe.example.com" https://10.244.41.23:443 --insecure

root@ubuntu:~/kubernetes-ingress/deployments# curl -H "Host: cafe.example.com" https://10.244.41.23:443 --insecure <html> <head><title>404 Not Found</title></head> <body> <center><h1>404 Not Found</h1></center> <hr><center>nginx</center> </body> </html> root@ubuntu:~/kubernetes-ingress/deployments# curl -H "Host: cafe.example.com" https://10.244.41.23:443/nginx --insecure <html> <head><title>404 Not Found</title></head> <body> <center><h1>404 Not Found</h1></center> <hr><center>nginx</center> </body> </html> root@ubuntu:~/kubernetes-ingress/deployments# curl -H "Host: nginx.mydomain.com" https://10.244.41.23:443 --insecure <!DOCTYPE html> <html> <head> <title>Welcome to nginx!</title> <style> body { width: 35em; margin: 0 auto; font-family: Tahoma, Verdana, Arial, sans-serif; } </style> </head> <body> <h1>Welcome to nginx!</h1> <p>If you see this page, the nginx web server is successfully installed and working. Further configuration is required.</p> <p>For online documentation and support please refer to <a href="http://nginx.org/">nginx.org</a>.<br/> Commercial support is available at <a href="http://nginx.com/">nginx.com</a>.</p> <p><em>Thank you for using nginx.</em></p> </body> </html> root@ubuntu:~/kubernetes-ingress/deployments# curl -H "Host: nginx.mydomain.com" https://10.244.41.23:443/nginx --insecure <html> <head><title>404 Not Found</title></head> <body> <center><h1>404 Not Found</h1></center> <hr><center>nginx/1.21.1</center> </body> </html> root@ubuntu:~/kubernetes-ingress/deployments#

bash-5.1$ ./dbg conf | grep coffee location /coffee/ { set $service_name "coffee-svc"; set $location_path "/coffee"; set $proxy_upstream_name "default-coffee-svc-80"; location = /coffee { set $service_name "coffee-svc"; set $location_path "/coffee"; set $proxy_upstream_name "default-coffee-svc-80"; bash-5.1$ ./dbg conf | grep tea location /tea/ { set $service_name "tea-svc"; set $location_path "/tea"; set $proxy_upstream_name "default-tea-svc-80"; location = /tea { set $service_name "tea-svc"; set $location_path "/tea"; set $proxy_upstream_name "default-tea-svc-80";
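The `dbg conf` output shows how ingress-nginx expands a `pathType: Prefix` path: `/tea` becomes both `location = /tea` and `location /tea/`. That matches the Kubernetes Prefix semantics, where paths are compared element by element. `/tea` and `/tea/green` match, `/teapot` does not, and `/` matches no rule of this Ingress, which would explain the 404 for `https://10.244.41.23:443` with `Host: cafe.example.com`. A Python sketch of the rule follows (function names hypothetical; longest matching path wins).

```python
def _elements(path):
    """Split a URL path into non-empty elements: '/tea/green/' -> ['tea', 'green']."""
    return [p for p in path.split("/") if p]


def prefix_match(rule_path, request_path):
    """Kubernetes 'Prefix' match: the rule's elements must be a leading run of the request's."""
    rule, req = _elements(rule_path), _elements(request_path)
    return req[: len(rule)] == rule


def route(paths, request_path):
    """Pick the backend of the longest matching Prefix path; None means no rule matched."""
    best = None
    for rule_path, backend in paths.items():
        if prefix_match(rule_path, request_path):
            if best is None or len(rule_path) > len(best[0]):
                best = (rule_path, backend)
    return best[1] if best else None


cafe = {"/tea": "tea-svc", "/coffee": "coffee-svc"}
```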

/etc/nginx/nginx.conf

root@ubuntu:~/kubernetes-ingress/examples/complete-example# cat cafe-k8s-ingress.yaml apiVersion: networking.k8s.io/v1beta1 kind: Ingress metadata: name: cafe-k8s-ingress spec: ingressClassName: nginx tls: - hosts: - cafe.example.com secretName: cafe-secret rules: - host: cafe.example.com http: paths: - path: /tea pathType: Prefix backend: serviceName: tea-svc servicePort: 80 - path: /coffee pathType: Prefix backend: serviceName: coffee-svc servicePort: 80 root@ubuntu:~/kubernetes-ingress/examples/complete-example# ls cafe-ingress.yaml cafe-k8s-ingress.yaml cafe-secret.yaml cafe.yaml dashboard.png README.md root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl get ingressclass NAME CONTROLLER PARAMETERS AGE nginx kubernetes.io/ingress-controller <none> 15h nginx-org nginx.org/ingress-controller <none> 20h root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl apply -f cafe-k8s-ingress.yaml Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply ingress.networking.k8s.io/cafe-k8s-ingress configured root@ubuntu:~/kubernetes-ingress/examples/complete-example#

Now it is reachable:

root@ubuntu:~/kubernetes-ingress/examples/complete-example# curl -H "Host: cafe.example.com" https://10.244.41.23:443 --insecure <html> <head><title>404 Not Found</title></head> <body> <center><h1>404 Not Found</h1></center> <hr><center>nginx</center> </body> </html> root@ubuntu:~/kubernetes-ingress/examples/complete-example# curl -H "Host: cafe.example.com" https://10.244.41.23:443 --insecure <html> <head><title>404 Not Found</title></head> <body> <center><h1>404 Not Found</h1></center> <hr><center>nginx</center> </body> </html> root@ubuntu:~/kubernetes-ingress/examples/complete-example# curl --resolve cafe.example.com:443:10.244.41.23 https://cafe.example.com:443/tea --insecure Server address: 10.244.41.18:8080 Server name: tea-69c99ff568-tm9q6 Date: 27/Aug/2021:03:01:04 +0000 URI: /tea Request ID: 467d1ef232643f26052177281ea7b396 root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl get pod -o wide -n nginx-ingress NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES nginx-ingress-6f7d9bdb87-twhxg 1/1 Running 0 19h 10.244.41.21 cloud <none> <none> root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl get pod -o wide -n ingress-nginx NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES ingress-nginx-admission-create-vgn6m 0/1 Completed 0 16h 10.244.41.20 cloud <none> <none> ingress-nginx-admission-patch-7m6c8 0/1 Completed 1 16h 10.244.41.22 cloud <none> <none> ingress-nginx-controller-558d6d646f-r6smp 1/1 Running 0 16h 10.244.41.23 cloud <none> <none> root@ubuntu:~/kubernetes-ingress/examples/complete-example# curl -H "Host: cafe.example.com" https://10.244.41.23:443/coffee --insecure Server address: 10.244.41.19:8080 Server name: coffee-5f56ff9788-zs2f7 Date: 27/Aug/2021:03:02:48 +0000 URI: /coffee Request ID: 3e5096cb09945d16c32eb766621112dd root@ubuntu:~/kubernetes-ingress/examples/complete-example#

nginx ingress

tls

root@ubuntu:~/kubernetes-ingress/examples/complete-example# kubectl get secret NAME TYPE DATA AGE cafe-secret kubernetes.io/tls 2 4d5h default-token-cfr6q kubernetes.io/service-account-token 3 60d root@ubuntu:~/kubernetes-ingress/examples/complete-example# grep cafe-secret -rn * cafe-ingress.yaml:12: secretName: cafe-secret cafe-k8s-ingress.yaml:10: secretName: cafe-secret cafe-secret.yaml:4: name: cafe-secret README.md:31: $ kubectl create -f cafe-secret.yaml root@ubuntu:~/kubernetes-ingress/examples/complete-example# cat cafe-secret.yaml apiVersion: v1 kind: Secret metadata: name: cafe-secret type: kubernetes.io/tls data: tls.crt: LS0tL0THNhWFdqQU5CZ2txaGtpRzl3MEJBUXNGQURCYU1Rc3dDUVlEVlFRR0V3SlYKVXpFTE1Ba0dBMVVFQ0F3Q1EwRXhJVEFmQmdOVkJBb01HRWx1ZEdWeWJtVjBJRmRwWkdkcGRITWdVSFI1SUV4MApaREViTUJrR0ExVUVBd3dTWTJGbVpTNWxlR0Z0Y0d4bExtTnZiU0FnTUI0WERURTRNRGt4TWpFMk1UVXpOVm9YCkRUSXpNRGt4TVRFMk1UVXpOVm93V0RFTE1Ba0dBMVVFQmhNQ1ZWTXhDekFKQmdOVkJBZ01Ba05CTVNFd0h3WUQKVlFRS0RCaEpiblJsY201bGRDQlhhV1JuYVhSeklGQjBlU0JNZEdReEdUQVhCZ05WQkFNTUVHTmhabVV1WlhoaApiWEJzWlM1amIyMHdnZ0VpTUEwR0NTcUdTSWIzRFFFQkFRVUFBNElCRHdBd2dnRUtBb0lCQVFDcDZLbjdzeTgxCnAwanVKL2N5ayt2Q0FtbHNmanRGTTJtdVpOSzBLdGVjcUcyZmpXUWI1NXhRMVlGQTJYT1N3SEFZdlNkd0kyaloKcnVXOHFYWENMMnJiNENaQ0Z4d3BWRUNyY3hkam0zdGVWaVJYVnNZSW1tSkhQUFN5UWdwaW9iczl4N0RsTGM2SQpCQTBaalVPeWwwUHFHOVNKZXhNVjczV0lJYTVyRFZTRjJyNGtTa2JBajREY2o3TFhlRmxWWEgySTVYd1hDcHRDCm42N0pDZzQyZitrOHdnemNSVnA4WFprWldaVmp3cTlSVUtEWG1GQjJZeU4xWEVXZFowZXdSdUtZVUpsc202OTIKc2tPcktRajB2a29QbjQxRUUvK1RhVkVwcUxUUm9VWTNyemc3RGtkemZkQml6Rk8yZHNQTkZ4MkNXMGpYa05MdgpLbzI1Q1pyT2hYQUhBZ01CQUFFd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFLSEZDY3lPalp2b0hzd1VCTWRMClJkSEliMzgzcFdGeW5acS9MdVVvdnNWQTU4QjBDZzdCRWZ5NXZXVlZycTVSSWt2NGxaODFOMjl4MjFkMUpINnIKalNuUXgrRFhDTy9USkVWNWxTQ1VwSUd6RVVZYVVQZ1J5anNNL05VZENKOHVIVmhaSitTNkZBK0NuT0Q5cm4yaQpaQmVQQ0k1ckh3RVh3bm5sOHl3aWozdnZRNXpISXV5QmdsV3IvUXl1aTlmalBwd1dVdlVtNG52NVNNRzl6Q1Y3ClBwdXd2dWF0cWpPMTIwOEJqZkUvY1pISWc4SHc5bXZXOXg5QytJUU1JTURFN2IvZzZPY0s3TEdUTHdsR
nh2QTgKN1dqRWVxdW5heUlwaE1oS1JYVmYxTjM0OWVOOThFejM4Zk9USFRQYmRKakZBL1BjQytHeW1lK2lHdDVPUWRGaAp5UkU9Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K tls.key: LS0tLS1CRUdJTiBSU0ZOYWRJN2lmM01wUHJ3Z0pwYkg0N1JUTnBybVRTdENyWG5LaHRuNDFrCkcrZWNVTldCUU5semtzQndHTDBuY0NObzJhN2x2S2wxd2k5cTIrQW1RaGNjS1ZSQXEzTVhZNXQ3WGxZa1YxYkcKQ0pwaVJ6ejBza0lLWXFHN1BjZXc1UzNPaUFRTkdZMURzcGRENmh2VWlYc1RGZTkxaUNHdWF3MVVoZHErSkVwRwp3SStBM0kreTEzaFpWVng5aU9WOEZ3cWJRcCt1eVFvT05uL3BQTUlNM0VWYWZGMlpHVm1WWThLdlVWQ2cxNWhRCmRtTWpkVnhGbldkSHNFYmltRkNaYkp1dmRySkRxeWtJOUw1S0Q1K05SQlAvazJsUkthaTAwYUZHTjY4NE93NUgKYzMzUVlzeFR0bmJEelJjZGdsdEkxNURTN3lxTnVRbWF6b1Z3QndJREFRQUJBb0lCQVFDUFNkU1luUXRTUHlxbApGZlZGcFRPc29PWVJoZjhzSStpYkZ4SU91UmF1V2VoaEp4ZG01Uk9ScEF6bUNMeUw1VmhqdEptZTIyM2dMcncyCk45OUVqVUtiL1ZPbVp1RHNCYzZvQ0Y2UU5SNThkejhjbk9SVGV3Y290c0pSMXBuMWhobG5SNUhxSkpCSmFzazEKWkVuVVFmY1hackw5NGxvOUpIM0UrVXFqbzFGRnM4eHhFOHdvUEJxalpzVjdwUlVaZ0MzTGh4bndMU0V4eUZvNApjeGI5U09HNU9tQUpvelN0Rm9RMkdKT2VzOHJKNXFmZHZ5dGdnOXhiTGFRTC94MGtwUTYyQm9GTUJEZHFPZVBXCktmUDV6WjYvMDcvdnBqNDh5QTFRMzJQem9idWJzQkxkM0tjbjMyamZtMUU3cHJ0V2wrSmVPRmlPem5CUUZKYk4KNHFQVlJ6NWhBb0dCQU50V3l4aE5DU0x1NFArWGdLeWNrbGpKNkY1NjY4Zk5qNUN6Z0ZScUowOXpuMFRsc05ybwpGVExaY3hEcW5SM0hQWU00MkpFUmgySi9xREZaeW5SUW8zY2czb2VpdlVkQlZHWTgrRkkxVzBxZHViL0w5K3l1CmVkT1pUUTVYbUdHcDZyNmpleHltY0ppbS9Pc0IzWm5ZT3BPcmxEN1NQbUJ2ek5MazRNRjZneGJYQW9HQkFNWk8KMHA2SGJCbWNQMHRqRlhmY0tFNzdJbUxtMHNBRzR1SG9VeDBlUGovMnFyblRuT0JCTkU0TXZnRHVUSnp5K2NhVQprOFJxbWRIQ2JIelRlNmZ6WXEvOWl0OHNaNzdLVk4xcWtiSWN1YytSVHhBOW5OaDFUanNSbmU3NFowajFGQ0xrCmhIY3FIMHJpN1BZU0tIVEU4RnZGQ3haWWRidUI4NENtWmlodnhicFJBb0dBSWJqcWFNWVBUWXVrbENkYTVTNzkKWVNGSjFKelplMUtqYS8vdER3MXpGY2dWQ0thMzFqQXdjaXowZi9sU1JxM0hTMUdHR21lemhQVlRpcUxmZVpxYwpSMGlLYmhnYk9jVlZrSkozSzB5QXlLd1BUdW14S0haNnpJbVpTMGMwYW0rUlk5WUdxNVQ3WXJ6cHpjZnZwaU9VCmZmZTNSeUZUN2NmQ21mb09oREN0enVrQ2dZQjMwb0xDMVJMRk9ycW40M3ZDUzUxemM1em9ZNDR1QnpzcHd3WU4KVHd2UC9FeFdNZjNWSnJEakJDSCtULzZzeXNlUGJKRUltbHpNK0l3eXRGcEFOZmlJWEV0LzQ4WGY2ME54OGdXTQp1SHl4Wlp4L05LdER3MFY4dlgxUE9ucTJBNWVpS2ErOGpSQVJ
ZS0pMWU5kZkR1d29seHZHNmJaaGtQaS80RXRUCjNZMThzUUtCZ0h0S2JrKzdsTkpWZXN3WEU1Y1VHNkVEVXNEZS8yVWE3ZlhwN0ZjanFCRW9hcDFMU3crNlRYcDAKWmdybUtFOEFSek00NytFSkhVdmlpcS9udXBFMTVnMGtKVzNzeWhwVTl6WkxPN2x0QjBLSWtPOVpSY21Vam84UQpjcExsSE1BcWJMSjhXWUdKQ2toaVd4eWFsNmhZVHlXWTRjVmtDMHh0VGwvaFVFOUllTktvCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

nginx@nginx-ingress-6f7d9bdb87-twhxg:/etc/nginx/conf.d$ cat default-cafe-ingress.conf | grep certificate ssl_certificate /etc/nginx/secrets/default-cafe-secret; ssl_certificate_key /etc/nginx/secrets/default-cafe-secret; nginx@nginx-ingress-6f7d9bdb87-twhxg:/etc/nginx/conf.d$
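Both `ssl_certificate` and `ssl_certificate_key` point at the same file, `/etc/nginx/secrets/default-cafe-secret`, named `<namespace>-<secret name>`. This suggests the file holds the certificate and the key concatenated as PEM, which NGINX accepts when both directives name the same file. The Python sketch below builds such a blob from a `kubernetes.io/tls` Secret's base64 `data`. The function names and the cert-then-key order are assumptions, and because the secret in this post is truncated, the usage uses placeholder PEM text.

```python
import base64


def secret_file_name(namespace, name):
    """File name pattern observed above: <namespace>-<secret name>."""
    return f"{namespace}-{name}"


def tls_secret_to_pem(data):
    """Decode a kubernetes.io/tls Secret's `data` into one PEM blob (certificate, then key)."""
    cert = base64.b64decode(data["tls.crt"]).decode("ascii")
    key = base64.b64decode(data["tls.key"]).decode("ascii")
    if not cert.endswith("\n"):
        cert += "\n"  # keep the key's BEGIN line on its own line
    return cert + key


# Placeholder PEM text, not a real certificate or key.
fake_cert = "-----BEGIN CERTIFICATE-----\nAAAA\n-----END CERTIFICATE-----"
fake_key = "-----BEGIN RSA PRIVATE KEY-----\nBBBB\n-----END RSA PRIVATE KEY-----\n"
data = {
    "tls.crt": base64.b64encode(fake_cert.encode()).decode(),
    "tls.key": base64.b64encode(fake_key.encode()).decode(),
}
pem = tls_secret_to_pem(data)
```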

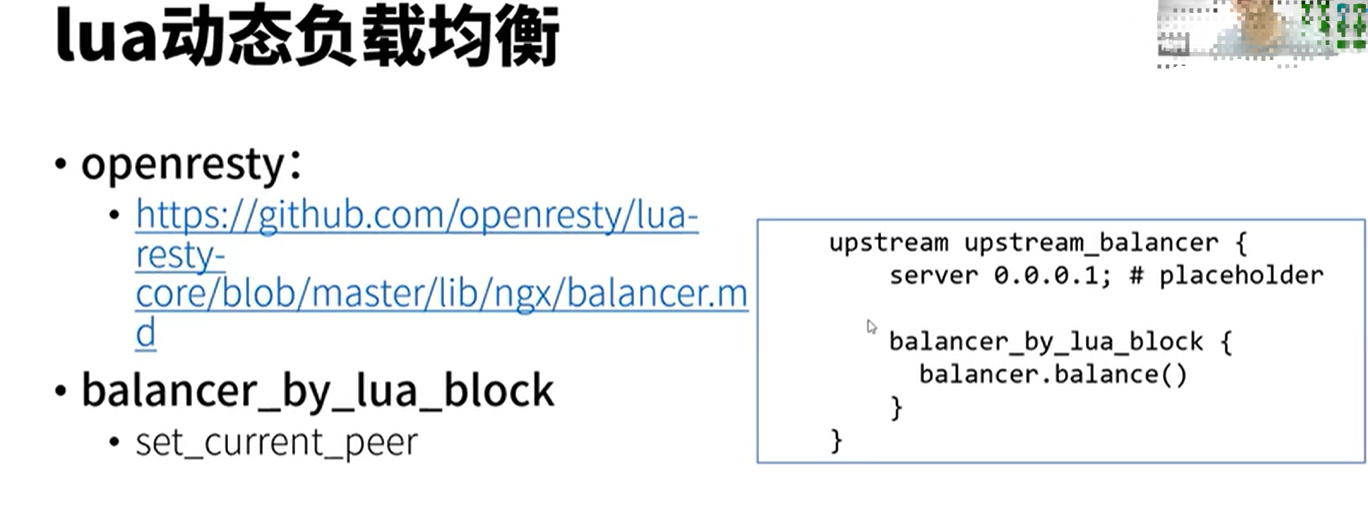

bash-5.1$ grep 'M.balance' balancer.lua function _M.balance() bash-5.1$ pwd /etc/nginx/lua bash-5.1$

function _M.balance() local balancer = get_balancer() if not balancer then return end local peer = balancer:balance() if not peer then ngx.log(ngx.WARN, "no peer was returned, balancer: " .. balancer.name) return end if peer:match(PROHIBITED_PEER_PATTERN) then ngx.log(ngx.ERR, "attempted to proxy to self, balancer: ", balancer.name, ", peer: ", peer) return end ngx_balancer.set_more_tries(1) local ok, err = ngx_balancer.set_current_peer(peer) if not ok then ngx.log(ngx.ERR, "error while setting current upstream peer ", peer, ": ", err) end end
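`_M.balance()` gets the balancer for the current backend, asks it for a peer, refuses a peer matching `PROHIBITED_PEER_PATTERN` (the controller proxying to itself), allows one more try, and finally calls `set_current_peer`. The Python sketch below mirrors that control flow for illustration only. The round-robin balancer is a stand-in, and `PROHIBITED_PEER_PATTERN` here is a hypothetical value (a loopback address on a reserved local port), since the real pattern is not shown in this post. Python logging stands in for `ngx.log`.

```python
import itertools
import logging
import re

log = logging.getLogger("balancer-sketch")

# Hypothetical stand-in for PROHIBITED_PEER_PATTERN in balancer.lua.
PROHIBITED_PEER_PATTERN = re.compile(r"^127\.\d+\.\d+\.\d+:10246$")


class RoundRobin:
    """Stand-in balancer that cycles through 'ip:port' endpoints."""

    name = "round_robin"

    def __init__(self, endpoints):
        self._cycle = itertools.cycle(endpoints) if endpoints else None

    def balance(self):
        return next(self._cycle) if self._cycle else None


def balance(balancer):
    """Python mirror of _M.balance(): return the chosen peer, or None on failure."""
    if balancer is None:
        return None
    peer = balancer.balance()
    if not peer:
        log.warning("no peer was returned, balancer: %s", balancer.name)
        return None
    if PROHIBITED_PEER_PATTERN.match(peer):
        log.error("attempted to proxy to self, balancer: %s, peer: %s", balancer.name, peer)
        return None
    # The Lua code calls ngx_balancer.set_more_tries(1) and then set_current_peer(peer) here.
    return peer


tea = RoundRobin(["10.244.41.15:8080", "10.244.41.17:8080", "10.244.41.18:8080"])
```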

root@ubuntu:~/nginx_ingress/ingress-nginx/rootfs# find ./ -name nginx.tmpl ./etc/nginx/template/nginx.tmpl root@ubuntu:~/nginx_ingress/ingress-nginx/rootfs#

bash-5.1$ ./dbg backends all [ { "name": "default-apache-svc-80", "service": { "metadata": { "creationTimestamp": null }, "spec": { "ports": [ { "protocol": "TCP", "port": 80, "targetPort": 80 } ], "selector": { "app": "apache-app" }, "clusterIP": "10.111.63.105", "type": "ClusterIP", "sessionAffinity": "None" }, "status": { "loadBalancer": {} } }, "port": 80, "sslPassthrough": false, "endpoints": [ { "address": "10.244.243.197", "port": "80" }, { "address": "10.244.41.61", "port": "80" } ], "sessionAffinityConfig": { "name": "", "mode": "", "cookieSessionAffinity": { "name": "" } }, "upstreamHashByConfig": { "upstream-hash-by-subset-size": 3 }, "noServer": false, "trafficShapingPolicy": { "weight": 0, "header": "", "headerValue": "", "headerPattern": "", "cookie": "" } }, { "name": "default-coffee-svc-80", "service": { "metadata": { "creationTimestamp": null }, "spec": { "ports": [ { "name": "http", "protocol": "TCP", "port": 80, "targetPort": 8080 } ], "selector": { "app": "coffee" }, "clusterIP": "10.101.87.73", "type": "ClusterIP", "sessionAffinity": "None" }, "status": { "loadBalancer": {} } }, "port": 80, "sslPassthrough": false, "endpoints": [ { "address": "10.244.243.252", "port": "8080" }, { "address": "10.244.41.19", "port": "8080" } ], "sessionAffinityConfig": { "name": "", "mode": "", "cookieSessionAffinity": { "name": "" } }, "upstreamHashByConfig": { "upstream-hash-by-subset-size": 3 }, "noServer": false, "trafficShapingPolicy": { "weight": 0, "header": "", "headerValue": "", "headerPattern": "", "cookie": "" } }, { "name": "default-nginx-svc-80", "service": { "metadata": { "creationTimestamp": null }, "spec": { "ports": [ { "protocol": "TCP", "port": 80, "targetPort": 80 } ], "selector": { "app": "nginx-app" }, "clusterIP": "10.103.182.145", "type": "ClusterIP", "sessionAffinity": "None" }, "status": { "loadBalancer": {} } }, "port": 80, "sslPassthrough": false, "endpoints": [ { "address": "10.244.243.195", "port": "80" }, { "address": 
"10.244.41.58", "port": "80" } ], "sessionAffinityConfig": { "name": "", "mode": "", "cookieSessionAffinity": { "name": "" } }, "upstreamHashByConfig": { "upstream-hash-by-subset-size": 3 }, "noServer": false, "trafficShapingPolicy": { "weight": 0, "header": "", "headerValue": "", "headerPattern": "", "cookie": "" } }, { "name": "default-tea-svc-80", "service": { "metadata": { "creationTimestamp": null }, "spec": { "ports": [ { "name": "http", "protocol": "TCP", "port": 80, "targetPort": 8080 } ], "selector": { "app": "tea" }, "clusterIP": "10.103.138.254", "type": "ClusterIP", "sessionAffinity": "None" }, "status": { "loadBalancer": {} } }, "port": 80, "sslPassthrough": false, "endpoints": [ { "address": "10.244.41.15", "port": "8080" }, { "address": "10.244.41.17", "port": "8080" }, { "address": "10.244.41.18", "port": "8080" } ], "sessionAffinityConfig": { "name": "", "mode": "", "cookieSessionAffinity": { "name": "" } }, "upstreamHashByConfig": { "upstream-hash-by-subset-size": 3 }, "noServer": false, "trafficShapingPolicy": { "weight": 0, "header": "", "headerValue": "", "headerPattern": "", "cookie": "" } }, { "name": "default-web2-8097", "service": { "metadata": { "creationTimestamp": null }, "spec": { "ports": [ { "protocol": "TCP", "port": 8097, "targetPort": 80 } ], "selector": { "run": "web2" }, "clusterIP": "10.99.87.66", "type": "ClusterIP", "sessionAffinity": "None" }, "status": { "loadBalancer": {} } }, "port": 8097, "sslPassthrough": false, "endpoints": [ { "address": "10.244.41.59", "port": "80" } ], "sessionAffinityConfig": { "name": "", "mode": "", "cookieSessionAffinity": { "name": "" } }, "upstreamHashByConfig": { "upstream-hash-by-subset-size": 3 }, "noServer": false, "trafficShapingPolicy": { "weight": 0, "header": "", "headerValue": "", "headerPattern": "", "cookie": "" } }, { "name": "default-web3-8097", "service": { "metadata": { "creationTimestamp": null }, "spec": { "ports": [ { "protocol": "TCP", "port": 8097, "targetPort": 80 } ], 
"selector": { "run": "web3" }, "clusterIP": "10.107.70.171", "type": "ClusterIP", "sessionAffinity": "None" }, "status": { "loadBalancer": {} } }, "port": 8097, "sslPassthrough": false, "endpoints": [ { "address": "10.244.41.55", "port": "80" } ], "sessionAffinityConfig": { "name": "", "mode": "", "cookieSessionAffinity": { "name": "" } }, "upstreamHashByConfig": { "upstream-hash-by-subset-size": 3 }, "noServer": false, "trafficShapingPolicy": { "weight": 0, "header": "", "headerValue": "", "headerPattern": "", "cookie": "" } }, { "name": "upstream-default-backend", "port": 0, "sslPassthrough": false, "endpoints": [ { "address": "127.0.0.1", "port": "8181" } ], "sessionAffinityConfig": { "name": "", "mode": "", "cookieSessionAffinity": { "name": "" } }, "upstreamHashByConfig": {}, "noServer": false, "trafficShapingPolicy": { "weight": 0, "header": "", "headerValue": "", "headerPattern": "", "cookie": "" } } ]
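`./dbg backends all` dumps the controller's backend list as JSON. The short Python sketch below reduces that JSON to `backend name -> [ip:port]`, which makes the comparison with `kubectl get svc` easy: `default-tea-svc-80` lists Pod IPs (10.244.41.15/17/18:8080), not the Service ClusterIP 10.103.138.254. It assumes the JSON has been captured into a string; the function name is hypothetical.

```python
import json


def endpoints_by_backend(dbg_json):
    """Map each backend name to its endpoint list as 'address:port' strings."""
    backends = json.loads(dbg_json)
    return {
        b["name"]: [f'{e["address"]}:{e["port"]}' for e in b.get("endpoints", [])]
        for b in backends
    }


# Trimmed excerpt of the output above.
sample = (
    '[{"name": "default-tea-svc-80", "service": {"spec": {"clusterIP": "10.103.138.254"}},'
    ' "port": 80, "endpoints": [{"address": "10.244.41.15", "port": "8080"},'
    ' {"address": "10.244.41.17", "port": "8080"}]},'
    ' {"name": "upstream-default-backend", "port": 0,'
    ' "endpoints": [{"address": "127.0.0.1", "port": "8181"}]}]'
)
eps = endpoints_by_backend(sample)
```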

root@ubuntu:~# kubectl get svc -A -o wide NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR default apache-svc ClusterIP 10.111.63.105 <none> 80/TCP 24d app=apache-app default coffee-svc ClusterIP 10.101.87.73 <none> 80/TCP 4d6h app=coffee default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 60d <none> default nginx-svc ClusterIP 10.103.182.145 <none> 80/TCP 24d app=nginx-app default tea-svc ClusterIP 10.103.138.254 <none> 80/TCP 4d6h app=tea default web2 ClusterIP 10.99.87.66 <none> 8097/TCP 24d run=web2 default web3 ClusterIP 10.107.70.171 <none> 8097/TCP 24d run=web3 ingress-nginx ingress-nginx-controller NodePort 10.105.214.187 <none> 80:30383/TCP,443:30972/TCP 4d app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx ingress-nginx ingress-nginx-controller-admission ClusterIP 10.106.20.178 <none> 443/TCP 4d app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 60d k8s-app=kube-dns ns-calico1 nodeport-svc NodePort 10.109.58.6 <none> 3000:30090/TCP 38d app=calico1-nginx root@ubuntu:~# kubectl describe svc tea-svc Name: tea-svc Namespace: default Labels: <none> Annotations: <none> Selector: app=tea Type: ClusterIP IP: 10.103.138.254 Port: http 80/TCP TargetPort: 8080/TCP Endpoints: 10.244.41.15:8080,10.244.41.17:8080,10.244.41.18:8080 Session Affinity: None Events: <none> root@ubuntu:~#

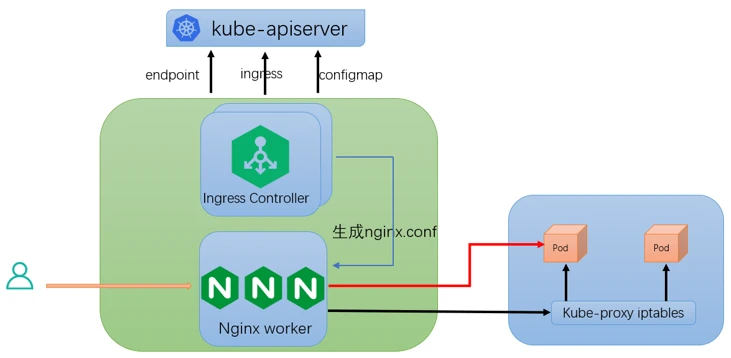

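The approach shown by the red arrow in the figure above: the NGINX upstream is set to Pod IPs. Because Pod IPs are not fixed, NGINX uses the ngx-lua module to update the upstream dynamically with the Pod IPs it observes in real time, and forwards HTTP requests directly to the application Pods. This is currently the default approach in ingress-nginx; request traffic does not pass through the iptables rules generated by kube-proxy.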
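The idea behind this approach is that endpoint changes update in-memory state instead of rewriting the `upstream` block and reloading NGINX. The Python sketch below illustrates it with a registry of per-backend balancers that is synced with fresh endpoint lists; a balancer is rebuilt only when its endpoint set actually changes. All names are hypothetical, and in ingress-nginx this work happens in Lua inside NGINX, not in Python.

```python
import itertools


class Backend:
    """Round-robin over one backend's Pod endpoints."""

    def __init__(self, endpoints):
        self.endpoints = list(endpoints)
        self._rr = itertools.cycle(self.endpoints)

    def next_peer(self):
        return next(self._rr)


class DynamicUpstreams:
    """Sketch: backend name -> balancer, replaced in memory when endpoints change (no reload)."""

    def __init__(self):
        self.backends = {}

    def sync(self, name, endpoints):
        """Apply an endpoints update; return True if the in-memory state changed."""
        current = self.backends.get(name)
        if current is not None and sorted(current.endpoints) == sorted(endpoints):
            return False  # same Pods: keep the existing balancer and its position
        if not endpoints:
            return self.backends.pop(name, None) is not None
        self.backends[name] = Backend(endpoints)
        return True


ups = DynamicUpstreams()
ups.sync("default-tea-svc-80", ["10.244.41.15:8080", "10.244.41.17:8080"])
```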

https://docs.oracle.com/en-us/iaas/Content/ContEng/Tasks/contengsettingupingresscontroller.htm

https://www.digitalocean.com/community/tutorials/how-to-set-up-an-nginx-ingress-on-digitalocean-kubernetes-using-helm

Using NGINX Ingress V1.7 in a K8S cluster

Multiple Ingress controllers

Multiple Ingress controllers (multi-Ingress deployment)

浙公网安备 33010602011771号
