Hive on Spark errors - 李孟 - Sina Blog

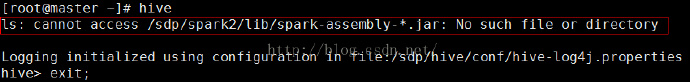

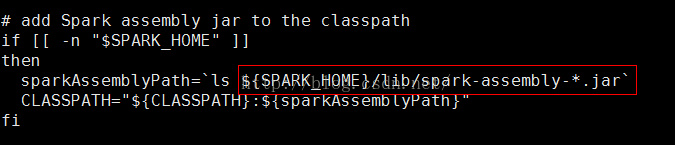

1. Since Spark 2.0.0 there is no longer an assembly jar. Instead, the jars directory contains many small jar files.

Change the directory in which the jars are looked up so that it points at jars.
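For context, Hive releases of this period typically locate Spark through a spark-assembly-*.jar under $SPARK_HOME/lib, which Spark 2.x no longer ships. The Hive on Spark getting-started guide instead names scala-library, spark-core and spark-network-common from $SPARK_HOME/jars for YARN mode. The minimal sketch below prints whichever set is present. The function name spark_jars_for_hive is hypothetical, and the Spark 2.x jar list comes from that guide, not from this post.

```shell
#!/usr/bin/env bash
# Sketch: list the Spark jars that Hive should put on its classpath.
# Spark 1.x: one $SPARK_HOME/lib/spark-assembly-*.jar
# Spark 2.x: many small jars under $SPARK_HOME/jars (no assembly jar)
spark_jars_for_hive() {
  local spark_home="$1" f
  # Prefer the Spark 1.x assembly jar if it exists
  for f in "$spark_home"/lib/spark-assembly-*.jar; do
    if [ -e "$f" ]; then
      echo "$f"
      return 0
    fi
  done
  # Otherwise list the Spark 2.x jars named in the Hive on Spark guide
  for f in "$spark_home"/jars/scala-library-*.jar \
           "$spark_home"/jars/spark-core_*.jar \
           "$spark_home"/jars/spark-network-common_*.jar; do
    if [ -e "$f" ]; then
      echo "$f"
    fi
  done
  return 0
}

# Example (paths are assumptions): symlink the selected jars into Hive's lib
#   for jar in $(spark_jars_for_hive "$SPARK_HOME"); do
#     ln -s "$jar" "$HIVE_HOME/lib/"
#   done
```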

2. Exception: HiveConf of name hive.enable.spark.execution.engine does not exist

In hive-site.xml, hive.enable.spark.execution.engine is obsolete; just delete that property.
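To confirm the obsolete property is really gone, a plain text search is enough. This is a minimal sketch; the function name has_obsolete_spark_property is hypothetical, and it only detects the property name (including in comments). Removing the surrounding property element is still done by hand.

```shell
#!/usr/bin/env bash
# Sketch: succeed (exit 0) if the given hive-site.xml still mentions
# the obsolete hive.enable.spark.execution.engine property.
has_obsolete_spark_property() {
  # -F: fixed-string match, so the dots are not regex wildcards
  grep -qF 'hive.enable.spark.execution.engine' "$1"
}

# Example (path is an assumption):
#   has_obsolete_spark_property "$HIVE_HOME/conf/hive-site.xml" && echo "still present"
```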

3. Exception

Failed to execute spark task, with exception 'org.apache.hadoop.hive.ql.metadata.HiveException(Failed to create spark client.)' FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

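The Spark and Hive versions do not match, so Spark has to be recompiled. Here I used the stable Hive release 2.0.1, whose pom.xml requires Spark 1.5.0. The Hive and Spark versions must correspond to each other.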
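To see which Spark version a Hive source tree expects, the spark.version property in Hive's top-level pom.xml can be read directly. A minimal sketch: the function name hive_spark_version is hypothetical, and it assumes the property sits on a single line, which is the usual Maven layout.

```shell
#!/usr/bin/env bash
# Sketch: print the value of <spark.version> from a Hive pom.xml.
hive_spark_version() {
  # ':' is the sed delimiter so the '/' in the closing tag needs no escaping
  sed -n 's:.*<spark\.version>\([^<]*\)</spark\.version>.*:\1:p' "$1" | head -n 1
}

# Example (path is an assumption):
#   hive_spark_version ~/src/hive/pom.xml
```

Comparing that value with the Spark release being compiled shows whether the two line up before the build is started.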

After recompiling, the following error was reported:

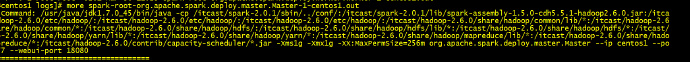

Exception in thread "main" java.lang.NoClassDefFoundError: org/slf4j/impl/StaticLoggerBinder

Add the following to spark-env.sh:

export SPARK_DIST_CLASSPATH=$(hadoop classpath)
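StaticLoggerBinder comes from an SLF4J 1.x binding jar such as slf4j-log4j12, so the classpath Spark receives must contain one. Since the master recovered here but the slaves did not, a check like the minimal sketch below is worth running on each node. The function name is hypothetical, the list of binding jars is an assumption, and hadoop classpath --glob (which expands wildcard entries into individual jars) exists only in newer Hadoop 2.x releases.

```shell
#!/usr/bin/env bash
# Sketch: succeed if a colon-separated classpath contains an SLF4J binding jar.
has_slf4j_binding() {
  # One entry per line, then look for a known binding jar name
  printf '%s\n' "$1" | tr ':' '\n' \
    | grep -Eq '(slf4j-(log4j12|simple|jdk14)|logback-classic)-[^/]*\.jar$'
}

# Example on a node:
#   has_slf4j_binding "$(hadoop classpath --glob)" && echo "binding found"
```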

The Spark master could now start, but the slaves still failed with the error above.

Switch the build to Scala 2.11 and rebuild:

./dev/change-scala-version.sh 2.11

mvn -Pyarn -Phadoop-2.4 -Dscala-2.11 -DskipTests clean package

4. Exception

4] shutting down ActorSystem [sparkMaster]

java.lang.NoClassDefFoundError: com/fasterxml/jackson/databind/Module

Delete these jars from hive/lib:

jackson-annotations-2.4.0.jar

jackson-core-2.4.2.jar

jackson-databind-2.4.2.jar

Then, from inside hive/lib, copy in the 2.2.3 versions that ship with Hadoop:

cp $HADOOP_HOME/share/hadoop/tools/lib/jackson-annotations-2.2.3.jar ./

cp $HADOOP_HOME/share/hadoop/tools/lib/jackson-core-2.2.3.jar ./

cp $HADOOP_HOME/share/hadoop/tools/lib/jackson-databind-2.2.3.jar ./

Check the Spark runtime logs to find where the jars are loaded from, and add the jars above there as well.
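The removal and copy steps above can be bundled into one function so they are repeated identically on every node. A minimal sketch: the function name swap_jackson_jars is hypothetical, and the directories are passed in rather than assumed.

```shell
#!/usr/bin/env bash
# Sketch: replace Hive's Jackson 2.4.x jars with the 2.2.3 jars from Hadoop.
# $1 = Hive lib directory, $2 = Hadoop tools lib directory
swap_jackson_jars() {
  local hive_lib="$1" hadoop_tools_lib="$2" name
  # Remove the newer Jackson jars bundled with Hive
  rm -f "$hive_lib"/jackson-annotations-2.4.0.jar \
        "$hive_lib"/jackson-core-2.4.2.jar \
        "$hive_lib"/jackson-databind-2.4.2.jar
  # Copy the Hadoop-provided 2.2.3 jars in their place; stop on the first failure
  for name in jackson-annotations jackson-core jackson-databind; do
    cp "$hadoop_tools_lib/$name-2.2.3.jar" "$hive_lib/" || return 1
  done
}

# Example:
#   swap_jackson_jars "$HIVE_HOME/lib" "$HADOOP_HOME/share/hadoop/tools/lib"
```

The same three 2.2.3 jars can then be copied into whichever directory the Spark log shows jars being loaded from.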

5. Exception

java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate root

In Hadoop, add the following properties to core-site.xml:

hadoop.proxyuser.root.groups

root

Allows the superuser root to impersonate any member of the group root.

hadoop.proxyuser.root.hosts

*

Allows the superuser root to connect from any host to impersonate a user.
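Written out as XML, the two properties look like the output of the minimal sketch below. The function name proxyuser_properties is hypothetical; it only prints the elements, which still have to be pasted inside the configuration element of core-site.xml.

```shell
#!/usr/bin/env bash
# Sketch: print the two proxy-user properties for core-site.xml.
# $1 = superuser, $2 = groups it may impersonate, $3 = hosts it may connect from ("*" = any)
proxyuser_properties() {
  local user="$1" groups="$2" hosts="$3"
  # Unquoted EOF: variables expand, but "*" is not globbed inside a here-document
  cat <<EOF
<property>
  <name>hadoop.proxyuser.${user}.groups</name>
  <value>${groups}</value>
</property>
<property>
  <name>hadoop.proxyuser.${user}.hosts</name>
  <value>${hosts}</value>
</property>
EOF
}

# Example: proxyuser_properties root root '*'
# Afterwards, reload the settings (or restart HDFS/YARN):
#   hdfs dfsadmin -refreshSuperUserGroupsConfiguration
#   yarn rmadmin -refreshSuperUserGroupsConfiguration
```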

6. Exception

MetaException(message:Hive Schema version 2.1.0 does not match metastore's schema version 2.0.0 Metastore is not upgraded or corrupt)

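The corresponding Hadoop or Hive version is too high: here Hive expects schema version 2.1.0, but the metastore was created with schema version 2.0.0.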
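The two versions can be pulled straight out of the exception text and compared. This is a minimal sketch with hypothetical function names; it relies on GNU sort -V for version ordering.

```shell
#!/usr/bin/env bash
# Sketch: extract and compare the schema versions from the MetaException text.

# Reads the message on stdin; prints "<hive version> <metastore version>"
parse_schema_versions() {
  sed -n "s/.*Hive Schema version \([0-9.]*\) does not match metastore's schema version \([0-9.]*\).*/\1 \2/p"
}

# Prints match, hive-newer or hive-older
schema_relation() {
  local hive_ver="$1" metastore_ver="$2" newest
  if [ "$hive_ver" = "$metastore_ver" ]; then
    echo "match"
    return
  fi
  # The larger of the two versions sorts last under sort -V
  newest=$(printf '%s\n%s\n' "$hive_ver" "$metastore_ver" | sort -V | tail -n 1)
  if [ "$newest" = "$hive_ver" ]; then
    echo "hive-newer"
  else
    echo "hive-older"
  fi
}
```

For the message above this yields 2.1.0 and 2.0.0, so hive-newer. Besides switching to a Hive release that matches the metastore, Hive's schematool can upgrade the schema in place, e.g. schematool -dbType <db> -upgradeSchemaFrom 2.0.0, where <db> is a placeholder for the metastore database type (mysql, postgres, ...); back up the metastore database first.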

Zhejiang Public Network Security Registration No. 33010602011771