Baidu Baike Entry Crawling

Traverse as many Baike entries as possible by requesting https://baike.baidu.com/view/? with the ? replaced by a number.
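Both scripts below give each worker process an equal slice of the id space with `total // process_num`. That silently drops the last `total % process_num` ids whenever the division is not exact (the values used, 20000000/5 and 30000000/80, happen to divide evenly). Below is a minimal sketch of a split that hands the remainder to the last worker. `split_ranges` is a hypothetical helper, not part of the original scripts.

```python
def split_ranges(total, workers):
    """Split ids [0, total) into `workers` contiguous (start, end) ranges.

    Hypothetical helper: the last range absorbs the remainder, so no id
    is skipped when total is not divisible by workers.
    """
    chunk = total // workers
    ranges = []
    for i in range(workers):
        start = chunk * i
        end = total if i == workers - 1 else chunk * (i + 1)
        ranges.append((start, end))
    return ranges


base_url = 'https://baike.baidu.com/view/'
print(split_ranges(10, 3))  # [(0, 3), (3, 6), (6, 10)]
for start, end in split_ranges(10, 3):
    # Each worker would request base_url + str(i) for i in range(start, end)
    print(base_url + str(start), '...', base_url + str(end - 1))
```

Each range can then be passed to `pool.apply_async` exactly as the scripts do with their fixed-size slices.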

```python
# -*- coding: utf-8 -*-
# @time : 2019/7/1 14:56
import random
from multiprocessing import Pool

import pymysql
import requests

'''
Traverse entries in parallel by building URLs of the form
"https://baike.baidu.com/view/" + number.
'''

# Fill in your own MySQL settings
mysql_ip = ''
mysql_port = 3306  # left blank in the original; 3306 is MySQL's default port
mysql_user = ''
mysql_passwd = ''
mysql_db = ''

process_num = 5

baseUrl = 'https://baike.baidu.com/view/'
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.109 Safari/537.36',
    'Referer': 'https://www.baidu.com/',
    # 'br' dropped: requests only decodes Brotli when the brotli package is installed
    'Accept-Encoding': 'gzip, deflate',
}
ip_pool = [
    '119.98.44.192:8118',
    '111.198.219.151:8118',
    '101.86.86.101:8118',
]


def ip_proxy():
    # The target URLs are https, so the 'https' key must be set as well;
    # with only an 'http' key the proxy was never used.
    proxy_ip = 'http://' + random.choice(ip_pool)
    return {'http': proxy_ip, 'https': proxy_ip}


def spider(start_index, end_index):
    # Each worker opens its own connection: a MySQL connection must not be
    # shared between forked processes.
    connection = pymysql.connect(host=mysql_ip, port=mysql_port, user=mysql_user,
                                 password=mysql_passwd, database=mysql_db, charset='utf8mb4')
    cursor = connection.cursor()
    sql = 'insert into baikebaiku (id, url, html_content) values (%s, %s, %s)'
    with open("filedItemUrl.txt", "a+", encoding="utf8") as failed_writer:
        for i in range(start_index, end_index):
            try:
                response = requests.get(baseUrl + str(i), proxies=ip_proxy(), headers=headers, timeout=1)
                # Nonexistent ids are redirected to the error page
                if 'error.html' in response.url:
                    continue
                url = requests.utils.unquote(response.url)
                # Decode the raw bytes as UTF-8 instead of relying on requests' charset guess
                html_content = response.content.decode('utf-8')
                cursor.execute(sql, (i, url, html_content))
                connection.commit()
                print("Item " + str(i) + " added to the database")
            except Exception as e:
                failed_writer.write(str(i) + '\n')
                failed_writer.flush()
                print(e.args)
    cursor.close()
    connection.close()


if __name__ == '__main__':
    pool = Pool(processes=process_num)
    one_process_task_num = 20000000 // process_num
    for i in range(process_num):
        pool.apply_async(spider, args=[one_process_task_num * i, one_process_task_num * (i + 1)])
    pool.close()
    pool.join()
```
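The first script originally decoded pages with `response.text.encode(encoding='ISO-8859-1').decode('utf8')`. That trick relies on requests falling back to ISO-8859-1 when a `text/*` response has no charset in its Content-Type header: Latin-1 maps every byte to exactly one code point, so re-encoding recovers the raw bytes. The sketch below uses plain strings (no network) to show both why it works and why it breaks when requests has already detected UTF-8 correctly. Decoding the raw bytes directly avoids depending on requests' guess either way.

```python
# Raw UTF-8 bytes, as they would arrive in response.content
raw = "马云".encode("utf-8")

# What response.text holds if requests falls back to ISO-8859-1: mojibake
guessed_text = raw.decode("ISO-8859-1")

# The trick: Latin-1 is byte-for-byte reversible, so this recovers the bytes
recovered = guessed_text.encode("ISO-8859-1").decode("utf-8")
print(recovered == "马云")  # True

# If the charset had been detected correctly, the same trick raises instead
try:
    "马云".encode("ISO-8859-1")
except UnicodeEncodeError:
    print("correctly decoded text cannot be re-encoded as Latin-1")

# Decoding the raw bytes directly works in both cases
print(raw.decode("utf-8"))  # 马云
```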

```python
# -*- coding: utf-8 -*-
# @time : 2019/7/1 14:56
import os
import random
import time
import urllib.parse
from multiprocessing import Pool

import requests

'''
Traverse entries in parallel by building URLs of the form
"https://baike.baidu.com/view/" + number, saving each page as an HTML file.
'''

BASE_DIR = os.path.join(os.path.dirname(os.path.abspath(__file__)), 'crawl_res')
idNum = 30000000
process_num = 80
sleep_time = 30

baseUrl = 'https://baike.baidu.com/view/'
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.109 Safari/537.36',
    'Referer': 'https://www.baidu.com/',
    # 'br' dropped: requests only decodes Brotli when the brotli package is installed
    'Accept-Encoding': 'gzip, deflate',
}
ip_pool = [
    '119.98.44.192:8118',
    '111.198.219.151:8118',
    '101.86.86.101:8118',
]


def ip_proxy():
    # Not used in this version; pass proxies=ip_proxy() to requests.get to enable it
    proxy_ip = 'http://' + random.choice(ip_pool)
    return {'http': proxy_ip, 'https': proxy_ip}


def record_margin(process_index, dir_index, first_id, last_id):
    # Record the id range stored in one folder: "<folder>\t<first id>\t<last id>"
    with open(str(process_index) + "_id_margin.txt", "a+", encoding="utf-8") as id_margin:
        id_margin.write(str(dir_index) + "\t" + str(first_id) + "\t" + str(last_id) + "\n")


def spider(start_index, end_index, process_index):
    # Per-process state
    count = 0
    dir_index = 0
    dir_max_files = 10000
    start_id = start_index
    failed_writer = open("failItemUrl.txt", "a+", encoding="utf8")
    error_id_writer = open("error_id.txt", "a+", encoding="utf8")
    for item_id in range(start_index, end_index):
        print(item_id)
        try:
            response = requests.get(baseUrl + str(item_id), headers=headers, timeout=1)
            if 'https://baike.baidu.com/error.html' in response.url:
                # This id does not exist
                error_id_writer.write(str(item_id) + '\n')
                error_id_writer.flush()
                continue
            # Record the item each id maps to, e.g. "16360\t马云/6252"
            url_split = response.url.split("?")[0].split("/")
            name = url_split[-2]
            unique_id = url_split[-1]
            if name == 'item':
                # URL without a disambiguation id: .../item/<name>
                name = unique_id
                unique_id = ""
            with open("id2item.txt", "a+", encoding="utf-8") as fin2:
                fin2.write(str(item_id) + "\t" + urllib.parse.unquote(name) + "/" + unique_id + '\n')
            dir_name = os.path.join(BASE_DIR, str(process_index), str(dir_index))
            os.makedirs(dir_name, exist_ok=True)
            filename = os.path.join(dir_name, str(item_id) + ".html")
            with open(filename, 'w', encoding="utf-8") as f:
                f.write(response.content.decode("utf-8"))
            count += 1
            # Keep 10000 HTML files per folder and record each folder's id range
            if count == dir_max_files:
                record_margin(process_index, dir_index, start_id, item_id)
                start_id = item_id + 1
                count = 0
                dir_index += 1
        except Exception as e:
            failed_writer.write(str(item_id) + '\n')
            failed_writer.flush()
            print(e)
            time.sleep(sleep_time)
    # Also record the last, partially filled folder
    if count > 0:
        record_margin(process_index, dir_index, start_id, end_index - 1)
    failed_writer.close()
    error_id_writer.close()


if __name__ == '__main__':
    pool = Pool(processes=process_num)
    one_process_task_num = idNum // process_num
    start = time.time()
    for i in range(process_num):
        pool.apply_async(spider, args=[one_process_task_num * i, one_process_task_num * (i + 1), i])
    pool.close()
    pool.join()
    end = time.time()
    print(str(idNum) + " ids traversed with " + str(process_num) + " processes in " + str(end - start) + " s")
```
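The `id2item.txt` bookkeeping above depends on the shape of the URL that `/view/<id>` redirects to: `/item/<name>/<id>` for ambiguous names and `/item/<name>` otherwise. A minimal sketch of that parsing as a standalone, testable function; `parse_item_url` is a hypothetical name and the example URLs are illustrative, assuming the two URL shapes the script handles.

```python
import urllib.parse


def parse_item_url(url):
    """Split a final Baike URL into (item name, disambiguation id).

    Hypothetical helper mirroring the id2item logic: handles
    /item/<name>/<id> and /item/<name>; the id is "" when absent.
    """
    parts = url.split("?")[0].split("/")
    name, unique_id = parts[-2], parts[-1]
    if name == "item":
        # No disambiguation id: the last path segment is the name itself
        name, unique_id = unique_id, ""
    return urllib.parse.unquote(name), unique_id


print(parse_item_url("https://baike.baidu.com/item/%E9%A9%AC%E4%BA%91/6252?fromtitle=x"))
# ('马云', '6252')
print(parse_item_url("https://baike.baidu.com/item/Python"))
# ('Python', '')
```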

The data collected this way may contain duplicates, and many entries are missing.
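One way to measure the duplication is to group the lines of `id2item.txt` (written by the second script as `<view id>\t<name>/<unique id>`) by item and keep the items reached from more than one view id. A minimal sketch; `find_duplicate_items` is a hypothetical helper and the sample lines are made up for illustration.

```python
from collections import defaultdict


def find_duplicate_items(lines):
    """Return {item: [view ids]} for items reached from more than one view id.

    Each line is assumed to look like "<view id>\t<name>/<unique id>".
    """
    ids_by_item = defaultdict(list)
    for line in lines:
        view_id, item = line.rstrip("\n").split("\t", 1)
        ids_by_item[item].append(int(view_id))
    return {item: ids for item, ids in ids_by_item.items() if len(ids) > 1}


# Made-up sample lines, not real crawl output
sample = ["16360\t马云/6252\n", "16361\t马云/6252\n", "20000\tPython/\n"]
print(find_duplicate_items(sample))  # {'马云/6252': [16360, 16361]}
```

On a real crawl the lines would come from `open("id2item.txt", encoding="utf-8")`.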

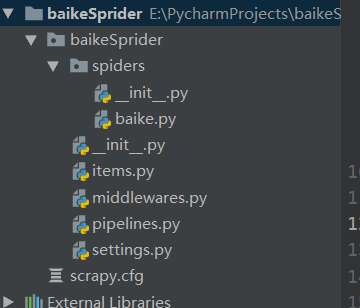

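So I changed the approach: ids are the primary source of pages, supplemented by the `a.href` links found on each page. This time the crawl is done with Scrapy.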
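The secondary half of that approach (following `/item/` links found on a fetched page while skipping ones already queued) can be sketched without Scrapy using the standard library's `html.parser`. This swaps in stdlib parsing for Scrapy's XPath selectors purely for illustration; `ItemLinkParser` and `next_urls` are hypothetical names.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ItemLinkParser(HTMLParser):
    """Collect absolute URLs of <a> tags whose href starts with /item/."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and href.startswith("/item/"):
            self.links.append(urljoin("https://baike.baidu.com", href))


def next_urls(html, seen):
    """Return item URLs not seen before, adding them to `seen`."""
    parser = ItemLinkParser()
    parser.feed(html)
    fresh = []
    for url in parser.links:
        if url not in seen:
            seen.add(url)
            fresh.append(url)
    return fresh


seen = set()
html = '<a href="/item/Python">P</a><a href="/help">h</a><a href="/item/Python">again</a>'
print(next_urls(html, seen))  # ['https://baike.baidu.com/item/Python']
```

In the Scrapy version below, the scheduler's built-in duplicate filter plays the role of `seen` for requests.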

Project directory:

```python
import urllib.parse

import scrapy
from scrapy import Request


class BaikeSpider(scrapy.Spider):
    name = 'baike'
    allowed_domains = ['baike.baidu.com']
    # item name -> set of disambiguation ids already saved
    has_crawled_urls = dict()

    def start_requests(self):
        # Generate the /view/<id> requests lazily instead of holding a
        # 25-million-element start_urls list in memory
        for view_id in range(1, 25000000):
            yield Request('https://baike.baidu.com/view/' + str(view_id))

    def parse(self, response):
        # Nonexistent ids are redirected to the error page
        if 'error.html' in response.url:
            return
        # Split before unquoting so a "/" inside an item name does not break the split
        url_split = response.url.split("?")[0].split("/")
        name = urllib.parse.unquote(url_split[-2])
        unique_id = url_split[-1]
        if name == 'item':
            # URL without a disambiguation id: .../item/<name>
            name = urllib.parse.unquote(unique_id)
            unique_id = str(-1)

        # A name can be ambiguous (several senses with different ids), so
        # skip only (name, id) pairs that were already saved
        seen_ids = BaikeSpider.has_crawled_urls.setdefault(name, set())
        if unique_id in seen_ids:
            return
        seen_ids.add(unique_id)

        # Save the current page first; "/" would be read as a directory separator
        filename = name.replace("/", "_") + "_" + unique_id + ".html"
        with open(filename, 'w', encoding="utf-8") as f:
            f.write(response.body.decode("utf-8"))

        # Then follow every /item/ link on the page
        for href in response.xpath('//a/@href').getall():
            if href.startswith("/item/"):
                yield Request(response.urljoin(href))
```
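The bookkeeping in `has_crawled_urls` maps each item name to the set of disambiguation ids already saved. In the spider as first written, the branch for a never-seen name created an empty set without adding the current id, so that page was saved a second time when reached again. A minimal sketch of the check with the id recorded on first sight; `should_save` is a hypothetical helper and `12345` a hypothetical id.

```python
def should_save(seen, name, unique_id):
    """Return True only the first time a (name, unique_id) pair is seen.

    `seen` maps an item name to the set of ids already saved,
    the same shape as BaikeSpider.has_crawled_urls.
    """
    ids = seen.setdefault(name, set())
    if unique_id in ids:
        return False
    ids.add(unique_id)  # record the id on the very first visit too
    return True


seen = {}
print(should_save(seen, "马云", "6252"))   # True: first visit
print(should_save(seen, "马云", "6252"))   # False: same page reached again
print(should_save(seen, "马云", "12345"))  # True: another sense of the name (hypothetical id)
```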

```python
# Scrapy settings for baikeSprider project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://docs.scrapy.org/en/latest/topics/settings.html
#     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'baikeSprider'

SPIDER_MODULES = ['baikeSprider.spiders']
NEWSPIDER_MODULE = 'baikeSprider.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'baikeSprider (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.109 Safari/537.36',
    'Referer': 'https://www.baidu.com/',
    # 'br' dropped: Scrapy only decodes Brotli when the brotli package is installed
    'Accept-Encoding': 'gzip, deflate',
}

# Enable or disable spider middlewares
# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'baikeSprider.middlewares.BaikespriderSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'baikeSprider.middlewares.BaikespriderDownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See https://docs.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
#ITEM_PIPELINES = {
#    'baikeSprider.pipelines.BaikespriderPipeline': 300,
#}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
```
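The settings template already lists AutoThrottle commented out. For a crawl seeded with tens of millions of ids, one option is to enable it together with retries and a job directory. The fragment below is a sketch of possible additions to `settings.py`; the values are untuned starting points and the `crawls/baike` path is hypothetical. All setting names are standard Scrapy settings: `JOBDIR` stores the pending request queue and the seen-request fingerprints on disk so a stopped crawl can be resumed.

```python
# Hypothetical additions to settings.py; values are untuned starting points
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 1.0
AUTOTHROTTLE_MAX_DELAY = 60.0
AUTOTHROTTLE_TARGET_CONCURRENCY = 4.0

# Retry timeouts and server errors a few times before giving up (default: 2)
RETRY_ENABLED = True
RETRY_TIMES = 3

# Persist scheduler state so the crawl can be paused and resumed (hypothetical path)
JOBDIR = 'crawls/baike'
```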

浙公网安备 33010602011771号
