2025: Learn K8S with Me in 10 Minutes a Day (47) - Prometheus (4): Automatic Discovery

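The previous chapter walked through how Prometheus monitors an application. Prometheus also provides a way to discover applications automatically and monitor them. Kubernetes service discovery supports several roles, including node, service, pod, endpoints, and ingress, and by adding extra configuration we can have targets discovered and monitored automatically. Different roles suit different scenarios. For example, node suits host-related monitoring, such as the state of the Kubernetes components running on a node, the state of the containers running on it, and the state of the node itself; service and ingress suit black-box monitoring, such as the availability and quality of a service; endpoints and pod can both be used to collect metrics from Pod instances, such as Prometheus-enabled applications deployed by users or administrators. This article uses Service and Pod as examples.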

To solve the service discovery problem, kube-prometheus lets us supply an additional scrape configuration. With it, kube-prometheus can automatically discover and monitor every Service that carries the prometheus.io/scrape=true annotation.

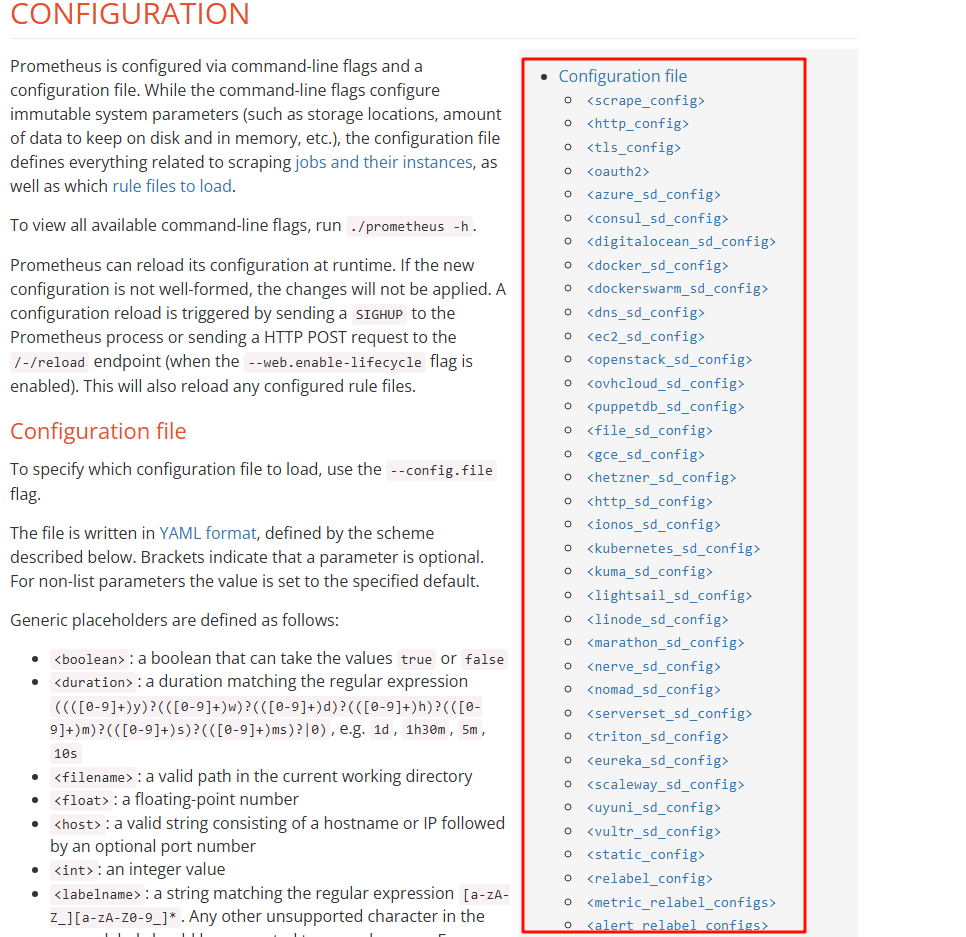

Official reference for the configuration options: https://prometheus.io/docs/prometheus/latest/configuration/configuration/

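Discovery of the various resources is handled by kubernetes_sd_configs. The Kubernetes SD configuration retrieves scrape targets from the Kubernetes REST API and always stays in sync with the cluster state; any of the role types can be configured to discover the objects we want.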

The configuration uses YAML. The top-level keys of the file are shown below. Automatic discovery of Kubernetes metrics endpoints is implemented through scrape_configs:

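# Global configuration
global:
# Rule files, mainly used to configure alerting rules
rule_files:
# Scrape configuration, mainly the settings for scrape targets
scrape_configs:
# Alerting configuration
alerting:
# Remote write configuration for remote storage
remote_write:
# Remote read configuration for remote storage
remote_read:

After we finished deploying Prometheus earlier, we noticed that kube-apiserver was monitored automatically, even though we never added it by hand. To see how this works, take a look at its ServiceMonitor file.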

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    app.kubernetes.io/name: apiserver
    app.kubernetes.io/part-of: kube-prometheus
  name: kube-apiserver
  namespace: monitoring
spec:
  endpoints:
  - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
    interval: 30s
    metricRelabelings:
    ......
    - action: drop
      regex: apiserver_request_duration_seconds_bucket;(0.15|0.25|0.3|0.35|0.4|0.45|0.6|0.7|0.8|0.9|1.25|1.5|1.75|2.5|3|3.5|4.5|6|7|8|9|15|25|30|50)
      sourceLabels:
      - __name__
      - le
    port: https
    scheme: https
    tlsConfig:
      caFile: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      serverName: kubernetes
  - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
    interval: 5s
    metricRelabelings:
    - action: drop
      regex: process_start_time_seconds
      sourceLabels:
      - __name__
    path: /metrics/slis
    port: https
    scheme: https
    tlsConfig:
      caFile: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      serverName: kubernetes
  jobLabel: component
  namespaceSelector:
    matchNames:
    - default
  selector:
    matchLabels:
      component: apiserver
      provider: kubernetes

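Behavior of each action:

replace: the default action, used when no action is configured. It matches regex against the joined values of source_labels and writes the replacement (with the matched groups substituted) into target_label.

labelmap: matches regex against label names and copies the value of every matching label to a new label whose name is built from the matched content.

keep: keeps only the targets (or, in metricRelabelings, the samples) whose source_labels values match regex and drops all the others. Used for selection.

drop: drops the targets (or samples) whose source_labels values match regex and keeps all the others. Used for exclusion.

labeldrop: matches regex against label names and removes the matching labels, so they are neither collected nor stored.

labelkeep: matches regex against label names and keeps only the matching labels; all other labels are removed.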
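To make the difference between these actions concrete, here is a minimal Python sketch that imitates them on a plain dictionary of labels. It is only an approximation for illustration, not Prometheus code: the function names `relabel` and `apply_rules` and the sample pod IP are made up for this example, and details such as hashmod or custom separators are left out.

```python
import re

def relabel(labels, rule):
    """Apply one relabel rule; return the new label dict, or None if the target is dropped."""
    action = rule.get("action", "replace")
    # Prometheus anchors the regex, so fullmatch is the closest Python equivalent.
    regex = re.compile(rule.get("regex", "(.*)"))
    # Prometheus writes $1, Python's expand() expects \g<1>.
    replacement = re.sub(r"\$(\d+)", r"\\g<\1>", rule.get("replacement", "$1"))
    # Values of the source labels are joined with ";" before matching.
    joined = ";".join(labels.get(n, "") for n in rule.get("source_labels", []))

    if action == "replace":
        m = regex.fullmatch(joined)
        if m is None:
            return dict(labels)  # no match: nothing is changed
        return {**labels, rule["target_label"]: m.expand(replacement)}
    if action == "keep":
        return dict(labels) if regex.fullmatch(joined) else None
    if action == "drop":
        return None if regex.fullmatch(joined) else dict(labels)
    if action == "labelmap":
        out = dict(labels)
        for name, value in labels.items():
            m = regex.fullmatch(name)
            if m:
                out[m.expand(replacement)] = value
        return out
    if action == "labeldrop":
        return {n: v for n, v in labels.items() if not regex.fullmatch(n)}
    if action == "labelkeep":
        return {n: v for n, v in labels.items() if regex.fullmatch(n)}
    raise ValueError(f"unsupported action: {action}")

def apply_rules(labels, rules):
    """Run the rules in order; stop as soon as the target is dropped."""
    for rule in rules:
        labels = relabel(labels, rule)
        if labels is None:
            return None
    return labels

rules = [
    {"action": "keep", "regex": "true",
     "source_labels": ["__meta_kubernetes_pod_annotation_prometheus_io_scrape"]},
    {"action": "labelmap", "regex": "__meta_kubernetes_pod_label_(.+)"},
]
# Hypothetical discovered pod; the IP address is invented for the example.
pod = {
    "__address__": "10.244.1.7:6379",
    "__meta_kubernetes_pod_annotation_prometheus_io_scrape": "true",
    "__meta_kubernetes_pod_label_app": "redis",
}
kept = apply_rules(pod, rules)
dropped = apply_rules(
    {**pod, "__meta_kubernetes_pod_annotation_prometheus_io_scrape": "false"}, rules)
print(kept["app"], dropped)
```

The first pod survives the keep rule and gains an `app` label through labelmap, while the second one is dropped before any later rule runs.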

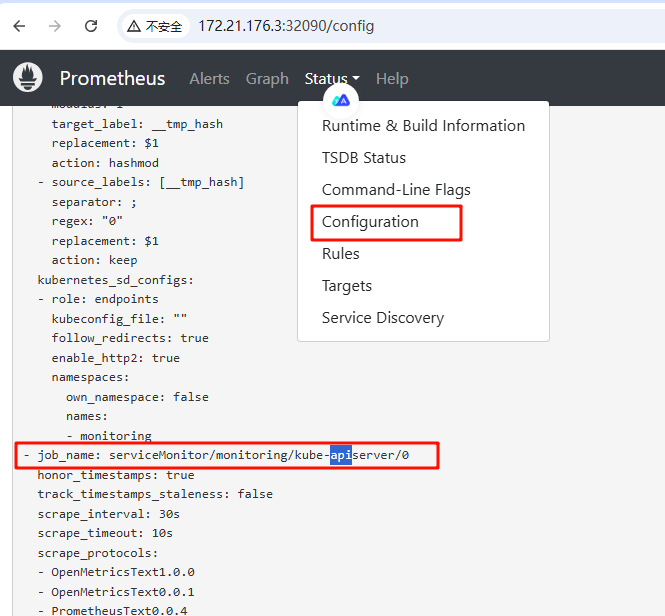

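Besides the ServiceMonitor file, the configuration above can also be viewed on the Configuration page of the web UI. From it we can roughly see what is needed: add a job_name, then relabeling rules with the regular expressions that fit each kind of object. The requirements for the different objects are documented on the official site. Next, we will actually add automatic discovery for Pods and for Services.

Automatic discovery of Pods

1. Create prometheus-additional.yaml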

vim manifests/prometheus-additional.yaml

---
- job_name: kubernetes-pods
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - action: keep
    regex: true
    source_labels:
    - __meta_kubernetes_pod_annotation_prometheus_io_scrape
  - action: replace
    regex: (.+)
    source_labels:
    - __meta_kubernetes_pod_annotation_prometheus_io_path
    target_label: __metrics_path__
  - action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    source_labels:
    - __address__
    - __meta_kubernetes_pod_annotation_prometheus_io_port
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - action: replace
    source_labels:
    - __meta_kubernetes_namespace
    target_label: kubernetes_namespace
  - action: replace
    source_labels:
    - __meta_kubernetes_pod_name
    target_label: kubernetes_pod_name

2. Generate the Secret

Generate a Secret named additional-configs:

# kubectl create secret generic additional-configs --from-file=manifests/prometheus-additional.yaml -n monitoring

secret/additional-configs created

3. Inspect the Secret

Inspect the additional-configs Secret that was just created:

# kubectl get secret additional-configs -n monitoring -o yaml

apiVersion: v1

data:

prometheus-additional.yaml: LS0tCi0gam9iX25hbWU6IGt1YmVybmV0ZXMtcG9kcwogIGt1YmVybmV0ZXNfc2RfY29uZmlnczoKICAtIHJvbGU6IHBvZAogIHJlbGFiZWxfY29uZmlnczoKICAtIGFjdGlvbjoga2VlcAogICAgcmVnZXg6IHRydWUKICAgIHNvdXJjZV9sYWJlbHM6CiAgICAtIF9fbWV0YV9rdWJlcm5ldGVzX3BvZF9hbm5vdGF0aW9uX3Byb21ldGhldXNfaW9fc2NyYXBlCiAgLSBhY3Rpb246IHJlcGxhY2UKICAgIHJlZ2V4OiAoLispCiAgICBzb3VyY2VfbGFiZWxzOgogICAgLSBfX21ldGFfa3ViZXJuZXRlc19wb2RfYW5ub3RhdGlvbl9wcm9tZXRoZXVzX2lvX3BhdGgKICAgIHRhcmdldF9sYWJlbDogX19tZXRyaWNzX3BhdGhfXwogIC0gYWN0aW9uOiByZXBsYWNlCiAgICByZWdleDogKFteOl0rKSg/OjpcZCspPzsoXGQrKQogICAgcmVwbGFjZW1lbnQ6ICQxOiQyCiAgICBzb3VyY2VfbGFiZWxzOgogICAgLSBfX2FkZHJlc3NfXwogICAgLSBfX21ldGFfa3ViZXJuZXRlc19wb2RfYW5ub3RhdGlvbl9wcm9tZXRoZXVzX2lvX3BvcnQKICAgIHRhcmdldF9sYWJlbDogX19hZGRyZXNzX18KICAtIGFjdGlvbjogbGFiZWxtYXAKICAgIHJlZ2V4OiBfX21ldGFfa3ViZXJuZXRlc19wb2RfbGFiZWxfKC4rKQogIC0gYWN0aW9uOiByZXBsYWNlCiAgICBzb3VyY2VfbGFiZWxzOgogICAgLSBfX21ldGFfa3ViZXJuZXRlc19uYW1lc3BhY2UKICAgIHRhcmdldF9sYWJlbDoga3ViZXJuZXRlc19uYW1lc3BhY2UKICAtIGFjdGlvbjogcmVwbGFjZQogICAgc291cmNlX2xhYmVsczoKICAgIC0gX19tZXRhX2t1YmVybmV0ZXNfcG9kX25hbWUKICAgIHRhcmdldF9sYWJlbDoga3ViZXJuZXRlc19wb2RfbmFtZQo=

kind: Secret
metadata:
  creationTimestamp: "2025-04-15T03:18:34Z"
  name: additional-configs
  namespace: monitoring
  resourceVersion: "2195855"
  uid: b0138dc1-0071-432e-a6d0-12e2b9621c21
type: Opaque
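The data field of a Secret is simply the base64 encoding of the file content, so it can be decoded to confirm that the right configuration was stored. The sketch below decodes only the first 44 characters of the value shown above, to keep the example short; the helper name `decode_secret_value` is made up for illustration.

```python
import base64

def decode_secret_value(value):
    """Decode one base64 value from a Secret's data field into text."""
    return base64.b64decode(value).decode("utf-8")

# Prefix of the prometheus-additional.yaml value printed by kubectl above.
encoded_prefix = "LS0tCi0gam9iX25hbWU6IGt1YmVybmV0ZXMtcG9kcwog"

decoded = decode_secret_value(encoded_prefix)
print(decoded)
```

The decoded text starts with the `---` line followed by `- job_name: kubernetes-pods`, which matches the beginning of the file created in step 1.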

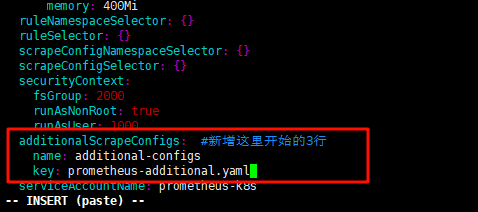

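4. Edit prometheus-prometheus.yaml

Add three lines for the additionalScrapeConfigs setting (see the screenshots below), which points Prometheus at the additional-configs Secret and its prometheus-additional.yaml key.

After adding them, update the prometheus CRD resource object directly: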

# kubectl apply -f manifests/prometheus-prometheus.yaml

prometheus.monitoring.coreos.com/k8s configured

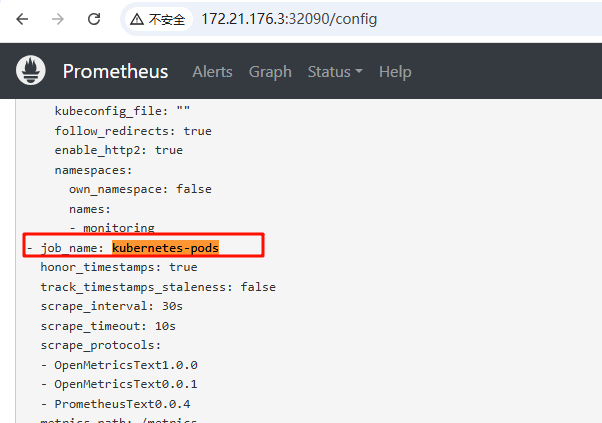

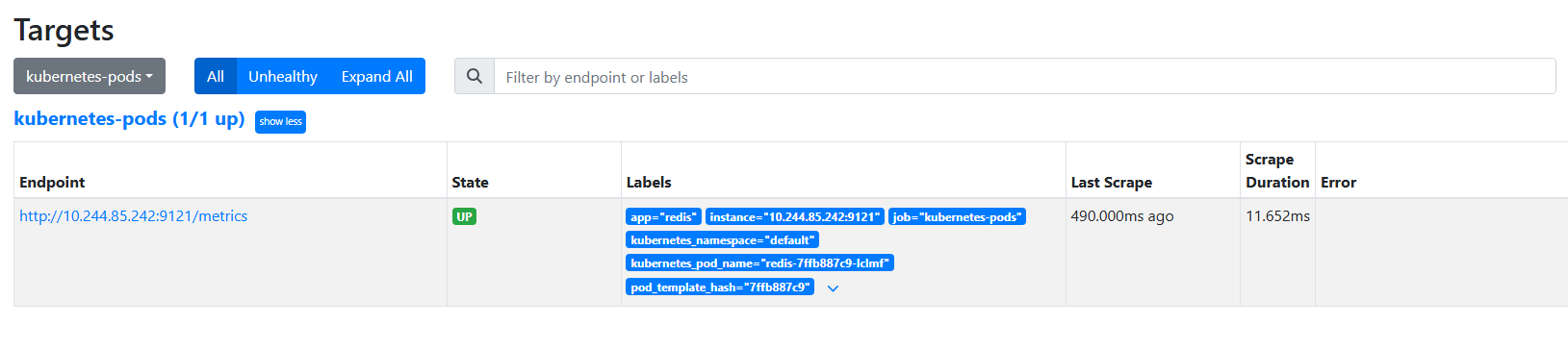

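5. Check the web page

Wait a few minutes, then open Status > Configuration in the Prometheus dashboard and check whether the newly created job_name: kubernetes-pods appears.

6. Verify

Create a new pod, using redis as an example, to check whether automatic pod discovery works.

For a pod in the cluster to be discovered automatically, the pod's annotations must also declare prometheus.io/scrape=true.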

# cat prome-redis.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
  labels:
    app: redis
#  namespace: kube-system
spec:
  selector: # required in newer Kubernetes versions
    matchLabels:
      app: redis
  template:
    metadata:
      annotations: # both entries below are required; discovery only finds the pod when they are present
        prometheus.io/scrape: "true"
        prometheus.io/port: "9121"
      labels:
        app: redis
    spec:
      containers:
      - name: redis
        image: m.daocloud.io/docker.io/redis
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
        ports:
        - containerPort: 6379
      - name: redis-exporter
        image: m.daocloud.io/docker.io/oliver006/redis_exporter:latest
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
        ports:
        - containerPort: 9121

# kubectl apply -f prome-redis.yaml

deployment.apps/redis created
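To see how the prometheus.io/port annotation on this pod ends up in the scrape address, the `__address__` rule of the kubernetes-pods job can be reproduced with Python's re module. This is a sketch for illustration only: the pod IP is invented and `rewrite_address` is a hypothetical helper, not part of Prometheus.

```python
import re

# Same pattern as the relabel rule: host, optional ":port", then ";" and the annotated port.
ADDRESS_RULE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")

def rewrite_address(address, port_annotation):
    """Imitate the replace rule with replacement $1:$2 on __address__."""
    # The two source labels are joined with ";" before the regex is applied.
    joined = f"{address};{port_annotation}"
    m = ADDRESS_RULE.fullmatch(joined)
    if m is None:
        return address  # rule does not match, address stays as discovered
    return f"{m.group(1)}:{m.group(2)}"

print(rewrite_address("10.244.1.7:6379", "9121"))  # container port replaced by the exporter port
print(rewrite_address("10.244.1.7", "9121"))       # address without a port gets one appended
print(rewrite_address("10.244.1.7:6379", ""))      # no annotation: left unchanged
```

Whichever container port the pod target was discovered on, the annotation redirects the scrape to port 9121, where the redis-exporter sidecar listens.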

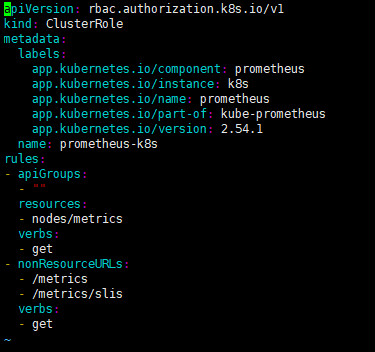

7. Fix the RBAC error

However, switching to the Targets page at this point shows no matching scrape job. The logs of the Prometheus Pod contain a large number of permission errors:

# kubectl logs -f prometheus-k8s-0 prometheus -n monitoring

ts=2025-04-15T03:26:04.637Z caller=klog.go:108 level=warn component=k8s_client_runtime func=Warningf msg="pkg/mod/k8s.io/client-go@v0.29.3/tools/cache/reflector.go:229: failed to list *v1.Pod: pods is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"pods\" in API group \"\" at the cluster scope"

ts=2025-04-15T03:26:04.637Z caller=klog.go:116 level=error component=k8s_client_runtime func=ErrorDepth msg="pkg/mod/k8s.io/client-go@v0.29.3/tools/cache/reflector.go:229: Failed to watch *v1.Pod: failed to list *v1.Pod: pods is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"pods\" in API group \"\" at the cluster scope"

This indicates an RBAC permission problem. From the configuration of the prometheus resource object we know that Prometheus is bound to a ServiceAccount named prometheus-k8s, which in turn is bound to a ClusterRole named prometheus-k8s (prometheus-clusterRole.yaml).

As the error shows, the original ClusterRole does not allow listing pods at the cluster scope. After the modification it looks like this:

# cat prometheus-clusterRole.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus-k8s
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - services
  - endpoints
  - pods
  - nodes/proxy
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get

# kubectl apply -f manifests/prometheus-clusterRole.yaml

clusterrole.rbac.authorization.k8s.io/prometheus-k8s configured
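Why the error disappears can be checked with a small Python sketch that evaluates ClusterRole rules the way the forbidden message describes them (API group, resource, verb). It is a simplification that ignores wildcards and resourceNames. Note that `rules_before` is an assumption, since the article does not list the original rules; it is guessed as the same role without the first rule block.

```python
def is_allowed(rules, api_group, resource, verb):
    """Return True if any rule grants `verb` on `resource` in `api_group` (no wildcard handling)."""
    return any(
        api_group in rule.get("apiGroups", [])
        and resource in rule.get("resources", [])
        and verb in rule.get("verbs", [])
        for rule in rules
    )

# ASSUMPTION: the role before the edit, which is not shown in the article.
rules_before = [
    {"apiGroups": [""], "resources": ["nodes/metrics"], "verbs": ["get"]},
    {"nonResourceURLs": ["/metrics"], "verbs": ["get"]},
]

# Rules taken from the modified prometheus-clusterRole.yaml above.
rules_after = [
    {"apiGroups": [""],
     "resources": ["nodes", "services", "endpoints", "pods", "nodes/proxy"],
     "verbs": ["get", "list", "watch"]},
    {"apiGroups": [""], "resources": ["nodes/metrics"], "verbs": ["get"]},
    {"nonResourceURLs": ["/metrics"], "verbs": ["get"]},
]

print(is_allowed(rules_before, "", "pods", "list"))  # False
print(is_allowed(rules_after, "", "pods", "list"))   # True
```

The same check with "endpoints" and "watch" shows that the modified role also covers what the endpoints role of service discovery needs.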

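Going back to the Targets page of the Prometheus dashboard, the redis target we just set up is now discovered.

Automatic discovery of Services

A Service is associated with pods through its selector. Kubernetes combines the pod IPs of the pods selected by the Service into an Endpoints object.

If the Service definition has no selector field, the endpoint controller does not create the Endpoints object automatically when the Service is created. For a Service that does have a selector, monitoring its endpoints is therefore how we monitor the Service.

1. Add a Service for redis

Create a Service for the redis deployment created above: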

vim prome-redis-service.yaml

kind: Service
apiVersion: v1
metadata: # the 3 annotation lines below are required
  annotations:
    prometheus.io/scrape: "true"
    prometheus.io/port: "9121"
  name: redis
#  namespace: kube-system
spec:
  selector: # a selector is required
    app: redis
  ports:
  - name: redis
    port: 6379
    targetPort: 6379
  - name: metrics # the job matches on the port number from the annotation, so the name here is arbitrary
    port: 9121
    targetPort: 9121

kubectl apply -f prome-redis-service.yaml

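2. Add a job_name for Services

Edit prometheus-additional.yaml. Note that the new job must not be preceded by another three-dash (---) line.

vim prometheus-additional.yaml

# Append the content below. Do not add a three-dash (---) line here, otherwise the content that follows is not recognized.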

- job_name: kubernetes-service-endpoints
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  - action: keep
    regex: true
    source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_scrape
  - action: replace
    regex: (https?)
    source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_scheme
    target_label: __scheme__
  - action: replace
    regex: (.+)
    source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_path
    target_label: __metrics_path__
  - action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    source_labels:
    - __address__
    - __meta_kubernetes_service_annotation_prometheus_io_port
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - action: replace
    source_labels:
    - __meta_kubernetes_namespace
    target_label: kubernetes_namespace
  - action: replace
    source_labels:
    - __meta_kubernetes_service_name
    target_label: kubernetes_name
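The redis Service above sets neither a prometheus.io/scheme nor a prometheus.io/path annotation, yet it is still scraped. The reason is that a replace rule changes nothing when its regex does not match, so the Prometheus defaults (http and /metrics) stay in place. The following Python sketch imitates just those two rules; `scheme_and_path` is a hypothetical helper and the second set of annotations is invented for the example.

```python
import re

def scheme_and_path(annotations):
    """Return the (scheme, metrics path) that would be used for a Service's annotations."""
    # Prometheus defaults, kept whenever a replace rule does not match.
    scheme, path = "http", "/metrics"
    m = re.fullmatch(r"(https?)", annotations.get("prometheus.io/scheme", ""))
    if m:
        scheme = m.group(1)
    m = re.fullmatch(r"(.+)", annotations.get("prometheus.io/path", ""))
    if m:
        path = m.group(1)
    return scheme, path

redis_svc = {"prometheus.io/scrape": "true", "prometheus.io/port": "9121"}
custom_svc = {"prometheus.io/scheme": "https", "prometheus.io/path": "/custom/metrics"}

print(scheme_and_path(redis_svc))
print(scheme_and_path(custom_svc))
print(scheme_and_path({"prometheus.io/scheme": "ftp"}))  # not http/https, default is kept
```

Only values that fully match `(https?)` can change the scheme, which is why an unsupported value such as ftp falls back to http.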

3. Re-create the Secret

Delete the Secret generated earlier and create it again:

# kubectl delete secret -n monitoring additional-configs

secret "additional-configs" deleted

# kubectl create secret generic additional-configs --from-file=manifests/prometheus-additional.yaml -n monitoring

secret/additional-configs created

4. Verify the Secret

Inspect the re-created additional-configs Secret:

# kubectl get secret additional-configs -n monitoring -o yaml

apiVersion: v1

data:

prometheus-additional.yaml: LS0tCi0gam9iX25hbWU6IGt1YmVybmV0ZXMtcG9kcwogIGt1YmVybmV0ZXNfc2RfY29uZmlnczoKICAtIHJvbGU6IHBvZAogIHJlbGFiZWxfY29uZmlnczoKICAtIGFjdGlvbjoga2VlcAogICAgcmVnZXg6IHRydWUKICAgIHNvdXJjZV9sYWJlbHM6CiAgICAtIF9fbWV0YV9rdWJlcm5ldGVzX3BvZF9hbm5vdGF0aW9uX3Byb21ldGhldXNfaW9fc2NyYXBlCiAgLSBhY3Rpb246IHJlcGxhY2UKICAgIHJlZ2V4OiAoLispCiAgICBzb3VyY2VfbGFiZWxzOgogICAgLSBfX21ldGFfa3ViZXJuZXRlc19wb2RfYW5ub3RhdGlvbl9wcm9tZXRoZXVzX2lvX3BhdGgKICAgIHRhcmdldF9sYWJlbDogX19tZXRyaWNzX3BhdGhfXwogIC0gYWN0aW9uOiByZXBsYWNlCiAgICByZWdleDogKFteOl0rKSg/OjpcZCspPzsoXGQrKQogICAgcmVwbGFjZW1lbnQ6ICQxOiQyCiAgICBzb3VyY2VfbGFiZWxzOgogICAgLSBfX2FkZHJlc3NfXwogICAgLSBfX21ldGFfa3ViZXJuZXRlc19wb2RfYW5ub3RhdGlvbl9wcm9tZXRoZXVzX2lvX3BvcnQKICAgIHRhcmdldF9sYWJlbDogX19hZGRyZXNzX18KICAtIGFjdGlvbjogbGFiZWxtYXAKICAgIHJlZ2V4OiBfX21ldGFfa3ViZXJuZXRlc19wb2RfbGFiZWxfKC4rKQogIC0gYWN0aW9uOiByZXBsYWNlCiAgICBzb3VyY2VfbGFiZWxzOgogICAgLSBfX21ldGFfa3ViZXJuZXRlc19uYW1lc3BhY2UKICAgIHRhcmdldF9sYWJlbDoga3ViZXJuZXRlc19uYW1lc3BhY2UKICAtIGFjdGlvbjogcmVwbGFjZQogICAgc291cmNlX2xhYmVsczoKICAgIC0gX19tZXRhX2t1YmVybmV0ZXNfcG9kX25hbWUKICAgIHRhcmdldF9sYWJlbDoga3ViZXJuZXRlc19wb2RfbmFtZQotIGpvYl9uYW1lOiBrdWJlcm5ldGVzLXNlcnZpY2UtZW5kcG9pbnRzCiAga3ViZXJuZXRlc19zZF9jb25maWdzOgogIC0gcm9sZTogZW5kcG9pbnRzCiAgcmVsYWJlbF9jb25maWdzOgogIC0gYWN0aW9uOiBrZWVwCiAgICByZWdleDogdHJ1ZQogICAgc291cmNlX2xhYmVsczoKICAgIC0gX19tZXRhX2t1YmVybmV0ZXNfc2VydmljZV9hbm5vdGF0aW9uX3Byb21ldGhldXNfaW9fc2NyYXBlCiAgLSBhY3Rpb246IHJlcGxhY2UKICAgIHJlZ2V4OiAoaHR0cHM/KQogICAgc291cmNlX2xhYmVsczoKICAgIC0gX19tZXRhX2t1YmVybmV0ZXNfc2VydmljZV9hbm5vdGF0aW9uX3Byb21ldGhldXNfaW9fc2NoZW1lCiAgICB0YXJnZXRfbGFiZWw6IF9fc2NoZW1lX18KICAtIGFjdGlvbjogcmVwbGFjZQogICAgcmVnZXg6ICguKykKICAgIHNvdXJjZV9sYWJlbHM6CiAgICAtIF9fbWV0YV9rdWJlcm5ldGVzX3NlcnZpY2VfYW5ub3RhdGlvbl9wcm9tZXRoZXVzX2lvX3BhdGgKICAgIHRhcmdldF9sYWJlbDogX19tZXRyaWNzX3BhdGhfXwogIC0gYWN0aW9uOiByZXBsYWNlCiAgICByZWdleDogKFteOl0rKSg/OjpcZCspPzsoXGQrKQogICAgcmVwbGFjZW1lbnQ6ICQxOiQyCiAgICBzb3VyY2VfbGFiZWxzOgogICAgLSBfX2FkZHJlc3NfXwog
ICAgLSBfX21ldGFfa3ViZXJuZXRlc19zZXJ2aWNlX2Fubm90YXRpb25fcHJvbWV0aGV1c19pb19wb3J0CiAgICB0YXJnZXRfbGFiZWw6IF9fYWRkcmVzc19fCiAgLSBhY3Rpb246IGxhYmVsbWFwCiAgICByZWdleDogX19tZXRhX2t1YmVybmV0ZXNfc2VydmljZV9sYWJlbF8oLispCiAgLSBhY3Rpb246IHJlcGxhY2UKICAgIHNvdXJjZV9sYWJlbHM6CiAgICAtIF9fbWV0YV9rdWJlcm5ldGVzX25hbWVzcGFjZQogICAgdGFyZ2V0X2xhYmVsOiBrdWJlcm5ldGVzX25hbWVzcGFjZQogIC0gYWN0aW9uOiByZXBsYWNlCiAgICBzb3VyY2VfbGFiZWxzOgogICAgLSBfX21ldGFfa3ViZXJuZXRlc19zZXJ2aWNlX25hbWUKICAgIHRhcmdldF9sYWJlbDoga3ViZXJuZXRlc19uYW1lCg==

kind: Secret
metadata:
  creationTimestamp: "2025-04-15T05:26:34Z"
  name: additional-configs
  namespace: monitoring
  resourceVersion: "2286438"
  uid: cedfc809-a8b3-4006-b432-651d47f7a366
type: Opaque

添加完成后,等待即可,如果一直不出来,可以删除重新更新,但是不建议这样做。直接更新 prometheus 这个 CRD 资源对象:

kubectl delete -f prometheus-prometheus.yaml

kubectl apply -f prometheus-prometheus.yaml

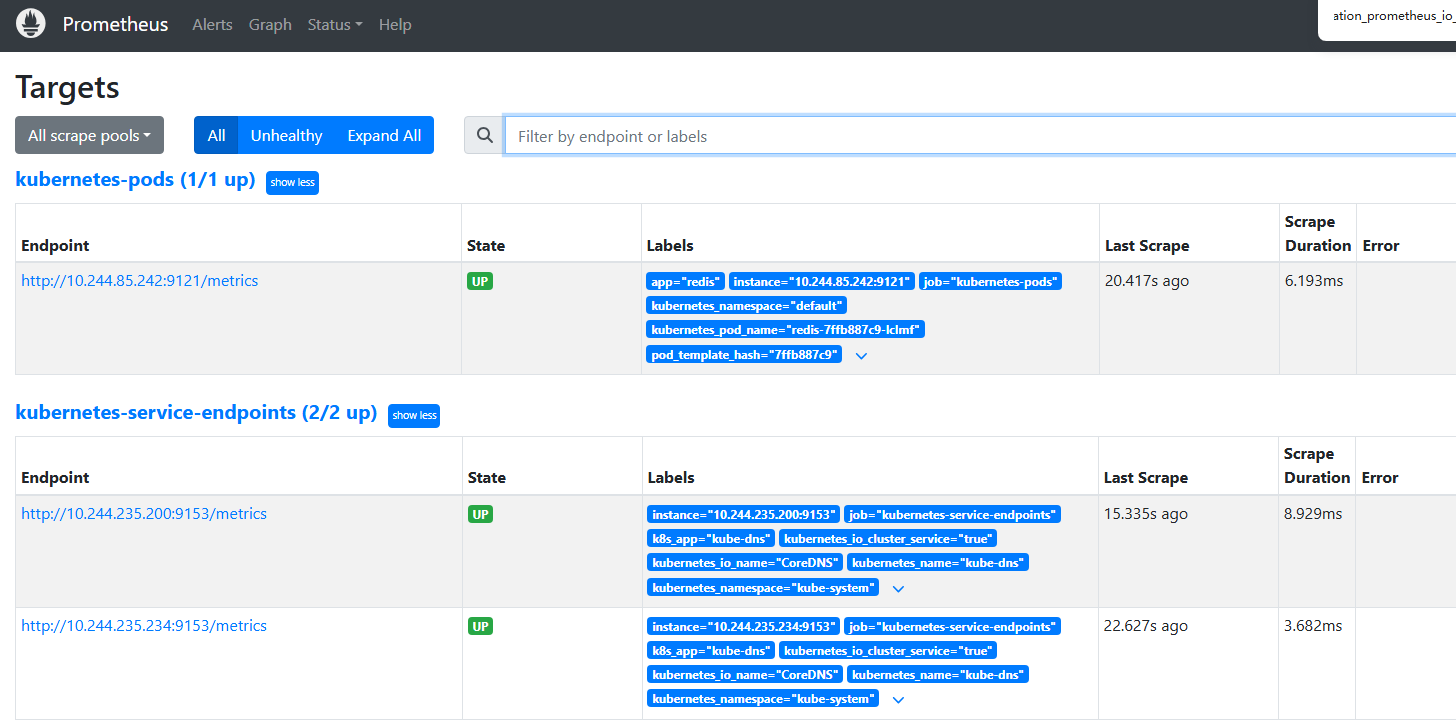

prometheus.monitoring.coreos.com/k8s configured5、查看web页面

等待几分钟,在prometheus dashboard 页面 status configuration页面查看刚才创建的 job_name: kubernetes-service是否有出来

再去监控页面查看是有有这个监控项

至此,service的自动发现也做完了,从图例看出来,pod自动发现和service自动发现重复了。一般监控一种就可以了

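Summary:

Whether you are auto-discovering pods or services, the overall procedure is as follows.

1. Add a job_name for the object type in prometheus-additional.yaml.

2. Delete the additional-configs Secret and generate it again.

# Delete the Secret

# kubectl delete secret -n monitoring additional-configs

# Re-create the Secret

# kubectl create secret generic additional-configs --from-file=manifests/prometheus-additional.yaml -n monitoring

3. Edit prometheus-prometheus.yaml so that it references the generated Secret, then apply it again (only needed once).

4. If permission errors appear, update the RBAC ClusterRole to grant the missing permissions (only needed once).

5. Create the YAML for the pod or svc and add the annotations. For a pod, the application's exporter has to run alongside it as a sidecar container that exposes the /metrics endpoint. The sidecar pattern will be covered separately in the logging chapter.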

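# For a pod, add the pod annotations
metadata:
  annotations: # both entries below are required; discovery only finds the pod when they are present
    prometheus.io/scrape: "true"
    prometheus.io/port: "9121"

# For a svc, add the svc annotations
metadata: # the 3 lines below are required
  annotations:
    prometheus.io/scrape: "true"
    prometheus.io/port: "9121"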
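Because the keep rule's regex is anchored and case-sensitive, the annotation value has to be exactly the string "true", and the port rule only fires for a numeric value. A small pre-flight check along these lines can catch typos before a manifest is applied; `annotation_problems` is a hypothetical helper written for this article, not an existing tool.

```python
import re

def annotation_problems(annotations):
    """Return a list of reasons why the two jobs above would not scrape as intended."""
    problems = []
    # The keep rule uses regex "true", matched against the whole value.
    if re.fullmatch("true", annotations.get("prometheus.io/scrape", "")) is None:
        problems.append('prometheus.io/scrape must be exactly "true"')
    # The address rule needs digits after the ";" separator.
    if re.fullmatch(r"\d+", annotations.get("prometheus.io/port", "")) is None:
        problems.append("prometheus.io/port must be a numeric port")
    return problems

print(annotation_problems({"prometheus.io/scrape": "true", "prometheus.io/port": "9121"}))
print(annotation_problems({"prometheus.io/scrape": "True", "prometheus.io/port": "9121"}))
```

An empty list means both annotations have the form the relabel rules expect.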
