Text-to-SQL Pandas Chatbot-agent

url:https://www.cnblogs.com/devcxx/p/18615698

In today’s data-driven world, the ability to interact seamlessly with databases using natural language is a game-changer. Imagine asking a question in plain English and receiving not only the relevant data but also insightful visualizations—all without writing a single line of SQL. In this blog post, I’ll walk you through how I built a Text-to-SQL Pandas Chatbot using LangChain, Streamlit, and OpenAI’s GPT-4. Whether you’re a seasoned developer or a complete beginner, this guide will help you understand the process of creating an intelligent SQL agent.

Introduction

The goal of this project is to create an intelligent chatbot that can understand natural language queries, convert them into SQL statements, fetch data from a database, and even perform further data analysis and visualization—all within a user-friendly interface. By leveraging powerful libraries like LangChain and Streamlit, combined with the capabilities of OpenAI’s GPT-4, we can build a robust and interactive SQL agent.

Prerequisites

Before diving into the code, ensure you have the following:

• Python 3.7 or higher: The programming language used for this project.

• Streamlit: For building the web interface.

• LangChain: A framework for building applications with LLMs.

• OpenAI API Key: To access GPT-4.

• SQLite Database: For storing and querying data.

• Basic Knowledge of Python: Familiarity with Python programming will be beneficial.

Understanding the Application Architecture

The application is structured to perform the following tasks:

1. User Interface: Built with Streamlit, it provides a chat interface for user interactions.

2. Language Model (LLM): GPT-4 processes natural language inputs and generates SQL queries or Python code.

3. Tools:

• SQL Query Generator: Converts user questions into SQL queries.

• SQL Data Fetcher: Executes SQL queries and retrieves data.

• Python Code Generator: Creates Python scripts for data analysis and visualization.

4. Agent Executor: Orchestrates the tools based on user input to provide responses.

Detailed Code Explanation

Let’s break down the code step-by-step to understand how each component contributes to the overall functionality.

1. Importing Necessary Libraries

import json

import psycopg2

import ast

import re

import streamlit as st

from contextlib import redirect_stdout

from io import StringIO, BytesIO

import pandas as pd

from langchain.agents import create_tool_calling_agent, AgentExecutor

from langchain_core.tools import tool

from langchain.prompts import PromptTemplate, ChatPromptTemplate

from langchain_community.callbacks.streamlit import StreamlitCallbackHandler

from langchain_openai import ChatOpenAI

import httpx

import sqlite3

from dotenv import load_dotenv

import matplotlib.pyplot as plt

Streamlit (streamlit as st): For building the web interface.

LangChain: For creating and managing the language model agents and tools.

OpenAI’s ChatOpenAI: Interface to interact with GPT-4.

SQLite (sqlite3): To connect and interact with the SQLite database.

Pandas (pd): For data manipulation and analysis.

Matplotlib: For data visualization.

2. Configuring the Streamlit App

load_dotenv()

st.title("Text-to-SQL Pandas Chatbot")

proxies = ''

api_key = "your_api"

• load_dotenv(): Loads environment variables from the .env file.

• st.title: Sets the title of the Streamlit app.

• proxies & api_key: Configures the proxy and OpenAI API key. Note: It’s recommended to store API keys securely and not hard-code them.

3. Setting Up the Language Model (LLM)

llm = ChatOpenAI(

model="gpt-4",

temperature=0,

max_tokens=2000,

openai_api_key=api_key,

request_timeout=60,

http_client=httpx.Client(proxies=proxies)

)

ChatOpenAI: Initializes the GPT-4 model with specific parameters:

model="gpt-4": Specifies the GPT-4 model.

temperature=0: Ensures deterministic outputs.

max_tokens=2000: Limits the response length.

http_client: Configures the HTTP client with proxy settings.

4. Defining the Tools

In LangChain, tools are functions that perform specific tasks, such as generating SQL queries or fetching data from a database. Each tool is decorated with @tool, which makes it accessible to the agent.

a. SQL Query Generator Tool

@tool

def sql_query_generator(question: str) -> str:

"""

Generate an SQL query based on the provided question.

"""

prompt1 = PromptTemplate.from_template(

"""

You are a PostgreSQL expert.

NOTE: Only use the table listed in the database schema.

Database_schema:

Tables:

1. CustomerInfo (CustomerID, Name, ContactInfo, RegistrationDate, CategoryPreferences, TotalLifetimeSpending, NumberOfAppointments, LastAppointmentDate, AverageSpendingPerVisit, ChurnIndicator)

2. ProductServiceSales (ProductServiceID, ProductServiceName, Category, UnitPrice, UnitsSold, Revenue, CustomerRetentionRate)

;

Question: {question}

NOTE:Provide the SQL query in JSON format like this: {{"query": "SELECT * FROM transaction;"}}.

NOTE:Don't use markdown.

""")

chain = prompt1 | llm

response = chain.invoke({"question": question})

query = json.loads(response.content)["query"]

print(query)

return query

Purpose: Converts natural language questions into SQL queries.

Process:

1. Prompt Template: Defines the structure and instructions for GPT-4, including the database schema.

2. Invoke LLM: Sends the prompt to GPT-4 and retrieves the response.

3. Parse Response: Extracts the SQL query from the JSON-formatted response.

b. SQL Data Fetching Tool

@tool

def fetch_sql_data(sql_query: str) -> pd.DataFrame:

"""

Fetch data from transaction database based on the SQL query obtained from sql_query_generator_tool and return it as a dataframe.

"""

db_path = 'beauty_clinic.db'

sql_query = sql_query_generator(prompt)

conn = sqlite3.connect(db_path)

cursor = conn.cursor()

# Execute SQL operation

cursor.execute(sql_query)

result = cursor.fetchall()

column_names = [desc[0] for desc in cursor.description]

result_df = pd.DataFrame(result, columns=column_names)

datasets.append(result_df)

print(datasets)

if len(datasets) > 5:

return {

"dataset_id": 0,

"dataset_sample": result_df[:5].to_json(),

}

else:

return result_df

Purpose: Executes the generated SQL query and retrieves data.

Process:

1. Database Connection: Connects to the SQLite database.

2. Execute Query: Runs the SQL query.

3. Fetch Results: Retrieves the data and converts it into a Pandas DataFrame.

4. Data Handling: Manages datasets and returns either a sample or the full DataFrame based on the number of datasets.

c. Python Code Generator Tool

@tool

def generate_python_code(dataset_id: int, dataset_sample: str) -> str:

"""

Generates Python code for data analysis based on the dataset sample.

"""

prompt2 = PromptTemplate.from_template(

"""

You are a Python and Streamlit expert.

The code you generate will run in a Streamlit app. Keep in mind the following:

1. Assume that the dataset is already available as a Pandas DataFrame named 'dataset_df'.

2. The dataset provided (dataset_sample) is the final output. Do not filter, clean, or modify the dataset in any way.

3. The task is to create a visualization directly using the dataset. Do not perform any analysis, filtering, or transformations.

4. Only give me the code, do not include the 'code =', the code you gave me should be returned as a string.

Generate Python code to perform the following task:

Here is dataset_sample:

{dataset_sample}

Output only the Python code. Do not include explanations.

"""

)

chain = prompt2 | llm

response = chain.invoke({"dataset_sample": dataset_sample, "question": prompt})

analysis_code = response.content.strip()

analysis_code = sanitize_input(analysis_code)

print(analysis_code)

dataset = datasets[0] # Retrieve the dataset based on dataset_id

try:

tree = ast.parse(analysis_code)

except SyntaxError as e:

print(f"Syntax error in analysis_code: {e}")

raise

module = ast.Module(tree.body, type_ignores=[])

globals = {}

locals = {

"dataset_df": pd.DataFrame(dataset)

}

# Capture standard output and image buffers

io_buffer = StringIO()

image_buffers = []

try:

# Parse and compile the generated code

module = ast.parse(analysis_code)

compiled_code = compile(module, filename="<ast>", mode="exec")

with redirect_stdout(io_buffer):

exec(compiled_code, globals, locals) # Execute the code

# Capture Matplotlib figures

for fig_num in plt.get_fignums():

fig = plt.figure(fig_num)

buffer = BytesIO()

fig.savefig(buffer, format="png")

buffer.seek(0)

image_buffers.append(buffer)

plt.close("all")

except Exception as e:

st.error(f"Error while executing the analysis code: {e}")

return io_buffer.getvalue()

# Display images

if image_buffers:

for idx, buffer in enumerate(image_buffers):

st.image(buffer, caption=f"Generated Plot {idx + 1}", use_column_width=True)

else:

st.warning("No plots were generated by the code.")

Purpose: Generates and executes Python code for data analysis and visualization based on the fetched data.

Process:

1. Prompt Template: Instructs GPT-4 to generate Python code tailored for Streamlit and Matplotlib.

2. Invoke LLM: Retrieves the generated code.

3. Sanitize Input: Cleans the code to prevent malicious injections.

4. Execute Code: Runs the code in a controlled environment, capturing any visualizations.

5. Display Results: Shows the generated plots within the Streamlit app.

5. Building and Executing the Agent

This section delves deeper into how to set up and execute the agent using LangChain’s tools and AgentExecutor.

a. Understanding Agents in LangChain

In LangChain, an agent is an entity that can perform actions by utilizing various tools. It takes user inputs, decides which tools to use, executes them, and provides the final response. Agents are powerful because they can orchestrate multiple tools to achieve complex tasks.

b. Setting Up Tools for the Agent

Tools are the building blocks of an agent. Each tool performs a specific function, such as generating SQL queries or fetching data. In our project, we have defined three tools:

1. SQL Query Generator (sql_query_generator): Converts natural language questions into SQL queries.

2. SQL Data Fetcher (fetch_sql_data): Executes SQL queries and retrieves data.

3. Python Code Generator (generate_python_code): Creates Python scripts for data analysis and visualization.

These tools are encapsulated as functions and decorated with @tool, making them accessible to the agent.

c. Creating the Agent Executor

datasets = []

tools = [sql_query_generator, fetch_sql_data, generate_python_code]

prompt = ChatPromptTemplate.from_messages([

("system", """

You are an agent that uses tools to interact with a PostgreSQL database.

You should first decide if sql query can solve the problem.

If sql query can solve it, use sql_query_generator to generate the query, then use fetch_sql_data tool to display the result.

If sql query cannot solve it, the procedures are:

First, generate the SQL query based on the question using the sql_query_generator tool.

Second, execute the SQL query and return the result using the fetch_sql_data tool.

Third, generate a python code to analyse the result using the generate_python_code_tool.

"""),

("human", "{input}"),

("placeholder", "{agent_scratchpad}"),

])

agent = create_tool_calling_agent(llm, tools, prompt)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

a.Components:

• datasets: A list to store fetched datasets.

• tools: A list of the defined tools that the agent can use.

• prompt: Defines the behavior and instructions for the agent.

• System Message: Instructs the agent on how to decide which tools to use based on the user’s query.

• Human Message: Represents the user’s input.

• Placeholder: A placeholder for the agent’s scratchpad (intermediate reasoning steps).

• create_tool_calling_agent: Initializes the agent with the specified LLM, tools, and prompt.

• AgentExecutor: Manages the execution flow, allowing the agent to process inputs and interact with tools.

b.Process:

1. User Input: The agent receives a natural language query from the user.

2. Decision Making: Based on the system prompt, the agent decides whether a SQL query can solve the problem.

3. Tool Invocation:

• If a SQL query suffices, it uses the sql_query_generator to create the query and fetch_sql_data to retrieve data.

• If further analysis is needed, it additionally uses the generate_python_code tool.

4. Response Generation: The agent compiles the results and provides the final response to the user.

c.Key Elements of an Agent:

• Language Model (LLM): The underlying model (GPT-4) that processes and generates responses.

• Tools: Functions that perform specific tasks.

• Prompt Template: Instructions that guide the agent’s behavior.

• Executor: Manages the interaction between the agent and its tools.

• Language Model (LLM): The underlying model (GPT-4) that processes and generates responses.

• Tools: Functions that perform specific tasks.

• Prompt Template: Instructions that guide the agent’s behavior.

• Executor: Manages the interaction between the agent and its tools.

6. Handling User Input and Displaying Results

if prompt := st.chat_input():

st.chat_message("user").write(prompt)

with st.chat_message("assistant"):

st_callback = StreamlitCallbackHandler(st.container())

response = agent_executor.invoke(

{"input": prompt},

{"callbacks": [st_callback]}

)

if isinstance(response["output"], pd.DataFrame):

st.dataframe(response["output"]) # Display data frame with interactive features

else:

st.write(response["output"])

Running the Application

1.Start the Streamlit App:

streamlit run your_script_name.py

2.Interact with the Chatbot:

• Open the provided local URL in your browser.

• Enter natural language queries related to your database.

• Receive data tables and visualizations in response.

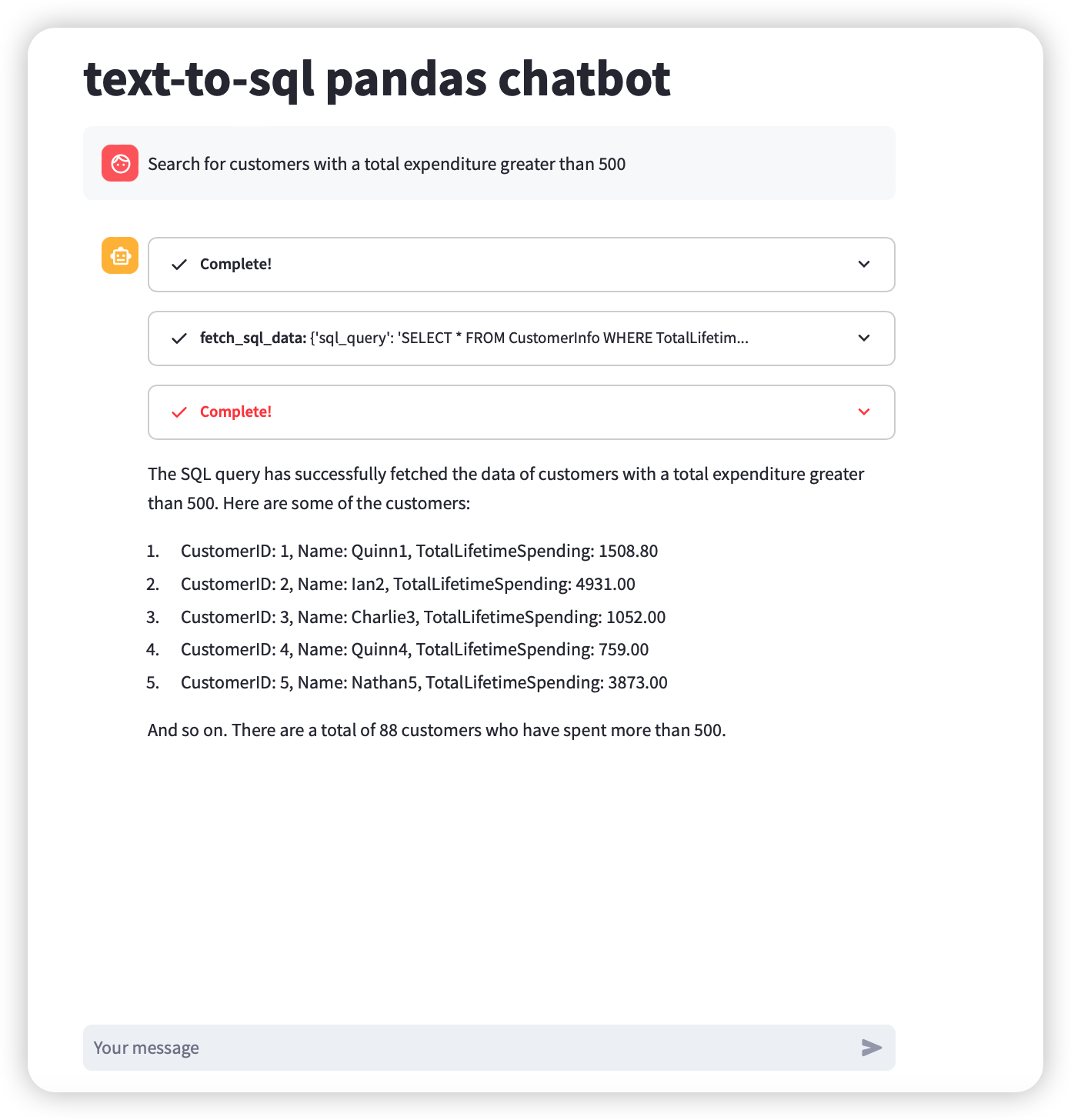

for example:

sql data fetch

question:Search for customers with a total expenditure greater than 500

it show that agent successfully choose the right function and successfully execute it

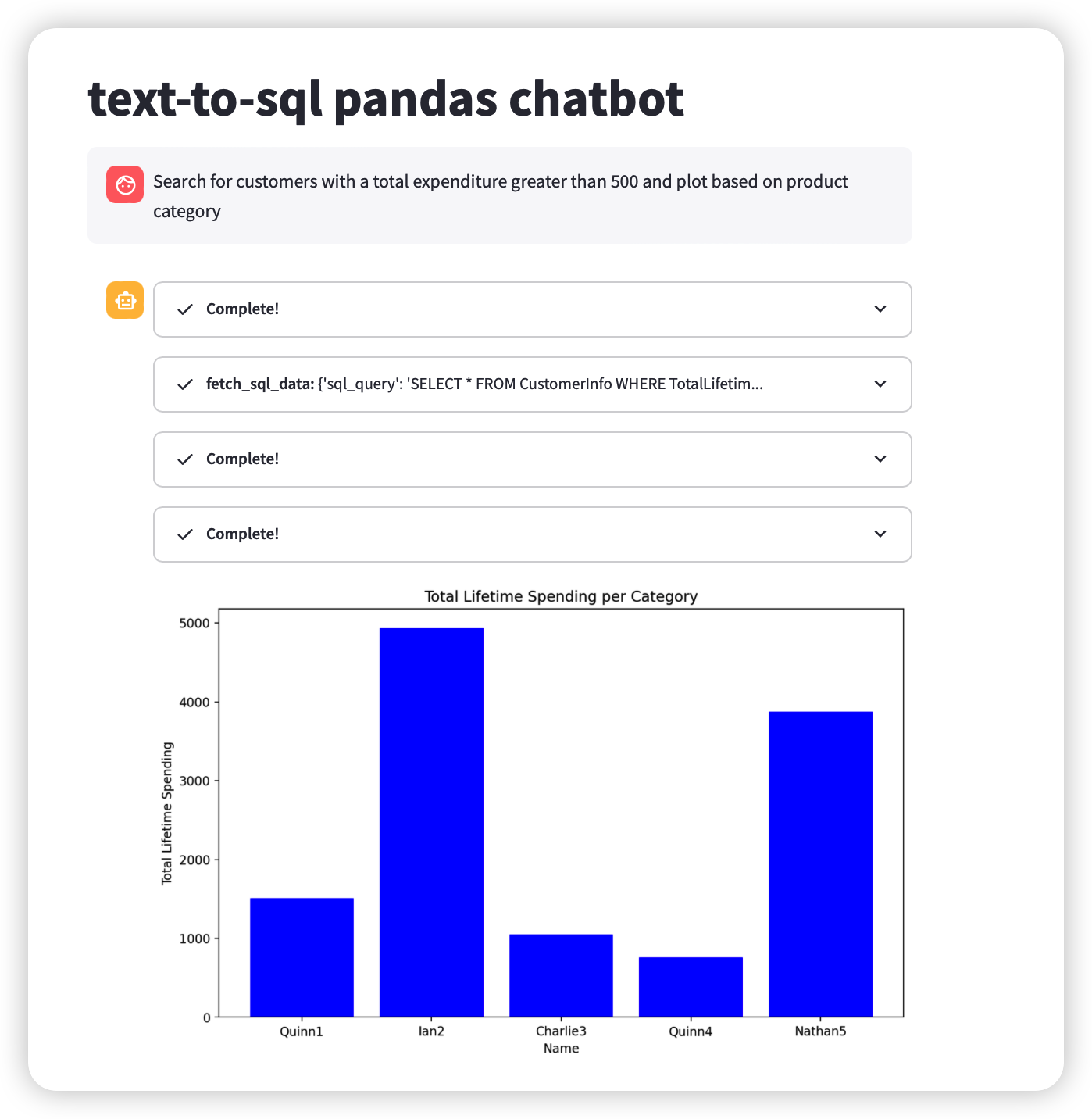

python visualization

question:Search for customers with a total expenditure greater than 500 and plot based on product category

it show that agent successfully choose the right function include sql function and python function and successfully execute it

浙公网安备 33010602011771号

浙公网安备 33010602011771号