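Elasticsearch-01: Setting Up the ELK Stack and Basic Usage

0. Preparation

1). Requirements: CentOS 7, at least 2 GB of RAM recommended, and JDK 8 already installed.

2). Add a user named elk (Elasticsearch 6+ refuses to start as root) and grant that user sudo privileges.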

su root

adduser elk    # add the user

passwd elk     # set the user's password

whereis sudoers

ls -l /etc/sudoers

chmod -v u+w /etc/sudoers

vim /etc/sudoers

## Allow root to run any commands anywhere

root ALL=(ALL) ALL

elk ALL=(ALL) ALL    # the newly added user

chmod -v u-w /etc/sudoers

su elk
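
Before moving on, it can help to confirm that the sudoers entry was actually saved. The following is a minimal sketch, not part of the original steps: the helper name `has_full_sudo` is hypothetical, and it only looks for a line of the form `<user> ALL=(ALL) ALL`, so it does not replace a real syntax check.

```shell
#!/bin/sh
# has_full_sudo <user> [sudoers-file]   (hypothetical helper name)
# Succeeds if the file contains a line that starts with "<user> ALL=(ALL) ALL".
# The file defaults to /etc/sudoers, which normally requires root to read.
has_full_sudo() {
    grep -Eq "^$1[[:space:]]+ALL=\(ALL\)[[:space:]]+ALL" "${2:-/etc/sudoers}"
}
```

Run as root, `has_full_sudo elk && echo "elk has sudo"` should print the message once the entry is in place. For a syntax check of the file itself, `visudo -c` is the usual tool.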

1. Install elasticsearch-6.3.1

1). Create the installation directory

su elk

mkdir -p /home/elk/elk

2). Download the Elasticsearch package

cd /home/elk/elk

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.3.1.tar.gz

3). Extract the package

cd /home/elk/elk

tar -zxvf elasticsearch-6.3.1.tar.gz

4). Edit the configuration files

cd /home/elk/elk/elasticsearch-6.3.1/config

vim jvm.options

vim elasticsearch.yml

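In jvm.options, change -Xms1g and -Xmx1g to values that suit the current machine (the option names are case-sensitive).

In elasticsearch.yml, set the network binding: network.host: 192.168.188.133 (the IP address of the current virtual machine)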
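
The text leaves the heap size to the reader. A commonly cited rule of thumb, which is an assumption here and not something the original states, is to give Elasticsearch about half of the machine's RAM, keep -Xms equal to -Xmx, and stay below roughly 32 GB. The sketch below turns that rule into a small calculation; the function name `suggest_heap_mb` and the 31 GB cap are illustrative choices.

```shell
#!/bin/sh
# suggest_heap_mb <total-ram-mb>   (hypothetical helper name)
# Prints half of the given RAM in MB, capped at 31744 MB (31 GB).
suggest_heap_mb() {
    half=$(( $1 / 2 ))
    cap=31744
    if [ "$half" -gt "$cap" ]; then
        half=$cap
    fi
    echo "$half"
}
```

On the 2 GB machine from the preparation step, `suggest_heap_mb 2048` gives 1024, which corresponds to the default -Xms1g -Xmx1g. The total can be read on Linux with `awk '/MemTotal/ {print int($2/1024)}' /proc/meminfo`.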

5). Try starting Elasticsearch

su elk

cd /home/elk/elk/elasticsearch-6.3.1/bin

./elasticsearch

If startup fails with either of the following errors:

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]

[2]: max virtual memory areas vm.max_map_count [65536] is too low, increase to at least [262144]

Fix for [1]:

sudo su    # or: su root

vim /etc/security/limits.conf

* soft nofile 65536

* hard nofile 131072

* hard nproc 4096

* soft nproc 4096

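Limits set in limits.conf apply only to new login sessions, so log out and back in as elk, then check the result with: ulimit -n

Fix for [2]:

sudo su    # or: su root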

vim /etc/sysctl.conf

vm.max_map_count=655360

fs.file-max=655360

sysctl -p

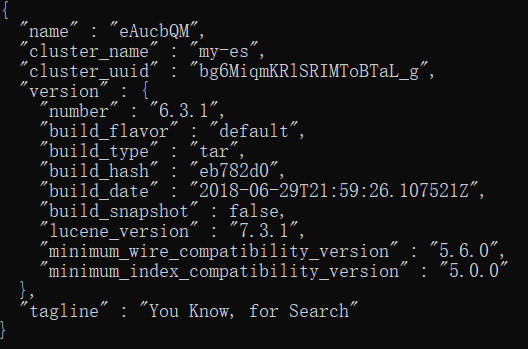
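
The two fixes above can be checked against the thresholds that appear in the error messages. This is a rough sketch: the function name `meets_minimum` is hypothetical, and the thresholds are simply the numbers quoted in errors [1] and [2].

```shell
#!/bin/sh
# meets_minimum <name> <current> <required>   (hypothetical helper name)
# Prints a status line and fails when the current value is below the minimum.
# "unlimited", as ulimit can report, is treated as sufficient.
meets_minimum() {
    if [ "$2" = "unlimited" ] || [ "$2" -ge "$3" ]; then
        echo "OK   $1 = $2 (need >= $3)"
    else
        echo "LOW  $1 = $2 (need >= $3)"
        return 1
    fi
}
```

As the elk user, `meets_minimum nofile "$(ulimit -n)" 65536` and `meets_minimum vm.max_map_count "$(sysctl -n vm.max_map_count)" 262144` should both print an OK line before Elasticsearch is started again.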

6). Verify that startup succeeded

Open http://192.168.188.133:9200 in a browser, or request it with curl:

curl http://192.168.188.133:9200
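
A successful request returns a small JSON document describing the node. As a sketch, the version can be pulled out of that response to confirm that the expected release is running. The helper name `es_version` is hypothetical, and it assumes the response contains a `"number"` field under `"version"`, which is how Elasticsearch 6.x reports it.

```shell
#!/bin/sh
# es_version   (hypothetical helper name)
# Reads the JSON returned by GET / on stdin and prints the first "number" value.
es_version() {
    sed -n 's/.*"number"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}
```

For this installation, `curl -s http://192.168.188.133:9200 | es_version` would be expected to print 6.3.1.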

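7). Common ports

9200: HTTP port. Clients send requests to Elasticsearch on this port and receive data back as JSON.

9300: TCP transport port used for communication between the nodes of a cluster.

8). Start in the background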

su elk

cd /home/elk/elk/elasticsearch-6.3.1/bin

nohup ./elasticsearch &

Alternatively, instead of running the command directly, wrap it in a script:

vim start.sh

#!/bin/sh

nohup ./elasticsearch &

chmod +x start.sh

./start.sh
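
A bare `nohup ... &` leaves no record of the process ID, which makes the service awkward to stop or check later. The sketch below is one possible way to handle that, assuming a PID file is acceptable; the function names `start_bg`, `is_running` and `stop_bg` are hypothetical and not part of Elasticsearch.

```shell
#!/bin/sh
# start_bg <pidfile> <logfile> <command> [args...]   (hypothetical helper)
# Starts the command detached from the terminal and records its PID.
start_bg() {
    pidfile=$1
    logfile=$2
    shift 2
    nohup "$@" >"$logfile" 2>&1 &
    echo "$!" >"$pidfile"
}

# is_running <pidfile>: succeeds if the recorded process is still alive.
is_running() {
    [ -f "$1" ] && kill -0 "$(cat "$1")" 2>/dev/null
}

# stop_bg <pidfile>: sends SIGTERM to the recorded process and removes the PID file.
stop_bg() {
    kill "$(cat "$1")" && rm -f "$1"
}
```

From the bin directory this could be used as `start_bg es.pid es.log ./elasticsearch`, followed later by `is_running es.pid` or `stop_bg es.pid`. Elasticsearch also has a built-in daemon mode, `./elasticsearch -d -p es.pid`, which covers the same need without a wrapper.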

2. Install kibana-6.3.1

1). Download the package

su elk

cd /home/elk/elk

wget https://artifacts.elastic.co/downloads/kibana/kibana-6.3.1-linux-x86_64.tar.gz

2). Extract the package

su elk

cd /home/elk/elk

tar -zxvf kibana-6.3.1-linux-x86_64.tar.gz

3). Edit the configuration file

su elk

cd /home/elk/elk/kibana-6.3.1-linux-x86_64/config

vim kibana.yml

server.port: 5601

server.host: 192.168.188.133

elasticsearch.url: "http://192.168.188.133:9200"

kibana.index: ".kibana"

4). Start the Kibana server

cd /home/elk/elk/kibana-6.3.1-linux-x86_64/bin

nohup ./kibana &

5). From a browser on the Windows host, open http://192.168.188.133:5601

3. Install logstash-6.3.1

1. Download the package

su elk

cd /home/elk/elk

wget https://artifacts.elastic.co/downloads/logstash/logstash-6.3.1.tar.gz

2. Extract the package

su elk

cd /home/elk/elk

tar -zxvf logstash-6.3.1.tar.gz

3. Edit the configuration files

Goal: receive the data collected by Filebeat and forward it to Elasticsearch

su elk

cd /home/elk/elk/logstash-6.3.1/config

vim logstash.yml

path.data: /home/elk/elk/data/logstash

path.config: /home/elk/elk/conf/logstash/*.conf

path.logs: /home/elk/elk/logs/logstash/

http.port: 5044

mkdir -p /home/elk/elk/data/logstash

mkdir -p /home/elk/elk/logs/logstash/

mkdir -p /home/elk/elk/conf/logstash/

# Logstash listens on port 5045 for events that Filebeat sends over the Beats protocol (Filebeat tails the project's log files in real time)

vim /home/elk/elk/conf/logstash/logstash.conf

input {
  beats {
    port => 5045
    codec => json
  }
}

filter {
  if [fields][service] == "zhboot" {
    date {
      match => [ "requestTime", "yyyy-MM-dd HH:mm:ss" ]
      target => "@timestamp"
    }
    mutate {
      remove_field => [
        "parent", "meta", "trace", "tags", "prospector", "span",
        "fields", "severity", "@version", "exportable", "input",
        "pid", "thread", "beat", "host", "offset", "log"
      ]
    }
  }
}

output {
  elasticsearch {
    hosts => ["192.168.188.133:9200"]
    index => "%{[esindex]}_%{+YYYYMM}"
  }
}
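
The index pattern in the output section joins the event's esindex field with the year and month of @timestamp, so each service gets one index per month. The sketch below mimics that naming outside Logstash to make the result concrete. The function name `index_name` and the example esindex value are hypothetical, and the timestamp is assumed to be in UTC already, since Logstash formats `%{+YYYYMM}` from the UTC @timestamp.

```shell
#!/bin/sh
# index_name <esindex> <utc-timestamp "yyyy-MM-dd HH:mm:ss">   (hypothetical helper)
# Prints "<esindex>_<YYYYMM>", mirroring the pattern "%{[esindex]}_%{+YYYYMM}".
index_name() {
    year=$(printf '%s' "$2" | cut -c1-4)
    month=$(printf '%s' "$2" | cut -c6-7)
    printf '%s_%s%s\n' "$1" "$year" "$month"
}
```

For an event with esindex set to zhboot and a timestamp in October 2020, `index_name zhboot '2020-10-19 07:22:37'` prints zhboot_202010. Events near a month boundary may land in the neighbouring month's index when local time and UTC differ.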

4. Start Logstash

su elk

cd /home/elk/elk/logstash-6.3.1/bin

nohup ./logstash &

4. Install Filebeat-6.3.1 on CentOS 7

1. Download Filebeat from the official site

https://www.elastic.co/cn/downloads/past-releases/filebeat-6-3-1

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.3.1-linux-x86_64.tar.gz

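2. Upload it to the CentOS server (if it was downloaded on another machine)

a). Using the rz/sz tools (lrzsz)

sudo su

yum install lrzsz

rz    # opens the upload dialog

su elk

b). Using an FTP client

3. Extract the package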

su elk

cd /home/elk/elk

tar -zxvf filebeat-6.3.1-linux-x86_64.tar.gz

4. Edit the Filebeat configuration file

cd /home/elk/elk/filebeat-6.3.1-linux-x86_64

mv filebeat.yml filebeat.yml.bak

vim filebeat.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /logs/web-log/*.json
  fields:
    service: zhboot

filebeat.config.modules:
  path: /home/elk/elk/filebeat-6.3.1-linux-x86_64/modules.d/*.yml
  reload.enabled: false

output.logstash:
  hosts: ["192.168.188.133:5045"]

processors:
  - add_host_metadata: ~
  - add_cloud_metadata: ~

# Filebeat watches every .json file under /logs/web-log and ships the data to Logstash, which processes and formats it before storing it in Elasticsearch; Kibana then displays it
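
To exercise this pipeline before the real application is deployed, a hand-written event can be appended to a file that Filebeat watches. The sketch below assumes one JSON object per line and uses only the two field names that the Logstash configuration refers to (esindex and requestTime). The function name, the message field, and the sample values are hypothetical, and the real application's log format may contain more fields.

```shell
#!/bin/sh
# write_sample_event <file> <esindex> <requestTime>   (hypothetical helper)
# Appends one JSON line with requestTime in the "yyyy-MM-dd HH:mm:ss" form
# that the Logstash date filter expects.
write_sample_event() {
    printf '{"esindex":"%s","requestTime":"%s","message":"sample event"}\n' "$2" "$3" >>"$1"
}
```

For example, `write_sample_event /logs/web-log/sample.json zhboot "$(date '+%Y-%m-%d %H:%M:%S')"` adds one line. If the whole chain is running, a document should then show up in an index named after the esindex value and the current month. The configuration file itself can be checked with `./filebeat test config -c filebeat.yml`.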

5. Create a start script

vim start.sh

#!/bin/sh

nohup ./filebeat -e -c filebeat.yml > filebeat.log 2>&1 &

chmod +x start.sh

6. Start the service

./start.sh

7. Check the service: a log entry like the following indicates a successful start

tail -n 100 -f filebeat.log

2020-10-19T15:22:37.643+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":10,"time":{"ms":2}}

,"total":{"ticks":20,"time":{"ms":2},"value":20},"user":{"ticks":10}},"handles":{"limit":{"hard":100002,"soft":100001},"open":5},"info":{"ephemeral_id":"88a4f644-752e-45d5-84ea-8aa67234ecec",

"uptime":{"ms":60013}},"memstats":{"gc_next":4194304,"memory_alloc":2787928,"memory_total":7048880},"runtime":{"goroutines":20}},"filebeat":{"harvester":{"open_files":0,"running":0}},

"libbeat":{"config":{"module":{"running":0}},"pipeline":{"clients":1,"events":{"active":0}}},"registrar":{"states":{"current":0}},"system":{"load":{"1":0.07,"15":0.09,"5":0.12,

"norm":{"1":0.035,"15":0.045,"5":0.06}}}}}}

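5. Run the Spring Boot project on CentOS 7

1. About the project: a Spring Boot application that uses an interceptor plus the log4j logging framework to write the business request data to be collected into /logs/web-log/web.json. Filebeat and Logstash ship that data into Elasticsearch, where it can be viewed through Kibana.

2. Package the Spring Boot project as a jar with: mvn clean compile package -Dmaven.test.skip=true -P dev

3. Create a directory on the CentOS server: mkdir -p /data/elk/project

4. Upload the jar to the CentOS server and start it with the following commands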

[root@VM-16-13-centos project]# vim start.sh

#!/bin/bash

nohup java -server -Xms512m -Xmx512m -Xss512k -jar spring-boot-web.jar >/dev/null 2>&1 &

[root@VM-16-13-centos project]# chmod +x start.sh

[root@VM-16-13-centos project]# ./start.sh

[root@VM-16-13-centos project]# ps -ef | grep spring-boot-web | grep -v grep

root 1058 1 2 09:32 pts/0 00:00:09 java -server -Xms512m -Xmx512m -Xss512k -jar spring-boot-web.jar

5. Call the project's endpoints to generate logs

curl "http://192.168.188.133:8500/order" -H "Content-Type:application/json" -X POST -d "{\"booleanLombok\":true,\"intLombok\":1,\"personLombok\":{\"age\":25,\"name\":\"Tom\"},\"strLombok\":\"str\"}"

curl -H "Content-Type:application/json" -X POST -d "{\"divisor\":112,\"name\":\"张三\"}" "http://192.168.188.133:8500/error/log"

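6. Install the IK Chinese analyzer for Elasticsearch

1. Go to the plugins directory under the Elasticsearch installation

su elk

cd /home/elk/elk/elasticsearch-6.3.1/plugins

mkdir ik

cd ik

2. Download the IK analyzer release that matches the Elasticsearch version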

wget https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v6.3.1/elasticsearch-analysis-ik-6.3.1.zip

3. Extract the package

unzip elasticsearch-analysis-ik-6.3.1.zip

rm -f elasticsearch-analysis-ik-6.3.1.zip

4. Restart Elasticsearch. If the log prints a message like the one below, the plugin was installed successfully

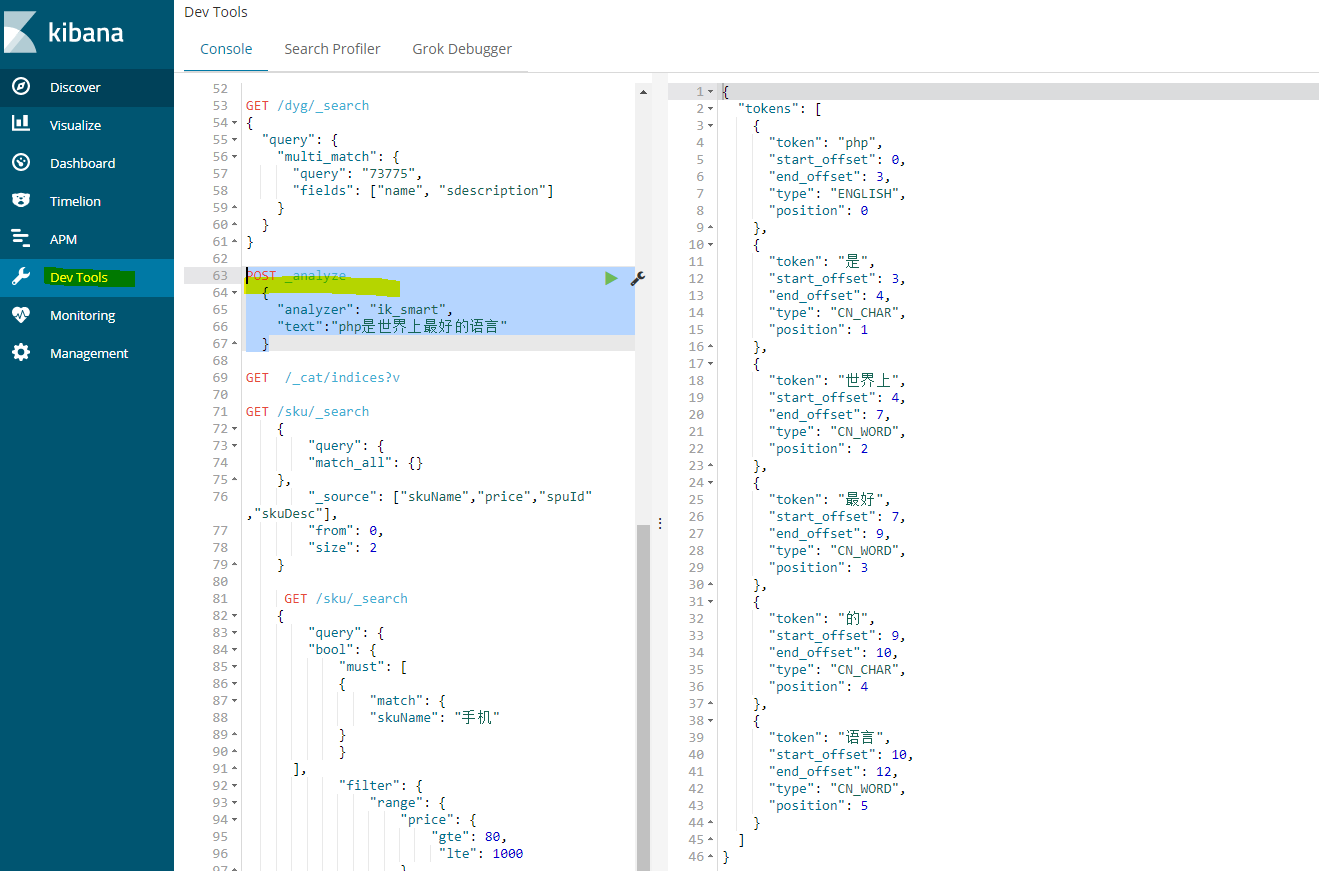

5. Test Chinese word segmentation from the Kibana Dev Tools console

POST _analyze

{

"analyzer": "ik_smart",

"text":"php是世界上最好的语言"

}
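
The same request can be sent without Kibana by posting to the _analyze endpoint with curl. This is a minimal sketch: the function names `analyze_body` and `analyze` are hypothetical, the URL defaults to the address used throughout this post, and the body builder does no JSON escaping, so the text must not contain double quotes or backslashes.

```shell
#!/bin/sh
# Base URL of the Elasticsearch node; override by exporting ES_URL.
ES_URL=${ES_URL:-http://192.168.188.133:9200}

# analyze_body <analyzer> <text>   (hypothetical helper)
# Builds the JSON request body for the _analyze API.
analyze_body() {
    printf '{"analyzer":"%s","text":"%s"}' "$1" "$2"
}

# analyze <analyzer> <text>   (hypothetical helper)
# Posts the body to the _analyze endpoint and prints the raw JSON response.
analyze() {
    curl -s -H 'Content-Type: application/json' -X POST "$ES_URL/_analyze" -d "$(analyze_body "$1" "$2")"
}
```

Running `analyze ik_smart 'php是世界上最好的语言'` should return a list of tokens if the plugin loaded. Repeating the call with `ik_max_word`, the other analyzer the IK plugin provides, gives a finer-grained split for comparison.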

7. Environment

1. Windows (Windows 10)

2. VMware10.0.1

3. Centos 7.4

4. Xshell5

5. Elasticsearch 6.3.1

6. Kibana 6.3.1

7. Logstash 6.3.1

8. Filebeat 6.3.1

9. Spring Boot project (version 2.3.0.RELEASE)

TODO: Kibana visualization charts https://www.elastic.co/guide/cn/kibana/current/tutorial-visualizing.html
