Batch-configuring CentOS 7 base system settings with Ansible

1. Server list

| IP | Memory (GB) | CPU cores | Disk | OS | CPU architecture | Role |
|---|---|---|---|---|---|---|
| 10.0.0.13 | 8 | 1 | 500GB | CentOS 7.9.2009 | x86_64 | Ansible control node + managed node |
| 10.0.0.14 | 8 | 1 | 500GB | CentOS 7.9.2009 | x86_64 | Managed node |
| 10.0.0.15 | 8 | 1 | 500GB | CentOS 7.9.2009 | x86_64 | Managed node |
| 10.0.0.16 | 16 | 2 | 500GB | CentOS 7.9.2009 | x86_64 | Managed node |
| 10.0.0.17 | 16 | 2 | 500GB | CentOS 7.9.2009 | x86_64 | Managed node |
| 10.0.0.18 | 16 | 2 | 500GB | CentOS 7.9.2009 | x86_64 | Managed node |
| 10.0.0.19 | 16 | 2 | 500GB | CentOS 7.9.2009 | x86_64 | Managed node |
| 10.0.0.20 | 16 | 2 | 500GB | CentOS 7.9.2009 | x86_64 | Managed node |
| 10.0.0.21 | 16 | 2 | 500GB | CentOS 7.9.2009 | x86_64 | Managed node |

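Notes:

- Every server has an administrator account named admin, which can run sudo commands without a password;

- All steps are performed as the admin user.

2. Installing and deploying Ansible

2.1 Install Ansible on the control node

Run the following on a CentOS 7 server that has internet access: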

$ pwd

/home/admin

$ mkdir -p package

$ sudo yum install -y yum-utils

$ yumdownloader --resolve --destdir=package/ansible ansible

$ cd package

$ tar -zcvf ansible.tar.gz ansible

Download ansible.tar.gz to your local machine, then upload it to the /home/admin directory on 10.0.0.13.

On 10.0.0.13:

$ pwd

/home/admin

$ tar -zxvf ansible.tar.gz

$ sudo yum install -y ansible/*.rpm

$ rm -rf ansible.tar.gz ansible/*

$ mkdir -p ansible/system

If 10.0.0.13 has internet access and the Aliyun base + EPEL YUM repositories are already configured, install directly instead:

$ sudo yum install -y ansible

$ cd ~

$ mkdir -p ansible/system

2.2 Passwordless SSH across the cluster

On 10.0.0.13:

$ pwd

/home/admin

$ cd ansible

$ tee ansible.cfg > /dev/null << 'EOF'

[defaults]

inventory=./hosts

host_key_checking=False

EOF

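# Note

# In production, servers are usually provisioned with the same administrator account, but the password for that account is very likely different on each server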

$ tee hosts > /dev/null << 'EOF'

[cluster]

10.0.0.13 ansible_password=123456

10.0.0.14 ansible_password=123456

10.0.0.15 ansible_password=123456

10.0.0.16 ansible_password=123456

10.0.0.17 ansible_password=123456

10.0.0.18 ansible_password=123456

10.0.0.19 ansible_password=123456

10.0.0.20 ansible_password=123456

10.0.0.21 ansible_password=123456

[cluster:vars]

ansible_user=admin

EOF

Create /home/admin/ansible/system/ssh-key.yml with the following content:

---
- name: Ensure SSH key exists on Ansible control node (localhost)
  hosts: localhost
  gather_facts: false
  become: false
  vars:
    admin_user: "admin"
    ssh_key_file: "~/.ssh/id_rsa"
  tasks:
    - name: Check if SSH private key exists on localhost
      stat:
        path: "{{ ssh_key_file }}"
      register: key_stat

    - name: Ensure .ssh directory exists
      file:
        path: "~/.ssh"
        state: directory
        owner: "{{ admin_user }}"
        group: "{{ admin_user }}"
        mode: '0700'

    - name: Generate SSH key on localhost if not exists
      openssh_keypair:
        path: "{{ ssh_key_file }}"
        type: rsa
        size: 4096
        owner: "{{ admin_user }}"
        group: "{{ admin_user }}"
        mode: "0600"
      when: not key_stat.stat.exists

    - name: Read public key from localhost
      slurp:
        src: "{{ ssh_key_file }}.pub"
      register: manager_pubkey

- name: Install public key to all cluster nodes
  hosts: cluster
  become: false
  gather_facts: false
  vars:
    admin_user: "admin"
    ssh_key_file: "~/.ssh/id_rsa"
    manager_pubkey_content: "{{ hostvars['localhost']['manager_pubkey'].content | b64decode }}"
  tasks:
    - name: Ensure .ssh directory exists
      file:
        path: "~/.ssh"
        state: directory
        owner: "{{ admin_user }}"
        group: "{{ admin_user }}"
        mode: '0700'

    - name: Authorize SSH key on all nodes
      authorized_key:
        user: "{{ admin_user }}"
        state: present
        key: "{{ manager_pubkey_content }}"

    - shell: whoami
      register: result

    - debug:
        msg: "{{ result.stdout }}"

$ pwd

/home/admin/ansible

$ ansible-playbook system/ssh-key.yml

# Key output

TASK [debug] **********************...

ok: [10.0.0.13] => {

"msg": "admin"

}

ok: [10.0.0.15] => {

"msg": "admin"

}

ok: [10.0.0.14] => {

"msg": "admin"

}

ok: [10.0.0.16] => {

"msg": "admin"

}

ok: [10.0.0.17] => {

"msg": "admin"

}

ok: [10.0.0.18] => {

"msg": "admin"

}

ok: [10.0.0.19] => {

"msg": "admin"

}

ok: [10.0.0.20] => {

"msg": "admin"

}

ok: [10.0.0.21] => {

"msg": "admin"

}

$ cp hosts hosts-backup-1

$ sed -i 's/[[:space:]]*ansible_password=[^ ]*//g' hosts

# Test passwordless SSH and privilege escalation together

$ ansible all -a "whoami" -b

10.0.0.14 | CHANGED | rc=0 >>

root

10.0.0.16 | CHANGED | rc=0 >>

root

10.0.0.17 | CHANGED | rc=0 >>

root

10.0.0.15 | CHANGED | rc=0 >>

root

10.0.0.13 | CHANGED | rc=0 >>

root

10.0.0.18 | CHANGED | rc=0 >>

root

10.0.0.20 | CHANGED | rc=0 >>

root

10.0.0.19 | CHANGED | rc=0 >>

root

10.0.0.21 | CHANGED | rc=0 >>

root
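
The `sed` expression used above to strip `ansible_password` from the inventory can be tried on sample lines before running it against the real `hosts` file. The following is a minimal sketch; the function name `strip_password` and the sample lines are illustrative assumptions, not part of the original setup.

```shell
#!/usr/bin/env bash
# strip_password (hypothetical helper): read inventory lines on stdin and remove
# any "ansible_password=..." token together with the whitespace in front of it.
# The sed expression is the same one applied to the hosts file above.
strip_password() {
  sed 's/[[:space:]]*ansible_password=[^ ]*//g'
}

# Sample inventory lines; the password value is a placeholder.
printf '%s\n' \
  '10.0.0.13 ansible_password=123456' \
  'ansible_user=admin' | strip_password
```

Lines without an `ansible_password` token pass through unchanged, so `[cluster:vars]` and `ansible_user=admin` are left intact.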

3. Cluster system configuration

3.1 Set hostnames

Note: in production, newly provisioned servers usually come with hostnames made of random characters, which makes the cluster harder to manage.

Goal: rename every machine in the cluster (10.0.0.{13..21}) according to the plan below:

| IP | Hostname |
|---|---|
| 10.0.0.13 | arc-pro-dc01 |
| 10.0.0.14 | arc-pro-dc02 |
| 10.0.0.15 | arc-pro-dc03 |
| 10.0.0.16 | arc-pro-dc04 |
| 10.0.0.17 | arc-pro-dc05 |
| 10.0.0.18 | arc-pro-dc06 |
| 10.0.0.19 | arc-pro-dc07 |
| 10.0.0.20 | arc-pro-dc08 |
| 10.0.0.21 | arc-pro-dc09 |
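
In this plan the hostname suffix follows the last octet of the IP (13 maps to 01, 21 maps to 09). As a rough sketch, the mapping could be generated rather than typed by hand; the helper name `planned_hostname` and the offset of 12 are assumptions derived from this particular table and would need adjusting for a different address range.

```shell
#!/usr/bin/env bash
# planned_hostname (hypothetical helper): derive the planned hostname from the
# last octet of an IP address, assuming the 10.0.0.13..21 -> dc01..dc09 plan.
planned_hostname() {
  local ip="$1"
  local last_octet="${ip##*.}"          # text after the final dot
  printf 'arc-pro-dc%02d\n' "$((last_octet - 12))"
}

# Print the full IP-to-hostname plan.
for i in $(seq 13 21); do
  ip="10.0.0.$i"
  printf '%s %s\n' "$ip" "$(planned_hostname "$ip")"
done
```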

On 10.0.0.13:

Create /home/admin/ansible/system/set-hostname.yml with the following content:

---
- name: Set hostnames for cluster nodes
  hosts: cluster
  gather_facts: false
  become: true
  vars:
    hostnames:
      "10.0.0.13": "arc-pro-dc01"
      "10.0.0.14": "arc-pro-dc02"
      "10.0.0.15": "arc-pro-dc03"
      "10.0.0.16": "arc-pro-dc04"
      "10.0.0.17": "arc-pro-dc05"
      "10.0.0.18": "arc-pro-dc06"
      "10.0.0.19": "arc-pro-dc07"
      "10.0.0.20": "arc-pro-dc08"
      "10.0.0.21": "arc-pro-dc09"
  tasks:
    - name: Set the hostname
      hostname:
        name: "{{ hostnames[inventory_hostname] }}"

    - shell: hostname
      register: result

    - debug:
        msg: "{{ result.stdout }}"

$ pwd

/home/admin/ansible

$ ansible-playbook system/set-hostname.yml

TASK [debug] ******************************...

ok: [10.0.0.15] => {

"msg": "arc-pro-dc03"

}

ok: [10.0.0.13] => {

"msg": "arc-pro-dc01"

}

ok: [10.0.0.14] => {

"msg": "arc-pro-dc02"

}

ok: [10.0.0.16] => {

"msg": "arc-pro-dc04"

}

ok: [10.0.0.17] => {

"msg": "arc-pro-dc05"

}

ok: [10.0.0.18] => {

"msg": "arc-pro-dc06"

}

ok: [10.0.0.19] => {

"msg": "arc-pro-dc07"

}

ok: [10.0.0.20] => {

"msg": "arc-pro-dc08"

}

ok: [10.0.0.21] => {

"msg": "arc-pro-dc09"

}

3.2 Update the /etc/hosts file

Goal: on every machine in the cluster (10.0.0.{13..21}), update /etc/hosts so that it contains the IP-to-hostname mappings for all 9 servers in the cluster.

On 10.0.0.13:

Create /home/admin/ansible/system/set-hosts.yml with the following content:

---
- name: Ensure expected hosts entries exist on target machine
  hosts: cluster
  become: true
  gather_facts: false
  vars:
    hosts_map:
      - { ip: "10.0.0.13", name: "arc-pro-dc01" }
      - { ip: "10.0.0.14", name: "arc-pro-dc02" }
      - { ip: "10.0.0.15", name: "arc-pro-dc03" }
      - { ip: "10.0.0.16", name: "arc-pro-dc04" }
      - { ip: "10.0.0.17", name: "arc-pro-dc05" }
      - { ip: "10.0.0.18", name: "arc-pro-dc06" }
      - { ip: "10.0.0.19", name: "arc-pro-dc07" }
      - { ip: "10.0.0.20", name: "arc-pro-dc08" }
      - { ip: "10.0.0.21", name: "arc-pro-dc09" }
  tasks:
    - name: Backup existing /etc/hosts
      copy:
        src: /etc/hosts
        dest: /etc/hosts.bak
        remote_src: yes
        owner: root
        group: root
        mode: '0644'
      ignore_errors: yes  # avoid failing when the target node has no /etc/hosts file

    - name: Ensure each IP has its corresponding hostname
      shell: |
        cp /etc/hosts /etc/hosts.backup.`date +"%Y%m%d%H%M%S"`
        OL=$(egrep "^{{ item.ip }}" /etc/hosts)
        if [ "x${OL}" == "x" ]; then
          NL="{{ item.ip }} {{ item.name }}"
          echo $NL >> /etc/hosts
        else
          NL="{{ item.ip }}"
          echo "$OL" | while IFS= read -r line; do
            change="true"
            arr=(${line})
            for i in "${!arr[@]}"; do
              if [[ "${arr[$i]}" != "{{ item.ip }}" ]]; then
                if [[ "${arr[$i]}" == "{{ item.name }}" ]]; then
                  NL="${OL}"
                  change="false"
                  break
                else
                  NL="${NL} ${arr[$i]}"
                fi
              fi
            done
            if [[ "${change}" == "true" ]]; then
              NL="${NL} {{ item.name }}"
              sed -i "/^{{ item.ip }}/c\\${NL}" /etc/hosts
            fi
          done
        fi
      loop: "{{ hosts_map }}"

    - shell: cat /etc/hosts
      register: result

    - debug:
        msg: "{{ result.stdout }}"

$ pwd

/home/admin/ansible

$ ansible-playbook system/set-hosts.yml

# In the output, every machine's /etc/hosts contains the IP-to-hostname mappings for all 9 servers in the cluster

TASK [debug] **********************************************...

ok: [10.0.0.15] => {

"msg": "127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4\n::1 localhost localhost.localdomain localhost6 localhost6.localdomain6\n\n10.0.0.13\tarc-pro-dc01\n10.0.0.14\tarc-pro-dc02\n10.0.0.15\tarc-pro-dc03\n10.0.0.16\tarc-pro-dc04\n10.0.0.17\tarc-pro-dc05\n10.0.0.18\tarc-pro-dc06\n10.0.0.19\tarc-pro-dc07\n10.0.0.20\tarc-pro-dc08\n10.0.0.21\tarc-pro-dc09"

}

ok: [10.0.0.13] => {

"msg": "127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4\n::1 localhost localhost.localdomain localhost6 localhost6.localdomain6\n\n10.0.0.13\tarc-pro-dc01\n10.0.0.14\tarc-pro-dc02\n10.0.0.15\tarc-pro-dc03\n10.0.0.16\tarc-pro-dc04\n10.0.0.17\tarc-pro-dc05\n10.0.0.18\tarc-pro-dc06\n10.0.0.19\tarc-pro-dc07\n10.0.0.20\tarc-pro-dc08\n10.0.0.21\tarc-pro-dc09"

}

ok: [10.0.0.14] => {

"msg": "127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4\n::1 localhost localhost.localdomain localhost6 localhost6.localdomain6\n\n10.0.0.13\tarc-pro-dc01\n10.0.0.14\tarc-pro-dc02\n10.0.0.15\tarc-pro-dc03\n10.0.0.16\tarc-pro-dc04\n10.0.0.17\tarc-pro-dc05\n10.0.0.18\tarc-pro-dc06\n10.0.0.19\tarc-pro-dc07\n10.0.0.20\tarc-pro-dc08\n10.0.0.21\tarc-pro-dc09"

}

ok: [10.0.0.16] => {

"msg": "127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4\n::1 localhost localhost.localdomain localhost6 localhost6.localdomain6\n\n10.0.0.13\tarc-pro-dc01\n10.0.0.14\tarc-pro-dc02\n10.0.0.15\tarc-pro-dc03\n10.0.0.16\tarc-pro-dc04\n10.0.0.17\tarc-pro-dc05\n10.0.0.18\tarc-pro-dc06\n10.0.0.19\tarc-pro-dc07\n10.0.0.20\tarc-pro-dc08\n10.0.0.21\tarc-pro-dc09"

}

ok: [10.0.0.17] => {

"msg": "127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4\n::1 localhost localhost.localdomain localhost6 localhost6.localdomain6\n\n10.0.0.13\tarc-pro-dc01\n10.0.0.14\tarc-pro-dc02\n10.0.0.15\tarc-pro-dc03\n10.0.0.16\tarc-pro-dc04\n10.0.0.17\tarc-pro-dc05\n10.0.0.18\tarc-pro-dc06\n10.0.0.19\tarc-pro-dc07\n10.0.0.20\tarc-pro-dc08\n10.0.0.21\tarc-pro-dc09"

}

ok: [10.0.0.18] => {

"msg": "127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4\n::1 localhost localhost.localdomain localhost6 localhost6.localdomain6\n\n10.0.0.13\tarc-pro-dc01\n10.0.0.14\tarc-pro-dc02\n10.0.0.15\tarc-pro-dc03\n10.0.0.16\tarc-pro-dc04\n10.0.0.17\tarc-pro-dc05\n10.0.0.18\tarc-pro-dc06\n10.0.0.19\tarc-pro-dc07\n10.0.0.20\tarc-pro-dc08\n10.0.0.21\tarc-pro-dc09"

}

ok: [10.0.0.19] => {

"msg": "127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4\n::1 localhost localhost.localdomain localhost6 localhost6.localdomain6\n\n10.0.0.13\tarc-pro-dc01\n10.0.0.14\tarc-pro-dc02\n10.0.0.15\tarc-pro-dc03\n10.0.0.16\tarc-pro-dc04\n10.0.0.17\tarc-pro-dc05\n10.0.0.18\tarc-pro-dc06\n10.0.0.19\tarc-pro-dc07\n10.0.0.20\tarc-pro-dc08\n10.0.0.21\tarc-pro-dc09"

}

ok: [10.0.0.20] => {

"msg": "127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4\n::1 localhost localhost.localdomain localhost6 localhost6.localdomain6\n\n10.0.0.13\tarc-pro-dc01\n10.0.0.14\tarc-pro-dc02\n10.0.0.15\tarc-pro-dc03\n10.0.0.16\tarc-pro-dc04\n10.0.0.17\tarc-pro-dc05\n10.0.0.18\tarc-pro-dc06\n10.0.0.19\tarc-pro-dc07\n10.0.0.20\tarc-pro-dc08\n10.0.0.21\tarc-pro-dc09"

}

ok: [10.0.0.21] => {

"msg": "127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4\n::1 localhost localhost.localdomain localhost6 localhost6.localdomain6\n\n10.0.0.13\tarc-pro-dc01\n10.0.0.14\tarc-pro-dc02\n10.0.0.15\tarc-pro-dc03\n10.0.0.16\tarc-pro-dc04\n10.0.0.17\tarc-pro-dc05\n10.0.0.18\tarc-pro-dc06\n10.0.0.19\tarc-pro-dc07\n10.0.0.20\tarc-pro-dc08\n10.0.0.21\tarc-pro-dc09"

}

# Update the Ansible inventory file

$ cp hosts hosts-backup-2

$ tee hosts > /dev/null << 'EOF'

[cluster]

arc-pro-dc01

arc-pro-dc02

arc-pro-dc03

arc-pro-dc04

arc-pro-dc05

arc-pro-dc06

arc-pro-dc07

arc-pro-dc08

arc-pro-dc09

[cluster:vars]

ansible_user=admin

EOF

# Test

$ ansible all -a "hostname"

arc-pro-dc05 | CHANGED | rc=0 >>

arc-pro-dc05

arc-pro-dc04 | CHANGED | rc=0 >>

arc-pro-dc04

arc-pro-dc02 | CHANGED | rc=0 >>

arc-pro-dc02

arc-pro-dc03 | CHANGED | rc=0 >>

arc-pro-dc03

arc-pro-dc01 | CHANGED | rc=0 >>

arc-pro-dc01

arc-pro-dc06 | CHANGED | rc=0 >>

arc-pro-dc06

arc-pro-dc09 | CHANGED | rc=0 >>

arc-pro-dc09

arc-pro-dc08 | CHANGED | rc=0 >>

arc-pro-dc08

arc-pro-dc07 | CHANGED | rc=0 >>

arc-pro-dc07
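
The goal of this section, every node's /etc/hosts carrying all expected IP-to-hostname mappings, can also be checked per file with a small script. This is only a sketch: `has_mapping` is a hypothetical helper, and the demonstration runs against a temporary sample file rather than a real /etc/hosts.

```shell
#!/usr/bin/env bash
# has_mapping FILE IP NAME (hypothetical helper): succeed if FILE contains a
# line whose first field is exactly IP and one of whose other fields is NAME.
has_mapping() {
  awk -v ip="$2" -v name="$3" '
    $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
    END { exit found ? 0 : 1 }
  ' "$1"
}

# Demonstration on a temporary sample file that contains only one mapping.
sample="$(mktemp)"
printf '127.0.0.1 localhost\n10.0.0.13\tarc-pro-dc01\n' > "$sample"

while read -r ip name; do
  if has_mapping "$sample" "$ip" "$name"; then
    echo "ok: $ip $name"
  else
    echo "missing: $ip $name"
  fi
done <<'EOF'
10.0.0.13 arc-pro-dc01
10.0.0.14 arc-pro-dc02
EOF

rm -f "$sample"
```

Comparing whole fields avoids a prefix match such as `10.0.0.1` being taken for `10.0.0.13`.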

3.3 Disable the firewall

On 10.0.0.13:

Create /home/admin/ansible/system/disable-firewall.yml with the following content:

---
- name: Disable firewalld on all nodes
  hosts: cluster
  gather_facts: false
  become: true
  tasks:
    - name: Stop firewalld service
      systemd:
        name: firewalld
        state: stopped
        enabled: no

    - name: Check firewalld service status
      command: systemctl is-active firewalld
      register: firewalld_active
      ignore_errors: true

    - name: Check firewalld enabled status
      command: systemctl is-enabled firewalld
      register: firewalld_enabled
      ignore_errors: true

    - name: Display firewalld status
      debug:
        msg: |
          Host: {{ inventory_hostname }}
          firewalld active: {{ firewalld_active.stdout | default('unknown') }}
          firewalld enabled: {{ firewalld_enabled.stdout | default('unknown') }}

    - name: Fail if firewalld is running or enabled
      fail:
        msg: "Firewalld is still active or enabled on {{ inventory_hostname }}"
      when: firewalld_active.stdout == "active" or firewalld_enabled.stdout == "enabled"

$ pwd

/home/admin/ansible

$ ansible-playbook system/disable-firewall.yml

TASK [Display firewalld status] ***********************************************...

ok: [arc-pro-dc01] => {

"msg": "Host: arc-pro-dc01\nfirewalld active: unknown\nfirewalld enabled: disabled\n"

}

ok: [arc-pro-dc02] => {

"msg": "Host: arc-pro-dc02\nfirewalld active: unknown\nfirewalld enabled: disabled\n"

}

ok: [arc-pro-dc03] => {

"msg": "Host: arc-pro-dc03\nfirewalld active: unknown\nfirewalld enabled: disabled\n"

}

ok: [arc-pro-dc05] => {

"msg": "Host: arc-pro-dc05\nfirewalld active: unknown\nfirewalld enabled: disabled\n"

}

ok: [arc-pro-dc04] => {

"msg": "Host: arc-pro-dc04\nfirewalld active: unknown\nfirewalld enabled: disabled\n"

}

ok: [arc-pro-dc06] => {

"msg": "Host: arc-pro-dc06\nfirewalld active: unknown\nfirewalld enabled: disabled\n"

}

ok: [arc-pro-dc07] => {

"msg": "Host: arc-pro-dc07\nfirewalld active: unknown\nfirewalld enabled: disabled\n"

}

ok: [arc-pro-dc08] => {

"msg": "Host: arc-pro-dc08\nfirewalld active: unknown\nfirewalld enabled: disabled\n"

}

ok: [arc-pro-dc09] => {

"msg": "Host: arc-pro-dc09\nfirewalld active: unknown\nfirewalld enabled: disabled\n"

}

3.4 Disable SELinux

On 10.0.0.13:

Create /home/admin/ansible/system/disable-selinux.yml with the following content:

---
- name: Disable SELinux on all nodes
  hosts: cluster
  gather_facts: false
  become: true
  tasks:
    - name: Temporarily disable SELinux
      command: setenforce 0
      ignore_errors: true

    - name: Permanently disable SELinux in config
      lineinfile:
        path: /etc/selinux/config
        regexp: '^SELINUX='
        line: 'SELINUX=disabled'
        backup: yes

    - name: Check running SELinux status
      command: getenforce
      register: selinux_status

    - name: Check permanent SELinux status
      command: grep '^SELINUX=' /etc/selinux/config
      register: selinux_config

    - name: Display SELinux status
      debug:
        msg: |
          Host: {{ inventory_hostname }}
          Running SELinux status: {{ selinux_status.stdout }}
          Configured SELinux status: {{ selinux_config.stdout }}

$ pwd

/home/admin/ansible

$ ansible-playbook system/disable-selinux.yml

TASK [Display SELinux status] ****************************************************************...

ok: [arc-pro-dc01] => {

"msg": "Host: arc-pro-dc01\nRunning SELinux status: Disabled\nConfigured SELinux status: SELINUX=disabled\n"

}

ok: [arc-pro-dc04] => {

"msg": "Host: arc-pro-dc04\nRunning SELinux status: Disabled\nConfigured SELinux status: SELINUX=disabled\n"

}

ok: [arc-pro-dc03] => {

"msg": "Host: arc-pro-dc03\nRunning SELinux status: Disabled\nConfigured SELinux status: SELINUX=disabled\n"

}

ok: [arc-pro-dc02] => {

"msg": "Host: arc-pro-dc02\nRunning SELinux status: Disabled\nConfigured SELinux status: SELINUX=disabled\n"

}

ok: [arc-pro-dc05] => {

"msg": "Host: arc-pro-dc05\nRunning SELinux status: Disabled\nConfigured SELinux status: SELINUX=disabled\n"

}

ok: [arc-pro-dc06] => {

"msg": "Host: arc-pro-dc06\nRunning SELinux status: Disabled\nConfigured SELinux status: SELINUX=disabled\n"

}

ok: [arc-pro-dc07] => {

"msg": "Host: arc-pro-dc07\nRunning SELinux status: Disabled\nConfigured SELinux status: SELINUX=disabled\n"

}

ok: [arc-pro-dc08] => {

"msg": "Host: arc-pro-dc08\nRunning SELinux status: Disabled\nConfigured SELinux status: SELINUX=disabled\n"

}

ok: [arc-pro-dc09] => {

"msg": "Host: arc-pro-dc09\nRunning SELinux status: Disabled\nConfigured SELinux status: SELINUX=disabled\n"

}

3.5 Clock synchronization

3.5.1 A designated time server is available on the LAN

In production, the operations team usually provides an internal time server that every server in the company can use, or assigns dedicated time servers per department or project. Assume the time server's address is 11.11.11.11.

On 10.0.0.13:

Create /home/admin/ansible/system/ntp-sync.yml with the following content:

---
- name: Configure NTP time synchronization using ntpd
  hosts: cluster
  gather_facts: false
  become: true
  vars:
    ntp_server: "11.11.11.11"
  tasks:
    - name: Install NTP package
      yum:
        name: ntp
        state: present

    - name: Configure NTP server
      lineinfile:
        path: /etc/ntp.conf
        regexp: '^server'
        line: "server {{ ntp_server }} iburst"
        state: present
        backup: yes

    - name: Ensure ntpd service is enabled and started
      systemd:
        name: ntpd
        enabled: yes
        state: started

    - name: Force immediate time synchronization
      command: ntpdate -u {{ ntp_server }}
      ignore_errors: yes

    - name: Restart ntpd to apply changes
      systemd:
        name: ntpd
        state: restarted

    - name: Check ntpd service status
      systemd:
        name: ntpd
      register: ntp_status
      ignore_errors: yes

    - name: Show ntpd running status
      debug:
        msg: "Host {{ inventory_hostname }} ntpd is {{ 'running' if ntp_status.status.ActiveState == 'active' else 'stopped' }}"

    - name: Show ntpd enabled status
      debug:
        msg: "Host {{ inventory_hostname }} ntpd is {{ 'enabled' if ntp_status.status.UnitFileState == 'enabled' else 'disabled' }}"

    - name: Check NTP peers
      command: ntpq -p
      register: ntpq_output
      ignore_errors: yes

    - name: Show NTP peers
      debug:
        msg: "{{ ntpq_output.stdout_lines }}"

$ pwd

/home/admin/ansible

$ ansible-playbook system/ntp-sync.yml

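If the cluster is allowed to reach the internet, use Aliyun's time server directly by changing ntp_server in /home/admin/ansible/system/ntp-sync.yml:

---
- name: Configure NTP time synchronization using ntpd
  hosts: cluster
  gather_facts: false
  become: true
  vars:
    ntp_server: "ntp.aliyun.com"
...

Then run ansible-playbook system/ntp-sync.yml from /home/admin/ansible.

3.5.2 No time server is available on the LAN

Pick one server in the cluster as the time server (for example 10.0.0.13); the other servers synchronize their time from 10.0.0.13.

On 10.0.0.13:

Update the Ansible inventory file: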

$ pwd

/home/admin/ansible

$ cat <<EOF >> hosts

[ntp_server]

arc-pro-dc01

[ntp_client]

arc-pro-dc02

arc-pro-dc03

arc-pro-dc04

arc-pro-dc05

arc-pro-dc06

arc-pro-dc07

arc-pro-dc08

arc-pro-dc09

EOF

Create /home/admin/ansible/system/ntp-server.yml with the following content:

---
- name: Deploy and configure NTP server on management node
  hosts: ntp_server
  gather_facts: false
  become: true
  vars:
    allow_ip_scope: "10.0.0.0"
    mask: "255.255.255.0"
  tasks:
    - name: Install ntp package
      yum:
        name: ntp
        state: present

    - name: Enable and start ntpd service
      systemd:
        name: ntpd
        state: started
        enabled: yes

    - name: Configure ntp server
      lineinfile:
        path: /etc/ntp.conf
        regexp: '^restrict default'
        line: 'restrict default nomodify notrap nopeer noquery'
        state: present

    - lineinfile:
        path: /etc/ntp.conf
        regexp: '^server'
        line: 'server 127.127.1.0'
        state: present

    - lineinfile:
        path: /etc/ntp.conf
        line: 'fudge 127.127.1.0 stratum 10'
        state: present

    - lineinfile:
        path: /etc/ntp.conf
        line: "restrict {{ allow_ip_scope }} mask {{ mask }} nomodify notrap"
        state: present

    - name: restart ntpd
      systemd:
        name: ntpd
        state: restarted

Create /home/admin/ansible/system/ntp-client.yml with the following content:

---
- name: Configure NTP client on all nodes
  hosts: ntp_client
  gather_facts: false
  become: true
  vars:
    ntp_server: "10.0.0.13"
  tasks:
    - name: Install ntp package
      yum:
        name: ntp
        state: present

    - name: Enable and start ntpd service
      systemd:
        name: ntpd
        state: started
        enabled: yes

    - name: Configure ntp client to sync with management node
      lineinfile:
        path: /etc/ntp.conf
        regexp: '^server {{ ntp_server }}'
        line: "server {{ ntp_server }} iburst"
        state: present

    - lineinfile:
        path: /etc/ntp.conf
        regexp: '^server 127\.127\.1\.0'
        line: 'server 127.127.1.0'
        state: present

    - lineinfile:
        path: /etc/ntp.conf
        regexp: '^fudge 127\.127\.1\.0'
        line: 'fudge 127.127.1.0 stratum 10'
        state: present

    - lineinfile:
        path: /etc/ntp.conf
        line: "restrict {{ ntp_server }} nomodify notrap nopeer noquery"
        state: present

    - name: stop ntpd
      systemd:
        name: ntpd
        state: stopped

    - name: ntpdate
      shell: "ntpdate {{ ntp_server }}"

    - name: start ntpd
      systemd:
        name: ntpd
        state: started

Create /home/admin/ansible/system/check-ntp-sync.yml with the following content:

---
- name: Check NTP status on all nodes
  hosts: cluster
  gather_facts: false
  become: true
  tasks:
    - name: Check NTP peers
      command: ntpq -p
      register: ntpq_output
      ignore_errors: yes

    - name: Show NTP peers
      debug:
        msg: "{{ ntpq_output.stdout_lines }}"

$ pwd

/home/admin/ansible

$ ansible-playbook system/ntp-server.yml

$ ansible-playbook system/ntp-client.yml

$ ansible-playbook system/check-ntp-sync.yml

TASK [Show NTP peers] ***************************************

# On arc-pro-dc01, *LOCAL(0) means the node is synchronizing from its own local clock

ok: [arc-pro-dc01] => {

"msg": [

" remote refid st t when poll reach delay offset jitter",

"==============================================================================",

"*LOCAL(0) .LOCL. 10 l 4 64 377 0.000 0.000 0.000"

]

}

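# The other servers print *arc-pro-dc01; the * in front of arc-pro-dc01 means the node is synchronizing from arc-pro-dc01

# Some servers may still show *LOCAL(0); this is normal, and it can take several minutes to more than ten minutes before they switch to arc-pro-dc01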

ok: [arc-pro-dc02] => {

"msg": [

" remote refid st t when poll reach delay offset jitter",

"==============================================================================",

"*arc-pro-dc01 LOCAL(0) 11 u 40 64 17 0.161 22.831 23.328",

" LOCAL(0) .LOCL. 10 l 179 64 14 0.000 0.000 0.000"

]

}

ok: [arc-pro-dc03] => {

"msg": [

" remote refid st t when poll reach delay offset jitter",

"==============================================================================",

"*arc-pro-dc01 LOCAL(0) 11 u 42 64 17 0.234 -25.571 25.300",

" LOCAL(0) .LOCL. 10 l 179 64 14 0.000 0.000 0.000"

]

}

ok: [arc-pro-dc04] => {

"msg": [

" remote refid st t when poll reach delay offset jitter",

"==============================================================================",

"*arc-pro-dc01 LOCAL(0) 11 u 38 64 17 0.222 -3.610 6.001",

" LOCAL(0) .LOCL. 10 l 179 64 14 0.000 0.000 0.000"

]

}

ok: [arc-pro-dc05] => {

"msg": [

" remote refid st t when poll reach delay offset jitter",

"==============================================================================",

"*arc-pro-dc01 LOCAL(0) 11 u 42 64 17 0.357 -43.293 37.725",

" LOCAL(0) .LOCL. 10 l 51 64 17 0.000 0.000 0.000"

]

}

ok: [arc-pro-dc06] => {

"msg": [

" remote refid st t when poll reach delay offset jitter",

"==============================================================================",

"*arc-pro-dc01 LOCAL(0) 11 u 38 64 17 0.270 23.929 24.081",

" LOCAL(0) .LOCL. 10 l 180 64 14 0.000 0.000 0.000"

]

}

ok: [arc-pro-dc07] => {

"msg": [

" remote refid st t when poll reach delay offset jitter",

"==============================================================================",

"*arc-pro-dc01 LOCAL(0) 11 u 42 64 17 0.356 11.410 12.059",

" LOCAL(0) .LOCL. 10 l 51 64 17 0.000 0.000 0.000"

]

}

ok: [arc-pro-dc08] => {

"msg": [

" remote refid st t when poll reach delay offset jitter",

"==============================================================================",

"*arc-pro-dc01 LOCAL(0) 11 u 41 64 17 0.214 -5.287 6.890",

" LOCAL(0) .LOCL. 10 l 51 64 17 0.000 0.000 0.000"

]

}

ok: [arc-pro-dc09] => {

"msg": [

" remote refid st t when poll reach delay offset jitter",

"==============================================================================",

"*arc-pro-dc01 LOCAL(0) 11 u 43 64 17 0.310 -10.642 11.711",

" LOCAL(0) .LOCL. 10 l 51 64 17 0.000 0.000 0.000"

]

}
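
The `*` marker described in the comments above can also be picked out by a script. The sketch below reads `ntpq -p` style output on stdin and prints the currently selected source; the function name `selected_peer` is an illustrative assumption, and the sample input mirrors the columns shown in the output above.

```shell
#!/usr/bin/env bash
# selected_peer (hypothetical helper): read `ntpq -p` output on stdin and print
# the remote whose line starts with "*", i.e. the source currently selected.
# Prints nothing if no line carries the "*" marker yet.
selected_peer() {
  awk 'substr($0, 1, 1) == "*" { print substr($1, 2); exit }'
}

# Sample input modelled on the client output shown above.
selected_peer <<'EOF'
     remote           refid      st t when poll reach   delay   offset  jitter
==============================================================================
*arc-pro-dc01    LOCAL(0)        11 u   40   64   17    0.161   22.831  23.328
 LOCAL(0)        .LOCL.          10 l  179   64   14    0.000    0.000   0.000
EOF
```

On a live node the same function would be fed with `ntpq -p | selected_peer`.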

# Confirm that the clocks agree

$ for host in arc-pro-dc0{1..9}; do ssh $host "date +'%F %T'"; done

2025-09-01 14:27:07

2025-09-01 14:27:07

2025-09-01 14:27:07

2025-09-01 14:27:07

2025-09-01 14:27:07

2025-09-01 14:27:07

2025-09-01 14:27:07

2025-09-01 14:27:07

2025-09-01 14:27:07

# If the time differs too much from internet time, set it manually

$ for host in arc-pro-dc0{1..9}; do ssh $host "sudo date -s '2025-09-01 15:34:30'"; done
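
Rather than comparing the printed timestamps by eye, the spread between nodes can be computed from epoch seconds. This is a rough sketch: `max_skew` is a hypothetical helper, and because the hosts are queried one after another over SSH, the result includes connection latency and is only an approximation of the real clock difference.

```shell
#!/usr/bin/env bash
# max_skew (hypothetical helper): read one epoch-seconds value per line on
# stdin and print the difference between the largest and smallest value.
max_skew() {
  awk 'NR == 1 { min = max = $1 }
       $1 < min { min = $1 }
       $1 > max { max = $1 }
       END { if (NR) print max - min }'
}

# Sample values standing in for `date +%s` collected from three nodes.
printf '%s\n' 1756708027 1756708027 1756708029 | max_skew

# Against the real cluster the input would come from something like:
#   for host in arc-pro-dc0{1..9}; do ssh "$host" date +%s; done | max_skew
```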

3.6 Set up a YUM repository on the LAN (optional)

Use 10.0.0.13 as the server for the LAN YUM repository.

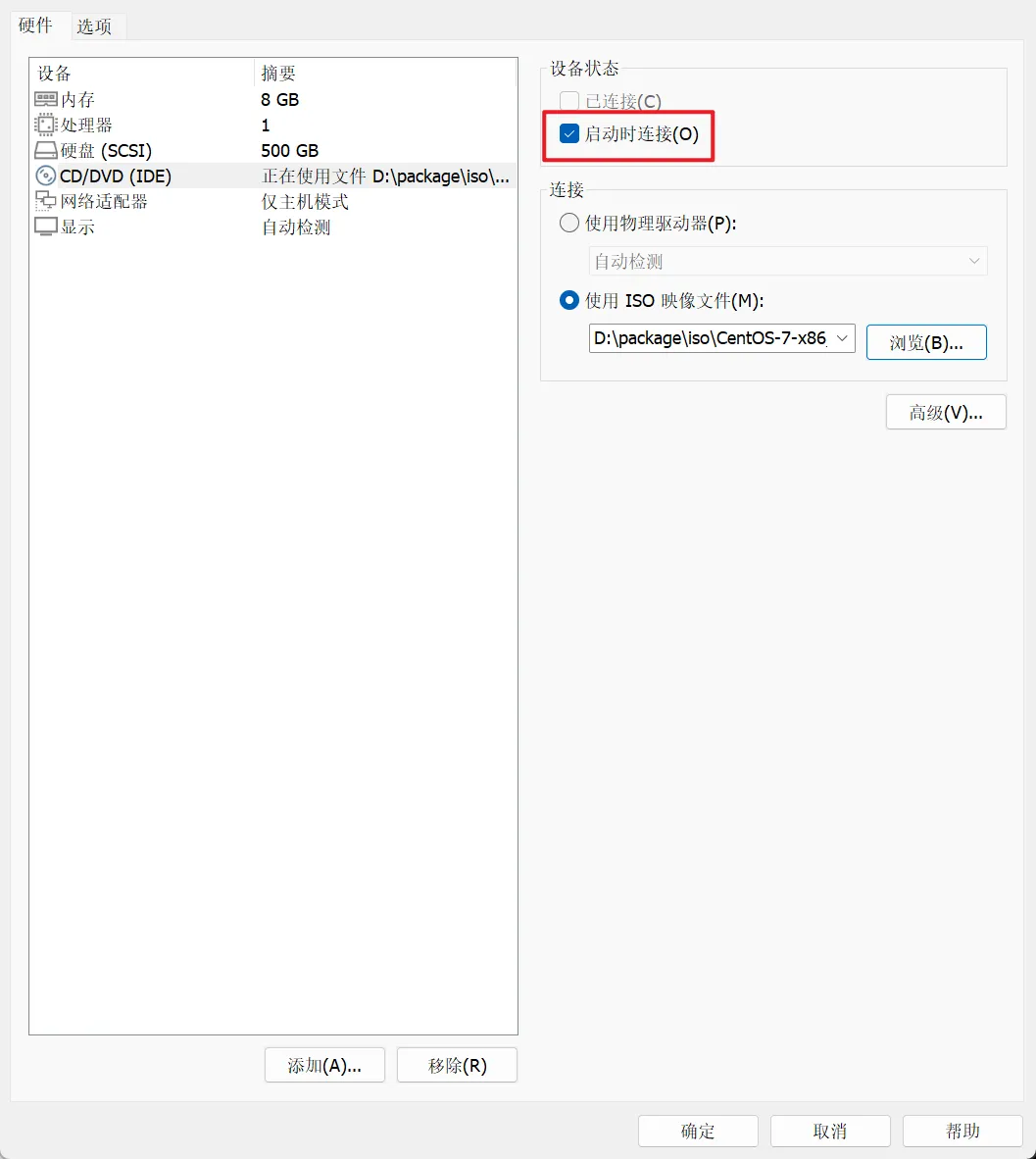

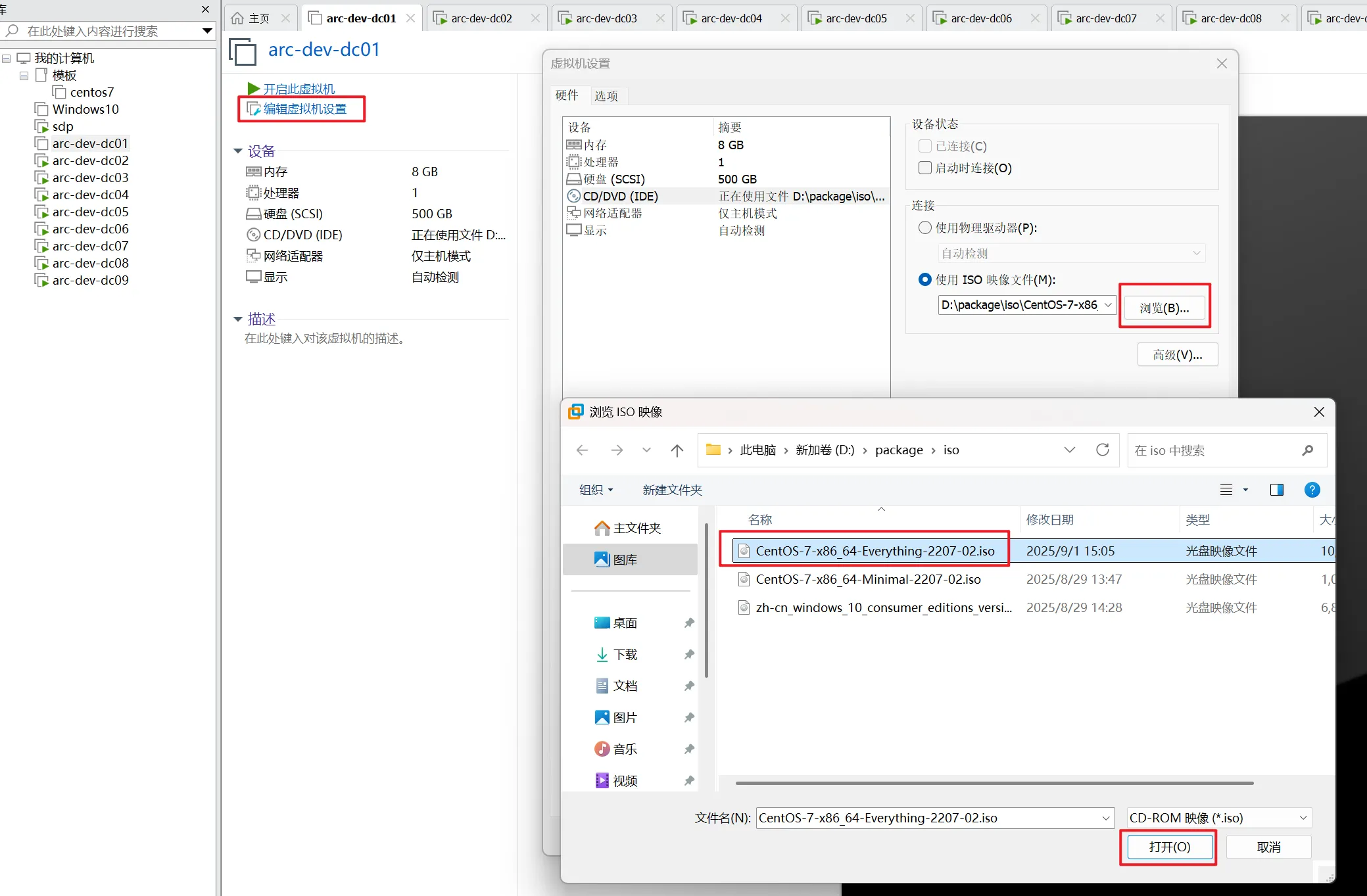

Attach the installation image (in production, the operations team is responsible for this step):

On 10.0.0.13:

Mount the image:

$ pwd

/home/admin/ansible

$ sudo ls -l /dev | grep sr

lrwxrwxrwx 1 root root 3 Sep 1 14:56 cdrom -> sr0

srw-rw-rw- 1 root root 0 Sep 1 14:56 log

brw-rw---- 1 root cdrom 11, 0 Sep 1 14:56 sr0

$ sudo mkdir -p /mnt/centos

$ sudo su -

[root@arc-pro-dc01 ~]# echo -e "/dev/sr0\t/mnt/centos\tiso9660\tloop,defaults\t0\t0" >> /etc/fstab

[root@arc-pro-dc01 ~]# exit

$ sudo mount -a

$ sudo mkdir -p /etc/yum.repos.d/backup

$ sudo mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/backup/

$ sudo tee /etc/yum.repos.d/local.repo > /dev/null << 'EOF'

[local]

name=CentOS7 ISO Local Repo

baseurl=file:///mnt/centos

enabled=1

gpgcheck=0

EOF

$ sudo yum clean all

$ sudo yum makecache

$ sudo yum install -y httpd

$ sudo systemctl enable httpd --now

$ cd /var/www/html

$ sudo ln -s /mnt/centos centos7

$ sudo sed -i 's|^baseurl=file:///mnt/centos|baseurl=http://10.0.0.13/centos7/|' /etc/yum.repos.d/local.repo

$ sudo yum clean all

$ sudo yum makecache

Create /home/admin/ansible/system/local-repo.yml with the following content:

---
- name: Distribute local YUM repo configuration
  hosts: cluster:!arc-pro-dc01
  gather_facts: false
  become: yes
  tasks:
    - name: Create backup directory
      command: mkdir -p /etc/yum.repos.d/backup

    - name: Back up existing repo files
      shell: mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/backup
      ignore_errors: yes

    - name: Distribute local.repo to target machines
      copy:
        src: /etc/yum.repos.d/local.repo
        dest: /etc/yum.repos.d/local.repo
        owner: root
        group: root
        mode: '0644'

    - name: make cache
      shell: yum clean all && yum makecache
      ignore_errors: yes

Run:

$ pwd

/home/admin/ansible

$ ansible-playbook system/local-repo.yml

3.7 Disable swap

On 10.0.0.13:

Create /home/admin/ansible/system/disable-swap.yml with the following content:

---
- name: Disable swap on all cluster nodes
  hosts: cluster
  gather_facts: false
  become: yes
  tasks:
    - name: Turn off all swap immediately
      command: swapoff -a
      register: swapoff_result  # required by changed_when below
      changed_when: swapoff_result.rc == 0

    - name: Backup fstab before modifying
      copy:
        src: /etc/fstab
        dest: "/etc/fstab.backup"
        remote_src: yes  # copy each node's own fstab, not the control node's
        owner: root
        group: root
        mode: '0644'

    - name: Comment out swap entries in fstab to disable on boot
      lineinfile:
        path: /etc/fstab
        regexp: '^([^#].*\s+swap\s+.*)$'
        line: '# \1'
        backrefs: yes

$ pwd

/home/admin/ansible

$ ansible-playbook system/disable-swap.yml
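
The effect of the fstab regular expression can be previewed on a throwaway file. The sketch below applies an equivalent `sed` substitution to a sample fstab; `comment_swap` and the sample entries are illustrative assumptions. One difference to keep in mind: `lineinfile` replaces only the last line that matches its `regexp`, whereas this `sed` command comments out every matching line, which matters on a host with more than one swap entry.

```shell
#!/usr/bin/env bash
# comment_swap FILE (hypothetical helper): prefix every uncommented line that
# has a whitespace-delimited "swap" field with "# ", keeping a FILE.bak copy.
# Assumes GNU sed (as shipped with CentOS 7) for -E and -i.
comment_swap() {
  sed -E -i.bak 's/^([^#].*[[:space:]]+swap[[:space:]]+.*)$/# \1/' "$1"
}

# Demonstration on a temporary sample fstab, not the real /etc/fstab.
sample="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root /    xfs  defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$sample"

comment_swap "$sample"
cat "$sample"
rm -f "$sample" "$sample.bak"
```

Running the function a second time leaves the file unchanged, because already commented lines no longer match the pattern.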
