(Original) DropBlock: A regularization method for convolutional networks

Please credit the source when reposting:

https://www.cnblogs.com/darkknightzh/p/9985027.html

Paper:

https://arxiv.org/abs/1810.12890

Third-party implementations:

PyTorch: https://github.com/Randl/DropBlock-pytorch

TensorFlow: https://github.com/DHZS/tf-dropblock

Modified PyTorch implementation: https://github.com/darkknightzh/DropBlock_pytorch

I. Overview

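Conventional dropout works well on fully connected (FC) layers but much less well on convolutional layers. A likely reason is that activations in conv layers are spatially correlated: even when dropout randomly drops some of them, the network can still obtain the same information from neighboring positions, so the information still reaches the next layer and the network can still overfit. DropBlock, proposed in the paper, instead drops contiguous regions of a feature map together.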

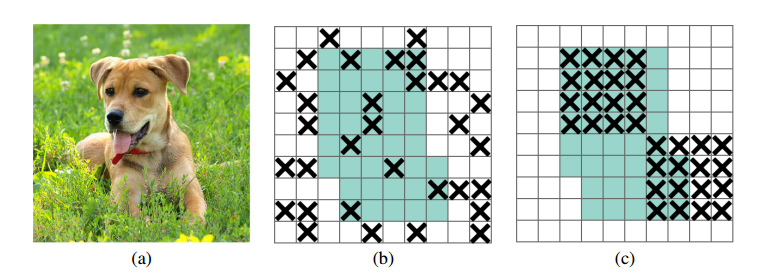

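The figure above is a simple example. (a) is the input image; regions such as the dog's head and feet are correlated. (b) shows dropout, which drops activations at random positions, so the dropped information can still be recovered from neighboring regions (the positions marked with x are the dropped units of the mask). (c) shows DropBlock, which drops all features within a contiguous region.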

II. Algorithm

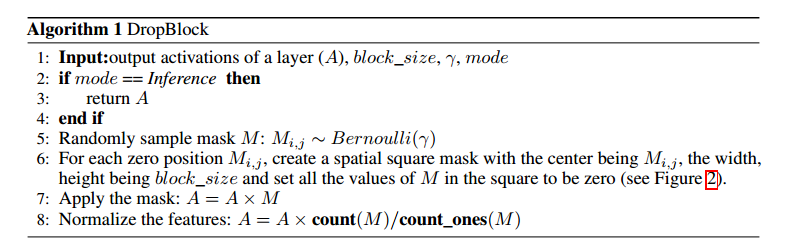

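Because DropBlock works through a mask, it can be implemented in two ways: ① all feature channels share the same DropBlock mask and drop information at the same positions; ② each feature channel uses its own DropBlock mask. The paper found that the second option works better. The figure above shows the procedure for option ②. DropBlock has two parameters, block_size and γ: block_size is the size of the block, and γ is the parameter of the Bernoulli distribution.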
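To make the difference between the two options concrete, here is a minimal NumPy sketch, assuming an (N, C, H, W) layout. `sample_seeds` is a hypothetical helper, not code from the paper or the linked repositories: option ① samples one seed map per sample and broadcasts it over the channels, option ② samples an independent seed map for every channel.

```python
import numpy as np


def sample_seeds(n, c, h, w, gamma, shared, rng):
    """Hypothetical helper: Bernoulli(gamma) seed maps for an (N, C, H, W) tensor.

    shared=True  -> option 1: one map per sample, identical across all C channels.
    shared=False -> option 2: an independent map for every channel.
    """
    if shared:
        seeds = rng.random((n, 1, h, w)) < gamma
        return np.broadcast_to(seeds, (n, c, h, w))  # read-only view, same seeds per channel
    return rng.random((n, c, h, w)) < gamma


rng = np.random.default_rng(0)
shared = sample_seeds(2, 4, 8, 8, gamma=0.1, shared=True, rng=rng)
per_channel = sample_seeds(2, 4, 8, 8, gamma=0.1, shared=False, rng=rng)

# In the shared case every channel of a sample carries exactly the same seeds.
print(all((shared[:, k] == shared[:, 0]).all() for k in range(4)))  # True
```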

Notes (the numbers are line numbers of the algorithm):

3 In inference mode, no features are dropped.

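5 Generate the mask M, each point of which follows a Bernoulli distribution with parameter γ (a Bernoulli random variable x takes the value 1 with probability γ and 0 with probability 1−γ). Note that only the points inside the valid (green) region of the mask follow this distribution, as shown in panel (a) of the figure; this ensures that the blocks created in step 6 never extend past the edge of the feature map.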

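6 For each seed point of M (the points drawn with probability γ; the paper calls them the zero positions of the mask), create a square of side block_size centered on that point and set every value inside the square to 0, as shown in panel (b) of the figure.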

7 Apply the mask to the feature map: A = A × M.

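8 Normalize the features: A = A × count(M) / count_ones(M). Here count(M) is the number of elements of M (the feature-map width × height) and count_ones(M) is the number of elements of M equal to 1. This rescaling compensates for the dropped units so that the overall magnitude of the activations stays roughly the same during training and inference, much like the 1/keep_prob scaling of (inverted) dropout; since only a small fraction of units is dropped, the features are scaled up only slightly.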
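The steps above can be sketched in NumPy for a single (C, H, W) feature map with per-channel masks (option ②). This is an illustrative sketch under stated assumptions, not the paper's reference code: `dropblock` is a hypothetical name, block_size is assumed odd and no larger than the feature map, γ is derived from keep_prob with the formula given in the next section (generalized to H × W), and the seeds (points drawn with probability γ) are treated as the block centers of step 6.

```python
import numpy as np


def dropblock(a, keep_prob=0.9, block_size=7, training=True, rng=None):
    """Hypothetical DropBlock sketch for one (C, H, W) feature map, one mask per channel."""
    if not training or keep_prob == 1.0:
        return a  # step 3: nothing is dropped at inference time
    rng = np.random.default_rng() if rng is None else rng
    c, h, w = a.shape
    half = block_size // 2  # assumes an odd block_size <= min(h, w)
    vh, vw = h - block_size + 1, w - block_size + 1  # size of the valid region
    gamma = (1.0 - keep_prob) / block_size**2 * (h * w) / (vh * vw)

    # step 5: seeds (drawn with probability gamma) only inside the valid region
    seeds = np.zeros((c, h, w), dtype=bool)
    seeds[:, half:half + vh, half:half + vw] = rng.random((c, vh, vw)) < gamma

    # step 6: zero a block_size x block_size square centred on each seed
    m = np.ones((c, h, w))
    for ch, i, j in zip(*np.nonzero(seeds)):
        m[ch, i - half:i + half + 1, j - half:j + half + 1] = 0.0

    out = a * m  # step 7: A = A * M
    kept = m.sum()
    # step 8: A = A * count(M) / count_ones(M); skipped if every unit was dropped
    return out * (m.size / kept) if kept > 0 else out


rng = np.random.default_rng(0)
a = np.ones((3, 14, 14))
out = dropblock(a, keep_prob=0.9, block_size=7, rng=rng)
print(out.shape, out.mean())  # the mean stays approximately 1.0 thanks to step 8
```

Because seeds are only drawn in the valid region, every block fits entirely inside the map, which is exactly what the green region in step 5 is for.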

III. Details

block_size: all feature maps use the same block_size; in the paper, a value of 7 worked best in most cases.

γ: γ is not set directly; instead it is derived from keep_prob as follows (feat_size is the size of the feature map):

$\gamma = \frac{1-keep\_prob}{block\_size^{2}} \cdot \frac{feat\_size^{2}}{(feat\_size-block\_size+1)^{2}}$
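The reasoning behind the formula: each seed zeroes about block_size² units, and seeds can only fall in the (feat_size − block_size + 1)² valid positions, so the expected dropped fraction is γ · block_size² · (feat_size − block_size + 1)² / feat_size², which is set equal to 1 − keep_prob (ignoring overlapping blocks). A quick numeric check, with `dropblock_gamma` as a hypothetical helper name; with block_size = 1 the formula reduces to γ = 1 − keep_prob, i.e. ordinary dropout.

```python
def dropblock_gamma(keep_prob, block_size, feat_size):
    """gamma = (1 - keep_prob) / block_size**2 * feat_size**2 / (feat_size - block_size + 1)**2"""
    valid = feat_size - block_size + 1  # side length of the region where seeds may fall
    return (1.0 - keep_prob) / block_size**2 * feat_size**2 / valid**2


print(dropblock_gamma(0.9, 7, 14))  # approximately 0.00625 = 0.1 / 49 * 196 / 64
print(dropblock_gamma(0.9, 1, 10))  # approximately 0.1: plain dropout
```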

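keep_prob: DropBlock performs poorly with a fixed keep_prob, while a keep_prob that is too small early in training hurts learning. Therefore DropBlock decreases keep_prob linearly from 1 to the target value (e.g. 0.9) as training proceeds.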
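A minimal sketch of such a linear schedule, assuming it is updated once per training step (the paper only says keep_prob decreases linearly from 1 to the target; `linear_keep_prob` and `total_steps` are hypothetical names). The returned value would be assigned to the module's `keep_prob` attribute before each forward pass, for example `block.keep_prob = linear_keep_prob(step, total_steps)` for the DropBlock2D module in section V.

```python
def linear_keep_prob(step, total_steps, target_keep_prob=0.9):
    """Linearly decay keep_prob from 1.0 at step 0 to target_keep_prob at total_steps, then hold it."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return 1.0 - frac * (1.0 - target_keep_prob)


print([round(linear_keep_prob(s, 4), 3) for s in range(6)])
# [1.0, 0.975, 0.95, 0.925, 0.9, 0.9]
```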

IV. Experimental results

See the paper for details.

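One point worth noting: for the ResNet-50 experiments, training was extended from 90 to 270 epochs, and the learning rate was divided by 10 at epochs 125, 200 and 250. Because training runs so long, the baseline used for comparison is not the final model (with that many epochs the model may overfit and its validation performance may drop); instead, validation performance is evaluated throughout training and the best value is taken as the baseline performance.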
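The ResNet-50 learning-rate schedule described above is a step decay. A pure-Python sketch, assuming epochs are counted from 0 and each decay takes effect at the start of epoch 125, 200 and 250; the base learning rate is not given in this post, so it is left as a parameter, and `step_lr` is a hypothetical name. In PyTorch, `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[125, 200, 250], gamma=0.1)` stepped once per epoch produces the same schedule (its `gamma` is the decay factor, unrelated to DropBlock's γ).

```python
def step_lr(epoch, base_lr, milestones=(125, 200, 250), factor=0.1):
    """Learning rate for a 0-based epoch: multiply by `factor` at each milestone reached."""
    decays = sum(epoch >= m for m in milestones)
    return base_lr * factor ** decays


for epoch in (0, 124, 125, 199, 200, 250, 269):
    print(epoch, step_lr(epoch, base_lr=0.1))
```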

V. PyTorch code and modifications

1. Using conv2d to obtain the mask

The third-party PyTorch implementation listed above provides the following code; its drawback is that keep_prob has to be decreased manually:

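```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class DropBlock2D(nn.Module):
    r"""Randomly zeroes spatial blocks of the input tensor.

    As described in the paper
    `DropBlock: A regularization method for convolutional networks`_ ,
    dropping whole blocks of feature map allows to remove semantic
    information as compared to regular dropout.

    Args:
        keep_prob (float, optional): probability of an element to be kept.
            Authors recommend to linearly decrease this value from 1 to desired
            value.
        block_size (int, optional): size of the block. Block size in paper
            usually equals last feature map dimensions.

    Shape:
        - Input: :math:`(N, C, H, W)`
        - Output: :math:`(N, C, H, W)` (same shape as input)

    .. _DropBlock: A regularization method for convolutional networks:
       https://arxiv.org/abs/1810.12890
    """

    def __init__(self, keep_prob=0.9, block_size=7):
        super(DropBlock2D, self).__init__()
        self.keep_prob = keep_prob
        self.block_size = block_size

    def forward(self, input):
        # Nothing is dropped at inference time or when keep_prob == 1
        if not self.training or self.keep_prob == 1:
            return input
        # gamma from keep_prob, with the feat_size correction applied to H and W
        gamma = (1. - self.keep_prob) / self.block_size ** 2
        for sh in input.shape[2:]:
            gamma *= sh / (sh - self.block_size + 1)
        # Bernoulli(gamma) seeds with the same shape as the input (one mask per channel)
        M = torch.bernoulli(torch.ones_like(input) * gamma)
        # Depthwise conv with an all-ones kernel counts the seeds in each
        # block_size x block_size window (block_size must be odd to keep H and W)
        Msum = F.conv2d(M, torch.ones((input.shape[1], 1, self.block_size, self.block_size)).to(device=input.device, dtype=input.dtype),
                        padding=self.block_size // 2, groups=input.shape[1])
        # Keep a position only if no seed lies in the window around it
        mask = (Msum < 1).to(device=input.device, dtype=input.dtype)
        # Rescale by count(M) / count_ones(M)
        return input * mask * mask.numel() / mask.sum()  # TODO input * mask * self.keep_prob ?
```

The code uses conv2d to obtain the mask.

The `gamma = ...` assignment and the loop over `input.shape[2:]` compute gamma.

The `torch.bernoulli(...)` call produces M, a matrix with the same shape as the input whose elements follow a Bernoulli distribution. Unlike step 5 of the algorithm, the seeds are sampled over the whole feature map rather than only in the valid region.

The `F.conv2d` call and the `(Msum < 1)` comparison produce the mask: convolving M with an all-ones block_size × block_size kernel (one kernel per channel via `groups`) counts the seeds around each position, and a position is kept only when that count is 0.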

2. Using max_pool2d to obtain the mask

Following the TensorFlow implementation, max_pool2d can be used instead to obtain the mask, as in the code below:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class DropBlock2DV2(nn.Module):
    def __init__(self, keep_prob=0.9, block_size=7):
        super(DropBlock2DV2, self).__init__()
        self.keep_prob = keep_prob
        self.block_size = block_size

    def forward(self, input):
        if not self.training or self.keep_prob == 1:
            return input
        gamma = (1. - self.keep_prob) / self.block_size ** 2
        for sh in input.shape[2:]:
            gamma *= sh / (sh - self.block_size + 1)
        M = torch.bernoulli(torch.ones_like(input) * gamma)

        # Max pooling is 1 wherever at least one seed lies in the block_size x block_size window
        Msum = F.max_pool2d(M, kernel_size=[self.block_size, self.block_size], stride=1, padding=self.block_size // 2)

        # Invert: 1 = keep, 0 = drop
        mask = (1 - Msum).to(device=input.device, dtype=input.dtype)

        # Rescale by count(M) / count_ones(M)
        return input * mask * mask.numel() / mask.sum()
```

3. Comparison of results

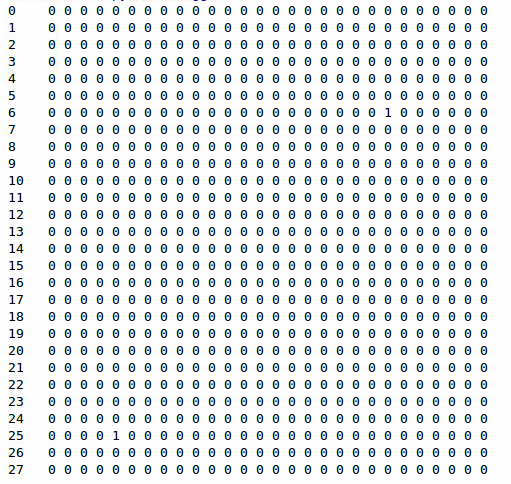

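The figure above shows the Bernoulli matrix M obtained by applying DropBlock2D directly to MNIST images (both implementations give the same result).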

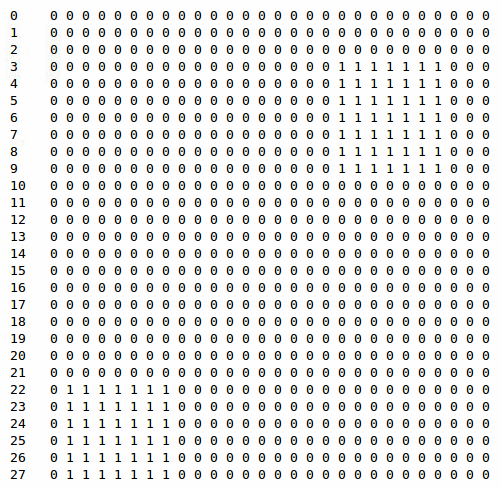

The figure above shows the resulting Msum (both implementations give the same result).

All of the results above are identical.

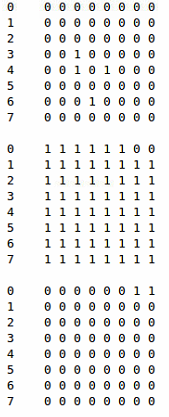

When DropBlock2D is applied to an intermediate layer, the first group shows M, the second Msum, and the third the mask:

When DropBlock2DV2 is applied to an intermediate layer, the first group shows M, the second Msum, and the third the mask:

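So although Msum differs, the resulting mask is the same. conv2d counts the seeds in each window (the count exceeds 1 where a window contains several seeds), whereas max_pool2d only records whether any seed is present (0 or 1); thresholding the count with `< 1` and computing `1 - max` both give 1 exactly where the window contains no seed.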
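A small NumPy sketch that imitates both operations on a single 2-D seed map illustrates this. `box_windows` is a hypothetical helper; zero padding is used for both reductions, whereas PyTorch's max_pool2d actually pads with −∞, which gives the same maximum here because every window contains at least one real, non-negative element. The window sum corresponds to conv2d with an all-ones kernel, the window max to max_pool2d.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_windows(m, block_size):
    """All block_size x block_size windows centred on each pixel of a 2-D map (zero padding)."""
    pad = block_size // 2
    padded = np.pad(m, pad)  # constant zero padding, like padding=block_size // 2
    return sliding_window_view(padded, (block_size, block_size))


rng = np.random.default_rng(0)
M = (rng.random((12, 12)) < 0.05).astype(np.float32)  # Bernoulli seed map
win = box_windows(M, 5)                  # shape (12, 12, 5, 5)
msum_conv = win.sum(axis=(-2, -1))       # seed count per window (conv2d with ones)
msum_pool = win.max(axis=(-2, -1))       # 1 if any seed in the window (max_pool2d)

mask_conv = (msum_conv < 1).astype(np.float32)  # DropBlock2D
mask_pool = 1 - msum_pool                       # DropBlock2DV2
print((mask_conv == mask_pool).all())  # True
```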

Training log with DropBlock2D:

```
Train Epoch: 1 [0/60000 (0%)] Loss: 2.361322
Train Epoch: 1 [2560/60000 (4%)] Loss: 2.341034
Train Epoch: 1 [5120/60000 (9%)] Loss: 2.287267
Train Epoch: 1 [7680/60000 (13%)] Loss: 2.274684
Train Epoch: 1 [10240/60000 (17%)] Loss: 2.260440
Train Epoch: 1 [12800/60000 (21%)] Loss: 2.259492
Train Epoch: 1 [15360/60000 (26%)] Loss: 2.240603
Train Epoch: 1 [17920/60000 (30%)] Loss: 2.207781
Train Epoch: 1 [20480/60000 (34%)] Loss: 2.177025
Train Epoch: 1 [23040/60000 (38%)] Loss: 2.137965
Train Epoch: 1 [25600/60000 (43%)] Loss: 2.029636
Train Epoch: 1 [28160/60000 (47%)] Loss: 1.967242
Train Epoch: 1 [30720/60000 (51%)] Loss: 1.948034
Train Epoch: 1 [33280/60000 (55%)] Loss: 1.856952
Train Epoch: 1 [35840/60000 (60%)] Loss: 1.786148
Train Epoch: 1 [38400/60000 (64%)] Loss: 1.657498
Train Epoch: 1 [40960/60000 (68%)] Loss: 1.604034
Train Epoch: 1 [43520/60000 (72%)] Loss: 1.550600
Train Epoch: 1 [46080/60000 (77%)] Loss: 1.425241
Train Epoch: 1 [48640/60000 (81%)] Loss: 1.455231
Train Epoch: 1 [51200/60000 (85%)] Loss: 1.263960
Train Epoch: 1 [53760/60000 (89%)] Loss: 1.255704
Train Epoch: 1 [56320/60000 (94%)] Loss: 1.156345
Train Epoch: 1 [58880/60000 (98%)] Loss: 1.272000
Test set: Average loss: 0.7381, Accuracy: 8467/10000 (85%)
Train Epoch: 2 [0/60000 (0%)] Loss: 1.198182
Train Epoch: 2 [2560/60000 (4%)] Loss: 1.133391
Train Epoch: 2 [5120/60000 (9%)] Loss: 0.958502
Train Epoch: 2 [7680/60000 (13%)] Loss: 1.068815
Train Epoch: 2 [10240/60000 (17%)] Loss: 1.103716
Train Epoch: 2 [12800/60000 (21%)] Loss: 0.949479
Train Epoch: 2 [15360/60000 (26%)] Loss: 0.931306
Train Epoch: 2 [17920/60000 (30%)] Loss: 0.922673
Train Epoch: 2 [20480/60000 (34%)] Loss: 0.954674
Train Epoch: 2 [23040/60000 (38%)] Loss: 0.875961
Train Epoch: 2 [25600/60000 (43%)] Loss: 0.892115
Train Epoch: 2 [28160/60000 (47%)] Loss: 0.835847
Train Epoch: 2 [30720/60000 (51%)] Loss: 0.847458
Train Epoch: 2 [33280/60000 (55%)] Loss: 0.791372
Train Epoch: 2 [35840/60000 (60%)] Loss: 0.856625
Train Epoch: 2 [38400/60000 (64%)] Loss: 0.717881
Train Epoch: 2 [40960/60000 (68%)] Loss: 0.752228
Train Epoch: 2 [43520/60000 (72%)] Loss: 0.803537
Train Epoch: 2 [46080/60000 (77%)] Loss: 0.756578
Train Epoch: 2 [48640/60000 (81%)] Loss: 0.751846
Train Epoch: 2 [51200/60000 (85%)] Loss: 0.717995
Train Epoch: 2 [53760/60000 (89%)] Loss: 0.780597
Train Epoch: 2 [56320/60000 (94%)] Loss: 0.731817
Train Epoch: 2 [58880/60000 (98%)] Loss: 0.689091
Test set: Average loss: 0.3513, Accuracy: 9160/10000 (92%)
Train Epoch: 3 [0/60000 (0%)] Loss: 0.637277
Train Epoch: 3 [2560/60000 (4%)] Loss: 0.669868
Train Epoch: 3 [5120/60000 (9%)] Loss: 0.628944
Train Epoch: 3 [7680/60000 (13%)] Loss: 0.577496
Train Epoch: 3 [10240/60000 (17%)] Loss: 0.592267
Train Epoch: 3 [12800/60000 (21%)] Loss: 0.653577
Train Epoch: 3 [15360/60000 (26%)] Loss: 0.665416
Train Epoch: 3 [17920/60000 (30%)] Loss: 0.586191
Train Epoch: 3 [20480/60000 (34%)] Loss: 0.608021
Train Epoch: 3 [23040/60000 (38%)] Loss: 0.687553
Train Epoch: 3 [25600/60000 (43%)] Loss: 0.543245
Train Epoch: 3 [28160/60000 (47%)] Loss: 0.709381
Train Epoch: 3 [30720/60000 (51%)] Loss: 0.593673
Train Epoch: 3 [33280/60000 (55%)] Loss: 0.578504
Train Epoch: 3 [35840/60000 (60%)] Loss: 0.549813
Train Epoch: 3 [38400/60000 (64%)] Loss: 0.525090
Train Epoch: 3 [40960/60000 (68%)] Loss: 0.660547
Train Epoch: 3 [43520/60000 (72%)] Loss: 0.453336
Train Epoch: 3 [46080/60000 (77%)] Loss: 0.564345
Train Epoch: 3 [48640/60000 (81%)] Loss: 0.553669
Train Epoch: 3 [51200/60000 (85%)] Loss: 0.553587
Train Epoch: 3 [53760/60000 (89%)] Loss: 0.606980
Train Epoch: 3 [56320/60000 (94%)] Loss: 0.595271
Train Epoch: 3 [58880/60000 (98%)] Loss: 0.544352
Test set: Average loss: 0.2539, Accuracy: 9336/10000 (93%)
Train Epoch: 4 [0/60000 (0%)] Loss: 0.620331
Train Epoch: 4 [2560/60000 (4%)] Loss: 0.567480
Train Epoch: 4 [5120/60000 (9%)] Loss: 0.508343
Train Epoch: 4 [7680/60000 (13%)] Loss: 0.494243
Train Epoch: 4 [10240/60000 (17%)] Loss: 0.520037
Train Epoch: 4 [12800/60000 (21%)] Loss: 0.495483
Train Epoch: 4 [15360/60000 (26%)] Loss: 0.469755
Train Epoch: 4 [17920/60000 (30%)] Loss: 0.593844
Train Epoch: 4 [20480/60000 (34%)] Loss: 0.403337
Train Epoch: 4 [23040/60000 (38%)] Loss: 0.546628
Train Epoch: 4 [25600/60000 (43%)] Loss: 0.521818
Train Epoch: 4 [28160/60000 (47%)] Loss: 0.461603
Train Epoch: 4 [30720/60000 (51%)] Loss: 0.451833
Train Epoch: 4 [33280/60000 (55%)] Loss: 0.439977
Train Epoch: 4 [35840/60000 (60%)] Loss: 0.529679
Train Epoch: 4 [38400/60000 (64%)] Loss: 0.489150
Train Epoch: 4 [40960/60000 (68%)] Loss: 0.542619
Train Epoch: 4 [43520/60000 (72%)] Loss: 0.471994
Train Epoch: 4 [46080/60000 (77%)] Loss: 0.438699
Train Epoch: 4 [48640/60000 (81%)] Loss: 0.415263
Train Epoch: 4 [51200/60000 (85%)] Loss: 0.391874
Train Epoch: 4 [53760/60000 (89%)] Loss: 0.521654
Train Epoch: 4 [56320/60000 (94%)] Loss: 0.433007
Train Epoch: 4 [58880/60000 (98%)] Loss: 0.388784
Test set: Average loss: 0.2137, Accuracy: 9432/10000 (94%)
Train Epoch: 5 [0/60000 (0%)] Loss: 0.531463
Train Epoch: 5 [2560/60000 (4%)] Loss: 0.501781
Train Epoch: 5 [5120/60000 (9%)] Loss: 0.392028
Train Epoch: 5 [7680/60000 (13%)] Loss: 0.506029
Train Epoch: 5 [10240/60000 (17%)] Loss: 0.437021
Train Epoch: 5 [12800/60000 (21%)] Loss: 0.447683
Train Epoch: 5 [15360/60000 (26%)] Loss: 0.392386
Train Epoch: 5 [17920/60000 (30%)] Loss: 0.416837
Train Epoch: 5 [20480/60000 (34%)] Loss: 0.451183
Train Epoch: 5 [23040/60000 (38%)] Loss: 0.433644
Train Epoch: 5 [25600/60000 (43%)] Loss: 0.470782
Train Epoch: 5 [28160/60000 (47%)] Loss: 0.421898
Train Epoch: 5 [30720/60000 (51%)] Loss: 0.445305
Train Epoch: 5 [33280/60000 (55%)] Loss: 0.479172
Train Epoch: 5 [35840/60000 (60%)] Loss: 0.375300
Train Epoch: 5 [38400/60000 (64%)] Loss: 0.370561
Train Epoch: 5 [40960/60000 (68%)] Loss: 0.532634
Train Epoch: 5 [43520/60000 (72%)] Loss: 0.383319
Train Epoch: 5 [46080/60000 (77%)] Loss: 0.506851
Train Epoch: 5 [48640/60000 (81%)] Loss: 0.408551
Train Epoch: 5 [51200/60000 (85%)] Loss: 0.392309
Train Epoch: 5 [53760/60000 (89%)] Loss: 0.477996
Train Epoch: 5 [56320/60000 (94%)] Loss: 0.414231
Train Epoch: 5 [58880/60000 (98%)] Loss: 0.430027
Test set: Average loss: 0.1803, Accuracy: 9499/10000 (95%)
Train Epoch: 6 [0/60000 (0%)] Loss: 0.415102
Train Epoch: 6 [2560/60000 (4%)] Loss: 0.281096
Train Epoch: 6 [5120/60000 (9%)] Loss: 0.363706
Train Epoch: 6 [7680/60000 (13%)] Loss: 0.390821
Train Epoch: 6 [10240/60000 (17%)] Loss: 0.408141
Train Epoch: 6 [12800/60000 (21%)] Loss: 0.422376
Train Epoch: 6 [15360/60000 (26%)] Loss: 0.394143
Train Epoch: 6 [17920/60000 (30%)] Loss: 0.375477
Train Epoch: 6 [20480/60000 (34%)] Loss: 0.525811
Train Epoch: 6 [23040/60000 (38%)] Loss: 0.497835
Train Epoch: 6 [25600/60000 (43%)] Loss: 0.407303
Train Epoch: 6 [28160/60000 (47%)] Loss: 0.464176
Train Epoch: 6 [30720/60000 (51%)] Loss: 0.538057
Train Epoch: 6 [33280/60000 (55%)] Loss: 0.390377
Train Epoch: 6 [35840/60000 (60%)] Loss: 0.403131
Train Epoch: 6 [38400/60000 (64%)] Loss: 0.512176
Train Epoch: 6 [40960/60000 (68%)] Loss: 0.378995
Train Epoch: 6 [43520/60000 (72%)] Loss: 0.485860
Train Epoch: 6 [46080/60000 (77%)] Loss: 0.388245
Train Epoch: 6 [48640/60000 (81%)] Loss: 0.388625
Train Epoch: 6 [51200/60000 (85%)] Loss: 0.379450
Train Epoch: 6 [53760/60000 (89%)] Loss: 0.407995
Train Epoch: 6 [56320/60000 (94%)] Loss: 0.398069
Train Epoch: 6 [58880/60000 (98%)] Loss: 0.372017
Test set: Average loss: 0.1640, Accuracy: 9545/10000 (95%)
Train Epoch: 7 [0/60000 (0%)] Loss: 0.357198
Train Epoch: 7 [2560/60000 (4%)] Loss: 0.393828
Train Epoch: 7 [5120/60000 (9%)] Loss: 0.400493
Train Epoch: 7 [7680/60000 (13%)] Loss: 0.352309
Train Epoch: 7 [10240/60000 (17%)] Loss: 0.357330
Train Epoch: 7 [12800/60000 (21%)] Loss: 0.320281
Train Epoch: 7 [15360/60000 (26%)] Loss: 0.440437
Train Epoch: 7 [17920/60000 (30%)] Loss: 0.388508
Train Epoch: 7 [20480/60000 (34%)] Loss: 0.403732
Train Epoch: 7 [23040/60000 (38%)] Loss: 0.322718
Train Epoch: 7 [25600/60000 (43%)] Loss: 0.372186
Train Epoch: 7 [28160/60000 (47%)] Loss: 0.383310
Train Epoch: 7 [30720/60000 (51%)] Loss: 0.390324
Train Epoch: 7 [33280/60000 (55%)] Loss: 0.397030
Train Epoch: 7 [35840/60000 (60%)] Loss: 0.285954
Train Epoch: 7 [38400/60000 (64%)] Loss: 0.353880
Train Epoch: 7 [40960/60000 (68%)] Loss: 0.240818
Train Epoch: 7 [43520/60000 (72%)] Loss: 0.425822
Train Epoch: 7 [46080/60000 (77%)] Loss: 0.457441
Train Epoch: 7 [48640/60000 (81%)] Loss: 0.375786
Train Epoch: 7 [51200/60000 (85%)] Loss: 0.365595
Train Epoch: 7 [53760/60000 (89%)] Loss: 0.364900
Train Epoch: 7 [56320/60000 (94%)] Loss: 0.266271
Train Epoch: 7 [58880/60000 (98%)] Loss: 0.442880
Test set: Average loss: 0.1536, Accuracy: 9577/10000 (96%)
Train Epoch: 8 [0/60000 (0%)] Loss: 0.371176
Train Epoch: 8 [2560/60000 (4%)] Loss: 0.291452
Train Epoch: 8 [5120/60000 (9%)] Loss: 0.416313
Train Epoch: 8 [7680/60000 (13%)] Loss: 0.366298
Train Epoch: 8 [10240/60000 (17%)] Loss: 0.296640
Train Epoch: 8 [12800/60000 (21%)] Loss: 0.348865
Train Epoch: 8 [15360/60000 (26%)] Loss: 0.417321
Train Epoch: 8 [17920/60000 (30%)] Loss: 0.367058
Train Epoch: 8 [20480/60000 (34%)] Loss: 0.336347
Train Epoch: 8 [23040/60000 (38%)] Loss: 0.362581
Train Epoch: 8 [25600/60000 (43%)] Loss: 0.288644
Train Epoch: 8 [28160/60000 (47%)] Loss: 0.330283
Train Epoch: 8 [30720/60000 (51%)] Loss: 0.298797
Train Epoch: 8 [33280/60000 (55%)] Loss: 0.344115
Train Epoch: 8 [35840/60000 (60%)] Loss: 0.356521
Train Epoch: 8 [38400/60000 (64%)] Loss: 0.297551
Train Epoch: 8 [40960/60000 (68%)] Loss: 0.440755
Train Epoch: 8 [43520/60000 (72%)] Loss: 0.364442
Train Epoch: 8 [46080/60000 (77%)] Loss: 0.272985
Train Epoch: 8 [48640/60000 (81%)] Loss: 0.342815
Train Epoch: 8 [51200/60000 (85%)] Loss: 0.329443
Train Epoch: 8 [53760/60000 (89%)] Loss: 0.257021
Train Epoch: 8 [56320/60000 (94%)] Loss: 0.350975
Train Epoch: 8 [58880/60000 (98%)] Loss: 0.252117
Test set: Average loss: 0.1425, Accuracy: 9592/10000 (96%)
Train Epoch: 9 [0/60000 (0%)] Loss: 0.308571
Train Epoch: 9 [2560/60000 (4%)] Loss: 0.354496
Train Epoch: 9 [5120/60000 (9%)] Loss: 0.454645
Train Epoch: 9 [7680/60000 (13%)] Loss: 0.340073
Train Epoch: 9 [10240/60000 (17%)] Loss: 0.304953
Train Epoch: 9 [12800/60000 (21%)] Loss: 0.266210
Train Epoch: 9 [15360/60000 (26%)] Loss: 0.414784
Train Epoch: 9 [17920/60000 (30%)] Loss: 0.324893
Train Epoch: 9 [20480/60000 (34%)] Loss: 0.367169
Train Epoch: 9 [23040/60000 (38%)] Loss: 0.346932
Train Epoch: 9 [25600/60000 (43%)] Loss: 0.382222
Train Epoch: 9 [28160/60000 (47%)] Loss: 0.356705
Train Epoch: 9 [30720/60000 (51%)] Loss: 0.287982
Train Epoch: 9 [33280/60000 (55%)] Loss: 0.280525
Train Epoch: 9 [35840/60000 (60%)] Loss: 0.244508
Train Epoch: 9 [38400/60000 (64%)] Loss: 0.290698
Train Epoch: 9 [40960/60000 (68%)] Loss: 0.352147
Train Epoch: 9 [43520/60000 (72%)] Loss: 0.352036
Train Epoch: 9 [46080/60000 (77%)] Loss: 0.398510
Train Epoch: 9 [48640/60000 (81%)] Loss: 0.291793
Train Epoch: 9 [51200/60000 (85%)] Loss: 0.276297
Train Epoch: 9 [53760/60000 (89%)] Loss: 0.345035
Train Epoch: 9 [56320/60000 (94%)] Loss: 0.246514
Train Epoch: 9 [58880/60000 (98%)] Loss: 0.306455
Test set: Average loss: 0.1283, Accuracy: 9633/10000 (96%)
Train Epoch: 10 [0/60000 (0%)] Loss: 0.217172
Train Epoch: 10 [2560/60000 (4%)] Loss: 0.325854
Train Epoch: 10 [5120/60000 (9%)] Loss: 0.344469
Train Epoch: 10 [7680/60000 (13%)] Loss: 0.270222
Train Epoch: 10 [10240/60000 (17%)] Loss: 0.369047
Train Epoch: 10 [12800/60000 (21%)] Loss: 0.422427
Train Epoch: 10 [15360/60000 (26%)] Loss: 0.279517
Train Epoch: 10 [17920/60000 (30%)] Loss: 0.290899
Train Epoch: 10 [20480/60000 (34%)] Loss: 0.312549
Train Epoch: 10 [23040/60000 (38%)] Loss: 0.253973
Train Epoch: 10 [25600/60000 (43%)] Loss: 0.304302
Train Epoch: 10 [28160/60000 (47%)] Loss: 0.287465
Train Epoch: 10 [30720/60000 (51%)] Loss: 0.238241
Train Epoch: 10 [33280/60000 (55%)] Loss: 0.431481
Train Epoch: 10 [35840/60000 (60%)] Loss: 0.208366
Train Epoch: 10 [38400/60000 (64%)] Loss: 0.290634
Train Epoch: 10 [40960/60000 (68%)] Loss: 0.279229
Train Epoch: 10 [43520/60000 (72%)] Loss: 0.297195
Train Epoch: 10 [46080/60000 (77%)] Loss: 0.251031
Train Epoch: 10 [48640/60000 (81%)] Loss: 0.311252
Train Epoch: 10 [51200/60000 (85%)] Loss: 0.391167
Train Epoch: 10 [53760/60000 (89%)] Loss: 0.389775
Train Epoch: 10 [56320/60000 (94%)] Loss: 0.315159
Train Epoch: 10 [58880/60000 (98%)] Loss: 0.249528
Test set: Average loss: 0.1238, Accuracy: 9652/10000 (97%)
```

Training log with DropBlock2DV2:

```
Train Epoch: 1 [0/60000 (0%)] Loss: 2.361322
Train Epoch: 1 [2560/60000 (4%)] Loss: 2.341034
Train Epoch: 1 [5120/60000 (9%)] Loss: 2.287267
Train Epoch: 1 [7680/60000 (13%)] Loss: 2.274684
Train Epoch: 1 [10240/60000 (17%)] Loss: 2.260440
Train Epoch: 1 [12800/60000 (21%)] Loss: 2.259492
Train Epoch: 1 [15360/60000 (26%)] Loss: 2.240603
Train Epoch: 1 [17920/60000 (30%)] Loss: 2.207781
Train Epoch: 1 [20480/60000 (34%)] Loss: 2.177025
Train Epoch: 1 [23040/60000 (38%)] Loss: 2.137965
Train Epoch: 1 [25600/60000 (43%)] Loss: 2.029636
Train Epoch: 1 [28160/60000 (47%)] Loss: 1.967242
Train Epoch: 1 [30720/60000 (51%)] Loss: 1.948036
Train Epoch: 1 [33280/60000 (55%)] Loss: 1.856993
Train Epoch: 1 [35840/60000 (60%)] Loss: 1.786044
Train Epoch: 1 [38400/60000 (64%)] Loss: 1.657677
Train Epoch: 1 [40960/60000 (68%)] Loss: 1.603945
Train Epoch: 1 [43520/60000 (72%)] Loss: 1.550625
Train Epoch: 1 [46080/60000 (77%)] Loss: 1.424808
Train Epoch: 1 [48640/60000 (81%)] Loss: 1.454958
Train Epoch: 1 [51200/60000 (85%)] Loss: 1.263500
Train Epoch: 1 [53760/60000 (89%)] Loss: 1.255482
Train Epoch: 1 [56320/60000 (94%)] Loss: 1.157445
Train Epoch: 1 [58880/60000 (98%)] Loss: 1.271838
Test set: Average loss: 0.7383, Accuracy: 8467/10000 (85%)
Train Epoch: 2 [0/60000 (0%)] Loss: 1.198318
Train Epoch: 2 [2560/60000 (4%)] Loss: 1.133464
Train Epoch: 2 [5120/60000 (9%)] Loss: 0.957895
Train Epoch: 2 [7680/60000 (13%)] Loss: 1.068100
Train Epoch: 2 [10240/60000 (17%)] Loss: 1.103465
Train Epoch: 2 [12800/60000 (21%)] Loss: 0.948980
Train Epoch: 2 [15360/60000 (26%)] Loss: 0.931363
Train Epoch: 2 [17920/60000 (30%)] Loss: 0.922501
Train Epoch: 2 [20480/60000 (34%)] Loss: 0.954827
Train Epoch: 2 [23040/60000 (38%)] Loss: 0.876058
Train Epoch: 2 [25600/60000 (43%)] Loss: 0.891441
Train Epoch: 2 [28160/60000 (47%)] Loss: 0.835737
Train Epoch: 2 [30720/60000 (51%)] Loss: 0.847362
Train Epoch: 2 [33280/60000 (55%)] Loss: 0.790432
Train Epoch: 2 [35840/60000 (60%)] Loss: 0.857441
Train Epoch: 2 [38400/60000 (64%)] Loss: 0.718644
Train Epoch: 2 [40960/60000 (68%)] Loss: 0.751785
Train Epoch: 2 [43520/60000 (72%)] Loss: 0.803771
Train Epoch: 2 [46080/60000 (77%)] Loss: 0.754844
Train Epoch: 2 [48640/60000 (81%)] Loss: 0.751976
Train Epoch: 2 [51200/60000 (85%)] Loss: 0.717965
Train Epoch: 2 [53760/60000 (89%)] Loss: 0.781195
Train Epoch: 2 [56320/60000 (94%)] Loss: 0.730536
Train Epoch: 2 [58880/60000 (98%)] Loss: 0.689717
Test set: Average loss: 0.3512, Accuracy: 9149/10000 (91%)
Train Epoch: 3 [0/60000 (0%)] Loss: 0.637135
Train Epoch: 3 [2560/60000 (4%)] Loss: 0.669247
Train Epoch: 3 [5120/60000 (9%)] Loss: 0.628941
Train Epoch: 3 [7680/60000 (13%)] Loss: 0.577849
Train Epoch: 3 [10240/60000 (17%)] Loss: 0.592452
Train Epoch: 3 [12800/60000 (21%)] Loss: 0.653468
Train Epoch: 3 [15360/60000 (26%)] Loss: 0.664662
Train Epoch: 3 [17920/60000 (30%)] Loss: 0.587736
Train Epoch: 3 [20480/60000 (34%)] Loss: 0.608644
Train Epoch: 3 [23040/60000 (38%)] Loss: 0.687277
Train Epoch: 3 [25600/60000 (43%)] Loss: 0.544342
Train Epoch: 3 [28160/60000 (47%)] Loss: 0.708621
Train Epoch: 3 [30720/60000 (51%)] Loss: 0.593251
Train Epoch: 3 [33280/60000 (55%)] Loss: 0.579146
Train Epoch: 3 [35840/60000 (60%)] Loss: 0.549925
Train Epoch: 3 [38400/60000 (64%)] Loss: 0.525394
Train Epoch: 3 [40960/60000 (68%)] Loss: 0.661625
Train Epoch: 3 [43520/60000 (72%)] Loss: 0.455882
Train Epoch: 3 [46080/60000 (77%)] Loss: 0.563778
Train Epoch: 3 [48640/60000 (81%)] Loss: 0.553182
Train Epoch: 3 [51200/60000 (85%)] Loss: 0.553937
Train Epoch: 3 [53760/60000 (89%)] Loss: 0.606809
Train Epoch: 3 [56320/60000 (94%)] Loss: 0.594416
Train Epoch: 3 [58880/60000 (98%)] Loss: 0.544529
Test set: Average loss: 0.2543, Accuracy: 9338/10000 (93%)
Train Epoch: 4 [0/60000 (0%)] Loss: 0.619532
Train Epoch: 4 [2560/60000 (4%)] Loss: 0.567222
Train Epoch: 4 [5120/60000 (9%)] Loss: 0.508649
Train Epoch: 4 [7680/60000 (13%)] Loss: 0.494902
Train Epoch: 4 [10240/60000 (17%)] Loss: 0.521278
Train Epoch: 4 [12800/60000 (21%)] Loss: 0.495832
Train Epoch: 4 [15360/60000 (26%)] Loss: 0.468417
Train Epoch: 4 [17920/60000 (30%)] Loss: 0.595662
Train Epoch: 4 [20480/60000 (34%)] Loss: 0.403730
Train Epoch: 4 [23040/60000 (38%)] Loss: 0.547263
Train Epoch: 4 [25600/60000 (43%)] Loss: 0.523064
Train Epoch: 4 [28160/60000 (47%)] Loss: 0.460831
Train Epoch: 4 [30720/60000 (51%)] Loss: 0.452652
Train Epoch: 4 [33280/60000 (55%)] Loss: 0.439493
Train Epoch: 4 [35840/60000 (60%)] Loss: 0.528650
Train Epoch: 4 [38400/60000 (64%)] Loss: 0.487770
Train Epoch: 4 [40960/60000 (68%)] Loss: 0.540879
Train Epoch: 4 [43520/60000 (72%)] Loss: 0.470456
Train Epoch: 4 [46080/60000 (77%)] Loss: 0.437475
Train Epoch: 4 [48640/60000 (81%)] Loss: 0.415573
Train Epoch: 4 [51200/60000 (85%)] Loss: 0.392564
Train Epoch: 4 [53760/60000 (89%)] Loss: 0.521458
Train Epoch: 4 [56320/60000 (94%)] Loss: 0.433528
Train Epoch: 4 [58880/60000 (98%)] Loss: 0.389609
Test set: Average loss: 0.2143, Accuracy: 9432/10000 (94%)
Train Epoch: 5 [0/60000 (0%)] Loss: 0.531064
Train Epoch: 5 [2560/60000 (4%)] Loss: 0.501403
Train Epoch: 5 [5120/60000 (9%)] Loss: 0.393153
Train Epoch: 5 [7680/60000 (13%)] Loss: 0.507973
Train Epoch: 5 [10240/60000 (17%)] Loss: 0.437351
Train Epoch: 5 [12800/60000 (21%)] Loss: 0.449640
Train Epoch: 5 [15360/60000 (26%)] Loss: 0.393346
Train Epoch: 5 [17920/60000 (30%)] Loss: 0.416081
Train Epoch: 5 [20480/60000 (34%)] Loss: 0.449489
Train Epoch: 5 [23040/60000 (38%)] Loss: 0.432535
Train Epoch: 5 [25600/60000 (43%)] Loss: 0.470373
Train Epoch: 5 [28160/60000 (47%)] Loss: 0.421085
Train Epoch: 5 [30720/60000 (51%)] Loss: 0.445716
Train Epoch: 5 [33280/60000 (55%)] Loss: 0.478192
Train Epoch: 5 [35840/60000 (60%)] Loss: 0.374121
Train Epoch: 5 [38400/60000 (64%)] Loss: 0.369466
Train Epoch: 5 [40960/60000 (68%)] Loss: 0.533549
Train Epoch: 5 [43520/60000 (72%)] Loss: 0.383733
Train Epoch: 5 [46080/60000 (77%)] Loss: 0.507195
Train Epoch: 5 [48640/60000 (81%)] Loss: 0.407680
Train Epoch: 5 [51200/60000 (85%)] Loss: 0.392881
Train Epoch: 5 [53760/60000 (89%)] Loss: 0.479417
Train Epoch: 5 [56320/60000 (94%)] Loss: 0.414116
Train Epoch: 5 [58880/60000 (98%)] Loss: 0.432079
Test set: Average loss: 0.1801, Accuracy: 9501/10000 (95%)
Train Epoch: 6 [0/60000 (0%)] Loss: 0.415744
Train Epoch: 6 [2560/60000 (4%)] Loss: 0.280737
Train Epoch: 6 [5120/60000 (9%)] Loss: 0.364816
Train Epoch: 6 [7680/60000 (13%)] Loss: 0.390640
Train Epoch: 6 [10240/60000 (17%)] Loss: 0.410318
Train Epoch: 6 [12800/60000 (21%)] Loss: 0.423457
Train Epoch: 6 [15360/60000 (26%)] Loss: 0.392294
Train Epoch: 6 [17920/60000 (30%)] Loss: 0.373533
Train Epoch: 6 [20480/60000 (34%)] Loss: 0.528408
Train Epoch: 6 [23040/60000 (38%)] Loss: 0.498351
Train Epoch: 6 [25600/60000 (43%)] Loss: 0.406549
Train Epoch: 6 [28160/60000 (47%)] Loss: 0.462406
Train Epoch: 6 [30720/60000 (51%)] Loss: 0.534846
Train Epoch: 6 [33280/60000 (55%)] Loss: 0.390974
Train Epoch: 6 [35840/60000 (60%)] Loss: 0.403040
Train Epoch: 6 [38400/60000 (64%)] Loss: 0.513974
Train Epoch: 6 [40960/60000 (68%)] Loss: 0.380744
Train Epoch: 6 [43520/60000 (72%)] Loss: 0.485197
Train Epoch: 6 [46080/60000 (77%)] Loss: 0.387865
Train Epoch: 6 [48640/60000 (81%)] Loss: 0.387096
Train Epoch: 6 [51200/60000 (85%)] Loss: 0.380673
Train Epoch: 6 [53760/60000 (89%)] Loss: 0.407134
Train Epoch: 6 [56320/60000 (94%)] Loss: 0.398302
Train Epoch: 6 [58880/60000 (98%)] Loss: 0.372190
Test set: Average loss: 0.1641, Accuracy: 9549/10000 (95%)
Train Epoch: 7 [0/60000 (0%)] Loss: 0.356512
Train Epoch: 7 [2560/60000 (4%)] Loss: 0.393870
Train Epoch: 7 [5120/60000 (9%)] Loss: 0.402270
Train Epoch: 7 [7680/60000 (13%)] Loss: 0.353308
Train Epoch: 7 [10240/60000 (17%)] Loss: 0.358547
Train Epoch: 7 [12800/60000 (21%)] Loss: 0.319642
Train Epoch: 7 [15360/60000 (26%)] Loss: 0.438179
Train Epoch: 7 [17920/60000 (30%)] Loss: 0.386755
Train Epoch: 7 [20480/60000 (34%)] Loss: 0.404731
Train Epoch: 7 [23040/60000 (38%)] Loss: 0.322265
Train Epoch: 7 [25600/60000 (43%)] Loss: 0.372252
Train Epoch: 7 [28160/60000 (47%)] Loss: 0.381766
Train Epoch: 7 [30720/60000 (51%)] Loss: 0.390532
Train Epoch: 7 [33280/60000 (55%)] Loss: 0.396237
Train Epoch: 7 [35840/60000 (60%)] Loss: 0.285679
Train Epoch: 7 [38400/60000 (64%)] Loss: 0.355077
Train Epoch: 7 [40960/60000 (68%)] Loss: 0.241128
Train Epoch: 7 [43520/60000 (72%)] Loss: 0.426708
Train Epoch: 7 [46080/60000 (77%)] Loss: 0.456212
Train Epoch: 7 [48640/60000 (81%)] Loss: 0.376701
Train Epoch: 7 [51200/60000 (85%)] Loss: 0.365228
Train Epoch: 7 [53760/60000 (89%)] Loss: 0.365721
Train Epoch: 7 [56320/60000 (94%)] Loss: 0.266916
Train Epoch: 7 [58880/60000 (98%)] Loss: 0.443687
Test set: Average loss: 0.1535, Accuracy: 9579/10000 (96%)
Train Epoch: 8 [0/60000 (0%)] Loss: 0.370897
Train Epoch: 8 [2560/60000 (4%)] Loss: 0.289111
Train Epoch: 8 [5120/60000 (9%)] Loss: 0.414931
Train Epoch: 8 [7680/60000 (13%)] Loss: 0.367614
Train Epoch: 8 [10240/60000 (17%)] Loss: 0.296232
Train Epoch: 8 [12800/60000 (21%)] Loss: 0.348647
Train Epoch: 8 [15360/60000 (26%)] Loss: 0.418467
Train Epoch: 8 [17920/60000 (30%)] Loss: 0.364306
Train Epoch: 8 [20480/60000 (34%)] Loss: 0.336696
Train Epoch: 8 [23040/60000 (38%)] Loss: 0.364676
Train Epoch: 8 [25600/60000 (43%)] Loss: 0.286585
Train Epoch: 8 [28160/60000 (47%)] Loss: 0.332353
Train Epoch: 8 [30720/60000 (51%)] Loss: 0.295880
Train Epoch: 8 [33280/60000 (55%)] Loss: 0.344958
Train Epoch: 8 [35840/60000 (60%)] Loss: 0.355939
Train Epoch: 8 [38400/60000 (64%)] Loss: 0.297799
Train Epoch: 8 [40960/60000 (68%)] Loss: 0.443442
Train Epoch: 8 [43520/60000 (72%)] Loss: 0.366912
Train Epoch: 8 [46080/60000 (77%)] Loss: 0.272624
Train Epoch: 8 [48640/60000 (81%)] Loss: 0.340495
Train Epoch: 8 [51200/60000 (85%)] Loss: 0.332022
Train Epoch: 8 [53760/60000 (89%)] Loss: 0.256738
Train Epoch: 8 [56320/60000 (94%)] Loss: 0.351117
Train Epoch: 8 [58880/60000 (98%)] Loss: 0.253743
Test set: Average loss: 0.1426, Accuracy: 9593/10000 (96%)
Train Epoch: 9 [0/60000 (0%)] Loss: 0.307950
Train Epoch: 9 [2560/60000 (4%)] Loss: 0.354884
Train Epoch: 9 [5120/60000 (9%)] Loss: 0.455334
Train Epoch: 9 [7680/60000 (13%)] Loss: 0.339795
Train Epoch: 9 [10240/60000 (17%)] Loss: 0.302723
Train Epoch: 9 [12800/60000 (21%)] Loss: 0.262783
Train Epoch: 9 [15360/60000 (26%)] Loss: 0.413777
Train Epoch: 9 [17920/60000 (30%)] Loss: 0.325851
Train Epoch: 9 [20480/60000 (34%)] Loss: 0.367753
Train Epoch: 9 [23040/60000 (38%)] Loss: 0.348576
Train Epoch: 9 [25600/60000 (43%)] Loss: 0.379523
Train Epoch: 9 [28160/60000 (47%)] Loss: 0.357496
Train Epoch: 9 [30720/60000 (51%)] Loss: 0.287231
Train Epoch: 9 [33280/60000 (55%)] Loss: 0.282984
Train Epoch: 9 [35840/60000 (60%)] Loss: 0.244869
Train Epoch: 9 [38400/60000 (64%)] Loss: 0.289696
Train Epoch: 9 [40960/60000 (68%)] Loss: 0.353052
Train Epoch: 9 [43520/60000 (72%)] Loss: 0.352727
Train Epoch: 9 [46080/60000 (77%)] Loss: 0.398184
Train Epoch: 9 [48640/60000 (81%)] Loss: 0.291148
Train Epoch: 9 [51200/60000 (85%)] Loss: 0.276232
Train Epoch: 9 [53760/60000 (89%)] Loss: 0.342424
Train Epoch: 9 [56320/60000 (94%)] Loss: 0.245837
Train Epoch: 9 [58880/60000 (98%)] Loss: 0.305476
Test set: Average loss: 0.1284, Accuracy: 9632/10000 (96%)
Train Epoch: 10 [0/60000 (0%)] Loss: 0.216860
Train Epoch: 10 [2560/60000 (4%)] Loss: 0.325897
Train Epoch: 10 [5120/60000 (9%)] Loss: 0.341949
Train Epoch: 10 [7680/60000 (13%)] Loss: 0.270563
Train Epoch: 10 [10240/60000 (17%)] Loss: 0.369292
Train Epoch: 10 [12800/60000 (21%)] Loss: 0.424985
Train Epoch: 10 [15360/60000 (26%)] Loss: 0.280446
Train Epoch: 10 [17920/60000 (30%)] Loss: 0.291100
Train Epoch: 10 [20480/60000 (34%)] Loss: 0.314208
Train Epoch: 10 [23040/60000 (38%)] Loss: 0.253791
Train Epoch: 10 [25600/60000 (43%)] Loss: 0.304992
Train Epoch: 10 [28160/60000 (47%)] Loss: 0.288408
Train Epoch: 10 [30720/60000 (51%)] Loss: 0.239414
Train Epoch: 10 [33280/60000 (55%)] Loss: 0.431122
Train Epoch: 10 [35840/60000 (60%)] Loss: 0.208240
Train Epoch: 10 [38400/60000 (64%)] Loss: 0.291883
Train Epoch: 10 [40960/60000 (68%)] Loss: 0.281189
Train Epoch: 10 [43520/60000 (72%)] Loss: 0.297465
Train Epoch: 10 [46080/60000 (77%)] Loss: 0.253582
Train Epoch: 10 [48640/60000 (81%)] Loss: 0.311440
Train Epoch: 10 [51200/60000 (85%)] Loss: 0.389858
Train Epoch: 10 [53760/60000 (89%)] Loss: 0.389085
Train Epoch: 10 [56320/60000 (94%)] Loss: 0.314335
Train Epoch: 10 [58880/60000 (98%)] Loss: 0.248064
Test set: Average loss: 0.1241, Accuracy: 9655/10000 (97%)
```

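Overall, the two implementations behave essentially the same: the losses track each other closely, and the final test accuracy is 9652/10000 vs. 9655/10000.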

posted on 2018-11-19 19:36 darkknightzh  Views (2546)  Comments (2)

Zhejiang Public Network Security Filing No. 33010602011771
