Assignment3 依存分析

首先实现parser_transitions.py,接着实现parser_model.py,最后运行run.py进行展示。

1.parser_transitions.py

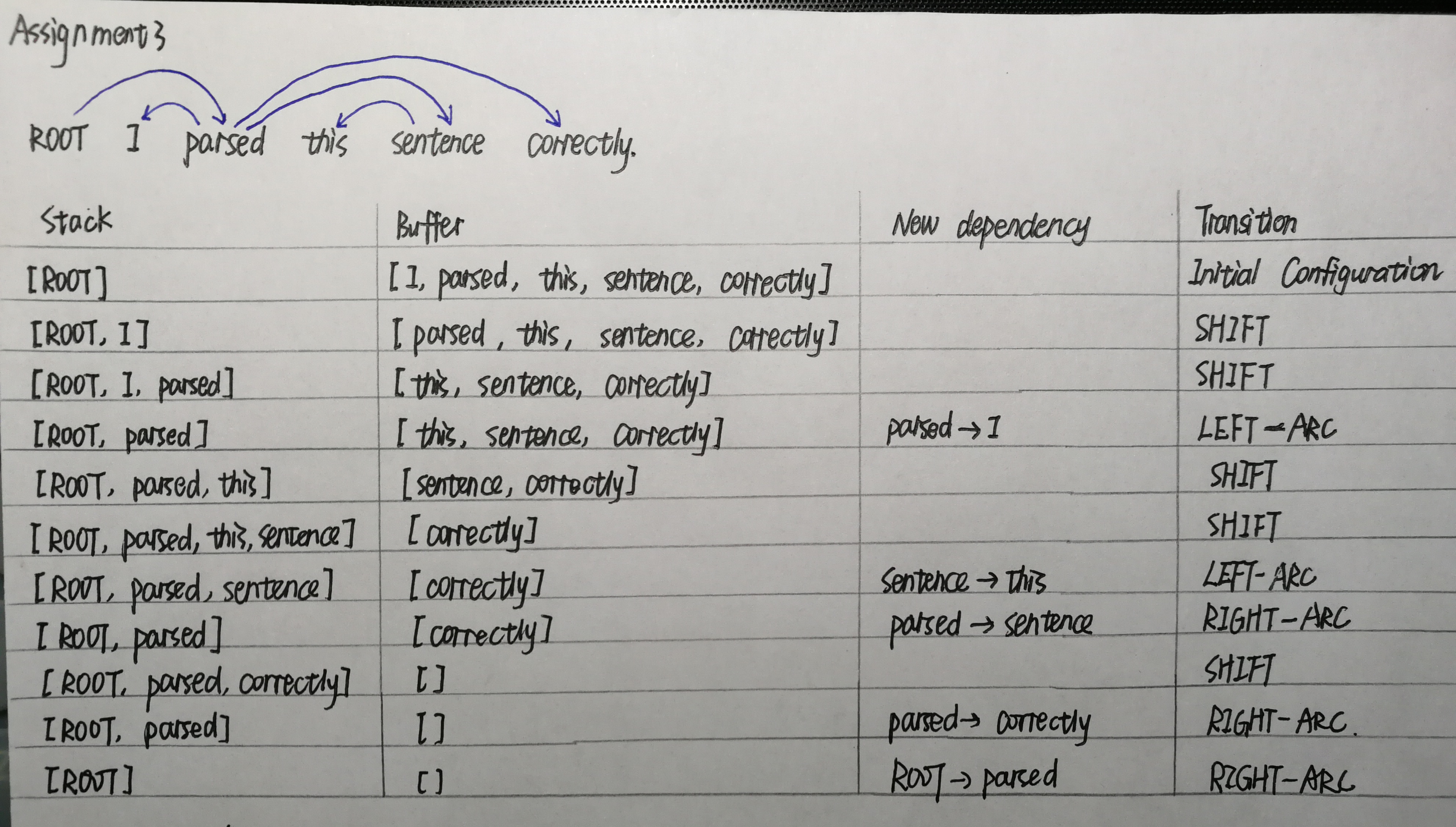

1.1PartialParse类

1 class PartialParse(object): 2 def __init__(self, sentence): 3 """Initializes this partial parse. 4 5 @param sentence (list of str): The sentence to be parsed as a list of words. 6 Your code should not modify the sentence. 7 """ 8 # The sentence being parsed is kept for bookkeeping purposes. Do not alter it in your code. 9 self.sentence = sentence 10 11 ### YOUR CODE HERE (3 Lines) 12 ### Your code should initialize the following fields: 13 ### self.stack: The current stack represented as a list with the top of the stack as the last element of the list. 14 ### self.buffer: The current buffer represented as a list with the first item on the buffer as the first item of the list 15 ### self.dependencies: The list of dependencies produced so far. Represented as a list of 16 ### tuples where each tuple is of the form (head, dependent). 17 ### Order for this list doesn't matter. 18 ### 19 ### Note: The root token should be represented with the string "ROOT" 20 ### 21 self.stack = ['ROOT'] 22 self.buffer = self.sentence.copy() 23 self.dependencies =[] 24 ### END YOUR CODE 25 26 27 def parse_step(self, transition): 28 """Performs a single parse step by applying the given transition to this partial parse 29 30 @param transition (str): A string that equals "S", "LA", or "RA" representing the shift, 31 left-arc, and right-arc transitions. You can assume the provided 32 transition is a legal transition. 33 """ 34 ### YOUR CODE HERE (~7-10 Lines) 35 ### TODO: 36 ### Implement a single parsing step, i.e. the logic for the following as 37 ### described in the pdf handout: 38 ### 1. Shift 39 ### 2. Left Arc 40 ### 3. Right Arc 41 if transition == 'S': 42 self.stack.append(self.buffer.pop(0)) 43 elif transition == 'LA': 44 dependent = self.stack.pop(-2) 45 self.dependencies.append((self.stack[-1], dependent)) 46 elif transition == 'RA': 47 dependent = self.stack.pop() 48 self.dependencies.append((self.stack[-1], dependent)) 49 ### END YOUR CODE 50 51 52 def parse(self, transitions): 53 """Applies the provided transitions to this PartialParse 54 55 @param transitions (list of str): The list of transitions in the order they should be applied 56 57 @return dsependencies (list of string tuples): The list of dependencies produced when 58 parsing the sentence. Represented as a list of 59 tuples where each tuple is of the form (head, dependent). 60 """ 61 for transition in transitions: 62 self.parse_step(transition) 63 return self.dependencies

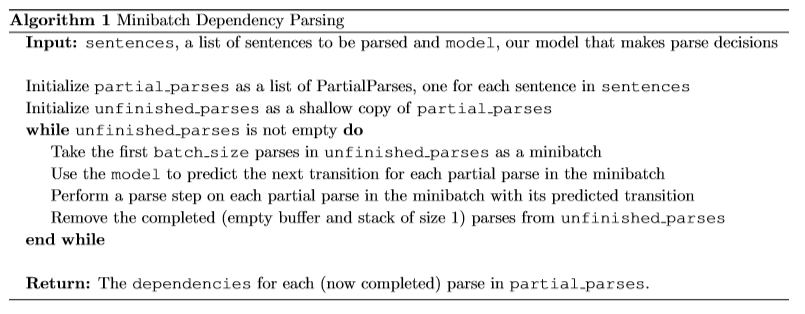

1.2minibatch_parse函数

1 def minibatch_parse(sentences, model, batch_size): 2 """Parses a list of sentences in minibatches using a model. 3 4 @param sentences (list of list of str): A list of sentences to be parsed 5 (each sentence is a list of words and each word is of type string) 6 @param model (ParserModel): The model that makes parsing decisions. It is assumed to have a function 7 model.predict(partial_parses) that takes in a list of PartialParses as input and 8 returns a list of transitions predicted for each parse. That is, after calling 9 transitions = model.predict(partial_parses) 10 transitions[i] will be the next transition to apply to partial_parses[i]. 11 @param batch_size (int): The number of PartialParses to include in each minibatch 12 13 14 @return dependencies (list of dependency lists): A list where each element is the dependencies 15 list for a parsed sentence. Ordering should be the 16 same as in sentences (i.e., dependencies[i] should 17 contain the parse for sentences[i]). 18 """ 19 dependencies = [] 20 21 ### YOUR CODE HERE (~8-10 Lines) 22 ### TODO: 23 ### Implement the minibatch parse algorithm as described in the pdf handout 24 ### 25 ### Note: A shallow copy (as denoted in the PDF) can be made with the "=" sign in python, e.g. unfinished_parses = partial_parses[:]. 26 ### Here `unfinished_parses` is a shallow copy of `partial_parses`. 27 ### In Python, a shallow copied list like `unfinished_parses` does not contain new instances 28 ### of the object stored in `partial_parses`. Rather both lists refer to the same objects. 29 ### In our case, `partial_parses` contains a list of partial parses. `unfinished_parses` 30 ### contains references to the same objects. Thus, you should NOT use the `del` operator 31 ### to remove objects from the `unfinished_parses` list. This will free the underlying memory that 32 ### is being accessed by `partial_parses` and may cause your code to crash. 33 partial_parses = [PartialParse(s) for s in sentences] #为每个句子初始化PartialParse对象 34 unfinished_parses = partial_parses.copy() 35 36 while unfinished_parses: 37 minibatch = unfinished_parses[:batch_size] 38 transitions = model.predict(minibatch) 39 for i, parse in enumerate(minibatch): #取出minibatch的每一个的parse来进行一次transition操作 40 parse.parse_step(transitions[i]) 41 if len(parse.stack)==1 and not parse.buffer: 42 unfinished_parses.remove(parse) #过滤掉已经完成的parse 43 44 dependencies = [parse.dependencies for parse in partial_parses] #获得所有的依赖 45 ### END YOUR CODE 46 47 return dependencies

1.3测试

1 def test_step(name, transition, stack, buf, deps, 2 ex_stack, ex_buf, ex_deps): 3 """Tests that a single parse step returns the expected output""" 4 pp = PartialParse([]) 5 pp.stack, pp.buffer, pp.dependencies = stack, buf, deps 6 7 pp.parse_step(transition) 8 stack, buf, deps = (tuple(pp.stack), tuple(pp.buffer), tuple(sorted(pp.dependencies))) 9 assert stack == ex_stack, \ 10 "{:} test resulted in stack {:}, expected {:}".format(name, stack, ex_stack) 11 assert buf == ex_buf, \ 12 "{:} test resulted in buffer {:}, expected {:}".format(name, buf, ex_buf) 13 assert deps == ex_deps, \ 14 "{:} test resulted in dependency list {:}, expected {:}".format(name, deps, ex_deps) 15 print("{:} test passed!".format(name)) 16 17 18 def test_parse_step(): 19 """Simple tests for the PartialParse.parse_step function 20 Warning: these are not exhaustive 21 """ 22 test_step("SHIFT", "S", ["ROOT", "the"], ["cat", "sat"], [], 23 ("ROOT", "the", "cat"), ("sat",), ()) 24 test_step("LEFT-ARC", "LA", ["ROOT", "the", "cat"], ["sat"], [], 25 ("ROOT", "cat",), ("sat",), (("cat", "the"),)) 26 test_step("RIGHT-ARC", "RA", ["ROOT", "run", "fast"], [], [], 27 ("ROOT", "run",), (), (("run", "fast"),)) 28 29 30 def test_parse(): 31 """Simple tests for the PartialParse.parse function 32 Warning: these are not exhaustive 33 """ 34 sentence = ["parse", "this", "sentence"] 35 dependencies = PartialParse(sentence).parse(["S", "S", "S", "LA", "RA", "RA"]) 36 dependencies = tuple(sorted(dependencies)) 37 expected = (('ROOT', 'parse'), ('parse', 'sentence'), ('sentence', 'this')) 38 assert dependencies == expected, \ 39 "parse test resulted in dependencies {:}, expected {:}".format(dependencies, expected) 40 assert tuple(sentence) == ("parse", "this", "sentence"), \ 41 "parse test failed: the input sentence should not be modified" 42 print("parse test passed!") 43 44 45 class DummyModel(object): 46 """Dummy model for testing the minibatch_parse function 47 """ 48 def __init__(self, mode = "unidirectional"): 49 self.mode = mode 50 51 def predict(self, partial_parses): 52 if self.mode == "unidirectional": 53 return self.unidirectional_predict(partial_parses) 54 elif self.mode == "interleave": 55 return self.interleave_predict(partial_parses) 56 else: 57 raise NotImplementedError() 58 59 def unidirectional_predict(self, partial_parses): 60 """First shifts everything onto the stack and then does exclusively right arcs if the first word of 61 the sentence is "right", "left" if otherwise. 62 """ 63 return [("RA" if pp.stack[1] is "right" else "LA") if len(pp.buffer) == 0 else "S" 64 for pp in partial_parses] 65 66 def interleave_predict(self, partial_parses): 67 """First shifts everything onto the stack and then interleaves "right" and "left". 68 """ 69 return [("RA" if len(pp.stack) % 2 == 0 else "LA") if len(pp.buffer) == 0 else "S" 70 for pp in partial_parses] 71 72 def test_dependencies(name, deps, ex_deps): 73 """Tests the provided dependencies match the expected dependencies""" 74 deps = tuple(sorted(deps)) 75 assert deps == ex_deps, \ 76 "{:} test resulted in dependency list {:}, expected {:}".format(name, deps, ex_deps) 77 78 79 def test_minibatch_parse(): 80 """Simple tests for the minibatch_parse function 81 Warning: these are not exhaustive 82 """ 83 84 # Unidirectional arcs test 85 sentences = [["right", "arcs", "only"], 86 ["right", "arcs", "only", "again"], 87 ["left", "arcs", "only"], 88 ["left", "arcs", "only", "again"]] 89 deps = minibatch_parse(sentences, DummyModel(), 2) 90 test_dependencies("minibatch_parse", deps[0], 91 (('ROOT', 'right'), ('arcs', 'only'), ('right', 'arcs'))) 92 test_dependencies("minibatch_parse", deps[1], 93 (('ROOT', 'right'), ('arcs', 'only'), ('only', 'again'), ('right', 'arcs'))) 94 test_dependencies("minibatch_parse", deps[2], 95 (('only', 'ROOT'), ('only', 'arcs'), ('only', 'left'))) 96 test_dependencies("minibatch_parse", deps[3], 97 (('again', 'ROOT'), ('again', 'arcs'), ('again', 'left'), ('again', 'only'))) 98 99 # Out-of-bound test 100 sentences = [["right"]] 101 deps = minibatch_parse(sentences, DummyModel(), 2) 102 test_dependencies("minibatch_parse", deps[0], (('ROOT', 'right'),)) 103 104 # Mixed arcs test 105 sentences = [["this", "is", "interleaving", "dependency", "test"]] 106 deps = minibatch_parse(sentences, DummyModel(mode="interleave"), 1) 107 test_dependencies("minibatch_parse", deps[0], 108 (('ROOT', 'is'), ('dependency', 'interleaving'), 109 ('dependency', 'test'), ('is', 'dependency'), ('is', 'this'))) 110 print("minibatch_parse test passed!") 111 112 113 if __name__ == '__main__': 114 args = sys.argv 115 if len(args) != 2: 116 raise Exception("You did not provide a valid keyword. Either provide 'part_c' or 'part_d', when executing this script") 117 elif args[1] == "part_c": 118 test_parse_step() 119 test_parse() 120 elif args[1] == "part_d": 121 test_minibatch_parse() 122 else: 123 raise Exception("You did not provide a valid keyword. Either provide 'part_c' or 'part_d', when executing this script")

Tip:Spyder传入参数运行的方法:点击菜单栏中的运行->单文件配置->在命令行选项前打勾,后面空格处填入传入的参数即可。

当传入参数part_c时:

SHIFT test passed!

LEFT-ARC test passed!

RIGHT-ARC test passed!

parse test passed!

当传入参数part_d时:minibatch_parse test passed!

2.parser_model.py

实质上就是搭建一个三层的前馈神经网络,用ReLU做激活函数,最后一层用softmax输出,交叉熵做损失函数,同时还加了embedding层。

2.1ParserModel类

1 class ParserModel(nn.Module): 2 """ Feedforward neural network with an embedding layer and two hidden layers. 3 The ParserModel will predict which transition should be applied to a 4 given partial parse configuration. 5 6 PyTorch Notes: 7 - Note that "ParserModel" is a subclass of the "nn.Module" class. In PyTorch all neural networks 8 are a subclass of this "nn.Module". 9 - The "__init__" method is where you define all the layers and parameters 10 (embedding layers, linear layers, dropout layers, etc.). 11 - "__init__" gets automatically called when you create a new instance of your class, e.g. 12 when you write "m = ParserModel()". 13 - Other methods of ParserModel can access variables that have "self." prefix. Thus, 14 you should add the "self." prefix layers, values, etc. that you want to utilize 15 in other ParserModel methods. 16 - For further documentation on "nn.Module" please see https://pytorch.org/docs/stable/nn.html. 17 """ 18 def __init__(self, embeddings, n_features=36, 19 hidden_size=200, n_classes=3, dropout_prob=0.5): 20 """ Initialize the parser model. 21 22 @param embeddings (ndarray): word embeddings (num_words, embedding_size) 23 @param n_features (int): number of input features 24 @param hidden_size (int): number of hidden units 25 @param n_classes (int): number of output classes 26 @param dropout_prob (float): dropout probability 27 """ 28 super(ParserModel, self).__init__() 29 self.n_features = n_features #一个单词有几个特征 30 self.n_classes = n_classes #输出的类别数 31 self.dropout_prob = dropout_prob 32 self.embed_size = embeddings.shape[1] 33 self.hidden_size = hidden_size #嵌入维度 34 self.embeddings = nn.Parameter(torch.tensor(embeddings)) 35 36 ### YOUR CODE HERE (~10 Lines) 37 ### TODO: 38 ### 1) Declare `self.embed_to_hidden_weight` and `self.embed_to_hidden_bias` as `nn.Parameter`. 39 ### Initialize weight with the `nn.init.xavier_uniform_` function and bias with `nn.init.uniform_` 40 ### with default parameters. 41 ### 2) Construct `self.dropout` layer. 42 ### 3) Declare `self.hidden_to_logits_weight` and `self.hidden_to_logits_bias` as `nn.Parameter`. 43 ### Initialize weight with the `nn.init.xavier_uniform_` function and bias with `nn.init.uniform_` 44 ### with default parameters. 45 ### 46 ### Note: Trainable variables are declared as `nn.Parameter` which is a commonly used API 47 ### to include a tensor into a computational graph to support updating w.r.t its gradient. 48 ### Here, we use Xavier Uniform Initialization for our Weight initialization. 49 ### It has been shown empirically, that this provides better initial weights 50 ### for training networks than random uniform initialization. 51 ### For more details checkout this great blogpost: 52 ### http://andyljones.tumblr.com/post/110998971763/an-explanation-of-xavier-initialization 53 ### 54 ### Please see the following docs for support: 55 ### nn.Parameter: https://pytorch.org/docs/stable/nn.html#parameters 56 ### Initialization: https://pytorch.org/docs/stable/nn.init.html 57 ### Dropout: https://pytorch.org/docs/stable/nn.html#dropout-layers 58 self.embed_to_hidden = nn.Linear(self.n_features * self.embed_size, self.hidden_size) 59 nn.init.xavier_normal_(self.embed_to_hidden.weight) 60 nn.init.uniform_(self.embed_to_hidden.bias) 61 62 self.dropout = nn.Dropout(self.dropout_prob) 63 64 self.hidden_to_logits = nn.Linear(self.hidden_size, self.n_classes) 65 nn.init.xavier_normal_(self.hidden_to_logits.weight) 66 nn.init.uniform_(self.hidden_to_logits.bias) 67 68 ### END YOUR CODE 69 70 def embedding_lookup(self, w): #选取一个张量里面索引(w)对应的元素 71 """ Utilize `w` to select embeddings from embedding matrix `self.embeddings` 72 @param w (Tensor): input tensor of word indices (batch_size, n_features) 73 74 @return x (Tensor): tensor of embeddings for words represented in w 75 (batch_size, n_features * embed_size) 76 """ 77 ### YOUR CODE HERE (~1-3 Lines) 78 ### TODO: 79 ### 1) For each index `i` in `w`, select `i`th vector from self.embeddings 80 ### 2) Reshape the tensor using `view` function if necessary 81 ### 82 ### Note: All embedding vectors are stacked and stored as a matrix. The model receives 83 ### a list of indices representing a sequence of words, then it calls this lookup 84 ### function to map indices to sequence of embeddings. 85 ### 86 ### This problem aims to test your understanding of embedding lookup, 87 ### so DO NOT use any high level API like nn.Embedding 88 ### (we are asking you to implement that!). Pay attention to tensor shapes 89 ### and reshape if necessary. Make sure you know each tensor's shape before you run the code! 90 ### 91 ### Pytorch has some useful APIs for you, and you can use either one 92 ### in this problem (except nn.Embedding). These docs might be helpful: 93 ### Index select: https://pytorch.org/docs/stable/torch.html#torch.index_select 94 ### Gather: https://pytorch.org/docs/stable/torch.html#torch.gather 95 ### View: https://pytorch.org/docs/stable/tensors.html#torch.Tensor.view 96 97 x = self.embeddings[w] #(batch_size, n_features, embed_size) 98 x = x.view(x.shape[0], -1) #(batch_size, n_features * embed_size) 99 ### END YOUR CODE 100 101 return x 102 103 104 def forward(self, w): 105 """ Run the model forward. 106 107 Note that we will not apply the softmax function here because it is included in the loss function nn.CrossEntropyLoss 108 109 PyTorch Notes: 110 - Every nn.Module object (PyTorch model) has a `forward` function. 111 - When you apply your nn.Module to an input tensor `w` this function is applied to the tensor. 112 For example, if you created an instance of your ParserModel and applied it to some `w` as follows, 113 the `forward` function would called on `w` and the result would be stored in the `output` variable: 114 model = ParserModel() 115 output = model(w) # this calls the forward function 116 - For more details checkout: https://pytorch.org/docs/stable/nn.html#torch.nn.Module.forward 117 118 @param w (Tensor): input tensor of tokens (batch_size, n_features) 119 120 @return logits (Tensor): tensor of predictions (output after applying the layers of the network) 121 without applying softmax (batch_size, n_classes) 122 """ 123 ### YOUR CODE HERE (~3-5 lines) 124 ### TODO: 125 ### Complete the forward computation as described in write-up. In addition, include a dropout layer 126 ### as decleared in `__init__` after ReLU function. 127 ### 128 ### Note: We do not apply the softmax to the logits here, because 129 ### the loss function (torch.nn.CrossEntropyLoss) applies it more efficiently. 130 ### 131 ### Please see the following docs for support: 132 ### Matrix product: https://pytorch.org/docs/stable/torch.html#torch.matmul 133 ### ReLU: https://pytorch.org/docs/stable/nn.html?highlight=relu#torch.nn.functional.relu 134 x = self.embedding_lookup(w) #(batch_size, n_features * embed_size) 135 h = self.embed_to_hidden(x) #(n_features * embed_size, hidden_size) 136 137 h = F.relu(h) 138 h = self.dropout(h) 139 logits = self.hidden_to_logits(h) 140 ### END YOUR CODE 141 142 return logits

2.2测试

1 if __name__ == "__main__": 2 3 parser = argparse.ArgumentParser(description='Simple sanity check for parser_model.py') 4 parser.add_argument('-e', '--embedding', action='store_true', help='sanity check for embeding_lookup function') 5 parser.add_argument('-f', '--forward', action='store_true', help='sanity check for forward function') 6 args = parser.parse_args() 7 8 embeddings = np.zeros((100, 30), dtype=np.float32) 9 model = ParserModel(embeddings) 10 11 def check_embedding(): 12 inds = torch.randint(0, 100, (4, 36), dtype=torch.long) 13 selected = model.embedding_lookup(inds) 14 assert np.all(selected.data.numpy() == 0), "The result of embedding lookup: " \ 15 + repr(selected) + " contains non-zero elements." 16 17 def check_forward(): 18 inputs =torch.randint(0, 100, (4, 36), dtype=torch.long) 19 out = model(inputs) 20 expected_out_shape = (4, 3) 21 assert out.shape == expected_out_shape, "The result shape of forward is: " + repr(out.shape) + \ 22 " which doesn't match expected " + repr(expected_out_shape) 23 24 if args.embedding: 25 check_embedding() 26 print("Embedding_lookup sanity check passes!") 27 28 if args.forward: 29 check_forward() 30 print("Forward sanity check passes!")

当传入参数-e时:Embedding_lookup sanity check passes!

当传入参数-f时:Forward sanity check passes!

3.run.py

3.1train函数

定义优化器和交叉熵损失函数。

1 def train(parser, train_data, dev_data, output_path, batch_size=1024, n_epochs=10, lr=0.0005): 2 """ Train the neural dependency parser. 3 4 @param parser (Parser): Neural Dependency Parser 5 @param train_data (): 6 @param dev_data (): 7 @param output_path (str): Path to which model weights and results are written. 8 @param batch_size (int): Number of examples in a single batch 9 @param n_epochs (int): Number of training epochs 10 @param lr (float): Learning rate 11 """ 12 best_dev_UAS = 0 13 14 ### YOUR CODE HERE (~2-7 lines) 15 ### TODO: 16 ### 1) Construct Adam Optimizer in variable `optimizer` 17 ### 2) Construct the Cross Entropy Loss Function in variable `loss_func` with `mean` 18 ### reduction (default) 19 ### 20 ### Hint: Use `parser.model.parameters()` to pass optimizer 21 ### necessary parameters to tune. 22 ### Please see the following docs for support: 23 ### Adam Optimizer: https://pytorch.org/docs/stable/optim.html 24 ### Cross Entropy Loss: https://pytorch.org/docs/stable/nn.html#crossentropyloss 25 optimizer = optim.Adam(parser.model.parameters(), lr=1e-3) 26 loss_func = nn.CrossEntropyLoss() 27 28 ### END YOUR CODE 29 30 for epoch in range(n_epochs): 31 print("Epoch {:} out of {:}".format(epoch + 1, n_epochs)) 32 dev_UAS = train_for_epoch(parser, train_data, dev_data, optimizer, loss_func, batch_size) 33 if dev_UAS > best_dev_UAS: 34 best_dev_UAS = dev_UAS 35 print("New best dev UAS! Saving model.") 36 torch.save(parser.model.state_dict(), output_path) 37 print("")

3.2train_for_epoch函数

1 def train_for_epoch(parser, train_data, dev_data, optimizer, loss_func, batch_size): 2 """ Train the neural dependency parser for single epoch. 3 4 Note: In PyTorch we can signify train versus test and automatically have 5 the Dropout Layer applied and removed, accordingly, by specifying 6 whether we are training, `model.train()`, or evaluating, `model.eval()` 7 8 @param parser (Parser): Neural Dependency Parser 9 @param train_data (): 10 @param dev_data (): 11 @param optimizer (nn.Optimizer): Adam Optimizer 12 @param loss_func (nn.CrossEntropyLoss): Cross Entropy Loss Function 13 @param batch_size (int): batch size 14 15 @return dev_UAS (float): Unlabeled Attachment Score (UAS) for dev data 16 """ 17 parser.model.train() # Places model in "train" mode, i.e. apply dropout layer 18 n_minibatches = math.ceil(len(train_data) / batch_size) 19 loss_meter = AverageMeter() 20 21 with tqdm(total=(n_minibatches)) as prog: 22 for i, (train_x, train_y) in enumerate(minibatches(train_data, batch_size)): 23 optimizer.zero_grad() # remove any baggage in the optimizer 24 loss = 0. # store loss for this batch here 25 train_x = torch.from_numpy(train_x).long() 26 train_y = torch.from_numpy(train_y.nonzero()[1]).long() 27 28 ### YOUR CODE HERE (~5-10 lines) 29 ### TODO: 30 ### 1) Run train_x forward through model to produce `logits` 31 ### 2) Use the `loss_func` parameter to apply the PyTorch CrossEntropyLoss function. 32 ### This will take `logits` and `train_y` as inputs. It will output the CrossEntropyLoss 33 ### between softmax(`logits`) and `train_y`. Remember that softmax(`logits`) 34 ### are the predictions (y^ from the PDF). 35 ### 3) Backprop losses 36 ### 4) Take step with the optimizer 37 ### Please see the following docs for support: 38 ### Optimizer Step: https://pytorch.org/docs/stable/optim.html#optimizer-step 39 logits = parser.model(train_x) 40 loss = loss_func(logits, train_y) 41 loss.backward() 42 optimizer.step() 43 44 ### END YOUR CODE 45 prog.update(1) 46 loss_meter.update(loss.item()) 47 48 print ("Average Train Loss: {}".format(loss_meter.avg)) 49 50 print("Evaluating on dev set",) 51 parser.model.eval() # Places model in "eval" mode, i.e. don't apply dropout layer 52 dev_UAS, _ = parser.parse(dev_data) 53 print("- dev UAS: {:.2f}".format(dev_UAS * 100.0)) 54 return dev_UAS

3.3测试

1 if __name__ == "__main__": 2 debug = args.debug 3 4 assert (torch.__version__.split(".") >= ["1", "0", "0"]), "Please install torch version >= 1.0.0" 5 6 print(80 * "=") 7 print("INITIALIZING") 8 print(80 * "=") 9 parser, embeddings, train_data, dev_data, test_data = load_and_preprocess_data(debug) 10 11 start = time.time() 12 model = ParserModel(embeddings) 13 parser.model = model 14 print("took {:.2f} seconds\n".format(time.time() - start)) 15 16 print(80 * "=") 17 print("TRAINING") 18 print(80 * "=") 19 output_dir = "results/{:%Y%m%d_%H%M%S}/".format(datetime.now()) 20 output_path = output_dir + "model.weights" 21 22 if not os.path.exists(output_dir): 23 os.makedirs(output_dir) 24 25 train(parser, train_data, dev_data, output_path, batch_size=1024, n_epochs=10, lr=0.0005) 26 27 if not debug: 28 print(80 * "=") 29 print("TESTING") 30 print(80 * "=") 31 print("Restoring the best model weights found on the dev set") 32 parser.model.load_state_dict(torch.load(output_path)) 33 print("Final evaluation on test set",) 34 parser.model.eval() 35 UAS, dependencies = parser.parse(test_data) 36 print("- test UAS: {:.2f}".format(UAS * 100.0)) 37 print("Done!")

================================================================================

INITIALIZING

================================================================================

Loading data...

took 3.21 seconds

Building parser...

took 1.41 seconds

Loading pretrained embeddings...

took 4.72 seconds

Vectorizing data...

took 1.84 seconds

Preprocessing training data...

took 56.26 seconds

0%| | 0/1848 [00:00<?, ?it/s]took 0.01 seconds

================================================================================

TRAINING

================================================================================

Epoch 1 out of 10

100%|██████████| 1848/1848 [03:54<00:00, 7.89it/s]

Average Train Loss: 0.1767250092627553

Evaluating on dev set

1445850it [00:00, 29785971.49it/s]

0%| | 0/1848 [00:00<?, ?it/s]- dev UAS: 84.95

New best dev UAS! Saving model.

Epoch 2 out of 10

100%|██████████| 1848/1848 [03:47<00:00, 8.12it/s]

Average Train Loss: 0.1093398475922741

Evaluating on dev set

1445850it [00:00, 16996881.76it/s]

0%| | 0/1848 [00:00<?, ?it/s]- dev UAS: 86.25

New best dev UAS! Saving model.

Epoch 3 out of 10

100%|██████████| 1848/1848 [04:21<00:00, 7.08it/s]

Average Train Loss: 0.09525894280926232

Evaluating on dev set

1445850it [00:00, 19918067.29it/s]

0%| | 0/1848 [00:00<?, ?it/s]- dev UAS: 87.61

New best dev UAS! Saving model.

Epoch 4 out of 10

100%|██████████| 1848/1848 [04:41<00:00, 6.56it/s]

Average Train Loss: 0.0863158397126572

Evaluating on dev set

1445850it [00:00, 19792149.63it/s]

0%| | 0/1848 [00:00<?, ?it/s]- dev UAS: 87.84

New best dev UAS! Saving model.

Epoch 5 out of 10

100%|██████████| 1848/1848 [04:18<00:00, 7.14it/s]

Average Train Loss: 0.07947457737986853

Evaluating on dev set

1445850it [00:00, 17618584.60it/s]

0%| | 0/1848 [00:00<?, ?it/s]- dev UAS: 87.97

New best dev UAS! Saving model.

Epoch 6 out of 10

100%|██████████| 1848/1848 [04:16<00:00, 7.21it/s]

Average Train Loss: 0.07394953573614488

Evaluating on dev set

1445850it [00:00, 16607289.49it/s]

0%| | 0/1848 [00:00<?, ?it/s]- dev UAS: 88.50

New best dev UAS! Saving model.

Epoch 7 out of 10

100%|██████████| 1848/1848 [04:50<00:00, 6.36it/s]

Average Train Loss: 0.06902175460583268

Evaluating on dev set

1445850it [00:00, 17512350.78it/s]

0%| | 0/1848 [00:00<?, ?it/s]- dev UAS: 88.31

Epoch 8 out of 10

100%|██████████| 1848/1848 [04:46<00:00, 6.45it/s]

Average Train Loss: 0.06499305106014078

Evaluating on dev set

1445850it [00:00, 11467000.04it/s]

0%| | 0/1848 [00:00<?, ?it/s]- dev UAS: 88.43

Epoch 9 out of 10

100%|██████████| 1848/1848 [04:43<00:00, 6.53it/s]

Average Train Loss: 0.06139369089220897

Evaluating on dev set

1445850it [00:00, 14742517.17it/s]

0%| | 0/1848 [00:00<?, ?it/s]- dev UAS: 88.36

Epoch 10 out of 10

100%|██████████| 1848/1848 [04:32<00:00, 6.78it/s]

Average Train Loss: 0.05800683121700888

Evaluating on dev set

1445850it [00:00, 24076379.68it/s]

- dev UAS: 88.11

================================================================================

TESTING

================================================================================

Restoring the best model weights found on the dev set

Final evaluation on test set

2919736it [00:00, 30076750.78it/s]

- test UAS: 88.73

Done!

浙公网安备 33010602011771号

浙公网安备 33010602011771号