TensorFlow 2.0: building a multi-input composite model with compile and fit

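The features produced by the convolutional layers are flattened and concatenated with the non-image features, and the fused vector is then passed through fully connected layers.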

    import os

    import numpy as np
    import pandas as pd
    import tensorflow as tf

    # GPU setup: require at least one GPU and let TensorFlow allocate memory on demand
    physical_devices = tf.config.experimental.list_physical_devices('GPU')
    assert len(physical_devices) > 0, "Not enough GPU hardware devices available"
    tf.config.experimental.set_memory_growth(physical_devices[0], True)

    # Load the non-image features, the labels and the image tensor
    data_dir = r'D:\学姐项目\Nowcasting\hubei_CIKM\test_v2'
    data5 = np.load(os.path.join(data_dir, 'train_nonimg_feature.npy'))
    print('data5.shape:{}'.format(data5.shape))
    trn_pic = pd.read_csv(os.path.join(data_dir, 'train_SAM_IND.csv'), encoding='gbk')
    Y = np.reshape(10 * trn_pic.label.values, [-1, 1])
    trn_img = np.load(os.path.join(data_dir, 'train_global_3layer_image.npy'))
    trn_img = 1.5 * 1e-4 * trn_img ** 2

    # Hyperparameters
    learn_rate = 1.5e-3
    print('lr:{}'.format(learn_rate))
    epochs = 200000
    data_length = 2300
    test_length = 200
    train_length = data_length - test_length
    # bat_size = train_length
    bat_size = 500
    my_drop = 0.15
    print('mydrop:{}'.format(my_drop))

    # tf.data views of the same split (used here only to inspect a sample batch)
    all_db = tf.data.Dataset.from_tensor_slices(
        (data5[:train_length], trn_img[:train_length], Y[:train_length])).batch(train_length)
    train_db = tf.data.Dataset.from_tensor_slices(
        (data5[:train_length], trn_img[:train_length], Y[:train_length])).batch(bat_size)
    test_db = tf.data.Dataset.from_tensor_slices(
        (data5[train_length:], trn_img[train_length:], Y[train_length:])).batch(test_length)
    sample = next(iter(train_db))
    print('sample[0]:{},sample[1]:{}'.format(sample[0].shape, sample[1].shape))

    # Build the composite network
    # Input shapes
    img_input = tf.keras.layers.Input(shape=(101, 101, 3))
    noimg_input = tf.keras.layers.Input(shape=(43,))


    def conv_block(x, filters, pool):
        """Conv2D -> BatchNormalization -> MaxPool2D -> Dropout."""
        x = tf.keras.layers.Conv2D(
            filters, kernel_size=[4, 4], activation=tf.nn.relu,
            kernel_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.1, seed=12),
            bias_initializer=tf.keras.initializers.Constant(0.1))(x)
        x = tf.keras.layers.BatchNormalization()(x)
        x = tf.keras.layers.MaxPool2D(pool_size=[pool, pool], strides=pool, padding='same')(x)
        return tf.keras.layers.Dropout(my_drop)(x)


    # Convolutional branch: four blocks with 6, 12, 24 and 48 filters
    my_conv1 = conv_block(img_input, 6, 3)
    my_conv2 = conv_block(my_conv1, 12, 3)
    my_conv3 = conv_block(my_conv2, 24, 2)
    my_conv4 = conv_block(my_conv3, 48, 2)
    my_conv4 = tf.keras.layers.Flatten()(my_conv4)
    # my_conv4 = tf.squeeze(my_conv4)

    # Composite model: concatenate the non-image features with the flattened image features
    concat = tf.keras.layers.concatenate([noimg_input, my_conv4])
    out = tf.keras.layers.Dense(45, activation='relu')(concat)
    out = tf.keras.layers.Dense(20, activation='relu')(out)
    out = tf.keras.layers.Dense(1, activation='relu',
                                bias_initializer=tf.keras.initializers.Constant(11.0))(out)
    sum_model = tf.keras.models.Model(inputs=[noimg_input, img_input], outputs=out)
    sum_model.summary()
    # sum_model = tf.keras.models.Model(inputs=img_input, outputs=my_conv4)

    # Optimizer, compile and fit; the input list must follow the order given in `inputs`
    opt = tf.keras.optimizers.Adam(learning_rate=learn_rate)
    sum_model.compile(loss=tf.losses.MSE, optimizer=opt)
    sum_model.fit([data5[:train_length], trn_img[:train_length]], Y[:train_length],
                  validation_data=([data5[train_length:], trn_img[train_length:]], Y[train_length:]),
                  epochs=epochs, batch_size=bat_size)
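
To get a feel for how much training the hyperparameters above imply, the following sketch works out the number of batches per epoch from the values in the script. It only does arithmetic on `data_length`, `test_length`, `bat_size` and `epochs`; the names `steps_per_epoch`, `last_batch` and `total_steps` are introduced here for illustration, and it assumes `fit` keeps the final partial batch, which is its behaviour when given NumPy arrays with `batch_size`.

```python
import math

# Values copied from the training script
data_length = 2300
test_length = 200
bat_size = 500
epochs = 200000

train_length = data_length - test_length              # samples used for training
steps_per_epoch = math.ceil(train_length / bat_size)  # batches per epoch, including the partial one
last_batch = train_length - (steps_per_epoch - 1) * bat_size  # size of the final batch
total_steps = steps_per_epoch * epochs                 # gradient updates if training runs to the end

print("train samples:", train_length)
print("batches per epoch:", steps_per_epoch)
print("size of last batch:", last_batch)
print("total updates:", total_steps)
```

With a count this large, the `epochs` value acts more like an upper bound; stopping the run manually or by a callback is a likely way to use it, though the original post does not say which.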

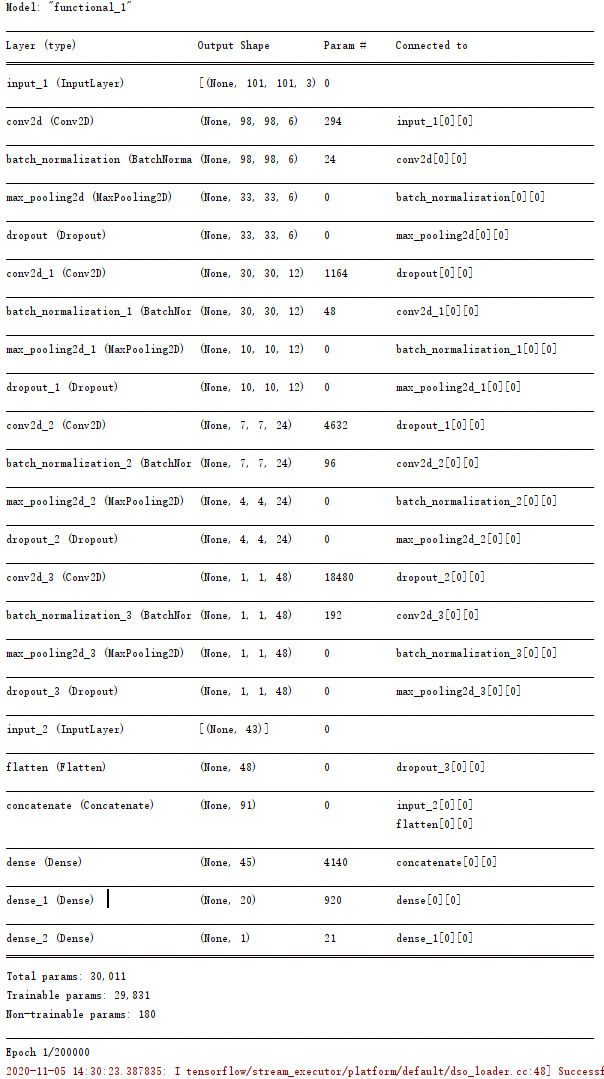

Network structure:
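
The spatial sizes in the convolutional branch can also be checked by hand. The sketch below is a pure-Python trace, not part of the original script: it assumes the Keras defaults used above (`Conv2D` with `padding='valid'` and stride 1, `MaxPool2D` with `padding='same'`), and the helper names `conv_valid`, `pool_same` and `trace_shapes` are made up for this illustration.

```python
import math


def conv_valid(size, kernel, stride=1):
    """Output length of a 'valid' convolution along one spatial axis."""
    return (size - kernel) // stride + 1


def pool_same(size, stride):
    """Output length of a 'same'-padded pooling layer along one spatial axis."""
    return math.ceil(size / stride)


def trace_shapes(size=101, blocks=((6, 3), (12, 3), (24, 2), (48, 2)), kernel=4):
    """Return (filters, size_after_conv, size_after_pool) for each conv block."""
    rows = []
    for filters, pool in blocks:
        after_conv = conv_valid(size, kernel)
        size = pool_same(after_conv, pool)
        rows.append((filters, after_conv, size))
    return rows


rows = trace_shapes()
for i, (filters, after_conv, after_pool) in enumerate(rows, start=1):
    print(f"block {i}: conv -> {after_conv}x{after_conv}x{filters}, "
          f"pool -> {after_pool}x{after_pool}x{filters}")

# Flattened image features and the width of the fused vector fed to the first Dense layer
last_filters, _, last_size = rows[-1]
flat_features = last_size * last_size * last_filters
fused_width = 43 + flat_features  # 43 non-image features from noimg_input
print("flattened image features:", flat_features)
print("width after concatenation:", fused_width)
```

Under these assumptions the 101x101 input shrinks to 1x1x48 after the fourth block, so the image branch contributes 48 values and the concatenated vector has 91 entries, which should match the shapes printed by `sum_model.summary()`.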
