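```python
import tensorflow as tf
import numpy as np

# Toy data: four one-dimensional samples and their binary labels
x = np.array([1, 2, 3, 4], dtype=np.float32)
y = np.array([0, 0, 1, 1], dtype=np.float32)

# Parameters of the logistic regression model z = w * x + b, both initialized to 1.0
w = tf.Variable(1.)
b = tf.Variable(1.)
```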

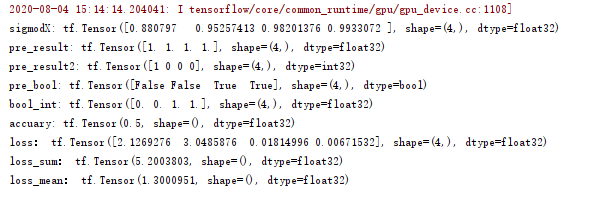
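For reference, these are the quantities the following code computes, written out for $n$ samples $(x_i, y_i)$ with $y_i \in \{0, 1\}$ (notation chosen here to match the code). With $w = b = 1$ and $x = (1, 2, 3, 4)$, the logits are $z = (2, 3, 4, 5)$.

$$
\begin{aligned}
z_i &= w x_i + b, \qquad \sigma(z_i) = \frac{1}{1 + e^{-z_i}} \\
\hat{y}_i &= \operatorname{round}\big(\sigma(z_i)\big), \qquad \text{accuracy} = \frac{1}{n} \sum_{i=1}^{n} \mathbf{1}\big[\hat{y}_i = y_i\big] \\
\ell_i &= -\Big(y_i \log \sigma(z_i) + (1 - y_i) \log\big(1 - \sigma(z_i)\big)\Big) \\
L_{\text{sum}} &= \sum_{i=1}^{n} \ell_i, \qquad L_{\text{mean}} = \frac{1}{n} L_{\text{sum}}
\end{aligned}
$$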

```python
sigmodX = 1 / (1 + tf.exp(-(w * x + b)))  # sigmoid function: predicted probability of class 1

pre_result = tf.round(sigmodX)  # round each probability to 0 or 1, i.e. a threshold of 0.5
pre_result2 = tf.where(sigmodX >= 0.9, 1, 0)  # custom threshold: predict 1 only when the probability is >= 0.9

pre_bool = tf.equal(pre_result, y)  # compare the rounded predictions with the labels to see which are correct
bool_int = tf.cast(pre_bool, tf.float32)  # convert True/False to 1.0/0.0
accuary = tf.reduce_mean(bool_int)  # the mean of the correctness array is the accuracy

loss = -(y * tf.math.log(sigmodX) + (1 - y) * tf.math.log(1 - sigmodX))  # per-sample loss (binary cross-entropy)
loss_sum = tf.reduce_sum(loss)  # total loss over all samples
loss_mean = tf.reduce_mean(loss)  # mean loss over all samples

print('sigmodX:', sigmodX)
print('pre_result:', pre_result)
print('pre_result2:', pre_result2)
print('pre_bool:', pre_bool)
print('bool_int:', bool_int)
print('accuary:', accuary)
print('loss:', loss)
print('loss_sum:', loss_sum)
print('loss_mean:', loss_mean)
```
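As a cross-check, the same forward pass can be recomputed with plain NumPy. This is a sketch, not the author's code; it uses float64, so the last digits may differ slightly from TensorFlow's float32 output. The loop at the end shows how the decision threshold changes the predictions; the thresholds 0.9 and 0.97 are illustrative values chosen for this toy data.

```python
import numpy as np

# Same toy data and initial parameters as the TensorFlow code
x = np.array([1, 2, 3, 4], dtype=np.float64)
y = np.array([0, 0, 1, 1], dtype=np.float64)
w, b = 1.0, 1.0

z = w * x + b                      # linear part (logits): [2, 3, 4, 5]
sigmoid = 1 / (1 + np.exp(-z))     # predicted probability of class 1
pred = np.round(sigmoid)           # round to 0/1 (threshold 0.5)
accuracy = np.mean(pred == y)      # fraction of correct predictions

# Per-sample binary cross-entropy, then its sum and mean
loss = -(y * np.log(sigmoid) + (1 - y) * np.log(1 - sigmoid))
loss_sum = loss.sum()
loss_mean = loss.mean()

print("sigmoid:", sigmoid)
print("pred:", pred, "accuracy:", accuracy)
print("loss:", loss, "sum:", loss_sum, "mean:", loss_mean)

# Effect of the decision threshold t: predict 1 when the probability is >= t
for t in (0.5, 0.9, 0.97):
    pred_t = (sigmoid >= t).astype(int)
    print(f"threshold={t}: pred={pred_t}, accuracy={np.mean(pred_t == y)}")
```

With w = b = 1 every probability is above 0.5, so rounding predicts class 1 for all four samples and the accuracy is 0.5; raising the threshold to 0.97 separates the two classes on this toy data.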

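One practical caveat with computing the loss as `log(sigmodX)` and `log(1 - sigmodX)`: in float32, a large logit makes the sigmoid round to exactly 1.0, and `log(1 - 1.0)` is `-inf`. The sketch below (NumPy; the function names `bce_naive` and `bce_from_logits` are hypothetical) compares that form with the algebraically equivalent expression in terms of the logit z, `max(z, 0) - z*y + log(1 + exp(-|z|))`, which is the formula documented for TensorFlow's `tf.nn.sigmoid_cross_entropy_with_logits(labels=..., logits=...)`. The logit value 20 is an arbitrary choice to trigger the problem.

```python
import numpy as np

def bce_naive(y, z):
    """Per-sample cross-entropy computed from sigmoid(z), as in the code above."""
    p = 1 / (1 + np.exp(-z))
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

def bce_from_logits(y, z):
    """Same loss rewritten in terms of the logit z:
    max(z, 0) - z*y + log(1 + exp(-|z|)), which never evaluates log(0)."""
    return np.maximum(z, 0) - z * y + np.log1p(np.exp(-np.abs(z)))

y = np.array([0, 0, 1, 1], dtype=np.float32)
z_small = np.array([2, 3, 4, 5], dtype=np.float32)   # logits from the example (w = b = 1)
z_large = np.array([20, 3, 4, 5], dtype=np.float32)  # first logit made large on purpose

print(bce_naive(y, z_small))        # both versions agree closely here
print(bce_from_logits(y, z_small))

with np.errstate(divide="ignore"):  # silence the log(0) warning
    print(bce_naive(y, z_large))    # first entry is inf: sigmoid(20) rounds to 1.0 in float32
print(bce_from_logits(y, z_large))  # first entry stays finite (about 20.0)
```

In the TensorFlow code, this would mean passing the logits `w * x + b` (not `sigmodX`) and float32 labels to that function.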

浙公网安备 33010602011771号