【milvus】Installing Milvus via k8s

Background

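Because we need a vector database, we are installing Milvus. A Milvus cluster deployment, however, depends on k8s.

Current state: the k8s cluster environment is broken, and standalone Milvus is already installed. The plan is to repair k8s first and then install Milvus in cluster mode.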

Recovering k8s

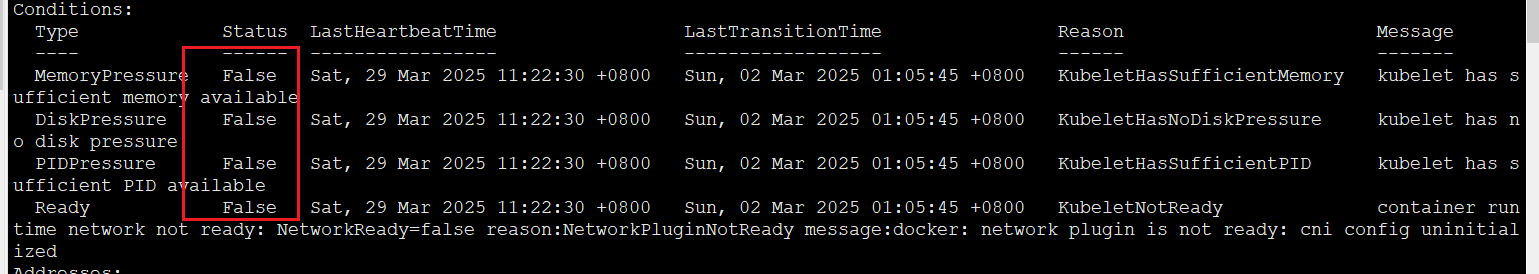

# Check node status: the node is NotReady

kubectl get nodes

# Show node details

kubectl describe node xxxxx

Resource problems such as insufficient memory or disk space were ruled out.

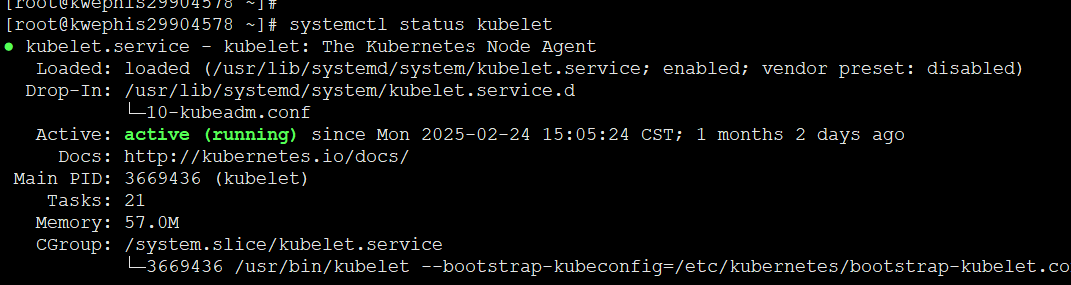

systemctl status kubelet # check the service status

journalctl -u kubelet -f # follow the live log
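On a cluster with more than one node, you can filter the NotReady nodes out of the `kubectl get nodes` listing and then run `kubectl describe node` on each one. The sketch below assumes the default column layout (NAME, STATUS, ROLES, AGE, VERSION). The function name `notready_nodes` is hypothetical.

```shell
# List nodes whose STATUS does not start with "Ready".
# "Ready,SchedulingDisabled" counts as ready; "NotReady" and "Unknown" do not.
# Input: `kubectl get nodes` output (header line included) on stdin.
notready_nodes() {
  awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}

# Example on a live cluster:
#   kubectl get nodes | notready_nodes
#   for n in $(kubectl get nodes | notready_nodes); do kubectl describe node "$n"; done
```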

Try restarting the kubelet:

systemctl restart kubelet

# Check the network state

kubectl get pods -n kube-system

# No network plugin is installed

# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

kubectl apply -f calico.yaml

Calico components are failing

Check the component status:

kubectl get pods -n kube-system | grep calico

docker images

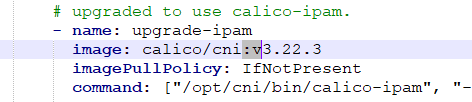

# The image versions in the YAML do not match the versions installed on this system; change the component versions in the YAML and reinstall

kubectl get deployments --all-namespaces

# Delete them, specifying the namespace

kubectl delete deployment calico-kube-controllers -n kube-system

kubectl delete daemonset calico-node -n kube-system # calico-node is a DaemonSet, not a Deployment

kubectl delete deployment milvus-operator -n milvus-operator

kubectl apply -f calico.yaml
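The manual edit above aligns the calico image tags in calico.yaml with the images already listed by `docker images`. You can also do this with sed. The sketch assumes every Calico image line has the form `image: <registry>/calico/<component>:<tag>`, unquoted. `set_calico_tag` is a hypothetical helper. Use the tag that your own `docker images` output shows.

```shell
# Rewrite the tag of every calico/<component> image in a manifest.
# Assumes lines of the form "image: [registry/]calico/<component>:<tag>"
# (unquoted). The original file is kept as <manifest>.bak.
set_calico_tag() {
  local manifest=$1 tag=$2
  sed -i.bak -E \
    "s#(image:[[:space:]]*([^[:space:]]*/)?calico/[A-Za-z0-9._-]+):[^[:space:]]+#\\1:${tag}#" \
    "$manifest"
}

# Example (use the tag reported by `docker images | grep calico`):
#   set_calico_tag calico.yaml <local-tag>
#   kubectl apply -f calico.yaml
```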

Still failing after reinstalling.

Edit the corresponding image names in the YAML again.

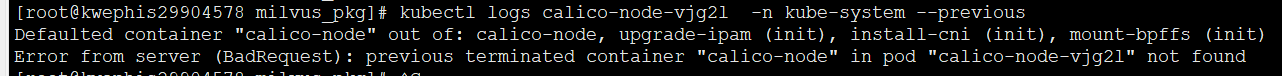

# View the logs of the crashed container

kubectl logs calico-node-cs4c5 -n kube-system --previous # logs from the previous (crashed) run

kubectl describe pod calico-node-lxd7q -n kube-system

# Check the DaemonSet's volume mounts

kubectl get daemonset calico-node -n kube-system -o yaml | grep -A 5 "volumeMounts"

# Check Pod status and logs

kubectl get pods -n kube-system -l k8s-app=calico-node

kubectl logs -n kube-system calico-node-vjg2l -c calico-node

kubectl describe pod -n kube-system calico-node-lqtd7 | grep -A 5 "Mounts"

# Confirm the output includes:

# /var/lib/calico from var-lib-calico (rw)

# /lib/modules from lib-modules (ro)

docker ps | grep cali

docker stop 657a7ba65eac

kubectl describe pod calico-node-lqtd7 -n kube-system | grep -A 5 "Events"

kubectl logs calico-node-lqtd7 -n kube-system --previous
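You can turn the mount checklist above into a yes/no check on the `kubectl describe pod` output. In the sketch below, `has_calico_mounts` is a hypothetical helper. It only looks for the two mount lines listed above.

```shell
# Succeed only if `kubectl describe pod` output on stdin shows both host
# mounts from the checklist above; fixed-string matching via index().
has_calico_mounts() {
  awk '
    index($0, "/var/lib/calico from var-lib-calico (rw)") { var_lib = 1 }
    index($0, "/lib/modules from lib-modules (ro)")       { modules = 1 }
    END { exit !(var_lib && modules) }
  '
}

# Example:
#   kubectl describe pod -n kube-system calico-node-lqtd7 | has_calico_mounts \
#     && echo "mounts OK" || echo "mounts missing"
```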

Installing Milvus in cluster mode

kubectl apply -f https://raw.githubusercontent.com/zilliztech/milvus-operator/main/deploy/manifests/deployment.yaml

# The network timed out, so the file was downloaded in a browser and uploaded to the server

kubectl apply -f deployment.yaml

# Check whether the milvus-operator pods are running

kubectl get pods -n milvus-operator

The server shows the pod status as Pending.

Installing Helm

tar -zxvf helm-v3.12.2-linux-amd64.tar.gz

cp helm /usr/local/bin/helm

helm repo add milvus --insecure-skip-tls-verify https://zilliztech.github.io/milvus-helm/

helm repo update

helm install milvus-dy-v1 milvus/milvus --insecure-skip-tls-verify

# Pull the corresponding images

# Check service status

kubectl get pods

# kubectl describe pod milvus-dy-v1-datanode-bbbdf468-mhmvk   (view the pod's error messages)

# kubectl describe pod milvus-dy-v1-pulsarv3-bookie-0   (view the pod's error messages)

docker pull milvusdb/milvus:v2.5.8

docker pull apachepulsar/pulsar:3.0.7
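Instead of pulling images one at a time as errors show up, you can read the full image list from the rendered chart. The sketch assumes each image sits on its own `image:` line in the `helm template` output. This holds for typical charts but is not guaranteed. `manifest_images` is a hypothetical name.

```shell
# Print each distinct image referenced by a rendered manifest on stdin.
# Handles "image: x", "- image: x" and quoted values; other keys such as
# imagePullPolicy are ignored because "image" must be followed by ":".
manifest_images() {
  sed -n -E 's/^[[:space:]]*(-[[:space:]]*)?image:[[:space:]]*"?([^"[:space:]]+)"?.*$/\2/p' |
    sort -u
}

# Example: pull everything the chart needs in one go.
#   helm template milvus-dy-v1 milvus/milvus | manifest_images |
#     while read -r img; do docker pull "$img"; done
```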

The status is Completed:

kubectl describe pod milvus-dy-v1-pulsarv3-bookie-init-hc4k8

Exposing the cluster port

# 1) Edit the Milvus cluster Service with the command below and change its type to NodePort (the edited spec is shown below)

kubectl edit svc dayu-milvus

spec:
  clusterIP: xxx.xxx.xx.xx
  clusterIPs:
  - xxx.xxx.xx.xx
  externalTrafficPolicy: Cluster
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: milvus
    nodePort: 30700
    port: 19530
    protocol: TCP
    targetPort: milvus
  - name: metrics
    nodePort: 32333
    port: 9091
    protocol: TCP
    targetPort: metrics
  selector:
    app.kubernetes.io/instance: dayu-milvus
    app.kubernetes.io/name: milvus
    component: proxy
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}

# 2) Check the dayu-milvus ports. Milvus can then be reached at master-node-IP:exposed-port (30700).

kubectl get svc

NAME                             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                               AGE
dayu-milvus                      NodePort    xxx.xxx.xx.xx   <none>        19530:30700/TCP,9091:32333/TCP        25m
dayu-milvus-datanode             ClusterIP   None            <none>        9091/TCP                              25m
dayu-milvus-etcd                 ClusterIP   xxx.xxx.xx.xx   <none>        2379/TCP,2380/TCP                     25m
dayu-milvus-etcd-headless        ClusterIP   None            <none>        2379/TCP,2380/TCP                     25m
dayu-milvus-indexnode            ClusterIP   None            <none>        9091/TCP                              25m
dayu-milvus-minio                ClusterIP   xxx.xxx.xx.xx   <none>        9000/TCP                              25m
dayu-milvus-minio-svc            ClusterIP   None            <none>        9000/TCP                              25m
dayu-milvus-mixcoord             ClusterIP   xxx.xxx.xx.xx   <none>        9091/TCP                              25m
dayu-milvus-pulsarv3-bookie      ClusterIP   None            <none>        3181/TCP,8000/TCP                     25m
dayu-milvus-pulsarv3-broker      ClusterIP   None            <none>        8080/TCP,6650/TCP                     25m
dayu-milvus-pulsarv3-proxy       ClusterIP   xxx.xxx.xx.xx   <none>        80/TCP,6650/TCP                       25m
dayu-milvus-pulsarv3-recovery    ClusterIP   None            <none>        8000/TCP                              25m
dayu-milvus-pulsarv3-zookeeper   ClusterIP   None            <none>        8000/TCP,2888/TCP,3888/TCP,2181/TCP   25m
dayu-milvus-querynode            ClusterIP   None            <none>        9091/TCP                              25m
kubernetes                       ClusterIP   xxx.xxx.xx.xx   <none>        443/TCP                               14h
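To look up the exposed port without reading the table by eye, you can parse the PORT(S) column. The sketch assumes the default `kubectl get svc` columns, where PORT(S) is the fifth column. `node_port_for` is a hypothetical helper.

```shell
# Print the NodePort mapped to service port $1, reading `kubectl get svc`
# lines on stdin. PORT(S) entries look like "19530:30700/TCP"; ClusterIP
# entries such as "9091/TCP" have no NodePort and are skipped.
node_port_for() {
  awk -v p="$1" '{
    n = split($5, maps, ",")
    for (i = 1; i <= n; i++) {
      split(maps[i], pair, "[:/]")
      if (pair[1] == p && pair[3] != "") { print pair[2]; exit }
    }
  }'
}

# Example:
#   kubectl get svc dayu-milvus --no-headers | node_port_for 19530
```

As a non-interactive alternative to `kubectl edit`, a strategic-merge patch should produce the same spec, because Service ports are merged by their `port` field:

`kubectl patch svc dayu-milvus -p '{"spec":{"type":"NodePort","ports":[{"port":19530,"nodePort":30700},{"port":9091,"nodePort":32333}]}}'`

Verify the result with `kubectl get svc dayu-milvus`.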

Backup and migration

docker save -o milvusdb.milvus.v2.5.8.tar milvusdb/milvus:v2.5.8
docker save -o milvusdb.etcd.3.5.18-r1.tar milvusdb/etcd:3.5.18-r1
docker save -o apachepulsar.pulsar.3.0.7.tar apachepulsar/pulsar:3.0.7
docker save -o minio.minio.RELEASE.2023-03-20T20-16-18Z.tar minio/minio:RELEASE.2023-03-20T20-16-18Z

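# Use docker load (not docker import) for archives created by docker save: import treats the tar as a plain filesystem
# and discards the saved image metadata (ENTRYPOINT, ENV, ...), which can later surface as: exec: "milvus": executable file not found in $PATH
docker load -i milvusdb.milvus.v2.5.8.tar
docker load -i milvusdb.etcd.3.5.18-r1.tar
docker load -i apachepulsar.pulsar.3.0.7.tar
docker load -i minio.minio.RELEASE.2023-03-20T20-16-18Z.tar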

# Load all gzip-compressed image archives under images/
cd images/
for image in $(find . -type f -name "*.tar.gz"); do gunzip -c "$image" | docker load; done
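The save/load steps above follow a fixed naming pattern: `repository/name:tag` becomes `repository.name.tag.tar`. That makes them easy to script. The sketch assumes Docker is installed on both machines, and the helper names are hypothetical. Loading uses `docker load`, which restores the image name, tag and config recorded by `docker save`.

```shell
# Map an image reference to the archive name used above, e.g.
# milvusdb/milvus:v2.5.8 -> milvusdb.milvus.v2.5.8.tar
image_tar_name() {
  printf '%s.tar\n' "$1" | tr '/:' '..'
}

# Source machine: save each image given as an argument to its own archive.
save_images() {
  for img in "$@"; do
    docker save -o "$(image_tar_name "$img")" "$img"
  done
}

# Target machine: load every *.tar in the current directory.
load_images() {
  for f in ./*.tar; do
    [ -e "$f" ] || continue   # no matches: the glob stays literal
    docker load -i "$f"
  done
}

# Example:
#   save_images milvusdb/milvus:v2.5.8 milvusdb/etcd:3.5.18-r1 \
#     apachepulsar/pulsar:3.0.7 minio/minio:RELEASE.2023-03-20T20-16-18Z
#   load_images
```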

Run on a machine with internet access:

helm template milvus-dy-v1 milvus/milvus > milvus_manifest.yaml

Run on the new machine:

kubectl apply -f milvus_manifest.yaml

# Clean up and reinstall

kubectl delete -f milvus_manifest.yaml

kubectl delete pod milvus-dy-v1-etcd-1 milvus-dy-v1-etcd-2 --force

kubectl apply -f output.yaml

kubectl describe pod dayu-milvus-datanode-64b974cfcc-d7vbw

kubectl delete pod dayu-milvus-datanode-64b974cfcc-d7vbw --force

kubectl describe pod dayu-milvus-mixcoord-56684769b5-8kcn8

docker pull milvusdb/milvus:v2.5.8

kubectl delete pod dayu-milvus-mixcoord-56684769b5-8kcn8 --force

kubectl get pod | grep Terminating | awk '{printf("kubectl delete pod %s --force\n", $1)}'

kubectl get pod | grep Terminating | awk '{printf("kubectl delete pod %s --force\n", $1)}' | /bin/bash
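The awk one-liner above matches "Terminating" anywhere in the line. A slightly stricter variant checks only the STATUS column, the third column in default `kubectl get pod` output. It also passes `--grace-period=0` alongside `--force`, which is the combination the Kubernetes documentation uses for force deletion. The function names are hypothetical.

```shell
# Print pod names whose STATUS column (3rd in `kubectl get pod`) is exactly
# "Terminating"; pod names that merely contain the word are not matched.
terminating_pods() {
  awk 'NR > 1 && $3 == "Terminating" { print $1 }'
}

# Force-delete those pods in the current namespace.
force_delete_terminating() {
  kubectl get pod | terminating_pods | while read -r pod; do
    kubectl delete pod "$pod" --grace-period=0 --force
  done
}
```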

kubectl describe pod dayu-milvus-datanode-64b974cfcc-mbrwq

docker load -i milvusdb.etcd.3.5.18-r1.tar

docker load -i milvusdb.milvus.v2.5.8.tar

docker load -i minio.minio.RELEASE.2023-03-20T20-16-18Z.tar

Problems

ERROR CRI

I0407 10:40:02.594028 19076 checks.go:245] validating the existence and emptiness of directory /var/lib/etcd

[preflight] Some fatal errors occurred:

[ERROR CRI]: container runtime is not running: output: time="2025-04-07T10:40:02+08:00" level=fatal msg="connect: connect endpoint 'unix:///run/cri-dockerd.sock', make sure you are running as root and the endpoint has been started: context deadline exceeded"

, error: exit status 1

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

error execution phase preflight

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run.func1

cmd/kubeadm/app/cmd/phases/workflow/runner.go:235

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).visitAll

cmd/kubeadm/app/cmd/phases/workflow/runner.go:421

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run

cmd/kubeadm/app/cmd/phases/workflow/runner.go:207

k8s.io/kubernetes/cmd/kubeadm/app/cmd.newCmdInit.func1

cmd/kubeadm/app/cmd/init.go:153

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).execute

vendor/github.com/spf13/cobra/command.go:856

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).ExecuteC

vendor/github.com/spf13/cobra/command.go:974

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).Execute

vendor/github.com/spf13/cobra/command.go:902

k8s.io/kubernetes/cmd/kubeadm/app.Run

cmd/kubeadm/app/kubeadm.go:50

main.main

cmd/kubeadm/kubeadm.go:25

runtime.main

/usr/local/go/src/runtime/proc.go:250

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1571

[2025-04-07 10:40:02,596725755] [./join_cluster.sh:49] [ERROR]: k8s init fail

Check the CRI status:

systemctl status cri-docker

Errors found:

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@kwephis29904577 data]# systemctl status cri-docker

● cri-docker.service - CRI Interface for Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/cri-docker.service; enabled; vendor preset: disabled)

Active: failed (Result: exit-code) since Mon 2025-04-07 10:39:05 CST; 11min ago

Docs: https://docs.mirantis.com

Main PID: 18677 (code=exited, status=1/FAILURE)

Apr 07 10:39:11 kwephis29904577 systemd[1]: Failed to start CRI Interface for Docker Application Container Engine.

Apr 07 10:39:23 kwephis29904577 systemd[1]: cri-docker.service: Start request repeated too quickly.

Apr 07 10:39:23 kwephis29904577 systemd[1]: cri-docker.service: Failed with result 'exit-code'.

Apr 07 10:39:23 kwephis29904577 systemd[1]: Failed to start CRI Interface for Docker Application Container Engine.

Apr 07 10:39:35 kwephis29904577 systemd[1]: cri-docker.service: Start request repeated too quickly.

Apr 07 10:39:35 kwephis29904577 systemd[1]: cri-docker.service: Failed with result 'exit-code'.

Apr 07 10:39:35 kwephis29904577 systemd[1]: Failed to start CRI Interface for Docker Application Container Engine.

Apr 07 10:39:47 kwephis29904577 systemd[1]: cri-docker.service: Start request repeated too quickly.

Apr 07 10:39:47 kwephis29904577 systemd[1]: cri-docker.service: Failed with result 'exit-code'.

Apr 07 10:39:47 kwephis29904577 systemd[1]: Failed to start CRI Interface for Docker Application Container Engine.

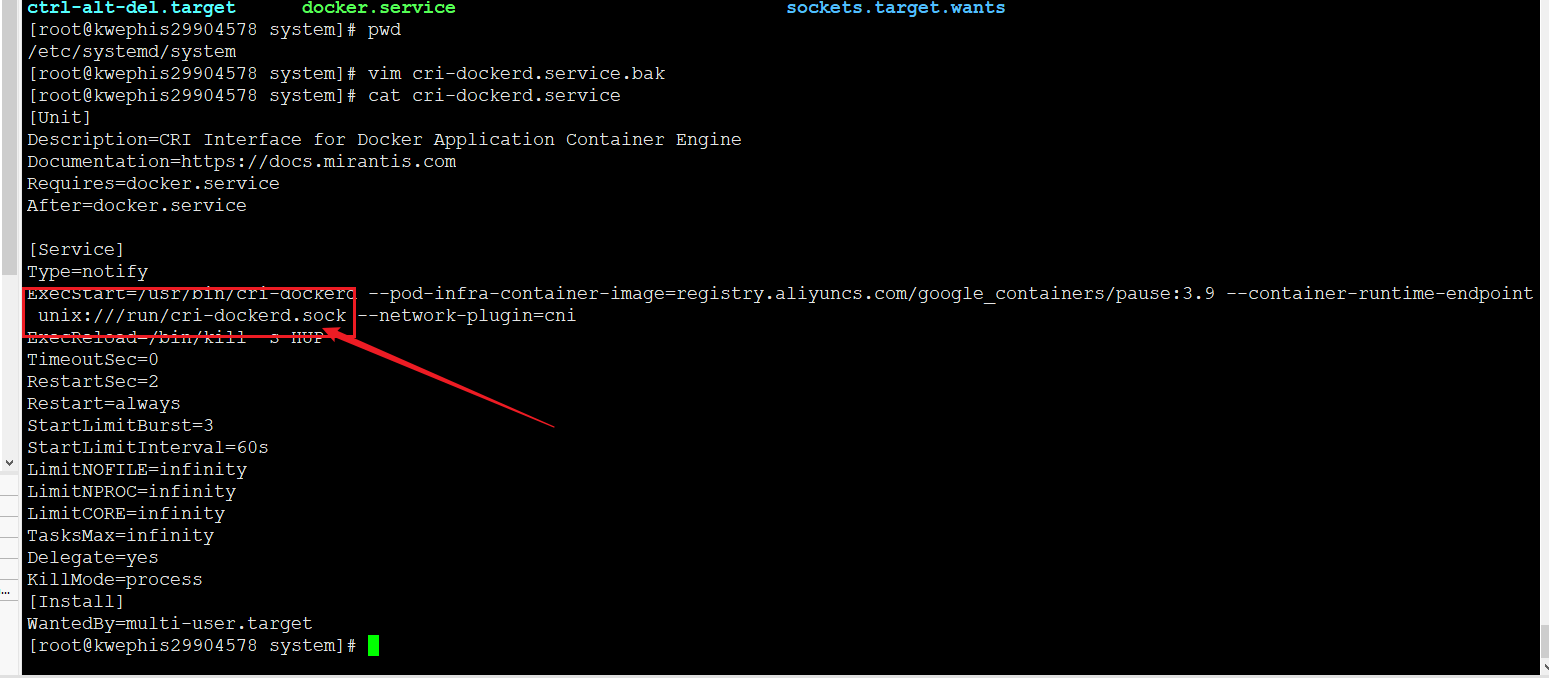

# Edit cri-docker.service so that ExecStart passes --container-runtime-endpoint unix:///run/cri-dockerd.sock

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint unix:///run/cri-dockerd.sock --network-plugin=cni --cni-bin-dir=/opt/cni/bin --cni-cache-dir=/var/lib/cni --cni-conf-dir=/etc/cni/net.d

ExecReload=/bin/kill -s HUP $MAINPID
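Before restarting the service, it can help to confirm that the unit file really passes the endpoint kubeadm is trying to reach. In the error above that endpoint is unix:///run/cri-dockerd.sock. `has_cri_endpoint` is a hypothetical helper. It accepts both the `--flag value` and `--flag=value` spellings.

```shell
# Succeed if the unit file's ExecStart passes --container-runtime-endpoint
# with the given socket (default: the one from the kubeadm error above).
has_cri_endpoint() {
  local unit_file=$1
  local sock=${2:-unix:///run/cri-dockerd.sock}
  local line
  line=$(grep '^ExecStart=' "$unit_file") || return 1
  case "$line" in
    *"--container-runtime-endpoint $sock"* | *"--container-runtime-endpoint=$sock"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example:
#   has_cri_endpoint /usr/lib/systemd/system/cri-docker.service && echo "endpoint set"
```

systemd only picks up changes to a unit file after `systemctl daemon-reload`. After that, run `systemctl restart cri-docker` and check `systemctl status cri-docker` again.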

[preflight] Some fatal errors occurred ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables

# Error message

[preflight] Some fatal errors occurred:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

error execution phase preflight

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run.func1

cmd/kubeadm/app/cmd/phases/workflow/runner.go:235

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).visitAll

cmd/kubeadm/app/cmd/phases/workflow/runner.go:421

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run

cmd/kubeadm/app/cmd/phases/workflow/runner.go:207

k8s.io/kubernetes/cmd/kubeadm/app/cmd.newCmdInit.func1

cmd/kubeadm/app/cmd/init.go:153

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).execute

vendor/github.com/spf13/cobra/command.go:856

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).ExecuteC

vendor/github.com/spf13/cobra/command.go:974

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).Execute

vendor/github.com/spf13/cobra/command.go:902

k8s.io/kubernetes/cmd/kubeadm/app.Run

cmd/kubeadm/app/kubeadm.go:50

main.main

cmd/kubeadm/kubeadm.go:25

runtime.main

/usr/local/go/src/runtime/proc.go:250

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1571

# Fix

modprobe br_netfilter

echo "br_netfilter" | sudo tee /etc/modules-load.d/br_netfilter.conf

vim /etc/sysctl.conf

# Add the following settings

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

# Apply the settings:

sysctl -p

# Check that the file exists

ls /proc/sys/net/bridge/bridge-nf-call-iptables

# Check that the value is 1

cat /proc/sys/net/bridge/bridge-nf-call-iptables
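Editing /etc/sysctl.conf by hand in vim can leave duplicate or stale entries when the steps are repeated on several nodes. Below is a minimal idempotent sketch: it updates the key if present and appends it otherwise. `ensure_sysctl` is a hypothetical helper, and it keeps a `.bak` copy of the file whenever it rewrites a line.

```shell
# Set "key = value" in a sysctl-style file: rewrite the line if the key is
# already there, otherwise append it. Running it twice changes nothing.
ensure_sysctl() {
  local file=$1 key=$2 value=$3
  touch "$file"
  if grep -qE "^[[:space:]]*${key}[[:space:]]*=" "$file"; then
    sed -i.bak -E "s|^[[:space:]]*${key}[[:space:]]*=.*\$|${key} = ${value}|" "$file"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# Example, matching the manual steps above (run as root):
#   modprobe br_netfilter
#   echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
#   ensure_sysctl /etc/sysctl.conf net.bridge.bridge-nf-call-iptables 1
#   ensure_sysctl /etc/sysctl.conf net.bridge.bridge-nf-call-ip6tables 1
#   sysctl -p
```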

After the Helm migration, the deployment does not work

NAME                                      READY   STATUS                   RESTARTS      AGE
milvus-dy-v1-datanode-bbbdf468-7jrx8      0/1     CrashLoopBackOff         2 (26s ago)   46s
milvus-dy-v1-etcd-0                       0/1     CreateContainerError     0             46s
milvus-dy-v1-etcd-1                       0/1     CreateContainerError     0             46s
milvus-dy-v1-etcd-2                       0/1     Pending                  0             46s
milvus-dy-v1-indexnode-7ddfd67849-9m5pt   0/1     CrashLoopBackOff         1 (42s ago)   46s
milvus-dy-v1-minio-0                      0/1     CrashLoopBackOff         1 (23s ago)   46s
milvus-dy-v1-minio-1                      0/1     CrashLoopBackOff         1 (21s ago)   46s
milvus-dy-v1-minio-2                      0/1     CrashLoopBackOff         1 (17s ago)   46s
milvus-dy-v1-minio-3                      0/1     Pending                  0             46s
milvus-dy-v1-mixcoord-68498dd47d-jgbb2    0/1     CrashLoopBackOff         1 (43s ago)   46s
milvus-dy-v1-proxy-6fd5bf96f6-qxf2q       0/1     CrashLoopBackOff         1 (42s ago)   46s
milvus-dy-v1-pulsarv3-bookie-0            0/1     Pending                  0             46s
milvus-dy-v1-pulsarv3-bookie-1            0/1     Pending                  0             45s
milvus-dy-v1-pulsarv3-bookie-2            0/1     Pending                  0             45s
milvus-dy-v1-pulsarv3-bookie-init-gb7gm   0/1     Init:CrashLoopBackOff    1 (43s ago)   46s
milvus-dy-v1-pulsarv3-broker-0            0/1     Init:RunContainerError   2 (27s ago)   46s
milvus-dy-v1-pulsarv3-broker-1            0/1     Init:RunContainerError   2 (26s ago)   46s
milvus-dy-v1-pulsarv3-proxy-0             0/1     Init:CrashLoopBackOff    1 (40s ago)   46s
milvus-dy-v1-pulsarv3-proxy-1             0/1     Init:RunContainerError   2 (24s ago)   45s
milvus-dy-v1-pulsarv3-pulsar-init-dlb9h   0/1     Init:CrashLoopBackOff    2 (30s ago)   46s
milvus-dy-v1-pulsarv3-recovery-0          0/1     Init:CrashLoopBackOff    1 (44s ago)   46s
milvus-dy-v1-pulsarv3-zookeeper-0         0/1     Pending                  0             45s
milvus-dy-v1-pulsarv3-zookeeper-1         0/1     Pending                  0             45s
milvus-dy-v1-pulsarv3-zookeeper-2         0/1     Pending                  0             45s
milvus-dy-v1-querynode-7c8684d6f5-dcpjw   0/1     CrashLoopBackOff         2 (23s ago)   46s
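With this many failing pods, it can help to group them by status first and then `kubectl describe` one pod per group. The sketch below counts the STATUS column of `kubectl get pods` output. `pod_status_summary` is a hypothetical name.

```shell
# Count pods per STATUS (3rd column of `kubectl get pods`), most frequent
# first, ties sorted by status name.
pod_status_summary() {
  awk 'NR > 1 { count[$3]++ } END { for (s in count) print count[s], s }' |
    sort -k1,1nr -k2,2
}

# Example:
#   kubectl get pods | pod_status_summary
```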

### Check service status

kubectl get pods

kubectl describe pod milvus-dy-v1-datanode-bbbdf468-p6dvf # view the pod's error messages

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 116s default-scheduler Successfully assigned default/milvus-dy-v1-datanode-bbbdf468-7jrx8 to kwephis29904578

Normal Pulled 29s (x5 over 114s) kubelet Container image "milvusdb/milvus:v2.5.8" already present on machine

Normal Created 29s (x5 over 114s) kubelet Created container datanode

Warning Failed 29s (x5 over 114s) kubelet Error: failed to start container "datanode": Error response from daemon: OCI runtime create failed: container_linux.go:380: starting container process caused: exec: "milvus": executable file not found in $PATH: unknown

Warning BackOff 26s (x10 over 111s) kubelet Back-off restarting failed container

While installing k8s, installing the calico-plugin failed with: The connection to the server localhost:8080 was refused - did you specify the right host or port?

kubeadm reset

Leftover files must be removed first; otherwise the reinstall fails:

rm -rf /etc/cni/net.d
rm -rf $HOME/.kube

After that, re-initialize k8s.

admin.conf: no such file or directory

W0402 16:26:23.695380 586238 initconfiguration.go:332] [config] WARNING: Ignored YAML document with GroupVersionKind kubeadm.k8s.io/v1beta3, Kind=KubeletConfiguration error execution phase upload-certs: failed to load admin kubeconfig: open /etc/kubernetes/admin.conf: no such file or directory

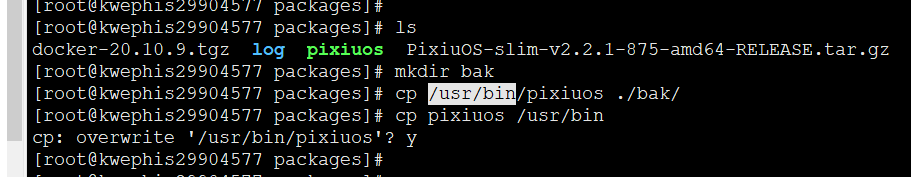

Modify appctl to add support for bclinux:

vim ./plugin/init_environment/cri-docker-plugin/appctl

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
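You can wrap the three kubeconfig commands above in one helper, for example when re-running them after each `kubeadm reset`/`kubeadm init`. `setup_kubeconfig` is hypothetical. Unlike `cp -i`, it overwrites an existing config without prompting, and it additionally restricts the file to its owner with chmod 600.

```shell
# Copy an admin kubeconfig to <home>/.kube/config for the current user.
# Defaults match the kubeadm paths used above.
setup_kubeconfig() {
  local src=${1:-/etc/kubernetes/admin.conf}
  local home=${2:-$HOME}
  mkdir -p "$home/.kube"
  cp "$src" "$home/.kube/config"            # overwrites without prompting
  chown "$(id -u):$(id -g)" "$home/.kube/config"
  chmod 600 "$home/.kube/config"            # readable by the owner only
}
```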

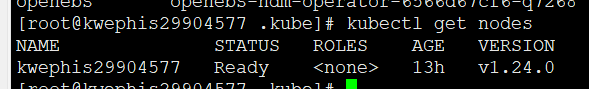

The node is not a master node

[root@kwephis29904577 .kube]# kubectl get nodes
NAME              STATUS   ROLES   AGE   VERSION
kwephis29904577   Ready

kubectl label nodes kwephis29904577 node-role.kubernetes.io/master=
kubectl get nodes

The pods have not started

kubectl get pod -A

kubectl describe node kwephis29904577

kubectl describe pod calico-node-txpjp -n kube-system

cd /data/pixiuos_deploy/kube/plugin/deploy_after_master_ready/calico-plugin

kubectl apply -f calico_deploy.yaml

kubectl get pods -A

echo "kwephis29904577" | sudo tee /var/lib/calico/nodename
sudo chmod 600 /var/lib/calico/nodename

cp /etc/kubernetes/admin.conf /etc/kubernetes/kubeconfig

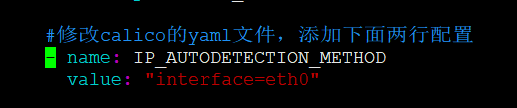

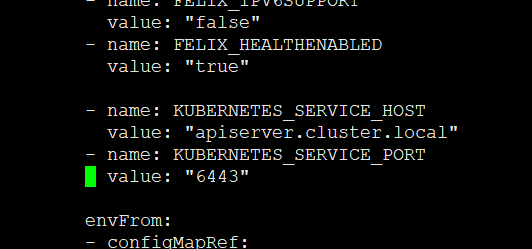

Note: indent with spaces, not tabs:

- name: KUBERNETES_SERVICE_HOST
  value: "apiserver.cluster.local"
- name: KUBERNETES_SERVICE_PORT
  value: "6443"

kubectl delete pod -n kube-system kube-apiserver-calico-node-57tsn

kubectl logs calico-node-txpjp -n kube-system -c calico-node

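View the calico-node container logs (specify the container name):

kubectl logs calico-node-m9w5h -n kube-system -c calico-node
kubectl logs calico-node-txpjp -n kube-system -c bird
kubectl logs calico-node-txpjp -n kube-system -c confd
kubectl logs calico-node-txpjp -n kube-system -c felix

In the standard Calico manifests, bird, confd and felix run as processes inside the calico-node container rather than as separate containers. The last three commands may therefore fail with a "container ... is not valid" error. In that case, their output should already appear in the calico-node container log.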

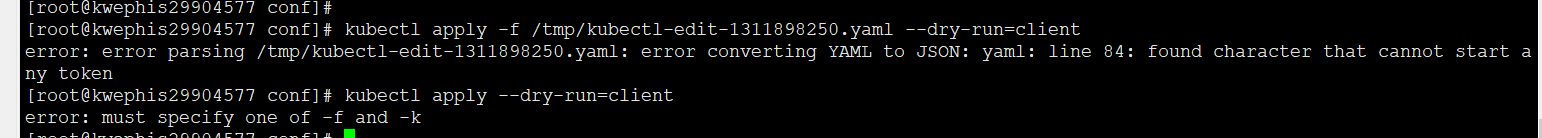

Editing the Calico configuration fails

kubectl edit daemonset calico-node -n kube-system

error: daemonsets.apps "calico-node" is invalid
A copy of your changes has been stored to "/tmp/kubectl-edit-1311898250.yaml"
error: Edit cancelled, no valid changes were saved.

kubectl apply --dry-run=client

Calico connection error

retry error=Get "https://apiserver.cluster.local:6443/api/v1/nodes/foo": x509: certificate is valid for kubernetes, kubernetes.default, kubernetes.default.svc, kubernetes.default.svc.cluster.local, kwephis29904577, not apiserver.cluster.local

kubectl logs calico-node-klkct -n kube-system -c calico-node

Change it to the address used in the kubeadm configuration:

kwephis29904577
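The x509 error means the host name Calico connects to (apiserver.cluster.local) is not among the API server certificate's Subject Alternative Names. That is why switching to kwephis29904577 helps. The sketch below checks a candidate name against the certificate. It assumes the default kubeadm path /etc/kubernetes/pki/apiserver.crt, and both function names are hypothetical.

```shell
# Succeed if DNS:<host> is one of the comma-separated SAN entries.
san_has_dns() {
  local san_line=$1 host=$2
  printf '%s\n' "$san_line" | tr ',' '\n' |
    sed 's/^[[:space:]]*//; s/[[:space:]]*$//' |
    grep -qxF "DNS:$host"
}

# Print the SAN line of a certificate (default: kubeadm's API server cert).
apiserver_sans() {
  openssl x509 -in "${1:-/etc/kubernetes/pki/apiserver.crt}" -noout -text |
    grep -A1 'Subject Alternative Name' | tail -n 1
}

# Example:
#   san_has_dns "$(apiserver_sans)" apiserver.cluster.local || echo "not in cert"
```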

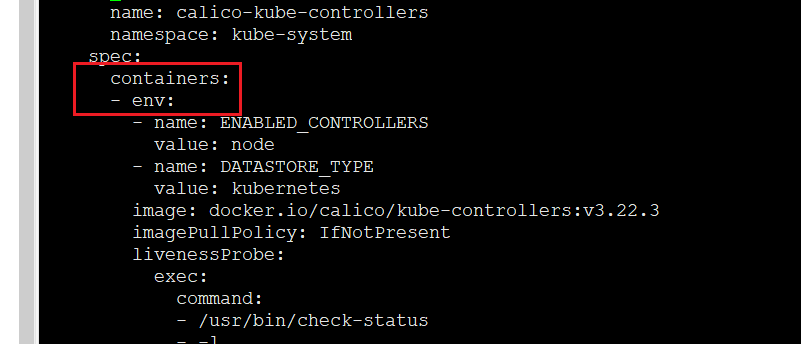

calico-kube-controllers-55ff5bfff4-47xtv is in an abnormal state

kubectl logs calico-kube-controllers-6d6876d874-2kfr6 -n kube-system -c calico-kube-controllers

kubectl delete pod -n kube-system calico-kube-controllers-55ff5bfff4-47xtv
systemctl restart kubelet

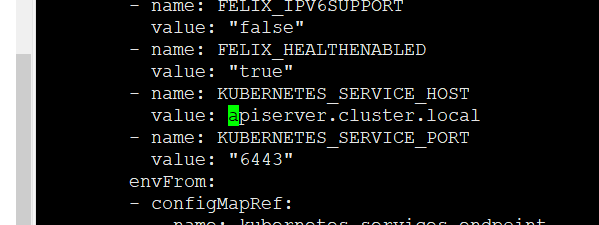

kubectl edit deployment calico-kube-controllers -n kube-system

Note: indent with spaces, not tabs:

- name: KUBERNETES_SERVICE_HOST
  value: kwephis29904577
- name: KUBERNETES_SERVICE_PORT
  value: "6443"

浙公网安备 33010602011771号
