Building a k8s cluster with kubeasz

Environment: CentOS 7

Docker v19.03.15

Harbor v2.3.2

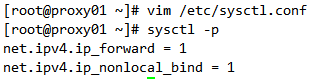

keepalived installation and configuration

1. Install keepalived and haproxy on haproxy1 and haproxy2

#yum install keepalived haproxy -y

2. Copy keepalived's VRRP (VIP) sample configuration file

#find / -name "keepalived.conf*"

#cp /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

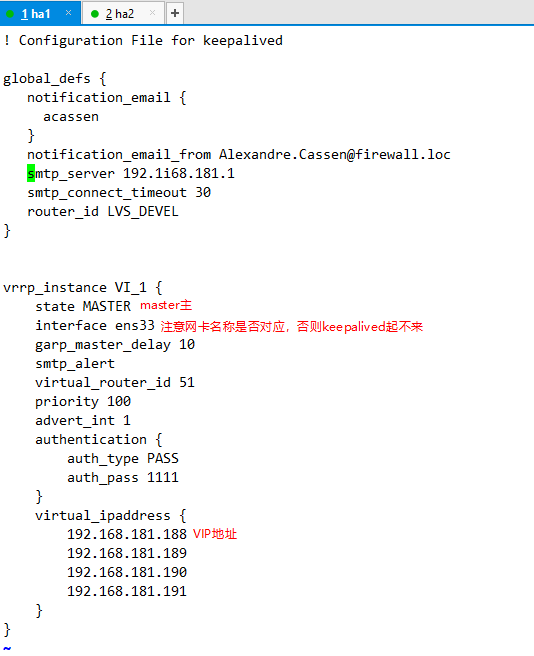

3. Edit the VIP configuration file

#vim /etc/keepalived/keepalived.conf
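A minimal sketch of what the edited file can look like, written as a shell function that prints it so the same template serves both nodes. The interface name eth0, virtual_router_id 51 and the password 1111 are assumptions; the VIP 192.168.181.188 is taken from EX_APISERVER_VIP in the kubeasz hosts file later in this article. The sample file's vrrp_strict line is left out, as many guides do, because with it keepalived may add firewall rules that drop traffic to the VIP.

```shell
# Hypothetical helper: prints a keepalived.conf with a single VRRP instance.
# eth0, virtual_router_id 51 and auth_pass 1111 are assumed values.
render_keepalived_conf() {
  state=$1              # MASTER on haproxy1, BACKUP on haproxy2
  priority=$2           # the MASTER needs the higher priority
  iface=${3:-eth0}
  vip=${4:-192.168.181.188}
  cat <<EOF
vrrp_instance VI_1 {
    state $state
    interface $iface
    virtual_router_id 51
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $vip dev $iface label $iface:1
    }
}
EOF
}

# Usage on haproxy1 (as root):
#   render_keepalived_conf MASTER 100 > /etc/keepalived/keepalived.conf
```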

4. Start keepalived

#systemctl restart keepalived.service

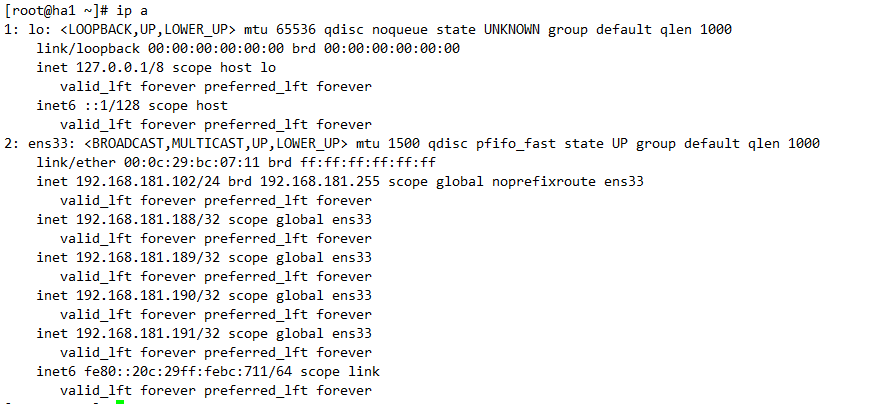

The virtual IP address is now visible:

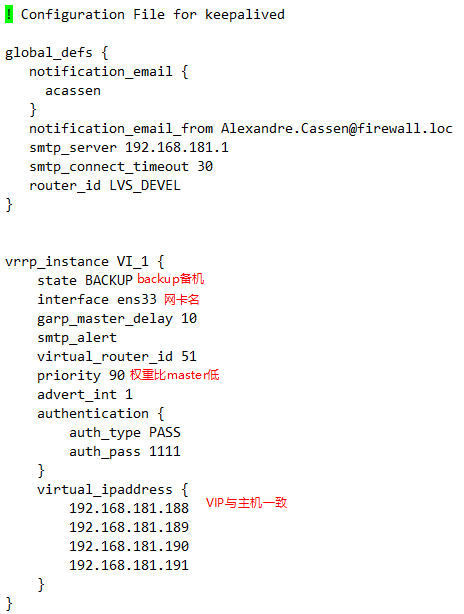

5. Copy the configuration file to the backup node h2 (haproxy2) and edit it there

#scp /etc/keepalived/keepalived.conf 192.168.181.103:/etc/keepalived/keepalived.conf

#vim /etc/keepalived/keepalived.conf
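On the backup node the edit usually comes down to two lines: the VRRP state and a lower priority. A sketch as a stdin-to-stdout filter, assuming the copied file contains "state MASTER" and "priority 100"; the backup priority 80 is an arbitrary lower value.

```shell
# Hypothetical helper: turn the MASTER config copied from haproxy1 into a BACKUP config.
to_backup() {
  sed -e 's/state MASTER/state BACKUP/' \
      -e 's/priority 100$/priority 80/'
}

# Usage on haproxy2 (as root):
#   to_backup < /etc/keepalived/keepalived.conf > /tmp/keepalived.conf \
#     && mv /tmp/keepalived.conf /etc/keepalived/keepalived.conf
```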

6. Start keepalived on haproxy2

#systemctl start keepalived.service

7. Verify failover

Stop the keepalived service on haproxy1; the VIP should then move to haproxy2:

#systemctl stop keepalived.service

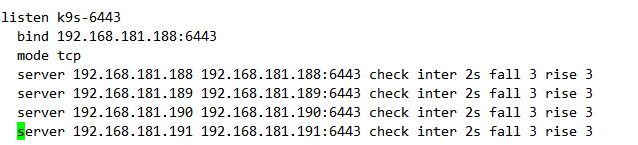
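Checking which node holds the VIP can be scripted. This sketch reads `ip -4 addr` output on stdin and assumes the VIP is 192.168.181.188 (the EX_APISERVER_VIP used in the kubeasz hosts file); matching "inet <VIP>/" keeps 192.168.181.18 from being mistaken for 192.168.181.188.

```shell
# Hypothetical helper: succeeds if the given VIP appears in "ip -4 addr" output on stdin.
vip_present() {
  grep -qF "inet $1/"
}

# Usage on haproxy2 after stopping keepalived on haproxy1:
#   ip -4 addr show | vip_present 192.168.181.188 && echo "VIP is on this node"
```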

8. Edit the haproxy configuration and append a load-balancing section at the end of the file

# vim /etc/haproxy/haproxy.cfg
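A sketch of the section to append, generated by a function so both nodes get identical text. It forwards VIP port 6443 to the kube-apiservers on the masters 192.168.181.110 and 192.168.181.111 from the kubeasz hosts file; the section name and the health-check timings are assumptions. TCP mode is used because the apiserver terminates TLS itself.

```shell
# Hypothetical helper: print a haproxy "listen" section for the k8s API.
# Arguments: VIP, port, then one or more apiserver IPs.
render_haproxy_listen() {
  vip=$1; port=$2; shift 2
  printf 'listen k8s-api-%s\n' "$port"
  printf '    bind %s:%s\n' "$vip" "$port"
  printf '    mode tcp\n'
  for ip in "$@"; do
    # check every 3s; 3 failed checks mark a server down, 5 good ones bring it back
    printf '    server %s %s:%s check inter 3s fall 3 rise 5\n' "$ip" "$ip" "$port"
  done
}

# Usage on haproxy1 (as root):
#   render_haproxy_listen 192.168.181.188 6443 192.168.181.110 192.168.181.111 \
#     >> /etc/haproxy/haproxy.cfg
```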

The other haproxy node needs the same configuration, so copy the file over directly:

#scp /etc/haproxy/haproxy.cfg 192.168.181.107:/etc/haproxy/

Enable kernel IP forwarding on both haproxy nodes.
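Enabling forwarding means setting net.ipv4.ip_forward = 1 persistently. Many keepalived plus haproxy guides also set net.ipv4.ip_nonlocal_bind = 1, so that haproxy on the node that does not currently hold the VIP can still bind to it; that second key is our assumption, not something stated above. A sketch of an idempotent helper:

```shell
# Hypothetical helper: set "KEY = VALUE" in a sysctl file, replacing an existing
# line for KEY or appending one, so running it twice changes nothing.
ensure_sysctl() {
  key=$1; value=$2; file=$3
  if grep -q "^[[:space:]]*$key[[:space:]]*=" "$file" 2>/dev/null; then
    sed -i "s|^[[:space:]]*$key[[:space:]]*=.*|$key = $value|" "$file"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage on both haproxy nodes (as root):
#   ensure_sysctl net.ipv4.ip_forward 1 /etc/sysctl.conf
#   ensure_sysctl net.ipv4.ip_nonlocal_bind 1 /etc/sysctl.conf   # assumption, see above
#   sysctl -p
```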

Finally, restart keepalived and haproxy on both haproxy nodes.

If haproxy2 can ping the virtual IP and the VIP shows up in the output of ip a, the configuration is complete.

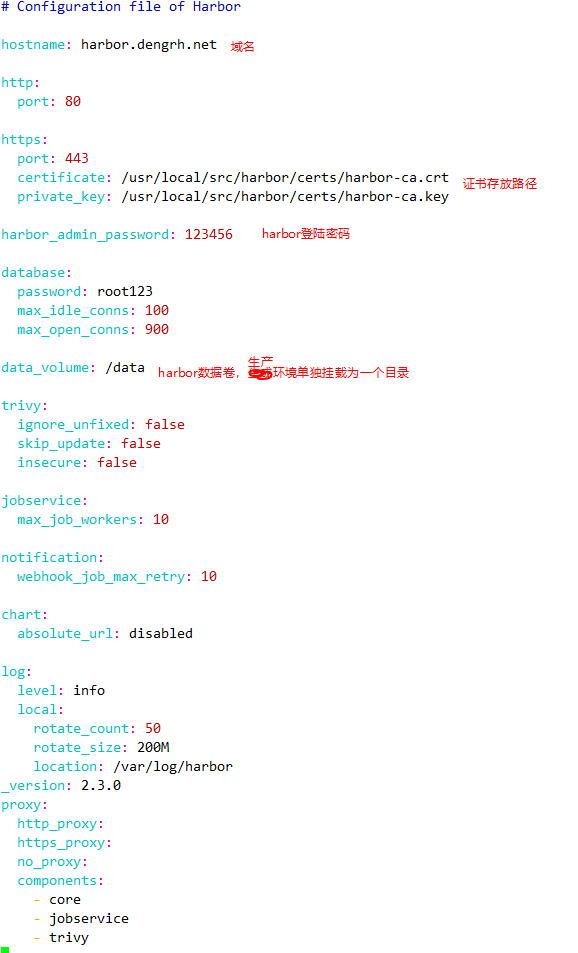

Harbor registry: HTTPS with a private certificate

1. Generate a private key

#mkdir -p /usr/local/src/harbor/certs

#cd /usr/local/src/harbor/certs

#openssl genrsa -out ./harbor-ca.key

2. Issue a self-signed certificate

#openssl req -x509 -new -nodes -key ./harbor-ca.key -subj "/CN=harbor.dengrh.net" -days 3650 -out ./harbor-ca.crt
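The two openssl steps can be wrapped in a function and followed by a quick sanity check. In this sketch the explicit 2048-bit key size is our choice (so the result does not depend on the OpenSSL default), and the 30-day validity margin in check_cert is arbitrary.

```shell
# Hypothetical helper: create harbor-ca.key and a self-signed harbor-ca.crt in DIR for CN.
make_harbor_ca() {
  dir=$1; cn=$2
  mkdir -p "$dir"
  openssl genrsa -out "$dir/harbor-ca.key" 2048 2>/dev/null
  openssl req -x509 -new -nodes -key "$dir/harbor-ca.key" \
    -subj "/CN=$cn" -days 3650 -out "$dir/harbor-ca.crt"
}

# Succeeds only if the certificate names $2 and stays valid for at least 30 more days.
check_cert() {
  openssl x509 -in "$1" -noout -subject | grep -qF "$2" &&
    openssl x509 -in "$1" -noout -checkend $((30 * 24 * 3600)) >/dev/null
}

# Usage:
#   make_harbor_ca /usr/local/src/harbor/certs harbor.dengrh.net
#   check_cert /usr/local/src/harbor/certs/harbor-ca.crt harbor.dengrh.net && echo OK
```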

3. Edit harbor.yml

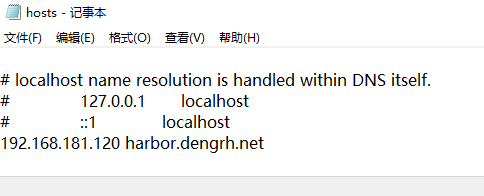
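The relevant harbor.yml fields are the hostname and the certificate and key paths under https. A sketch that fills them in from the template, assuming the Harbor 2.3 harbor.yml.tmpl layout (a top-level "hostname:" and "certificate:" / "private_key:" keys in the https block); check your own file before relying on it.

```shell
# Hypothetical helper: rewrite hostname and HTTPS cert/key paths; reads harbor.yml on stdin.
set_harbor_https() {
  host=$1; certdir=$2
  sed -e "s|^hostname:.*|hostname: $host|" \
      -e "s|^\([[:space:]]*certificate:\).*|\1 $certdir/harbor-ca.crt|" \
      -e "s|^\([[:space:]]*private_key:\).*|\1 $certdir/harbor-ca.key|"
}

# Usage in the unpacked Harbor directory:
#   set_harbor_https harbor.dengrh.net /usr/local/src/harbor/certs \
#     < harbor.yml.tmpl > harbor.yml
```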

4. Install Harbor and add harbor.dengrh.net to the local hosts file; Harbor is then reachable by that name.
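The hosts-file entry is easy to add twice by accident. A sketch of an idempotent helper; HARBOR_IP in the usage comment is a placeholder for the Harbor server's address, which the article does not give.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
ensure_hosts_entry() {
  ip=$1; name=$2; file=${3:-/etc/hosts}
  # -w and -F: match the whole hostname literally
  grep -qwF -- "$name" "$file" 2>/dev/null || printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on every machine that should reach Harbor (HARBOR_IP is a placeholder):
#   ensure_hosts_entry HARBOR_IP harbor.dengrh.net
# and, in the unpacked Harbor offline-installer directory:
#   ./install.sh
```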

Ansible installation and configuration

On master1:

1. Install Ansible

#yum install -y ansible

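2. Set up passwordless (key-based) SSH

Generate a key pair with ssh-keygen (just press Enter at every prompt).

Then copy the public key with ssh-copy-id to the IP of every host that needs passwordless login.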
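Both steps can be scripted. The IP list below is collected from the kubeasz hosts file used in this article and should be adjusted; SSH_COPY_ID is a hypothetical override hook that only exists to make the loop testable.

```shell
# Hosts from this article's inventory (load balancers, masters, etcd, nodes).
NODES="192.168.181.102 192.168.181.103 192.168.181.110 192.168.181.111
192.168.181.130 192.168.181.131 192.168.181.132 192.168.181.140 192.168.181.141"

# Same as running ssh-keygen and pressing Enter at every prompt (no passphrase).
ensure_key() {
  mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
  [ -f "$HOME/.ssh/id_rsa" ] || ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
}

# Copy the public key to root@IP for each argument (asks for each password once).
push_keys() {
  for ip in "$@"; do
    ${SSH_COPY_ID:-ssh-copy-id} "root@$ip" || echo "failed: $ip" >&2
  done
}

# Usage on master1:
#   ensure_key && push_keys $NODES
```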

3. Set the release environment variable

#export release=3.1.1

Download ezdown

#wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

#vim ezdown
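The version edit can also be done non-interactively. A sketch assuming ezdown defines its versions as top-level NAME=value lines (kubeasz 3.1.x has, for example, DOCKER_VER and K8S_BIN_VER); verify the names in your copy first.

```shell
# Hypothetical helper: replace the value of NAME=... lines; reads the script on stdin.
set_var() {
  name=$1; value=$2
  sed "s|^$name=.*|$name=$value|"
}

# Usage, matching the Docker version used in this article:
#   set_var DOCKER_VER 19.03.15 < ezdown > ezdown.new && mv ezdown.new ezdown
```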

4. After setting the versions you need:

#chmod a+x ezdown

#./ezdown -D

5. Generate the configuration files for a new k8s cluster (run ezctl from /etc/kubeasz, where ezdown -D places it)

#./ezctl new k8s-cluster1

6. Configuration before deploying k8s

#cd /etc/kubeasz/clusters/k8s-cluster1

#vim hosts

    # 'etcd' cluster should have odd member(s) (1,3,5,...)
    [etcd]
    192.168.181.130
    192.168.181.131
    192.168.181.132

    # master node(s)
    [kube_master]
    192.168.181.110
    192.168.181.111

    # work node(s)
    [kube_node]
    192.168.181.140
    192.168.181.141

    # [optional] harbor server, a private docker registry
    # 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one
    [harbor]
    #192.168.1.8 NEW_INSTALL=false

    # [optional] loadbalance for accessing k8s from outside
    # haproxy settings, port 6443
    [ex_lb]
    192.168.181.102 LB_ROLE=master EX_APISERVER_VIP=192.168.181.188 EX_APISERVER_PORT=6443
    192.168.181.103 LB_ROLE=backup EX_APISERVER_VIP=192.168.181.188 EX_APISERVER_PORT=6443

    # [optional] ntp server for the cluster
    [chrony]
    #192.168.1.1

    [all:vars]
    # --------- Main Variables ---------------
    # Secure port for apiservers
    SECURE_PORT="6443"

    # Cluster container-runtime supported: docker, containerd
    CONTAINER_RUNTIME="docker"

    # Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
    CLUSTER_NETWORK="calico"

    # Service proxy mode of kube-proxy: 'iptables' or 'ipvs'
    PROXY_MODE="ipvs"

    # Service address range
    # K8S Service CIDR, not overlap with node(host) networking
    SERVICE_CIDR="10.100.0.0/16"

    # Container (Pod) address range
    # Cluster CIDR (Pod CIDR), not overlap with node(host) networking
    CLUSTER_CIDR="10.200.0.0/16"

    # Exposed port range
    # NodePort Range
    NODE_PORT_RANGE="30000-40000"

    # DNS domain suffix
    # Cluster DNS Domain
    CLUSTER_DNS_DOMAIN="cluster.local"

    # -------- Additional Variables (don't change the default value right now) ---
    # Binaries Directory
    # Where the executables are installed
    bin_dir="/usr/bin"

    # Installer directory
    # Deploy Directory (kubeasz workspace)
    base_dir="/etc/kubeasz"

    # Cluster directory
    # Directory for a specific cluster
    cluster_dir="{{ base_dir }}/clusters/k8s-cluster1"

    # Certificate directory
    # CA and other components cert/key Directory
    ca_dir="/etc/kubernetes/ssl"
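The hosts file requires that SERVICE_CIDR and CLUSTER_CIDR not overlap with the node network. A sketch that checks two CIDR blocks for overlap using integer arithmetic; the host network 192.168.181.0/24 in the usage comment is an assumption based on the node IPs in this article.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip2int() {
  set -- $(echo "$1" | tr . ' ')
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "first last" (as integers) for an a.b.c.d/len block.
cidr_bounds() {
  len=${1#*/}
  size=$(( 1 << (32 - len) ))
  first=$(( $(ip2int "${1%/*}") / size * size ))
  echo "$first $(( first + size - 1 ))"
}

# Succeed if the two CIDR blocks share at least one address.
cidr_overlap() {
  set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
  [ $(( $1 <= $4 && $3 <= $2 )) -eq 1 ]
}

# Usage:
#   for pair in "10.100.0.0/16 10.200.0.0/16" "10.100.0.0/16 192.168.181.0/24" \
#               "10.200.0.0/16 192.168.181.0/24"; do
#     cidr_overlap $pair && echo "overlap: $pair"
#   done
```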

7. In the cluster's config.yml, set the add-on components you will install yourself to "no":

#/etc/kubeasz/clusters/k8s-cluster1/config.yml
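Which add-ons the author disabled is not preserved in the text. As a sketch, assuming kubeasz 3.1.x key names such as dns_install, metricsserver_install and dashboard_install (check your config.yml), the switch can be flipped with a filter:

```shell
# Hypothetical helper: change KEY: "yes" to KEY: "no" for each key given; reads config.yml on stdin.
disable_addons() {
  text=$(cat)
  for key in "$@"; do
    text=$(printf '%s\n' "$text" | sed "s|^$key:[[:space:]]*\"yes\"|$key: \"no\"|")
  done
  printf '%s\n' "$text"
}

# Usage in /etc/kubeasz/clusters/k8s-cluster1:
#   disable_addons dns_install metricsserver_install dashboard_install \
#     < config.yml > config.yml.new && mv config.yml.new config.yml
```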

Installation steps

Start the installation; step 01 initializes the environment:

#./ezctl setup k8s-cluster1 01

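If this step reports an error, the IP addresses it names (circled in the original screenshot) are hosts that master1 has no permission to reach over SSH; set up key-based login for them and rerun the step.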
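Before rerunning step 01, the failing hosts can be listed up front. In this sketch BatchMode=yes makes ssh fail instead of prompting for a password; SSH is a hypothetical override hook used only for testing.

```shell
# Hypothetical check: print the hosts that still refuse key-based SSH; fail if any do.
check_ssh() {
  failed=""
  for ip in "$@"; do
    if ! ${SSH:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "root@$ip" true >/dev/null 2>&1; then
      failed="$failed $ip"
    fi
  done
  echo "${failed# }"    # space-separated list of unreachable hosts
  [ -z "$failed" ]
}

# Usage on master1:
#   check_ssh 192.168.181.102 192.168.181.103 192.168.181.110 192.168.181.111 \
#     192.168.181.130 192.168.181.131 192.168.181.132 192.168.181.140 192.168.181.141
```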

8. Install etcd

#./ezctl setup k8s-cluster1 02

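On etcd1:

1. Check the etcd service (for example with systemctl status etcd)

2. Verify the etcd service on every etcd server

Set an environment variable with the etcd node IPs:

#export NODE_IPS="192.168.181.130 192.168.181.131 192.168.181.132"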

#for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done
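The health loop can be turned into a function that names the unhealthy members and returns non-zero, so it can gate the next ezctl step. The certificate paths are the ones used above; ETCDCTL_BIN is a hypothetical override hook for testing.

```shell
# Hypothetical helper: print the etcd members whose "endpoint health" fails; fail if any do.
etcd_unhealthy() {
  bad=""
  for ip in "$@"; do
    if ! ETCDCTL_API=3 ${ETCDCTL_BIN:-/usr/bin/etcdctl} \
        --endpoints="https://$ip:2379" \
        --cacert=/etc/kubernetes/ssl/ca.pem \
        --cert=/etc/kubernetes/ssl/etcd.pem \
        --key=/etc/kubernetes/ssl/etcd-key.pem \
        endpoint health >/dev/null 2>&1; then
      bad="$bad $ip"
    fi
  done
  echo "${bad# }"
  [ -z "$bad" ]
}

# Usage on an etcd node:
#   etcd_unhealthy 192.168.181.130 192.168.181.131 192.168.181.132 && echo "all healthy"
```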

Path of the service environment-variable configuration:

/etc/kubeasz/roles/kube-node/tasks/main.yml

Install the remaining components from master1

Step 03 installs Docker, step 04 the master nodes and step 05 the worker nodes:

#./ezctl setup k8s-cluster1 03

#./ezctl setup k8s-cluster1 04

#./ezctl setup k8s-cluster1 05
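Running the steps in sequence and stopping at the first failure keeps a broken Docker step from cascading into the master and node steps. A sketch; EZCTL is a hypothetical override hook, and by default the function runs ./ezctl from the current directory (/etc/kubeasz).

```shell
# Hypothetical helper: run "ezctl setup CLUSTER STEP" for each step, stop on the first failure.
run_steps() {
  cluster=$1; shift
  for step in "$@"; do
    echo ">>> ezctl setup $cluster $step"
    ${EZCTL:-./ezctl} setup "$cluster" "$step" || { echo "step $step failed" >&2; return 1; }
  done
}

# Usage in /etc/kubeasz:
#   run_steps k8s-cluster1 03 04 05
```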

On node1, pull the Calico CNI image:

#docker pull docker.io/calico/cni:v3.19.2

Install the network plugin from master01:

#./ezctl setup k8s-cluster1 06

Once the installation finishes, check the pods and the routing table from the master to each node.
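The exact commands were not preserved. As a sketch, assuming Calico in BGP mode with IPIP (where routes learned through BIRD are marked "proto bird"), this filter turns `ip route` output into "pod CIDR, next-hop node" pairs and skips the local blackhole route.

```shell
# Hypothetical helper: print "<pod CIDR> <next-hop>" for each Calico (BIRD) route on stdin.
calico_routes() {
  awk '/proto bird/ { for (i = 1; i < NF; i++) if ($i == "via") print $1, $(i + 1) }'
}

# Usage on master1 or any node:
#   ip route | calico_routes
#   kubectl get pod -A -o wide
```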

Create several test containers (pods)

Test whether a container can communicate with containers on other hosts
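A sketch of the test: start a few long-sleeping pods, then ping one pod's IP from inside another. The pod names and the alpine image are assumptions; KUBECTL is a hypothetical override hook for testing. Check with kubectl get pod -o wide that the pods you ping between landed on different nodes.

```shell
# Hypothetical helper: start one sleeping alpine pod per name.
create_test_pods() {
  for name in "$@"; do
    ${KUBECTL:-kubectl} run "$name" --image=alpine -- sleep 360000
  done
}

# Ping the IP of pod $2 from inside pod $1 (3 packets).
ping_between() {
  dst_ip=$(${KUBECTL:-kubectl} get pod "$2" -o jsonpath='{.status.podIP}')
  [ -n "$dst_ip" ] || { echo "no IP for pod $2" >&2; return 1; }
  ${KUBECTL:-kubectl} exec "$1" -- ping -c 3 "$dst_ip"
}

# Usage on master1:
#   create_test_pods net-test1 net-test2 net-test3
#   kubectl get pod -o wide
#   ping_between net-test1 net-test2
```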

The basic k8s cluster setup is complete.

浙公网安备 33010602011771号
