Cloud Native Study Assignment 6

I. Deploy a stateful service with a StatefulSet, and use a DaemonSet to run a Prometheus node-exporter on every node

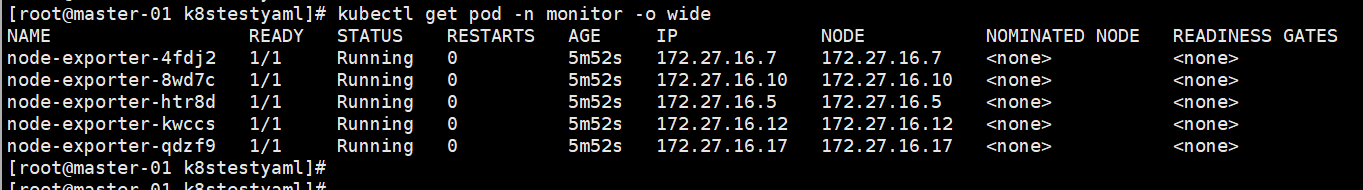

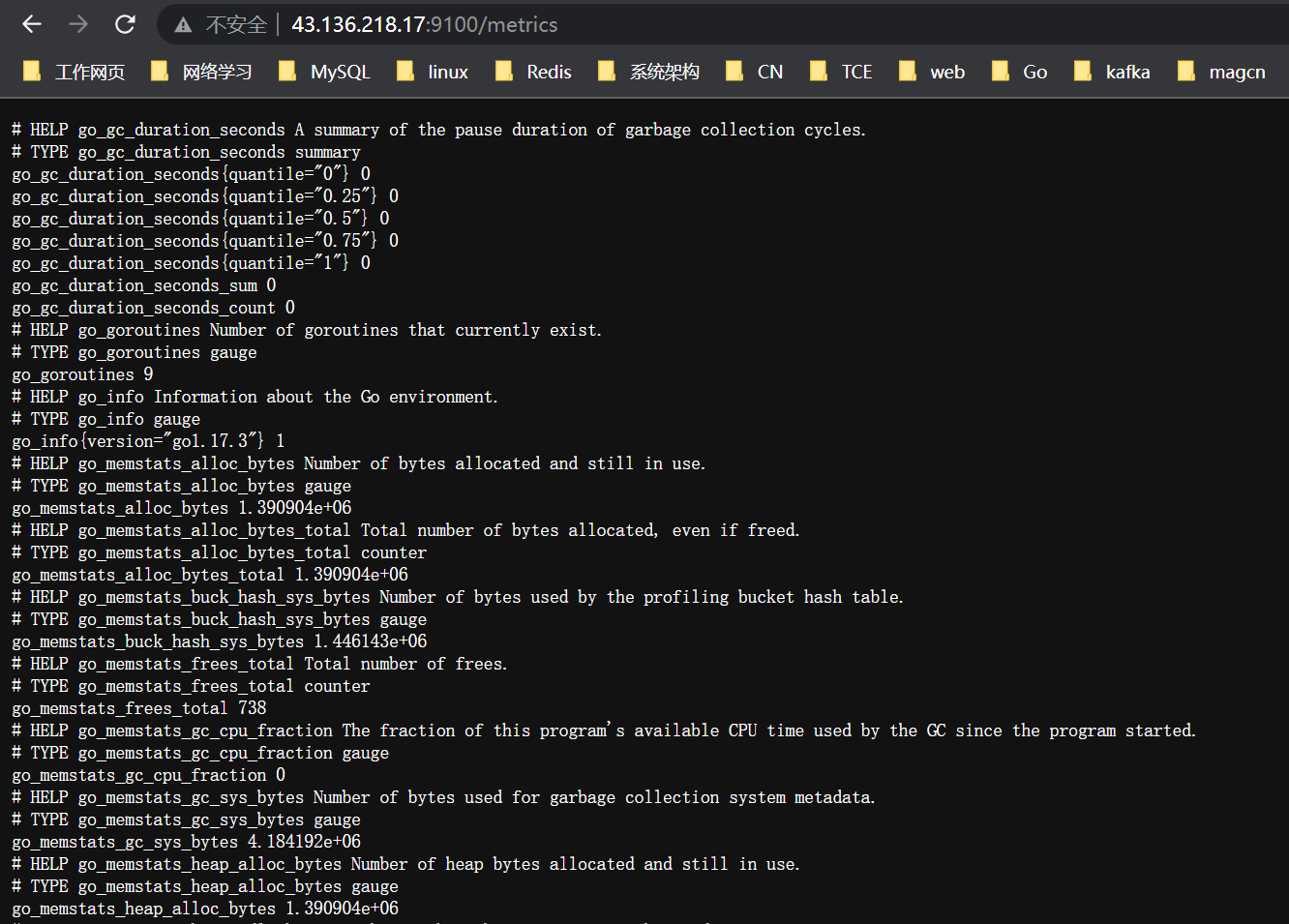
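The screenshots above are not available as text, so the following is only a minimal sketch of what a StatefulSet deployment can look like, not the manifest used in this assignment. The names `web` and `web-headless`, the `nginx` image, and the 1Gi volume size are illustrative assumptions, and the volume claim assumes the cluster has a default StorageClass.

```yaml
# Hypothetical example: names, image, and sizes are illustrative assumptions.
apiVersion: v1
kind: Service
metadata:
  name: web-headless
spec:
  clusterIP: None             # headless Service: gives each Pod a stable DNS name
  selector:
    app: web
  ports:
  - name: http
    port: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: web-headless   # must reference the headless Service above
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - name: http
          containerPort: 80
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:       # one PersistentVolumeClaim per Pod: data-web-0, data-web-1
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi
```

Unlike a Deployment, the Pods get stable ordinal names (`web-0`, `web-1`), are created in order, and each keeps its own volume across restarts.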

Deploy the Prometheus node-exporter

daemonset-prom-exporter.yaml

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: monitor
      labels:
        k8s-app: node-exporter
    spec:
      selector:
        matchLabels:
          k8s-app: node-exporter
      template:
        metadata:
          labels:
            k8s-app: node-exporter
        spec:
          tolerations:
          - effect: NoSchedule
            key: node-role.kubernetes.io/master
          containers:
          - name: node-exporter
            image: prom/node-exporter:v1.3.1
            ports:
            - containerPort: 9100
              hostPort: 9100
              protocol: TCP
              name: metrics
            volumeMounts:
            - name: proc
              mountPath: /host/proc
            - name: sys
              mountPath: /host/sys
            - name: rootfs
              mountPath: /host
            args:
            - --path.procfs=/host/proc
            - --path.sysfs=/host/sys
            - --path.rootfs=/host
          volumes:
          - name: proc
            hostPath:
              path: /proc
          - name: sys
            hostPath:
              path: /sys
          - name: rootfs
            hostPath:
              path: /
          hostNetwork: true
          hostPID: true
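The DaemonSet above is placed in the `monitor` namespace, which has to exist before the manifest is applied. Assuming it has not already been created elsewhere in the cluster, a minimal manifest for it could look like this:

```yaml
# Assumption: the "monitor" namespace does not exist yet.
apiVersion: v1
kind: Namespace
metadata:
  name: monitor
```

Applying this first keeps the DaemonSet from being rejected because its namespace is missing.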

II. Common Pod states and the causes of failures

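Pending: The request to create the Pod has been submitted, but the Pod is not yet running. It may be at any of four stages: being written to etcd, being scheduled, pulling images, or starting containers.

Running: The Pod has been bound to a node and all of its containers have been created. At least one container is running, or is in the process of starting or restarting.

Succeeded: All containers in the Pod have run to completion and exited normally, and will not be restarted automatically. This is typically seen when running a Job.

Failed: All containers in the Pod have terminated, and at least one of them terminated in failure, that is, it exited with a non-zero status or was killed by the system.

Unknown: The API server cannot obtain the Pod's state, usually because it cannot communicate with the kubelet on the node where the Pod runs.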

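PodScheduled: The scheduling status of the Pod. While scheduling is still in progress no hostIP is bound; once a suitable node is found, the Pod is bound to it (hostIP is set) and the record in etcd is updated.

Initialized: All init containers in the Pod have completed.

Ready: The containers in the Pod can serve requests.

Unschedulable: The Pod cannot be scheduled, for example because no node has sufficient resources.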

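CrashLoopBackOff: The container keeps exiting, and the kubelet is restarting it with a back-off delay.

InvalidImageName: The image name cannot be parsed.

ImageInspectError: The image cannot be inspected.

ErrImageNeverPull: The pull policy forbids pulling the image.

ImagePullBackOff: The image pull failed and is being retried.

RegistryUnavailable: The image registry cannot be reached.

ErrImagePull: Generic image pull error.

CreateContainerConfigError: The container configuration used by the kubelet cannot be created.

CreateContainerError: Creating the container failed.

RunContainerError: Starting the container failed.

PostStartHookError: The postStart hook returned an error.

ContainersNotInitialized: The containers have not finished initializing.

ContainersNotReady: The containers are not ready yet.

ContainerCreating: The container is being created.

PodInitializing: The Pod is initializing.

DockerDaemonNotReady: Docker has not fully started yet.

NetworkPluginNotReady: The network plugin has not fully started yet.

Evicted: The Pod has been evicted.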
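To see one of these states in practice, a Pod whose container always exits with an error is enough to reproduce CrashLoopBackOff. The following is a minimal sketch; the Pod name `crashloop-demo` and the `busybox` image are illustrative assumptions, not part of the original assignment.

```yaml
# Hypothetical example: the container exits with status 1 every time it starts.
apiVersion: v1
kind: Pod
metadata:
  name: crashloop-demo
spec:
  restartPolicy: Always        # kubelet restarts the container after each exit
  containers:
  - name: fail
    image: busybox
    command: ["sh", "-c", "echo starting; exit 1"]
```

After a few restarts, `kubectl get pod crashloop-demo` typically reports `CrashLoopBackOff` in the STATUS column, and `kubectl describe pod crashloop-demo` shows the back-off events.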

III. Using the startupProbe, livenessProbe, and readinessProbe to monitor Pod state

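The three kinds of probes in Kubernetes:

1. Startup probe: determines whether the application inside the container has started. If a startupProbe is configured, the other probes are disabled until it succeeds; once it has succeeded it is not run again.

2. Liveness probe: determines whether the application inside the container is healthy. If it is not, the container is killed and handled according to the Pod's restartPolicy. If no liveness probe is configured, the result defaults to success.

3. Readiness probe: determines whether the container is ready to serve. A container that is not ready receives no traffic: Kubernetes removes the Pod from the Service endpoints.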
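The three probes can be combined on a single container. The sketch below assumes an `nginx` container serving `/index.html` on port 80; the Pod name `probe-all` and the period and threshold values are illustrative assumptions chosen only to show how the fields fit together.

```yaml
# Hypothetical example combining all three probes on one container.
apiVersion: v1
kind: Pod
metadata:
  name: probe-all
  namespace: myserver
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - name: http
      containerPort: 80
    startupProbe:              # runs first; the other two wait until it succeeds
      httpGet:
        path: /index.html
        port: http
      periodSeconds: 3
      failureThreshold: 10     # allows up to 3 s x 10 = 30 s for startup
    livenessProbe:             # on repeated failure the container is restarted
      httpGet:
        path: /index.html
        port: http
      periodSeconds: 3
      failureThreshold: 3
    readinessProbe:            # on failure the Pod is removed from Service endpoints
      httpGet:
        path: /index.html
        port: http
      periodSeconds: 3
      failureThreshold: 3
```

Giving the startup probe a generous `failureThreshold` lets a slow-starting application come up without forcing the liveness probe to use a long `initialDelaySeconds`.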

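The three probe mechanisms:

1. exec: runs the specified command inside the container and checks its exit status; an exit status of 0 means healthy.

2. httpGet: sends an HTTP GET request to the container's IP address, port, and URL path; a response status code between 200 and 399 means healthy.

3. tcpSocket: performs a TCP check against the container's IP address and the specified port; if the port is open and the TCP connection can be established, the container is considered healthy.

    ##tcpSocket
    apiVersion: v1
    kind: Pod
    metadata:
      name: probe-tcp
      namespace: myserver
    spec:
      containers:
      - name: probetcp
        image: nginx
        livenessProbe:
          initialDelaySeconds: 5
          timeoutSeconds: 1
          tcpSocket:
            port: 80
          periodSeconds: 3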

    ##httpGet
    apiVersion: v1
    kind: Pod
    metadata:
      name: probe-http
      namespace: myserver
    spec:
      containers:
      - name: probehttp
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
        livenessProbe:
          httpGet:
            port: http
            path: /index.html
          initialDelaySeconds: 1
          periodSeconds: 3
          timeoutSeconds: 10

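    ##execAction
    apiVersion: v1
    kind: Pod
    metadata:
      name: probe-exec
      namespace: myserver
    spec:
      containers:
      - name: probe-exec
        image: nginx
        # /tmp/healthy exists for about 10 s, so the first probe (at ~5 s) passes.
        # The shell exits right after removing the file, so the container stops;
        # appending a long sleep (e.g. "; sleep 600") would keep it alive long
        # enough to watch the liveness probe itself fail.
        args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 10; rm -rf /tmp/healthy
        livenessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 5
          periodSeconds: 5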

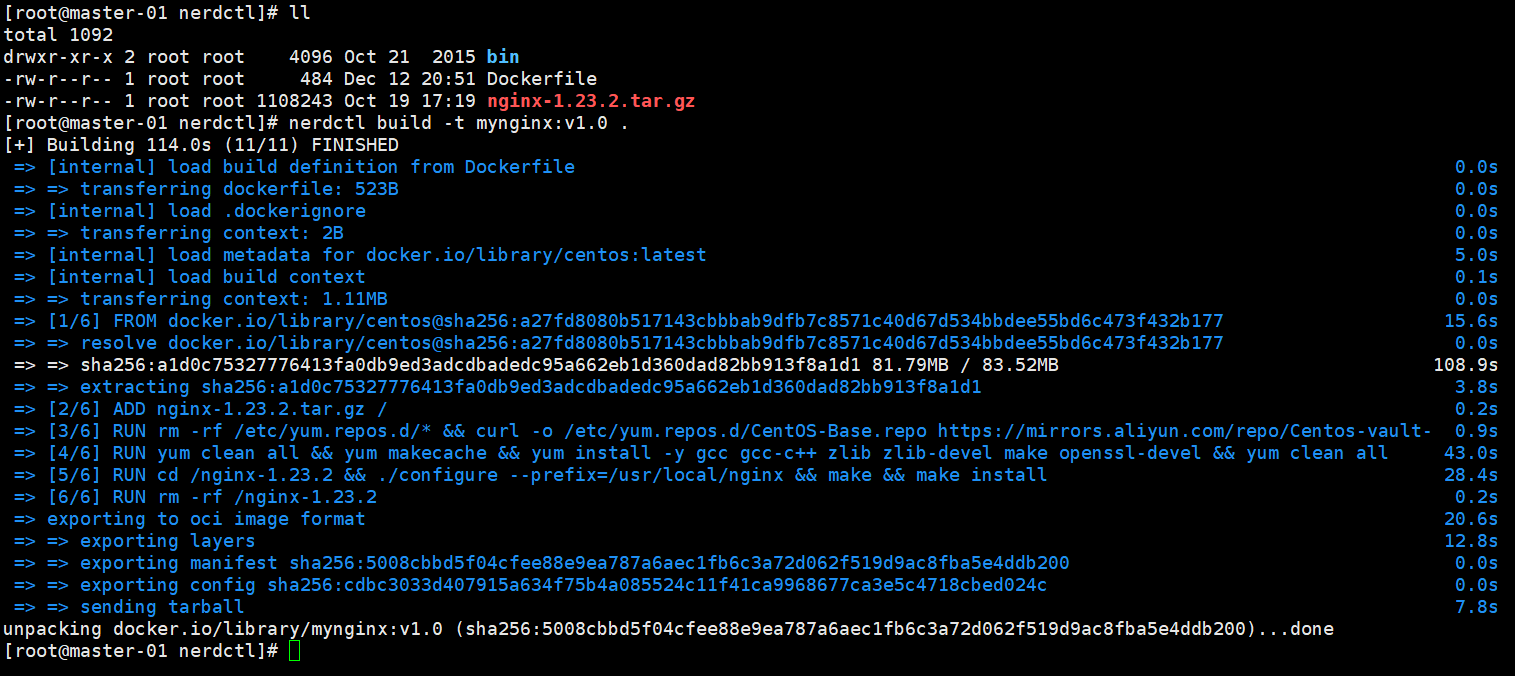

IV. Building container images with nerdctl + buildkitd

First install nerdctl, buildkit, and containerd. The demonstration then builds an image based on CentOS that installs nginx from a binary package.

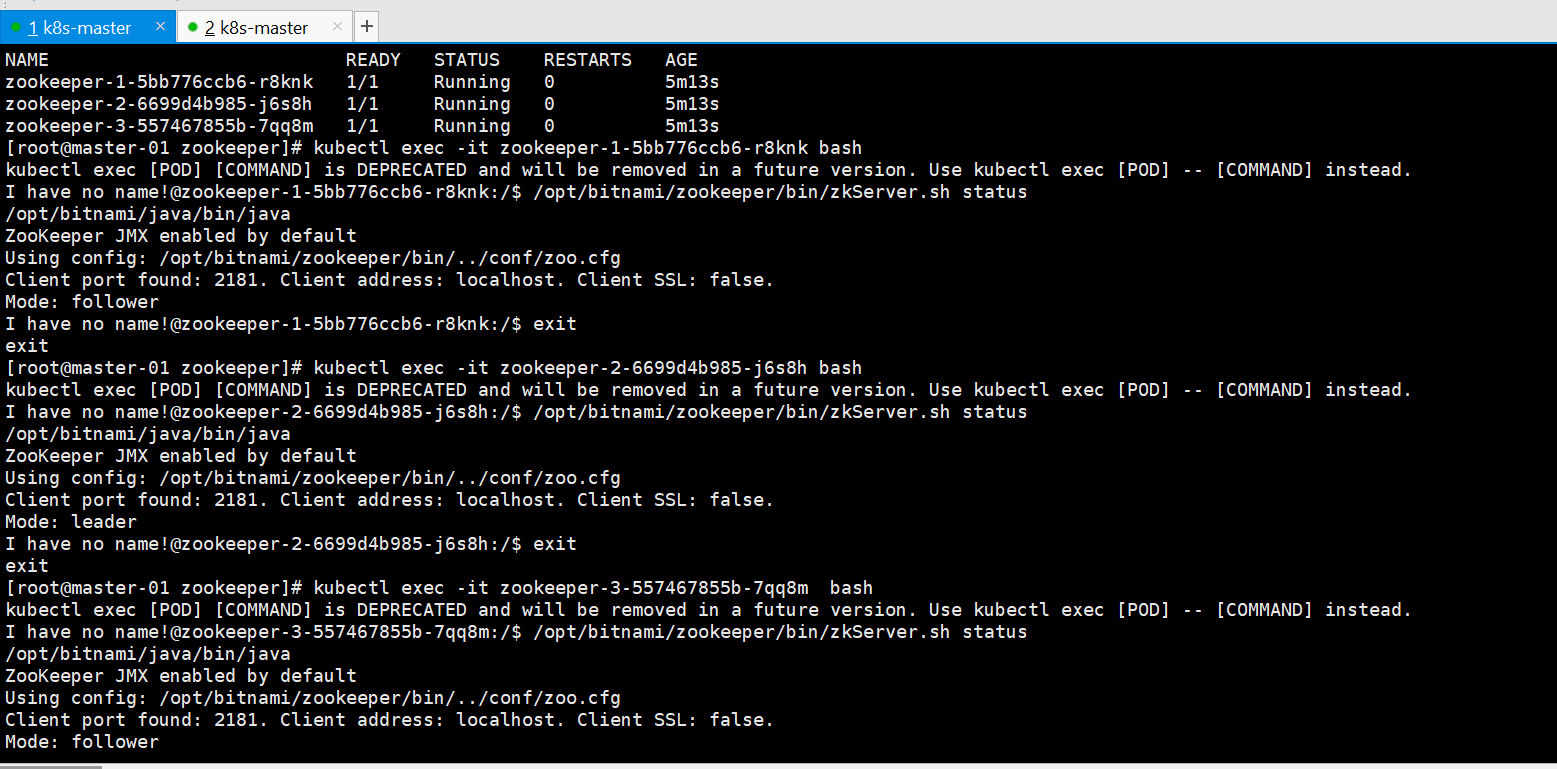

V. Running a ZooKeeper cluster

    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper-1
      labels:
        app: zookeeper-1
    spec:
      ports:
      - name: client
        port: 2181
        protocol: TCP
      - name: follower
        port: 2888
        protocol: TCP
      - name: leader
        port: 3888
        protocol: TCP
      selector:
        app: zookeeper-1
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper-2
      labels:
        app: zookeeper-2
    spec:
      ports:
      - name: client
        port: 2181
        protocol: TCP
      - name: follower
        port: 2888
        protocol: TCP
      - name: leader
        port: 3888
        protocol: TCP
      selector:
        app: zookeeper-2
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper-3
      labels:
        app: zookeeper-3
    spec:
      ports:
      - name: client
        port: 2181
        protocol: TCP
      - name: follower
        port: 2888
        protocol: TCP
      - name: leader
        port: 3888
        protocol: TCP
      selector:
        app: zookeeper-3
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: zookeeper-1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: zookeeper-1
      template:
        metadata:
          labels:
            app: zookeeper-1
        spec:
          containers:
          - name: zookeeper
            image: bitnami/zookeeper:3.6.2
            ports:
            - containerPort: 2181
            env:
            - name: ALLOW_ANONYMOUS_LOGIN
              value: "yes"
            - name: ZOO_LISTEN_ALLIPS_ENABLED
              value: "true"
            - name: ZOO_SERVER_ID
              value: "1"
            - name: ZOO_SERVERS
              value: 0.0.0.0:2888:3888,zookeeper-2:2888:3888,zookeeper-3:2888:3888
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: zookeeper-2
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: zookeeper-2
      template:
        metadata:
          labels:
            app: zookeeper-2
        spec:
          containers:
          - name: zookeeper
            image: bitnami/zookeeper:3.6.2
            ports:
            - containerPort: 2181
            env:
            - name: ALLOW_ANONYMOUS_LOGIN
              value: "yes"
            - name: ZOO_LISTEN_ALLIPS_ENABLED
              value: "true"
            - name: ZOO_SERVER_ID
              value: "2"
            - name: ZOO_SERVERS
              value: zookeeper-1:2888:3888,0.0.0.0:2888:3888,zookeeper-3:2888:3888
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: zookeeper-3
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: zookeeper-3
      template:
        metadata:
          labels:
            app: zookeeper-3
        spec:
          containers:
          - name: zookeeper
            image: bitnami/zookeeper:3.6.2
            ports:
            - containerPort: 2181
            env:
            - name: ALLOW_ANONYMOUS_LOGIN
              value: "yes"
            - name: ZOO_LISTEN_ALLIPS_ENABLED
              value: "true"
            - name: ZOO_SERVER_ID
              value: "3"
            - name: ZOO_SERVERS
              value: zookeeper-1:2888:3888,zookeeper-2:2888:3888,0.0.0.0:2888:3888

