Scrapy crawler framework

Using Scrapy to crawl Baidu Tieba posts and store them in a database

Reference: http://cuiqingcai.com/3472.html/3

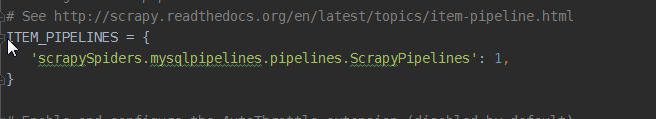

scrapySpiders.mysqlpipelines.pipelines.ScrapyPipelines

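```python
# -*- coding: utf-8 -*-
__author__ = 'mmc'

from scrapySpiders.items import ScrapyspidersItem
# sql.py lives in the same mysqlpipelines package as this file
from scrapySpiders.mysqlpipelines.sql import Sql


class ScrapyPipelines(object):
    # Scrapy calls process_item on an instance of the pipeline,
    # so this is a regular method, not a @classmethod
    def process_item(self, item, spider):
        if isinstance(item, ScrapyspidersItem):
            print("start save data in database")
            tb_name = item['name']
            tb_content = item['content']
            Sql.insert_content(tb_name, tb_content)
        # Return the item so that any later pipelines still receive it
        return item
```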
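Scrapy pipelines may also define the optional `open_spider` and `close_spider` hooks, which Scrapy calls once when the spider starts and once when it finishes, so a connection can be opened per crawl instead of at module import time. The sketch below assumes SQLite (Python's built-in `sqlite3` module) as a stand-in for MySQL so it runs without a database server; the class name `SqlitePipeline` and the `db_path` parameter are hypothetical. Note that `sqlite3` uses `:name` placeholders where MySQLdb uses `%(name)s`.

```python
import sqlite3


class SqlitePipeline:
    """Hypothetical SQLite stand-in for ScrapyPipelines (not the author's code)."""

    def __init__(self, db_path=":memory:"):
        self.db_path = db_path
        self.conn = None

    def open_spider(self, spider):
        # Called once when the spider starts: one connection for the whole crawl
        self.conn = sqlite3.connect(self.db_path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS tb_name (tb_name TEXT, tb_content TEXT)"
        )

    def process_item(self, item, spider):
        # Named placeholders bind by key, in the same order as the columns
        self.conn.execute(
            "INSERT INTO tb_name (tb_name, tb_content) VALUES (:tb_name, :tb_content)",
            {"tb_name": item["name"], "tb_content": item["content"]},
        )
        self.conn.commit()
        # Return the item so later pipelines still receive it
        return item

    def close_spider(self, spider):
        # Called once when the spider finishes
        self.conn.close()
```

Like `ScrapyPipelines`, such a class only takes effect once it is registered in `ITEM_PIPELINES`.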

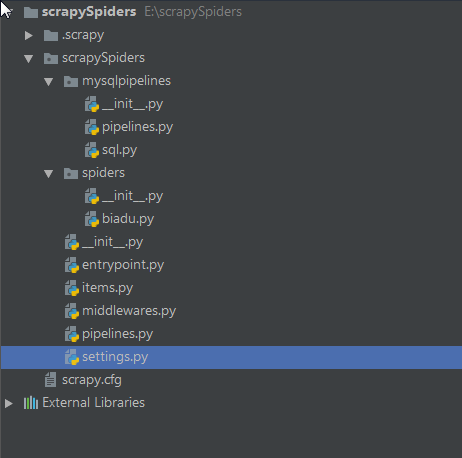

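Register the pipeline under `ITEM_PIPELINES` in settings.py; Scrapy then passes every item the spider returns through it, so the data is stored in the database automatically.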
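A minimal sketch of that setting, assuming the pipeline lives at the module path shown above (`scrapySpiders.mysqlpipelines.pipelines.ScrapyPipelines`). The value 300 is an arbitrary choice; it only fixes the order relative to other pipelines.

```python
# settings.py (excerpt)
# Keys are import paths of pipeline classes; values set the order in which
# pipelines run (integers, conventionally 0-1000, lower values run first).
ITEM_PIPELINES = {
    'scrapySpiders.mysqlpipelines.pipelines.ScrapyPipelines': 300,
}
```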

spiders

```python
# -*- coding: utf-8 -*-
__author__ = 'mmc'

import scrapy
from bs4 import BeautifulSoup
from scrapy.http import Request
from scrapySpiders.items import ScrapyspidersItem


class Myspiders(scrapy.Spider):
    name = 'TieBa'
    base_url = 'https://tieba.baidu.com/p/'

    def start_requests(self):
        # see_lz=1 shows only the original poster's floors
        url = self.base_url + '4420237089' + '?see_lz=1'
        yield Request(url, callback=self.parse)

    def parse(self, response):
        # The last <span> inside li.l_reply_num holds the total number of pages
        soup = BeautifulSoup(response.text, 'lxml')
        max_num = soup.find('li', class_='l_reply_num').find_all('span')[-1].get_text()
        print(max_num)
        baseurl = str(response.url)
        for num in range(1, int(max_num) + 1):
            url = baseurl + '&pn=' + str(num)
            yield Request(url, callback=self.get_name)

    def get_name(self, response):
        soup = BeautifulSoup(response.text, 'lxml')
        # CSS selectors match on a single class name, so they do not depend on
        # the exact class string ("d_post_content j_d_post_content ")
        tmp = soup.select('div.d_post_content')
        vmp = soup.select('span.tail-info')
        for val, f in zip(tmp, vmp[1:-1:3]):
            # A fresh item per post; the pipeline enabled in settings.py stores
            # it, so the spider does not call ScrapyPipelines itself
            item = ScrapyspidersItem()
            item['name'] = val.get_text().strip()
            item['content'] = f.get_text().strip()
            yield item
```
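The pagination step in `parse()` boils down to a small pure function. The sketch below (the name `build_page_urls` is hypothetical) lists the URLs the spider requests: because the thread URL already carries `?see_lz=1`, each page number is appended as `&pn=N`, and `max_num` arrives as a string from `get_text()`.

```python
def build_page_urls(thread_url, max_num):
    """Return the URLs of pages 1..max_num of a Tieba thread.

    thread_url already contains a query string (e.g. '?see_lz=1'),
    so the page number is appended with '&pn='.
    """
    return [thread_url + '&pn=' + str(num) for num in range(1, int(max_num) + 1)]


urls = build_page_urls('https://tieba.baidu.com/p/4420237089?see_lz=1', '3')
for u in urls:
    print(u)
```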

sql.py

```python
# -*- coding: utf-8 -*-
__author__ = 'mmc'

import MySQLdb

db_para = {'host': '127.0.0.1', 'port': 3306, 'user': 'mmc',
           'passwd': 'mmc123456', 'db': 'test'}
dbcon = MySQLdb.connect(**db_para)
cur = dbcon.cursor()

# If you get "UnicodeEncodeError: 'latin-1' codec can't encode character",
# run the following statements to switch the connection to UTF-8
dbcon.set_character_set('utf8')
cur.execute('SET NAMES utf8;')
cur.execute('SET CHARACTER SET utf8;')
cur.execute('SET character_set_connection=utf8;')


class Sql:
    @classmethod
    def insert_content(cls, tb_name, tb_content):
        # Insert one row into `test`.`tb_name`; the placeholders are listed
        # in the same order as the columns they fill
        sql = """INSERT INTO `test`.`tb_name` (`tb_name`, `tb_content`)
                 VALUES (%(tb_name)s, %(tb_content)s)"""
        value = {'tb_name': tb_name, 'tb_content': tb_content}
        cur.execute(sql, value)
        dbcon.commit()
```
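The `UnicodeEncodeError` mentioned above arises when Chinese text is encoded as latin-1, which only covers code points U+0000 to U+00FF. A quick standard-library check (no MySQL needed) reproduces the difference between the two encodings:

```python
text = '百度贴吧'

# latin-1 covers only U+0000..U+00FF, so Chinese characters cannot be encoded
try:
    text.encode('latin-1')
    latin1_ok = True
except UnicodeEncodeError:
    latin1_ok = False

# UTF-8 can encode any Unicode character (3 bytes for each of these CJK ones)
utf8_bytes = text.encode('utf-8')

print(latin1_ok)        # False
print(len(utf8_bytes))  # 12
```

MySQL's `utf8` charset stores at most 3 bytes per character, so characters outside the Basic Multilingual Plane, such as emoji, need `utf8mb4` instead.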

Scrapy query syntax (XPath):

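When crawling a large number of pages, writing your own regular expressions for matching is tedious and time-consuming. Fortunately, Scrapy's selectors support XPath expressions (as well as CSS selectors), which make it much simpler to locate the tags we need in the HTML, together with their text and attribute values. The common forms are introduced one by one below: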

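- Select a tag among all descendants (using div as an example): `//div`

- Select a tag among direct children only (using div as an example): `/div`

- Select tags with a given class attribute: `//div[@class='c1']`, i.e. every descendant div whose class attribute is exactly 'c1'

- Select tags with class='c1' and a custom attribute name='alex': `//div[@class='c1'][@name='alex']`

- Select the text content of a tag: `//div/span/text()`, i.e. the text of span tags that are direct children of any descendant div

- Select the value of an attribute (for example, the href attribute of a tags): `//a/@href`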
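To see what each expression selects, here is a sketch on a made-up HTML fragment. To stay dependency-free it swaps in Python's built-in `xml.etree.ElementTree`, which supports only a subset of XPath: the path and predicate forms above work as written (prefixed with `.`), while `text()` and `@href` are replaced by the `.text` attribute and `.get('href')`. In a Scrapy callback the full XPath expressions can be passed directly to `response.xpath(...)`.

```python
import xml.etree.ElementTree as ET

# A made-up, well-formed HTML fragment used only for illustration
html = """<html><body>
  <div class="c1" name="alex"><span>hello</span><a href="/a.html">A</a></div>
  <div class="c2"><div class="c1"><span>nested</span></div></div>
</body></html>"""
root = ET.fromstring(html)
body = root.find('body')

all_divs = root.findall('.//div')                       # //div: every descendant div
child_divs = body.findall('div')                        # /div: direct children of <body> only
c1_divs = root.findall(".//div[@class='c1']")           # //div[@class='c1']
alex = root.findall(".//div[@class='c1'][@name='alex']")
span_texts = [s.text for s in root.findall('.//div/span')]  # like //div/span/text()
hrefs = [a.get('href') for a in root.findall('.//a')]       # like //a/@href

print(len(all_divs), len(child_divs), len(c1_divs), len(alex))  # 3 2 2 1
print(span_texts)  # ['hello', 'nested']
print(hrefs)       # ['/a.html']
```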

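Example code (a spider's `parse` method that downloads the photos from the list pages of www.xiaohuar.com):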

```python
import os
import re
import urllib.request

from scrapy.selector import Selector


# parse() method of a scrapy.Spider subclass
def parse(self, response):
    # Analyse the page: find the content that matches (the photos) and save it.
    # Following the other <a> links level by level is not shown in this excerpt.
    hxs = Selector(response=response)  # create the query object
    # Only handle list pages such as http://www.xiaohuar.com/list-1-<n>.html
    if re.match(r'http://www\.xiaohuar\.com/list-1-\d+\.html', response.url):
        # xpath() takes a query written in the syntax described above
        items = hxs.xpath('//div[@class="item_list infinite_scroll"]/div')
        for item in items:
            # Relative queries (starting with ".") search only inside this entry
            src = item.xpath('.//div[@class="img"]/a/img/@src').extract()  # image address
            name = item.xpath('.//div[@class="img"]/span/text()').extract()  # name
            school = item.xpath('.//div[@class="img"]/div[@class="btns"]/a/text()').extract()  # school
            if src and name and school:
                ab_src = "http://www.xiaohuar.com" + src[0]  # turn the relative path into an absolute URL
                # Python 3 strings are Unicode, so the file name needs no .encode('utf-8')
                file_name = "%s_%s.jpg" % (school[0], name[0])
                file_path = os.path.join("/Users/wupeiqi/PycharmProjects/beauty/pic", file_name)
                urllib.request.urlretrieve(ab_src, file_path)
```
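The URL check and the image-address handling in `parse()` can be pulled out into two small helpers and tested on their own. This is a sketch: `is_list_page` and `absolute_image_url` are hypothetical names, and `urllib.parse.urljoin` is swapped in for plain string concatenation because it also copes with `src` values that are already absolute. The regex is a raw string with escaped dots, since an unescaped `.` matches any character; like `re.match`, it anchors only at the start of the URL.

```python
import re
from urllib.parse import urljoin

SITE = "http://www.xiaohuar.com"
# Raw string with escaped dots: a bare '.' would match any character
LIST_PAGE = re.compile(r"http://www\.xiaohuar\.com/list-1-\d+\.html")


def is_list_page(url):
    """True if url starts like one of the list pages the spider should parse."""
    return LIST_PAGE.match(url) is not None


def absolute_image_url(src):
    """Resolve an <img src> value against the site root."""
    return urljoin(SITE + "/", src)


print(is_list_page("http://www.xiaohuar.com/list-1-3.html"))  # True
print(is_list_page("http://wwwXxiaohuar.com/list-1-3.html"))  # False
print(absolute_image_url("/d/file/20160101/a.jpg"))  # made-up sample path
```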

Zhejiang Public Security Network Filing No. 33010602011771
