Kubernetes ceph-rbd mounting steps (type: StorageClass)

References: https://github.com/nacos-group/nacos-k8s/tree/master/deploy

https://blog.csdn.net/mailjoin/article/details/79686919

https://github.com/nacos-group/nacos-k8s/blob/master/README-ceph.md

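Because the Kubernetes components do not ship the `rbd` command (the built-in `kubernetes.io/rbd` provisioner runs inside kube-controller-manager, whose image normally lacks the rbd client), an extra add-on plugin has to be installed in the cluster: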

Download the plugin image quay.io/external_storage/rbd-provisioner

Download page:

https://quay.io/repository/external_storage/rbd-provisioner?tag=latest&tab=tags

Pull it on the nodes of the k8s cluster: docker pull quay.io/external_storage/rbd-provisioner:latest

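Installing only the plugin itself produces an error: the Kubernetes roles and permissions (RBAC) must be installed as well. Download links: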

https://github.com/kubernetes-incubator/external-storage

https://github.com/kubernetes-incubator/external-storage/tree/master/ceph/rbd/deploy/rbac (the Kubernetes RBAC role YAML files)

Download the rbac folder.

Apply it with: kubectl apply -f rbac/

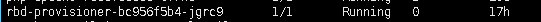

Run rbd-provisioner.

If it reports an error:

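The error occurs because the rbd-provisioner container cannot find the Ceph key and configuration file, so the cluster's key and conf have to be copied into the rbd-provisioner container.

Find the node on which the rbd-provisioner container is running, and on that node run:

docker cp /etc/ceph/ceph.client.admin.keyring <container name>:/etc/ceph/

docker cp /etc/ceph/ceph.conf <container name>:/etc/ceph/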
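Files copied in with `docker cp` live only in that container's writable layer, so they are lost whenever the pod is recreated. A more durable option is sketched here, assuming every node that may run the provisioner already has `/etc/ceph/ceph.conf` and `/etc/ceph/ceph.client.admin.keyring`: mount the host's `/etc/ceph` read-only into the container. The file name `ceph-conf-patch.yaml` and the volume name `ceph-conf` are hypothetical.

```yaml
# ceph-conf-patch.yaml (hypothetical name): strategic merge patch for the
# rbd-provisioner Deployment. Containers and volumes are merged by "name".
# Assumes /etc/ceph on the node holds ceph.conf and the admin keyring.
spec:
  template:
    spec:
      containers:
        - name: rbd-provisioner        # must match the container name in deployment.yaml
          volumeMounts:
            - name: ceph-conf
              mountPath: /etc/ceph     # same directory the files were copied into above
              readOnly: true
      volumes:
        - name: ceph-conf
          hostPath:
            path: /etc/ceph            # directory on the node; must already exist
            type: Directory
```

It could be applied with `kubectl patch deployment rbd-provisioner --patch "$(cat ceph-conf-patch.yaml)"`; alternatively the same `volumeMounts` and `volumes` entries can be written directly into deployment.yaml.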

If there is yet another error:

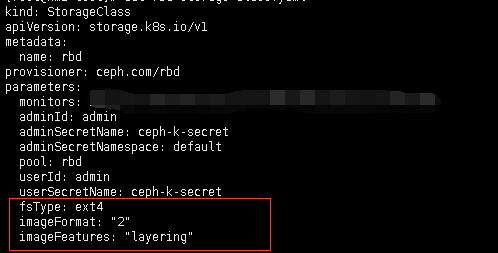

The PVC stays in Pending because the Linux kernel does not support image format 1, so the StorageClass has to specify format 2 for newly created images.

Add the following to the StorageClass:

```yaml
imageFormat: "2"
imageFeatures: "layering"
```

With this, the PVC is created successfully.

Install the plugin and its roles (RBAC):

```yaml
# clusterrole.yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["kube-dns"]
    verbs: ["list", "get"]
---
# clusterrolebinding.yaml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: rbd-provisioner
  apiGroup: rbac.authorization.k8s.io
---
# deployment.yaml
# apps/v1 replaces extensions/v1beta1, which is no longer served since Kubernetes 1.16
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rbd-provisioner
spec:
  replicas: 1
  selector:                  # required by apps/v1
    matchLabels:
      app: rbd-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: rbd-provisioner
    spec:
      containers:
        - name: rbd-provisioner
          image: "quay.io/external_storage/rbd-provisioner:latest"
          env:
            - name: PROVISIONER_NAME
              value: ceph.com/rbd   # the plugin's name; StorageClasses refer to it via "provisioner"
      serviceAccountName: rbd-provisioner
---
# role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rbd-provisioner
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
# rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rbd-provisioner
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: default
---
# serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-provisioner
```

Create the StorageClass:

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: rbd
provisioner: ceph.com/rbd   # use the rbd-provisioner plugin to provision volumes
parameters:
  monitors: 10.101.3.9:6789,10.101.3.11:6789,10.101.3.12:6789
  adminId: admin
  adminSecretName: ceph-k-secret
  adminSecretNamespace: default   # default is used here; with another namespace, also change the namespace in the plugin's manifests
  pool: rbd
  userId: admin
  userSecretName: ceph-k-secret
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"
```
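The StorageClass above refers to a secret named `ceph-k-secret` in the `default` namespace, whose creation is not shown in this post. A minimal sketch of it, assuming the `admin` client key is used: `stringData` lets Kubernetes do the base64 encoding itself, so the raw key printed by `ceph auth get-key client.admin` can be pasted in unchanged. The placeholder value must be replaced before applying.

```yaml
# Sketch of the secret referenced by adminSecretName/userSecretName above.
apiVersion: v1
kind: Secret
metadata:
  name: ceph-k-secret
  namespace: default          # must match adminSecretNamespace
type: kubernetes.io/rbd       # secret type used for Ceph RBD keys
stringData:
  # placeholder: replace with the raw output of `ceph auth get-key client.admin`
  key: "<ceph admin key>"
```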

Following the official documentation, the approach is as follows:

1. Generate ceph-secret:

```
[root@walker-2 ~]# cat /etc/ceph/admin.secret | base64
QVFEdmRsTlpTSGJ0QUJBQUprUXh4SEV1ZGZ5VGNVa1U5cmdWdHc9PQo=
[root@walker-2 ~]# kubectl create secret generic ceph-secret --type="kubernetes.io/rbd" --from-literal=key='QVFEdmRsTlpTSGJ0QUJBQUprUXh4SEV1ZGZ5VGNVa1U5cmdWdHc9PQo=' --namespace=kube-system
```

StorageClass configuration:

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: axiba
provisioner: kubernetes.io/rbd
parameters:
  monitors: 172.16.18.5:6789,172.16.18.6:6789,172.16.18.7:6789
  adminId: admin
  adminSecretName: ceph-secret
  adminSecretNamespace: hehe
  pool: rbd
  userId: admin
  userSecretName: ceph-secret
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"
```

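adminId: the Ceph client ID that is allowed to create images in the pool; defaults to admin.

adminSecretName: name of the secret holding the key for adminId (the secret created in step 1).

adminSecretNamespace: the namespace the secret lives in; it must match the --namespace used when the secret was created (kube-system in step 1).

pool: the Ceph pool to use; list the available pools with `ceph osd pool ls`.

userId: the Ceph client ID used to map the RBD image; defaults to admin.

userSecretName: same as above, but for userId; this secret must exist in the same namespace as the PVCs.

imageFormat: the Ceph RBD image format, "1" or "2"; defaults to "1". Format "2" supports more RBD features.

imageFeatures: only takes effect when the format is "2"; defaults to an empty value ("").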


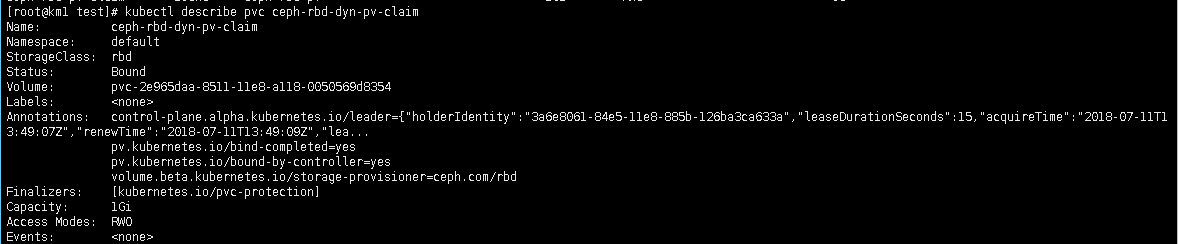

Create the PVC:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-rbd-dyn-pv-claim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: rbd
  resources:
    requests:
      storage: 1Gi
```
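To check that the claim is provisioned and can actually be mounted, a test pod can use it. A sketch, assuming the `busybox` image can be pulled and the Ceph client package (providing the `rbd` command) is installed on the node, because the kubelet maps the RBD image there; the pod name `rbd-test-pod` and volume name `rbd-vol` are hypothetical.

```yaml
# Hypothetical test pod that mounts the dynamically provisioned RBD volume.
apiVersion: v1
kind: Pod
metadata:
  name: rbd-test-pod
spec:
  containers:
    - name: app
      image: busybox
      # write a file onto the RBD-backed filesystem, then keep the pod running
      command: ["sh", "-c", "echo hello > /data/hello.txt && sleep 3600"]
      volumeMounts:
        - name: rbd-vol
          mountPath: /data
  volumes:
    - name: rbd-vol
      persistentVolumeClaim:
        claimName: ceph-rbd-dyn-pv-claim   # the PVC defined above
```

Once applied, `kubectl get pvc ceph-rbd-dyn-pv-claim` should report `Bound`, and `kubectl exec rbd-test-pod -- cat /data/hello.txt` should print `hello`.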

浙公网安备 33010602011771号
