第二次作业

问题1

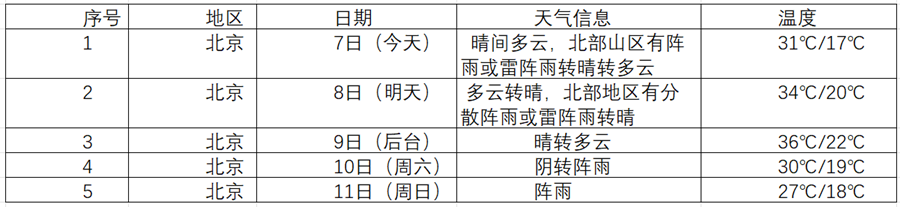

要求:在中国气象网(http://www.weather.com.cn)给定城市集的7日天气预报,并保存在数据库。

输出信息:Gitee文件夹链接

代码及运行结果

码云地址

https://gitee.com/chenming333/chenmingproject/blob/master/作业2/1.py

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

import urllib.request

import sqlite3

class WeatherDB:

#天气数据数据库操作类负责创建数据库、表结构,以及数据的插入和查询

def openDB(self):

self.con=sqlite3.connect("weathers.db") # 连接SQLite数据库

self.cursor=self.con.cursor()

try:

# 创建天气数据表,设置城市和日期为主键

self.cursor.execute("create table weathers (wCity varchar(16),wDate varchar(16),wWeather varchar(64),wTemp varchar(32),constraint pk_weather primary key (wCity,wDate))")

except:

# 如果表已存在,则清空表中的数据

self.cursor.execute("delete from weathers")

def closeDB(self):

#关闭数据库连接

self.con.commit()

self.con.close()

def insert(self,city,date,weather,temp):

#向数据库插入天气数据

try:

self.cursor.execute("insert into weathers (wCity,wDate,wWeather,wTemp) values (?,?,?,?)" ,(city,date,weather,temp))

except Exception as err:

print(err)

def show(self):

#显示数据库中的所有天气数据

self.cursor.execute("select * from weathers")

rows=self.cursor.fetchall()

# 打印表头

print("%-16s%-16s%-32s%-16s" % ("city","date","weather","temp"))

for row in rows:

print("%-16s%-16s%-32s%-16s" % (row[0],row[1],row[2],row[3]))

class WeatherForecast:

def __init__(self):

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

# 城市名称与对应城市代码的映射字典

self.cityCode={"北京":"101010100","上海":"101020100","广州":"101280101","深圳":"101280601"}

def forecastCity(self,city):

# 检查城市是否在支持的城市列表中

if city not in self.cityCode.keys():

print(city+" code cannot be found")

return

url="http://www.weather.com.cn/weather/"+self.cityCode[city]+".shtml"

try:

req=urllib.request.Request(url,headers=self.headers)

data=urllib.request.urlopen(req)

data=data.read()

dammit=UnicodeDammit(data,["utf-8","gbk"])

data=dammit.unicode_markup

# 使用BeautifulSoup解析HTML

soup=BeautifulSoup(data,"lxml")

# 选择包含天气预报信息的列表元素

lis=soup.select("ul[class='t clearfix'] li")

# 遍历每个天气条目

for li in lis:

try:

# 提取日期信息

date=li.select('h1')[0].text

# 提取天气状况

weather=li.select('p[class="wea"]')[0].text

# 提取温度信息(最高温/最低温)

temp=li.select('p[class="tem"] span')[0].text+"/"+li.select('p[class="tem"] i')[0].text

# 打印天气信息到控制台

print(city,date,weather,temp)

# 将天气信息插入数据库

self.db.insert(city,date,weather,temp)

except Exception as err:

print(err)

except Exception as err:

print(err)

def process(self,cities):

self.db=WeatherDB()

self.db.openDB()

# 遍历每个城市,获取天气预报

for city in cities:

self.forecastCity(city)

self.db.closeDB() # 关闭数据库连接

ws=WeatherForecast()

ws.process(["北京","上海","广州","深圳"])

print("completed")

运行结果

运行过程

通过搜索对比,可以看到城市名称和城市代码的映射,下面分别对应北京和上海

检查网页源码,找到7日天气预报信息所在位置

心得体会

通过实践天气数据采集项目,我学到了可以用UnicodeDammit自动检测编码,这样就可以不用去猜是用什么编码的,而且也可以适应解决混合编码的问题

问题2

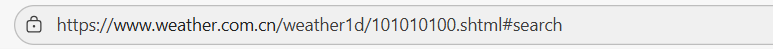

要求:用requests和BeautifulSoup库方法定向爬取股票相关信息,并存储在数据库中。网站:东方财富网:https://www.eastmoney.com/

输出信息:Gitee文件夹链接

代码及运行结果

码云地址

https://gitee.com/chenming333/chenmingproject/blob/master/作业2/2.py

import requests

import json

import sqlite3

from datetime import datetime

url = "https://push2.eastmoney.com/api/qt/clist/get?np=1&fltt=1&invt=2&fs=m%3A0%2Bt%3A6%2Bf%3A!2%2Cm%3A0%2Bt%3A80%2Bf%3A!2%2Cm%3A1%2Bt%3A2%2Bf%3A!2%2Cm%3A1%2Bt%3A23%2Bf%3A!2%2Cm%3A0%2Bt%3A81%2Bs%3A262144%2Bf%3A!2&fields=f12,f14,f2,f3,f4,f5,f6,f7,f8,f10,f15,f16,f17,f18&fid=f3&pn=1&pz=50&po=1&ut=fa5fd1943c7b386f172d6893dbfba10b&_=1761721260894"

# 创建数据库连接

conn = sqlite3.connect('stock_data.db')

cursor = conn.cursor()

# 创建股票数据表

cursor.execute('''

CREATE TABLE IF NOT EXISTS stocks (

id INTEGER PRIMARY KEY AUTOINCREMENT,

stock_code TEXT,

stock_name TEXT,

current_price REAL,

change_percent REAL,

change_amount REAL,

volume REAL,

amount REAL,

amplitude REAL,

high_price REAL,

low_price REAL,

open_price REAL,

prev_close REAL,

volume_ratio REAL,

turnover_rate REAL,

update_time TEXT

)

''')

try:

response = requests.get(url)

data = response.json()

stocks = data['data']['diff']

print("序号 代码 名称 最新价 涨跌幅 涨跌额 成交量(万) 成交额(亿) 振幅 最高 最低 今开 昨收 量比 换手率")

print("-" * 140)

for i in range(min(10, len(stocks))):

s = stocks[i]

# 逐个处理每个字段,确保都是数字

def to_float(value):

try:

return float(value)

except:

return 0.0

code = str(s.get('f12', ''))

name = str(s.get('f14', ''))

price = to_float(s.get('f2')) / 100

change_pct = to_float(s.get('f3')) / 100

change_amt = to_float(s.get('f4')) / 100

volume = to_float(s.get('f5')) / 10000

amount = to_float(s.get('f6')) / 100000000

amplitude = to_float(s.get('f7')) / 100

high = to_float(s.get('f15')) / 100

low = to_float(s.get('f16')) / 100

open_p = to_float(s.get('f17')) / 100

prev_close = to_float(s.get('f18')) / 100

vol_ratio = to_float(s.get('f10')) / 100

turnover = to_float(s.get('f8')) / 100

# 格式化输出

print(f"{i+1:2d} {code:6} {name:6} {price:6.2f} {change_pct:5.2f}% {change_amt:5.2f} "

f"{volume:7.1f} {amount:7.2f} {amplitude:4.1f}% {high:6.2f} {low:6.2f} {open_p:6.2f} "

f"{prev_close:6.2f} {vol_ratio:4.2f} {turnover:5.1f}%")

# 保存到数据库

cursor.execute('''

INSERT INTO stocks (

stock_code, stock_name, current_price, change_percent, change_amount,

volume, amount, amplitude, high_price, low_price, open_price,

prev_close, volume_ratio, turnover_rate, update_time

) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)

''', (

code, name, price, change_pct, change_amt,

volume, amount, amplitude, high, low, open_p,

prev_close, vol_ratio, turnover, datetime.now().strftime('%Y-%m-%d %H:%M:%S')

))

conn.commit()

print(f"\n成功保存 {min(10, len(stocks))} 条股票数据到数据库")

# 显示数据库中的所有数据

print("\n" + "="*140)

print("数据库中的完整股票数据:")

print("="*140)

cursor.execute('''

SELECT stock_code, stock_name, current_price, change_percent, change_amount,

volume, amount, amplitude, high_price, low_price, open_price,

prev_close, volume_ratio, turnover_rate

FROM stocks

''')

db_data = cursor.fetchall()

print("代码 名称 最新价 涨跌幅 涨跌额 成交量(万) 成交额(亿) 振幅 最高 最低 今开 昨收 量比 换手率")

print("-" * 140)

for i, row in enumerate(db_data, 1):

(code, name, price, change_pct, change_amt, volume, amount,

amplitude, high, low, open_p, prev_close, vol_ratio, turnover) = row

print(f"{i:2d} {code:6} {name:6} {price:6.2f} {change_pct:5.2f}% {change_amt:5.2f} "

f"{volume:7.1f} {amount:7.2f} {amplitude:4.1f}% {high:6.2f} {low:6.2f} {open_p:6.2f} "

f"{prev_close:6.2f} {vol_ratio:4.2f} {turnover:5.1f}%")

except Exception as e:

print(f"错误: {e}")

import traceback

traceback.print_exc()

finally:

# 关闭数据库连接

cursor.close()

conn.close()

print("\n数据库连接已关闭")

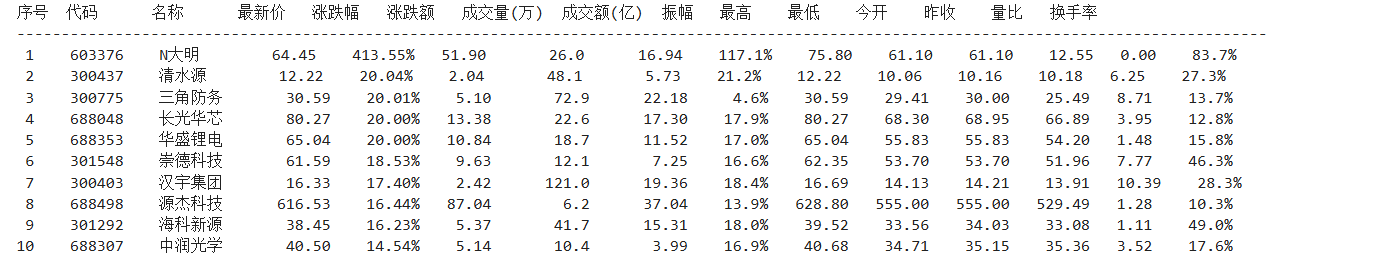

运行结果

保存到数据库之中

实验过程

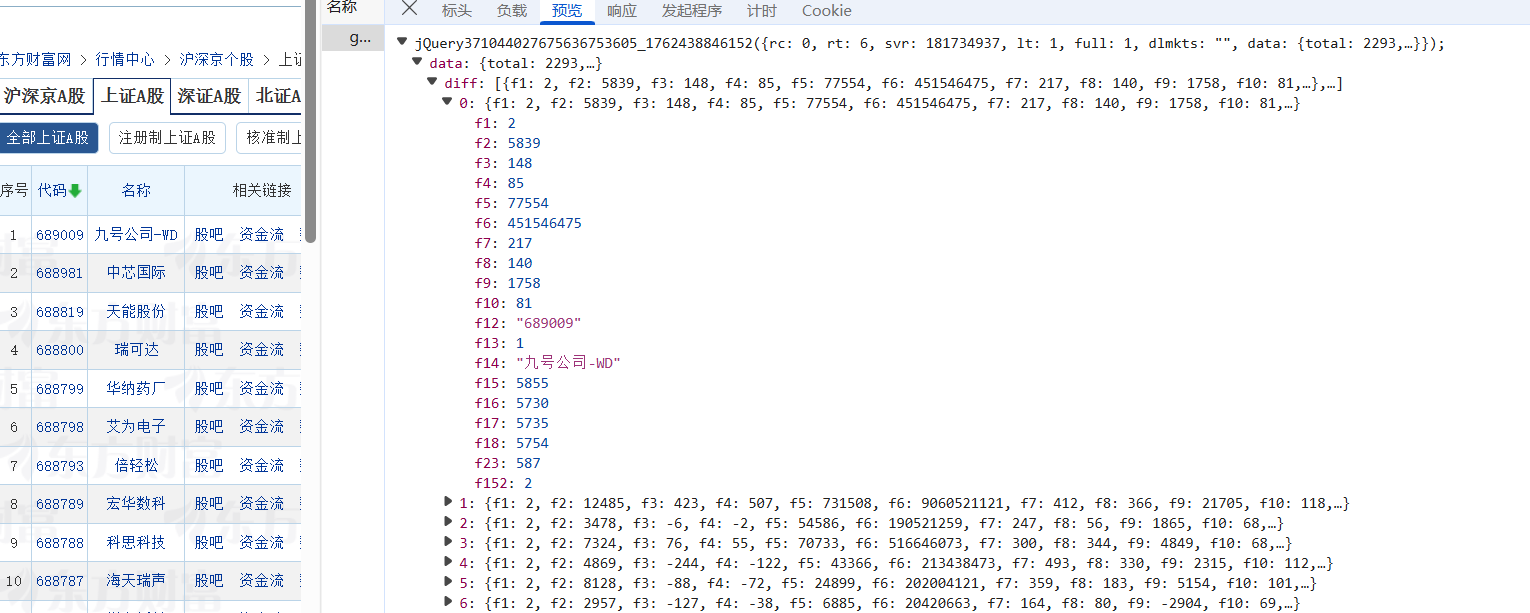

找到所要数据位置,发现所需要的数据和每一个标签对应,例如f2对应价格,提取出的数据还需做数据处理,包括百分比转换等等

心得体会

我学会了使用Python的格式化字符串来控制列宽和精度,比如通过:6、:7.2f等格式说明符让数据整齐排列。在处理数据类型转换时,我专门编写了安全转换函数来解决字符串转数字的难题。同时注意单位换算,将原始数据中的价格除以100,成交量转换为万手,成交额转换为亿元。这些是让数据从杂乱无章变得清晰可读的关键

问题3

要求:爬取中国大学2021主榜(https://www.shanghairanking.cn/rankings/bcur/2021)所有院校信息,并存储在数据库中,同时将浏览器F12调试分析的过程录制Gif加入至博客中。

输出信息:Gitee文件夹链接

| 排名 | 学校名称 | 省市 | 学校类型 | 总分 |

|---|---|---|---|---|

| 1 | 清华大学 | 北京 | 综合 | 852.5 |

| 2 |

代码及运行结果

码云地址

https://gitee.com/chenming333/chenmingproject/blob/master/作业2/3.py

代码

import requests

import sqlite3

# 连接数据库

conn = sqlite3.connect('school.db')

cur = conn.cursor()

# 创建表存储数据

cur.execute('''

CREATE TABLE IF NOT EXISTS schools (

id INTEGER PRIMARY KEY AUTOINCREMENT,

rank INTEGER,

name TEXT,

province TEXT,

type TEXT,

score REAL

)

''')

# 获取数据

url = "https://www.shanghairanking.cn/api/pub/v1/bcur?bcur_type=11&year=2021"

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36"

}

response = requests.get(url, headers=headers)

data = response.json()

schools = data['data']['rankings']

# 保存到数据库

for school in schools:

cur.execute('''

INSERT INTO schools (rank, name, province, type, score)

VALUES (?, ?, ?, ?, ?)

''', (

school['ranking'],

school['univNameCn'],

school['province'],

school['univCategory'],

school['score']

))

conn.commit()

# 显示结果

print("排名 学校名称 地区 类型 总分")

print("-" * 50)

cur.execute("SELECT * FROM schools ORDER BY rank")

results = cur.fetchall()

for row in results:

rank, name, province, type, score = row[1], row[2], row[3], row[4], row[5]

print(f"{rank:<4} {name:<12} {province:<6} {type:<6} {score:.1f}")

cur.close()

conn.close()

实验过程

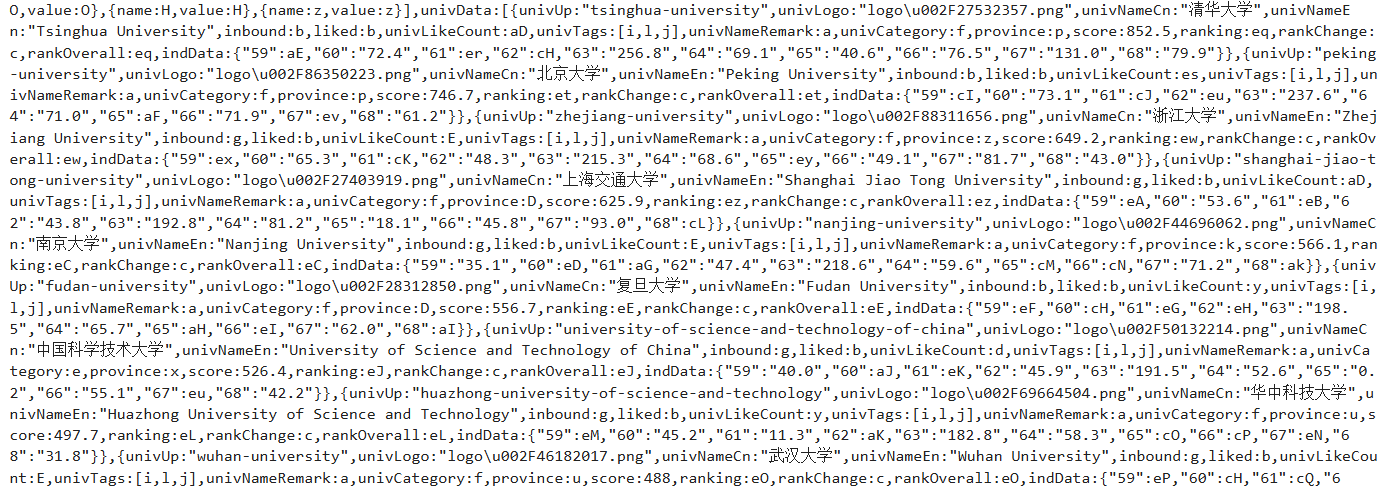

找到我们所需的所有数据在payload.js之下,查看所有数据

import requests

import json

url = "https://www.shanghairanking.cn/_nuxt/static/1761118404/rankings/bcur/2020/payload.js"

response = requests.get(url)

data = response.text

print(data)

查看数据

心得体会

这次任务是要爬取所有大学数据,之前是只做一个页面的,通过检查页面源代码,找到了所有数据存在哪里,解决了问题

浙公网安备 33010602011771号

浙公网安备 33010602011771号