文件实例应用

一,词频统计:

统计一篇文章中的单词数量:

import sys

import re

def countFile(filename,words):

#对 filename 文件进行词频分析,分析结果记在字典 words里

try:

f = open(filename,"r",encoding = "gbk" ) #文件为缺省编码。根据实际情况可以加参数 encoding="utf-8" 或 encoding = "gbk"

except Exception as e:

print(e)

return 0

txt = f.read() #全部文件内容存入字符串txt

f.close()

splitChars = set([]) #分隔串的集合

#下面找出所有文件中非字母的字符,作为分隔串

for c in txt:

if not in ( c >= 'a' and c <= 'z' or c >= 'A' and c <= 'Z'):

splitChars.add(c)

splitStr = "" #用于 re.split的正则表达式

#该正则表达式形式类似于: ",|:| |-" 之类,两个竖线之间的字符串就是分隔符

for c in splitChars:

if c in {'.','?','!','"',"'",'(',')','|','*','$','\\','[',']','^','{','}'}:

#上面这些字符比较特殊,加到splitChars里面的时候要在前面加 "\"

splitStr += "\\" + c + "|" # python字符串里面,\\其实就是 \

else:

splitStr += c + "|"

splitStr += " " # '|'后面必须要有东西,空格多写一遍没关系

lst = re.split(splitStr,txt) #lst是分隔后的单词列表

for x in lst:

if x == "": #两个相邻分隔串之间会分割出来一个空串,不理它

continue

lx = x.lower()

if lx in words:

words[lx] += 1 #如果在字典里,则改词出现次数+1

else:

words[lx] = 1 #如果不在字典里,则将该词加入字典,出现次数设为1

return 1

result = {} #结果字典。格式为 { 'a':2,'about':3 ....}

if countFile(sys.argv[1],result) ==0:# argv[1] 是 源文件,分析结果记在result里面

exit()

lst = list(result.items())

lst.sort() #单词按字典序排序

f = open(sys.argv[2],"w",encoding="gbk") #argv[2] 是结果文件, 文件为缺省编码, "w"表示写入

for x in lst:

f.write("%s\t%d\n" % (x[0],x[1]))

f.close()

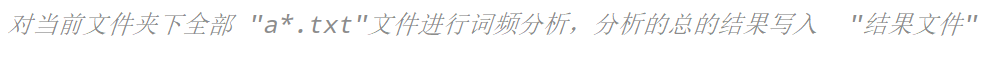

二,词频统计升级:

能够读取多个文件的文本内容,对多个文件进行统计,这里肯定就要用有关文件夹中的内容下面直接上代码把.

import sys

import re

import os

def countFile(filename,words):

#对 filename 文件进行词频分析,分析结果记在词典 words里

try:

f = open(filename,"r",encoding = "gbk" ) #文件为缺省编码。根据实际情况可以加参数 encoding="utf-8" 或 encoding = "gbk"

except Exception as e:

print(e)

return 0

txt = f.read() #全部文件内容存入字符串txt

f.close()

splitChars = set([]) #分隔串的集合

#下面找出所有文件中非字母的字符,作为分隔串

for c in txt:

if not ( c >= 'a' and c <= 'z' or c >= 'A' and c <= 'Z'):

splitChars.add(c)

splitStr = "" #用于 re.split的正则表达式

#该正则表达式形式类似于: ",|:| |-" 之类,两个竖线之间的字符串就是分隔符

for c in splitChars:

if c in {'.','?','!','"',"'",'(',')','|','*','$','\\','[',']','^','{','}'}:

#上面这些字符比较特殊,加到splitChars里面的时候要在前面加 "\"

splitStr += "\\" + c + "|" # python字符串里面,\\其实就是 \

else:

splitStr += c + "|"

splitStr += " " # '|'后面必须要有东西,空格多写一遍没关系

lst = re.split(splitStr,txt) #lst是分隔后的单词列表

for x in lst:

if x == "": #两个相邻分隔串之间会分割出来一个空串,不理它

continue

lx = x.lower()

if lx in words:

words[lx] += 1 #如果在词典里,则改词出现次数+1

else:

words[lx] = 1 #如果不在词典里,则将该词加入词典,出现次数设为1

return 1

result = {} #结果字典

lst = os.listdir() #列出当前文件夹下所有文件和文件夹

for x in lst:

if os.path.isfile(x): #如果x是文件

if x.lower().endswith(".txt") and x.lower().startswith("a"):

#x是 'a'开头, .txt结尾

countFile(x,result)

lst = list(result.items())

lst.sort() #单词按字典序排序

f = open(sys.argv[1],"w",encoding="gbk") #argv[2] 是结果文件, 文件为缺省编码, "w"表示写入

for x in lst:

f.write("%s\t%d\n" % (x[0],x[1]))

f.close()

其实和第一个文件差的就是打开文件夹的步骤

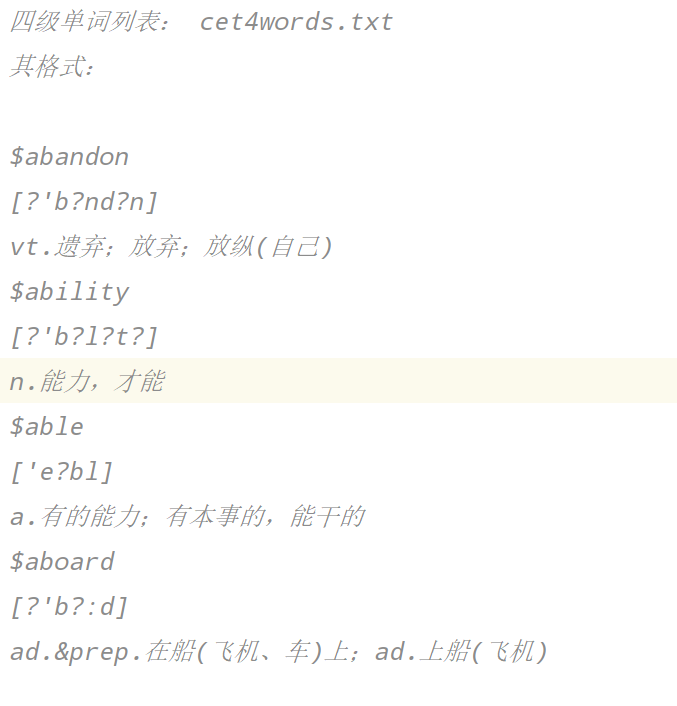

三,词汇统计升级再升级:

1 import sys

2 import re

3 def makeFilterSet():

4 cet4words = set([])

5 f = open("cet4words.txt", "r",encoding="gbk")

6 lines = f.readlines()

7 f.close()

8 for line in lines:

9 line = line.strip()

10 if line == "":

11 continue

12 if line[0] == "$":

13 cet4words.add(line[1:]) # 将四级单词加入 集合

14 return cet4words

15

16 def makeSplitStr(txt):

17 splitChars = set([])

18 #下面找出所有文件中非字母的字符,作为分隔符

19 for c in txt:

20 if not ( c >= 'a' and c <= 'z' or c >= 'A' and c <= 'Z'):

21 splitChars.add(c)

22 splitStr = ""

23 #生成用于 re.split的分隔符字符串

24 for c in splitChars:

25 if c in ['.','?','!','"',"'",'(',')','|','*','$','\\','[',']','^','{','}']:

26 splitStr += "\\" + c + "|"

27 else:

28 splitStr += c + "|"

29 splitStr+=" "

30 return splitStr

31

32 def countFile(filename,filterdict): #词频统计,要去掉在 filterdict集合里的单词

33 words = {}

34 try:

35 f = open(filename,"r",encoding="gbk")

36 except Exception as e:

37 print(e)

38 return 0

39 txt = f.read()

40 f.close()

41 splitStr = makeSplitStr(txt)

42 lst = re.split(splitStr,txt)

43 for x in lst:

44 lx = x.lower()

45 if lx == "" or lx in filterdict: #去掉在 filterdict里的单词

46 continue

47 words[lx] = words.get(lx,0) + 1

48 return words

49

50 result = countFile(sys.argv[1],makeFilterSet())

51 if result != {}:

52 lst = list(result.items())

53 lst.sort()

54 f = open(sys.argv[2],"w",encoding="gbk")

55 for x in lst:

56 f.write("%s\t%d\n" % (x[0],x[1]))

57 f.close()

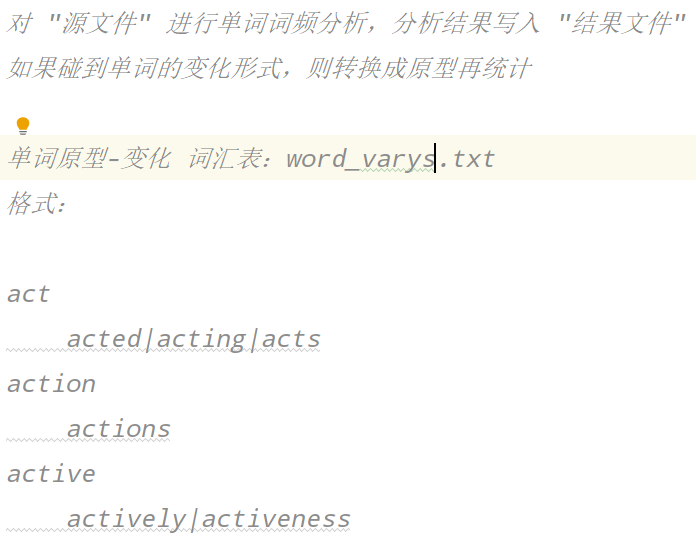

四,词频统计升级升级再升级:

1 import sys

2 import re

3 def makeVaryWordsDict():

4 vary_words = { } #元素形式: 变化形式:原型 例如 {acts:act,acting:act,boys:boy....}

5 f = open("word_varys.txt","r",encoding="gbk")

6 lines = f.readlines()

7 f.close()

8 L = len(lines)

9 for i in range(0,L,2): #每两行是一个单词的原型及变化形式

10 word = lines[i].strip() #单词原型

11 varys = lines[i+1].strip().split("|") #变形

12 for w in varys:

13 vary_words[w] = word #加入 变化形式:原型 , w的原型是 word

14 return vary_words

15

16 def makeSplitStr(txt):

17 splitChars = set([])

18 #下面找出所有文件中非字母的字符,作为分隔符

19 for c in txt:

20 if not ( c >= 'a' and c <= 'z' or c >= 'A' and c <= 'Z'):

21 splitChars.add(c)

22 splitStr = ""

23 #生成用于 re.split的分隔符字符串

24 for c in splitChars:

25 if c in ['.','?','!','"',"'",'(',')','|','*','$','\\','[',']','^','{','}']:

26 splitStr += "\\" + c + "|"

27 else:

28 splitStr += c + "|"

29 splitStr+=" "

30 return splitStr

31

32 def countFile(filename,vary_word_dict):

33 #分析 filename 文件,返回一个词典作为结果。到 vary_word_dict里查单词原型

34 try:

35 f = open(filename,"r",encoding="gbk")

36 except Exception as e:

37 print(e)

38 return None

39 txt = f.read()

40 f.close()

41 splitStr = makeSplitStr(txt)

42 words = {}

43 lst = re.split(splitStr,txt)

44 for x in lst:

45 lx = x.lower()

46 if lx == "":

47 continue

48 if lx in vary_word_dict: #如果在原型词典里能查到原型,就变成原型再统计

49 lx = vary_word_dict[lx]

50 #直接写这句可以替换上面 if 语句 lx = vary_word_dict.get(lx,lx)

51 words[lx] = words.get(lx,0) + 1

52 return words

53

54 result = countFile(sys.argv[1],makeVaryWordsDict())

55 if result != None and result != {}:

56 lst = list(result.items())

57 lst.sort()

58 f = open(sys.argv[2],"w",encoding="gbk")

59 for x in lst:

60 f.write("%s\t%d\n" % (x[0],x[1]))

61 f.close()

浙公网安备 33010602011771号

浙公网安备 33010602011771号