scapy_redis的简单学习

利用redis和scarpy配合可以实现增量式爬虫,其中scrapy_redis尤为重要

1. DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

指纹去重:其功能就是为每一个request返回一个唯一标示本身的指纹,判断和记录是否请求过,予以去重

源码中 request_fingerprint(request, include_headers=None):函数得以实现:

def request_fingerprint(request, include_headers=None): """ Return the request fingerprint. The request fingerprint is a hash that uniquely identifies the resource the request points to. For example, take the following two urls: http://www.example.com/query?id=111&cat=222 http://www.example.com/query?cat=222&id=111 Even though those are two different URLs both point to the same resource and are equivalent (ie. they should return the same response). Another example are cookies used to store session ids. Suppose the following page is only accesible to authenticated users: http://www.example.com/members/offers.html Lot of sites use a cookie to store the session id, which adds a random component to the HTTP Request and thus should be ignored when calculating the fingerprint. For this reason, request headers are ignored by default when calculating the fingeprint. If you want to include specific headers use the include_headers argument, which is a list of Request headers to include. """ if include_headers: include_headers = tuple(to_bytes(h.lower()) for h in sorted(include_headers)) cache = _fingerprint_cache.setdefault(request, {}) if include_headers not in cache: fp = hashlib.sha1() fp.update(to_bytes(request.method)) fp.update(to_bytes(canonicalize_url(request.url))) fp.update(request.body or b'') if include_headers: for hdr in include_headers: if hdr in request.headers: fp.update(hdr) for v in request.headers.getlist(hdr): fp.update(v) cache[include_headers] = fp.hexdigest() return cache[include_headers]

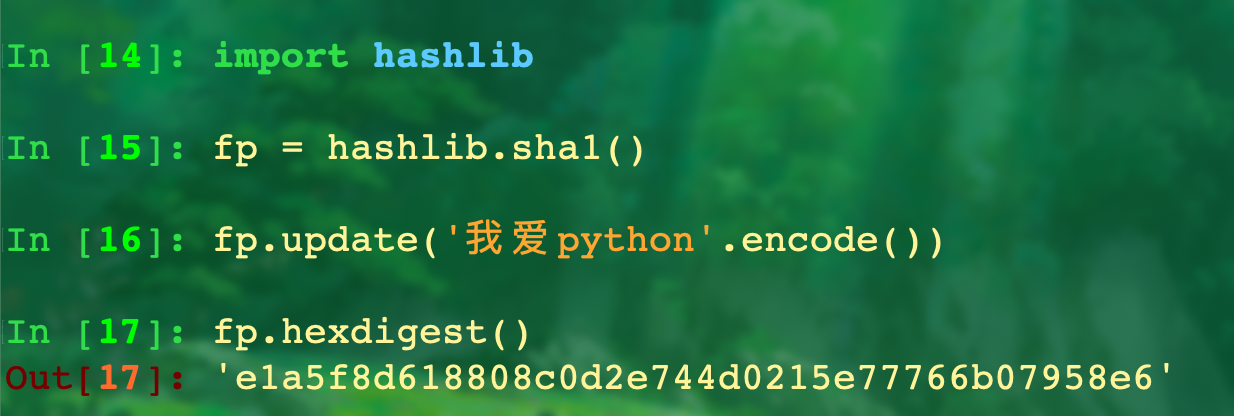

其中为每个reques返回指纹主要利用了安全哈希算法(Secure Hash Algorithm)来实现,简单过程如下:

1.1

得到sha1对象fp

fp.update()传入内容(str无法直接传入,在此之前要encoded)

fp.hexdigest() 将加密后的字符串按16进制输出,fp.digest()是按照二进制进行输出

16进制输出(scrapy_redis选择):

import. hashlib str='精神高原' #str需要转换为字节串 fp = hashlib.sha1(bytes(str,encoding='utf-8')).hexdigesst() print(fp)

二进制输出:

每个request有了指纹接下来就该是判断并实现去重功能了,部分源码调用如下:

def request_seen(self, request): """Returns True if request was already seen. Parameters ---------- request : scrapy.http.Request Returns ------- bool """ fp = self.request_fingerprint(request) # This returns the number of values added, zero if already exists. added = self.server.sadd(self.key, fp) return added == 0

指纹fp存入相对应的spider的key,利用集合判断,如果已经存在就返回zero(反之不然),判断为布尔值,如果在seen存在就返回True。

Redis Sadd 命令将一个或多个成员元素加入到集合中,已经存在于集合的成员元素将被忽略。

假如集合 key 不存在,则创建一个只包含添加的元素作成员的集合。

当集合 key 不是集合类型时,返回一个错误。

其实在原生的scrapy源码dupefilters.py里也可以发现它也是调用相似的方法request_fingerprint(request)来为请求来生成指纹,

但是init方法里是事先创建了一个set,来存放request实现去重,所以在工程停下时set里的内容就会清空,不会存储下来(存储path默认为None),无法增量式爬虫。

class RFPDupeFilter(BaseDupeFilter): """Request Fingerprint duplicates filter""" def __init__(self, path=None, debug=False): self.file = None self.fingerprints = set() self.logdupes = True self.debug = debug self.logger = logging.getLogger(__name__) if path: self.file = open(os.path.join(path, 'requests.seen'), 'a+') self.file.seek(0) self.fingerprints.update(x.rstrip() for x in self.file) @classmethod def from_settings(cls, settings): debug = settings.getbool('DUPEFILTER_DEBUG') return cls(job_dir(settings), debug) def request_seen(self, request): fp = self.request_fingerprint(request) if fp in self.fingerprints: return True self.fingerprints.add(fp) if self.file: self.file.write(fp + os.linesep)#os.linesep代表换行,多个环境可用 def request_fingerprint(self, request): return request_fingerprint(request)

2.SCHEDULER = "scrapy_redis.scheduler.Scheduler"

调度器组件,其主要功能是scheduler的open和close,控制url什么情况下入队列等

相应部分源码如下:

def close(self, reason): if not self.persist: self.flush() def flush(self): self.df.clear() self.queue.clear() def enqueue_request(self, request): if not request.dont_filter and self.df.request_seen(request): self.df.log(request, self.spider) return False if self.stats: self.stats.inc_value('scheduler/enqueued/redis', spider=self.spider) self.queue.push(request) return True

1.如果不持久化,就会对df(指纹)和queue做clear操作

2.判断请求是否入队列

启动工程后redis会生成如下结构:

dd:dupefilter 这是个集合,里面是已经过滤的url(请求),已经爬过的,以16进制存储

dd:items 这是list,是爬取的内容

dd:requests 这是ZSET,里面是请求,以二进制存储

当然这三者里面都是以spidername:keys 格式

3.从而在setting里设置 SCHEDULER_PERSIST = True 实现断点续爬功能

浙公网安备 33010602011771号

浙公网安备 33010602011771号