KubeEdge 1.18.1 Installation and Deployment

1. Prepare the environment (Kubernetes is already installed)

Kubernetes installation reference: https://www.cnblogs.com/breg/p/18502675

| Role | IP |
|---|---|
| master1, node1 | 10.167.47.12 |
| master2, node2 | 10.167.47.24 |
| master3, node3 | 10.167.47.25 |
| edge | 10.167.47.22 |
| VIP (virtual IP) | 10.167.47.86 |

2. Install keadm (adjust the version number as needed; this guide installs 1.18.1)

1. Deploy MetalLB (optional)

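The main purpose is to expose CloudCore's ports on an externally reachable IP; otherwise the edge node cannot join using an IP and port.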

```shell
# If kube-proxy runs in IPVS mode, MetalLB requires strictARP: true in this ConfigMap
kubectl edit configmap -n kube-system kube-proxy
```

```shell
# Deploy MetalLB
kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.13.5/config/manifests/metallb-native.yaml
```

```yaml
# advertise.yaml
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: l2adver
  namespace: metallb-system
spec:
  ipAddressPools: # if omitted, addresses from all IP pools are advertised
    - ip-pool
```

```yaml
# ip-pool.yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: ip-pool
  namespace: metallb-system
spec:
  addresses:
    - 10.167.47.210-10.167.47.215 # set according to the VMs' IP addresses; these IPs can be assigned to Services in Kubernetes
```

```shell
kubectl apply -f advertise.yaml
kubectl apply -f ip-pool.yaml
```
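If you prefer not to edit the kube-proxy ConfigMap interactively, the change MetalLB documents for IPVS mode (`strictARP: true`) can be applied as a filter. This is a minimal sketch, assuming kube-proxy runs in IPVS mode and its ConfigMap currently contains `strictARP: false`; `enable_strict_arp` and `strict_arp_enabled` are hypothetical helper names, not part of MetalLB or kubectl.

```shell
# Hypothetical helpers (not part of MetalLB/kubectl).
# Assumption: kube-proxy runs in IPVS mode, where MetalLB needs strictARP: true.

# Read the kube-proxy ConfigMap YAML on stdin and write it to stdout with
# strictARP switched from false to true; all other lines pass through unchanged.
enable_strict_arp() {
  sed -e 's/strictARP: false/strictARP: true/'
}

# Exit 0 if the YAML on stdin contains strictARP: true, non-zero otherwise.
strict_arp_enabled() {
  grep -q 'strictARP: true'
}
```

Usage, following the pipeline from the MetalLB installation docs: `kubectl get configmap kube-proxy -n kube-system -o yaml | enable_strict_arp | kubectl apply -f - -n kube-system`. Swapping `kubectl apply` for `kubectl diff -f -` shows the change before it is applied.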

2. Deploy CloudCore

```shell
wget https://github.com/kubeedge/kubeedge/releases/download/v1.18.1/keadm-v1.18.1-linux-amd64.tar.gz
tar -zxvf keadm-v1.18.1-linux-amd64.tar.gz   # unpack the keadm tarball
cd keadm-v1.18.1-linux-amd64/keadm/
cp keadm /usr/sbin/   # put keadm on the PATH for convenience

# Initialize; --advertise-address must be an IP from ip-pool that has not been assigned yet
keadm init --advertise-address="10.167.47.210" --kubeedge-version=1.18.1 --set iptablesManager.mode="external"

# Get the token that edge nodes use to join
keadm gettoken
```

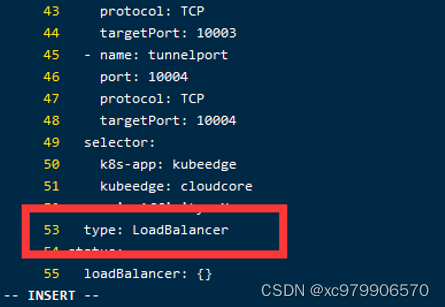
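`--advertise-address` has to be an address from `ip-pool` that MetalLB has not yet handed out. The sketch below only checks the first half of that, namely that the address lies inside the pool's `start-end` range; `ip_to_int` and `pool_contains` are hypothetical helper names, and plain IPv4 without leading zeros is assumed.

```shell
# Hypothetical helpers. Assumption: IPv4 dotted quads without leading zeros.
# Checks range membership only, NOT whether the address is already in use.

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  _old_ifs=$IFS
  IFS=.
  set -- $1   # split "a.b.c.d" into $1..$4
  IFS=$_old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Exit 0 if address $1 lies in the inclusive MetalLB-style range $2 ("start-end").
pool_contains() {
  _addr=$(ip_to_int "$1")
  _start=$(ip_to_int "${2%-*}")   # text before the "-"
  _end=$(ip_to_int "${2#*-}")     # text after the "-"
  [ "$_addr" -ge "$_start" ] && [ "$_addr" -le "$_end" ]
}
```

Usage: `pool_contains 10.167.47.210 10.167.47.210-10.167.47.215 && echo "inside ip-pool"`. To see which pool addresses are already taken, look at the EXTERNAL-IP column of `kubectl get svc -A`.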

3. Modify the cloudcore Service

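Change how the Service is exposed so that clients outside the cluster can connect.

NodePort also works, but then the IP and port mapping used when joining the edge node (edgecore) must be adjusted to match, and so must the `--advertise-address` passed to `keadm init` above.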

```shell
kubectl edit svc cloudcore -n kubeedge   # change spec.type to LoadBalancer (or NodePort)
```
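The same change can be made non-interactively with `kubectl patch`. A sketch, assuming MetalLB serves the LoadBalancer and that CloudCore should be pinned to the address given to `keadm init --advertise-address`; `lb_service_patch` is a hypothetical helper, and `spec.loadBalancerIP` is deprecated upstream in Kubernetes (since 1.24) although MetalLB still honors it.

```shell
# Hypothetical helper: print a merge patch that switches a Service to
# type LoadBalancer and, if an address is given, requests that exact IP.
# Assumption: MetalLB is the LoadBalancer implementation and honors
# spec.loadBalancerIP (deprecated upstream since Kubernetes 1.24).
lb_service_patch() {
  if [ -n "${1:-}" ]; then
    printf '{"spec":{"type":"LoadBalancer","loadBalancerIP":"%s"}}\n' "$1"
  else
    printf '{"spec":{"type":"LoadBalancer"}}\n'
  fi
}
```

Usage: `kubectl -n kubeedge patch svc cloudcore -p "$(lb_service_patch 10.167.47.210)"`, then check the EXTERNAL-IP column of `kubectl -n kubeedge get svc cloudcore`. Pinning the IP keeps the Service address and the advertised address from drifting apart if MetalLB would otherwise pick a different pool address.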

4. Keep DaemonSets off edge nodes (label-based affinity)

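Edge hardware is usually resource-constrained, so patch the DaemonSets' node affinity to keep them off any node carrying the `node-role.kubernetes.io/edge` label (the label KubeEdge edge nodes get when they join).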

```shell
# kube-system
kubectl get daemonset -n kube-system | grep -v NAME | awk '{print $1}' | xargs -n 1 kubectl patch daemonset -n kube-system --type='json' -p='[{"op": "replace","path": "/spec/template/spec/affinity","value":{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"node-role.kubernetes.io/edge","operator":"DoesNotExist"}]}]}}}}]'

# kuboard
kubectl get daemonset -n kuboard | grep -v NAME | awk '{print $1}' | xargs -n 1 kubectl patch daemonset -n kuboard --type='json' -p='[{"op": "replace","path": "/spec/template/spec/affinity","value":{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"node-role.kubernetes.io/edge","operator":"DoesNotExist"}]}]}}}}]'

# metallb-system
kubectl get daemonset -n metallb-system | grep -v NAME | awk '{print $1}' | xargs -n 1 kubectl patch daemonset -n metallb-system --type='json' -p='[{"op": "replace","path": "/spec/template/spec/affinity","value":{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"node-role.kubernetes.io/edge","operator":"DoesNotExist"}]}]}}}}]'
```
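The three commands above differ only in the namespace. A sketch that loops over namespaces with the same JSON patch; `exclude_edge_from_daemonsets` and `EDGE_ANTI_AFFINITY_PATCH` are hypothetical names, and DaemonSet names are read with a jsonpath query instead of stripping the table header with `grep -v NAME`.

```shell
# Hypothetical helper. Same JSON patch as the commands above.
EDGE_ANTI_AFFINITY_PATCH='[{"op": "replace","path": "/spec/template/spec/affinity","value":{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"node-role.kubernetes.io/edge","operator":"DoesNotExist"}]}]}}}}]'

# Patch every DaemonSet in each namespace given as an argument.
exclude_edge_from_daemonsets() {
  for ns in "$@"; do
    # jsonpath prints only the names, space-separated, with no header row
    for ds in $(kubectl get daemonset -n "$ns" -o jsonpath='{.items[*].metadata.name}'); do
      kubectl patch daemonset "$ds" -n "$ns" --type=json -p "$EDGE_ANTI_AFFINITY_PATCH"
    done
  done
}
```

Usage: `exclude_edge_from_daemonsets kube-system kuboard metallb-system`.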

5. Join the edge node

```shell
# Stop and disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux (until the next reboot)
setenforce 0

# Network configuration: enable bridge netfilter and IP forwarding
cat >> /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness=0
EOF

# Apply the rules
modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf

# Check that they took effect
cat /proc/sys/net/bridge/bridge-nf-call-ip6tables
cat /proc/sys/net/bridge/bridge-nf-call-iptables

# Turn off swap
swapoff -a

# Set the hostname (edge side)
hostnamectl set-hostname edge1.kubeedge

# Configure /etc/hosts (example; adjust to your environment)
cat >> /etc/hosts << EOF
10.167.47.22 edge1.kubeedge
EOF

# Synchronize the clock; any reachable NTP server will do
cp /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup
curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
yum clean all && yum makecache
yum install ntpdate -y && timedatectl set-timezone Asia/Shanghai && ntpdate time2.aliyun.com

# Sync daily: add to crontab (crontab -e):
#   0 5 * * * /usr/sbin/ntpdate time2.aliyun.com
# Sync at boot: add to /etc/rc.local (vi /etc/rc.local):
#   ntpdate time2.aliyun.com
```

```shell
# Add the Docker yum repository
yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# or: https://files.cnblogs.com/files/chuanghongmeng/docker-ce.zip?t=1669080259

# Install Docker
yum install docker-ce-20.10.3 -y
mkdir -p /data/docker
mkdir -p /etc/docker/

# Tip: newer kubelet versions recommend systemd, so set Docker's cgroup driver to systemd.
# If /etc/docker does not exist, starting Docker creates it automatically.
cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

# Tip: choose Docker's data directory to suit the server, e.g. under /data.
# In /usr/lib/systemd/system/docker.service set:
#   ExecStart=/usr/bin/dockerd --graph=/data/docker
# (--graph is deprecated; "data-root": "/data/docker" in daemon.json is the equivalent setting.)

# Reload the configuration and restart
systemctl daemon-reload
systemctl restart docker
systemctl enable docker.service

# Regenerate a full default containerd config
# (the config.toml shipped with the containerd.io package disables the CRI plugin)
rm /etc/containerd/config.toml
containerd config default > /etc/containerd/config.toml

# Pull the pause image from a mirror and retag it as registry.k8s.io/pause:3.6
# (check sandbox_image in config.toml for the name your containerd expects)
ctr -n k8s.io images pull -k registry.aliyuncs.com/google_containers/pause:3.6
ctr -n k8s.io images tag registry.aliyuncs.com/google_containers/pause:3.6 registry.k8s.io/pause:3.6
systemctl restart containerd
```

```shell
# Install cri-tools (crictl)
wget --no-check-certificate https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.20.0/crictl-v1.20.0-linux-amd64.tar.gz
tar zxvf crictl-v1.20.0-linux-amd64.tar.gz -C /usr/local/bin

# Install the CNI plugins manually (required since KubeEdge 1.4)
mkdir -p /opt/cni/bin
curl -L https://github.com/containernetworking/plugins/releases/download/v1.5.0/cni-plugins-linux-amd64-v1.5.0.tgz | sudo tar -C /opt/cni/bin -xz

# Configure the CNI network
mkdir -p /etc/cni/net.d
cat <<EOF | sudo tee /etc/cni/net.d/10-containerd-net.conflist
{
  "cniVersion": "0.4.0",
  "name": "containerd-net",
  "plugins": [
    {
      "type": "bridge",
      "bridge": "cni0",
      "isGateway": true,
      "ipMasq": true,
      "ipam": {
        "type": "host-local",
        "ranges": [
          [{"subnet": "10.10.0.0/16"}]
        ],
        "routes": [
          {"dst": "0.0.0.0/0"}
        ]
      }
    },
    {
      "type": "portmap",
      "capabilities": {"portMappings": true}
    }
  ]
}
EOF

# Download nerdctl
curl -LO https://github.com/containerd/nerdctl/releases/download/v1.7.6/nerdctl-1.7.6-linux-amd64.tar.gz

# On the master: copy keadm to the edge node
scp -rp /usr/sbin/keadm 10.167.47.22:/usr/sbin/

# CNI installation, method 2: the install script (use the raw URL, not the GitHub page)
curl -LO https://raw.githubusercontent.com/kubeedge/kubeedge/master/hack/lib/install.sh
# First edit the subnet range in install.sh: { "subnet": "10.10.0.0/16" }
# Then load the script's functions into the current shell
source install.sh
# Install the CNI plugins
install_cni_plugins
```

```shell
# Join. The IP is the LoadBalancer IP; the token comes from keadm gettoken.
# Because this is a virtual IP, ask your ops team to open it in the security group.
keadm join --cloudcore-ipport=10.167.47.210:10000 \
  --token f3245a6f984efb5a81e412566301656701606172e2bd854f5683d1316d3124e6.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE3MzAyNzA1NzF9.dbIxjSNgewTaiRnomeWKM0s4qb7px3mcz3EddcYHShs \
  --edgenode-name=edge1 \
  --kubeedge-version v1.18.1 \
  --remote-runtime-endpoint=unix:///run/containerd/containerd.sock \
  --cgroupdriver=systemd \
  --with-mqtt
```
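The `cat /proc/sys/...` lines above only print values. A sketch that compares each setting written to `/etc/sysctl.d/k8s.conf` with its expected value and exits non-zero if any differs; `check_k8s_sysctls` and its optional root-directory argument (default `/proc/sys`, overridable only so the check can be tested) are assumptions of this sketch.

```shell
# Hypothetical helper: verify the sysctls from /etc/sysctl.d/k8s.conf are active.
# $1 (optional) is the sysctl root directory; defaults to /proc/sys.
check_k8s_sysctls() {
  _root=${1:-/proc/sys}
  _rc=0
  for _entry in net/bridge/bridge-nf-call-iptables=1 \
                net/bridge/bridge-nf-call-ip6tables=1 \
                net/ipv4/ip_forward=1 \
                vm/swappiness=0; do
    _key=${_entry%=*}    # path under the root, e.g. net/ipv4/ip_forward
    _want=${_entry#*=}   # expected value
    _have=$(cat "$_root/$_key" 2>/dev/null)
    if [ "$_have" = "$_want" ]; then
      echo "ok      $_key = $_have"
    else
      echo "NOT OK  $_key = ${_have:-<missing>} (want $_want)" >&2
      _rc=1
    fi
  done
  return $_rc
}
```

Run `check_k8s_sysctls` on the edge node after `sysctl -p`. A missing `net/bridge/...` entry usually means `br_netfilter` is not loaded.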

6. Test the edge node

```yaml
# nginx-deployment.yaml (vi nginx-deployment.yaml, then kubectl apply -f nginx-deployment.yaml)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-metallb
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      nodeName: edge1     # name of the edge node as shown by kubectl get node
      hostNetwork: true   # use the host network; without it the pod is unreachable from other hosts because the edge and cloud CNI networks are separate
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
    - name: http
      port: 80
      targetPort: 80
  type: LoadBalancer
```
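Once the manifest is applied, the test can be scripted: read the external IP MetalLB assigned to `nginx-service` and poll it over HTTP. A sketch; `service_lb_ip` and `probe_http` are hypothetical helpers, and `curl -f` treats any HTTP status of 400 or above as a failure.

```shell
# Hypothetical helpers for testing the nginx deployment.

# Print the external IP assigned to a LoadBalancer Service ($1) in namespace $2.
service_lb_ip() {
  kubectl get svc "$1" -n "${2:-default}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Poll URL $1 up to $2 times (default 10), 2 s apart, until curl gets a
# response with status below 400. Exit 0 on success, 1 after the last try.
probe_http() {
  _url=$1
  _tries=${2:-10}
  _i=0
  while [ "$_i" -lt "$_tries" ]; do
    if curl -fsS -o /dev/null --max-time 5 "$_url"; then
      echo "OK: $_url"
      return 0
    fi
    _i=$((_i + 1))
    sleep 2
  done
  echo "FAILED: $_url" >&2
  return 1
}
```

Usage: `probe_http "http://$(service_lb_ip nginx-service)"`. Because the pod uses `hostNetwork: true`, `probe_http http://10.167.47.22` probes the edge node directly.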

7. Enable monitoring

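Because KubeEdge edge nodes cannot be reached through a kubelet the way ordinary nodes are, monitoring (logs, exec and metrics over the edge stream) has to be enabled explicitly.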

```yaml
# Edge node: vi /etc/kubeedge/config/edgecore.yaml
# Under modules, change edgeStream.enable to true
edgeStream:
  enable: true
  handshakeTimeout: 30
  readDeadline: 15
  server: 192.168.1.1:10004   # replace with CloudCore's address, e.g. 10.167.47.210:10004 in this setup
  tlsTunnelCAFile: /etc/kubeedge/ca/rootCA.crt
  tlsTunnelCertFile: /etc/kubeedge/certs/server.crt
  tlsTunnelPrivateKeyFile: /etc/kubeedge/certs/server.key
  writeDeadline: 15
```

```yaml
# Cloud: kubectl edit cm cloudcore -n kubeedge
# Set featureGates.requireAuthorization=true and modules.dynamicController.enable=true
apiVersion: v1
data:
  cloudcore.yaml: |
    apiVersion: cloudcore.config.kubeedge.io/v1alpha2
    ...
    featureGates:
      requireAuthorization: true
    modules:
      ...
      dynamicController:
        enable: true
```

```yaml
# Edge node: same file, /etc/kubeedge/config/edgecore.yaml
apiVersion: edgecore.config.kubeedge.io/v1alpha2
...
kind: EdgeCore
featureGates:
  requireAuthorization: true
modules:
  ...
  metaServer:
    enable: true
```

```shell
# Edge node: restart EdgeCore
systemctl restart edgecore.service

# Master node: forward the tunnel ports to CloudCore with iptables.
# Warning: the first line flushes every rule in the filter, nat and mangle tables
# on this host and deletes user-defined chains.
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
iptables -t nat -A OUTPUT -p tcp --dport 10350 -j DNAT --to 10.167.47.210:10003
iptables -t nat -A OUTPUT -p tcp --dport 10351 -j DNAT --to 10.167.47.210:10003
iptables -t nat -A OUTPUT -p tcp --dport 10352 -j DNAT --to 10.167.47.210:10003

# Change the tunnel port to 10350
kubectl -n kubeedge edit cm tunnelport
```
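The three DNAT rules above differ only in the destination port. A sketch that prints them for any list of tunnel ports, so they can be reviewed before being piped to `sh` on the master; `stream_dnat_rules` and the `STREAM_PORT` override are hypothetical, and the default 10003 is taken from the rules above.

```shell
# Hypothetical helper: print one idempotent iptables command per tunnel port.
# $1 = CloudCore address; remaining args = tunnel ports.
# STREAM_PORT (assumed name) overrides the target port, default 10003.
stream_dnat_rules() {
  _target=$1
  shift
  for _port in "$@"; do
    _rule="-p tcp --dport $_port -j DNAT --to $_target:${STREAM_PORT:-10003}"
    # -C only checks whether the rule exists; -A runs if it does not
    echo "iptables -t nat -C OUTPUT $_rule 2>/dev/null || iptables -t nat -A OUTPUT $_rule"
  done
}
```

Usage: `stream_dnat_rules 10.167.47.210 10350 10351 10352 | sh`. Checking with `iptables -C` first means running it twice does not stack duplicate DNAT rules the way repeated `-A` calls would.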

8. Install Calico

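```shell
wget https://raw.githubusercontent.com/projectcalico/calico/master/manifests/calico.yaml
```

```yaml
# Edit calico.yaml: keep CLUSTER_TYPE and add IP_AUTODETECTION_METHOD below it
- name: CLUSTER_TYPE
  value: "k8s,bgp"
# New entry: set the interface to the appropriate NIC
- name: IP_AUTODETECTION_METHOD
  value: "interface=eth0"
```

```shell
kubectl apply -f calico.yaml

# Firewall settings; otherwise the Calico controller may report errors on port 443
iptables -P INPUT ACCEPT
iptables -P FORWARD ACCEPT
iptables -F
```

The edge node also needs a route to the cloud-side container router; otherwise containers cannot reach the edge node:

```shell
route add -net 10.10.0.0/16 gw 100.12.0.1
# iproute2 equivalent: ip route add 10.10.0.0/16 via 100.12.0.1
```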
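The manual `calico.yaml` edit above can be scripted with `awk`. A sketch, assuming the manifest lists `- name: CLUSTER_TYPE` followed by its `value:` line and that `IP_AUTODETECTION_METHOD` is not already set; `add_ip_autodetect` is a hypothetical helper.

```shell
# Hypothetical helper: read calico.yaml on stdin and insert an
# IP_AUTODETECTION_METHOD env entry right after CLUSTER_TYPE's value line,
# reusing CLUSTER_TYPE's indentation. $1 = interface name (default eth0).
add_ip_autodetect() {
  awk -v iface="${1:-eth0}" '
    { print }
    /- name: CLUSTER_TYPE/ {
      indent = substr($0, 1, index($0, "-") - 1)   # whitespace before "- name"
      pending = 1
      next
    }
    pending && /value:/ {
      print indent "- name: IP_AUTODETECTION_METHOD"
      print indent "  value: \"interface=" iface "\""
      pending = 0
    }
  '
}
```

Usage: `add_ip_autodetect eth0 < calico.yaml > calico-edited.yaml`, review the result, then `kubectl apply -f calico-edited.yaml`. Running it twice on the same file would add the entry twice, so apply it to a fresh download.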

浙公网安备 33010602011771号
