application was killed.

Issue

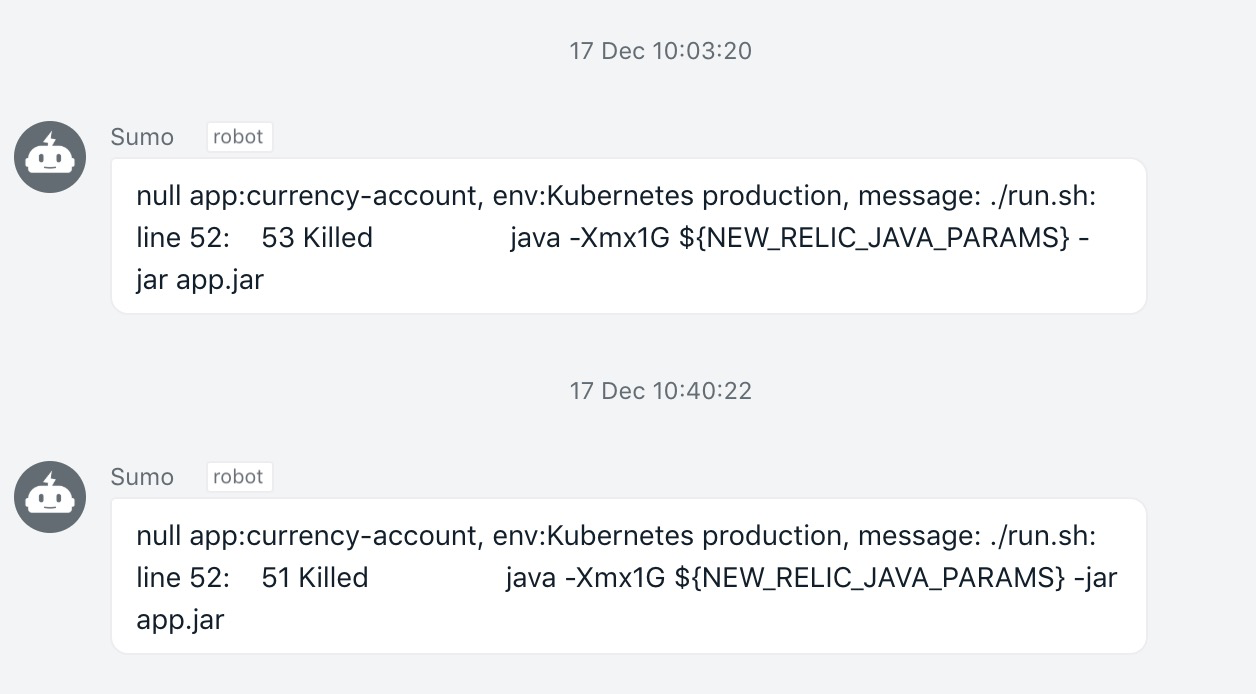

Got a curccency-account application alert from sumo logic as following:

./run.sh: line 52: 53 Killed java -Xmx1G ${NEW_RELIC_JAVA_PARAMS} -jar app.jar

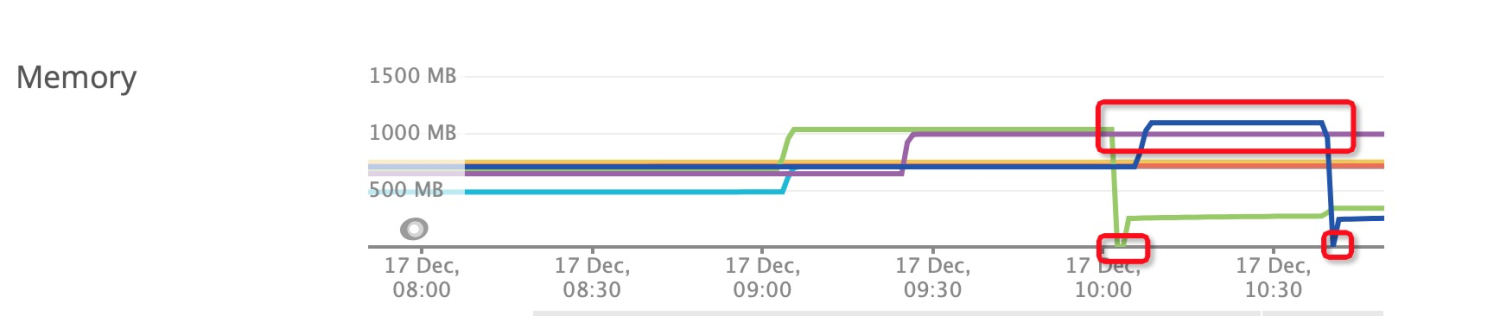

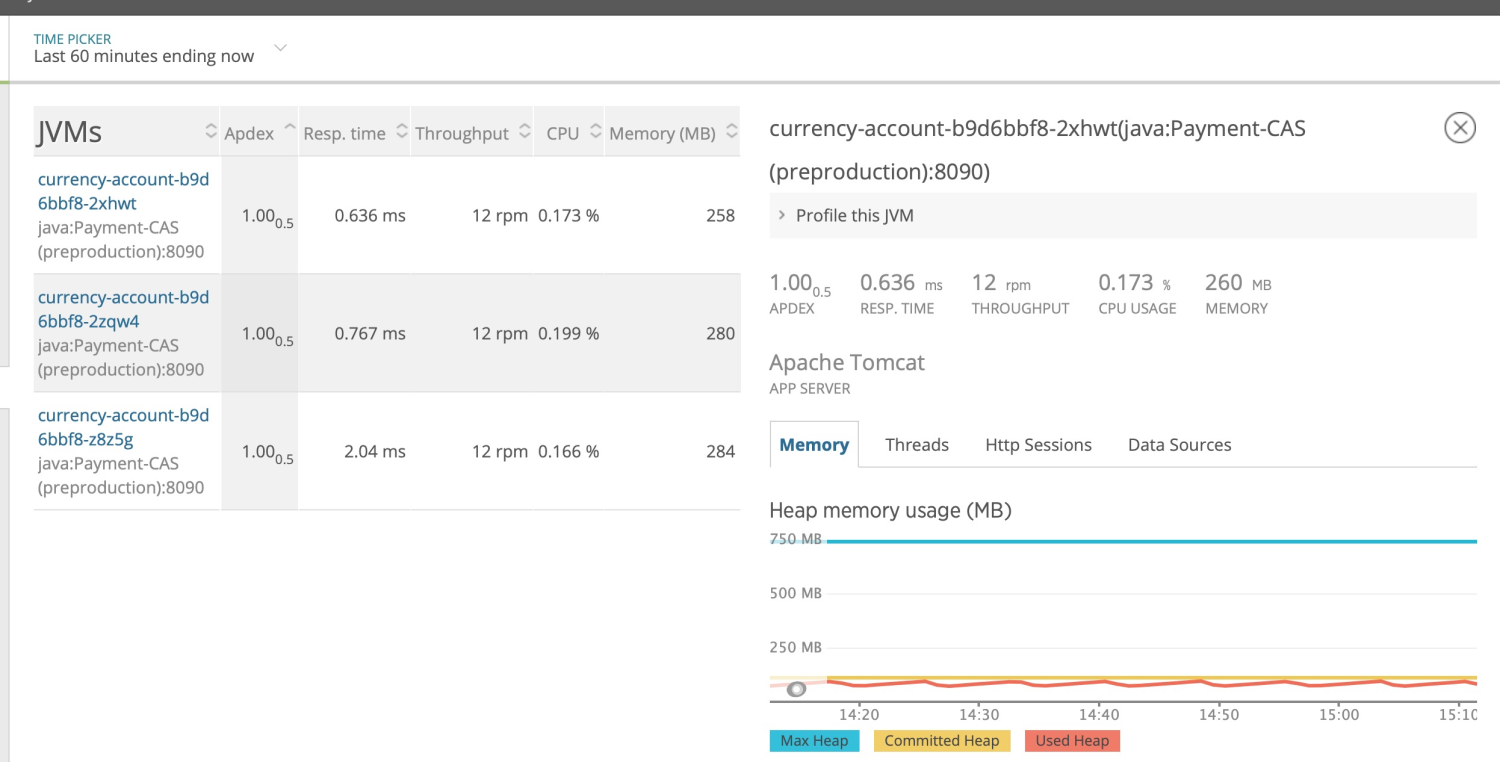

use new relic container memory graph of curccency-account application, and found that the process will be killed after memory usage over 1G .

https://rpm.newrelic.com/accounts/2391851/applications/195064602#tab_hosts=breakout

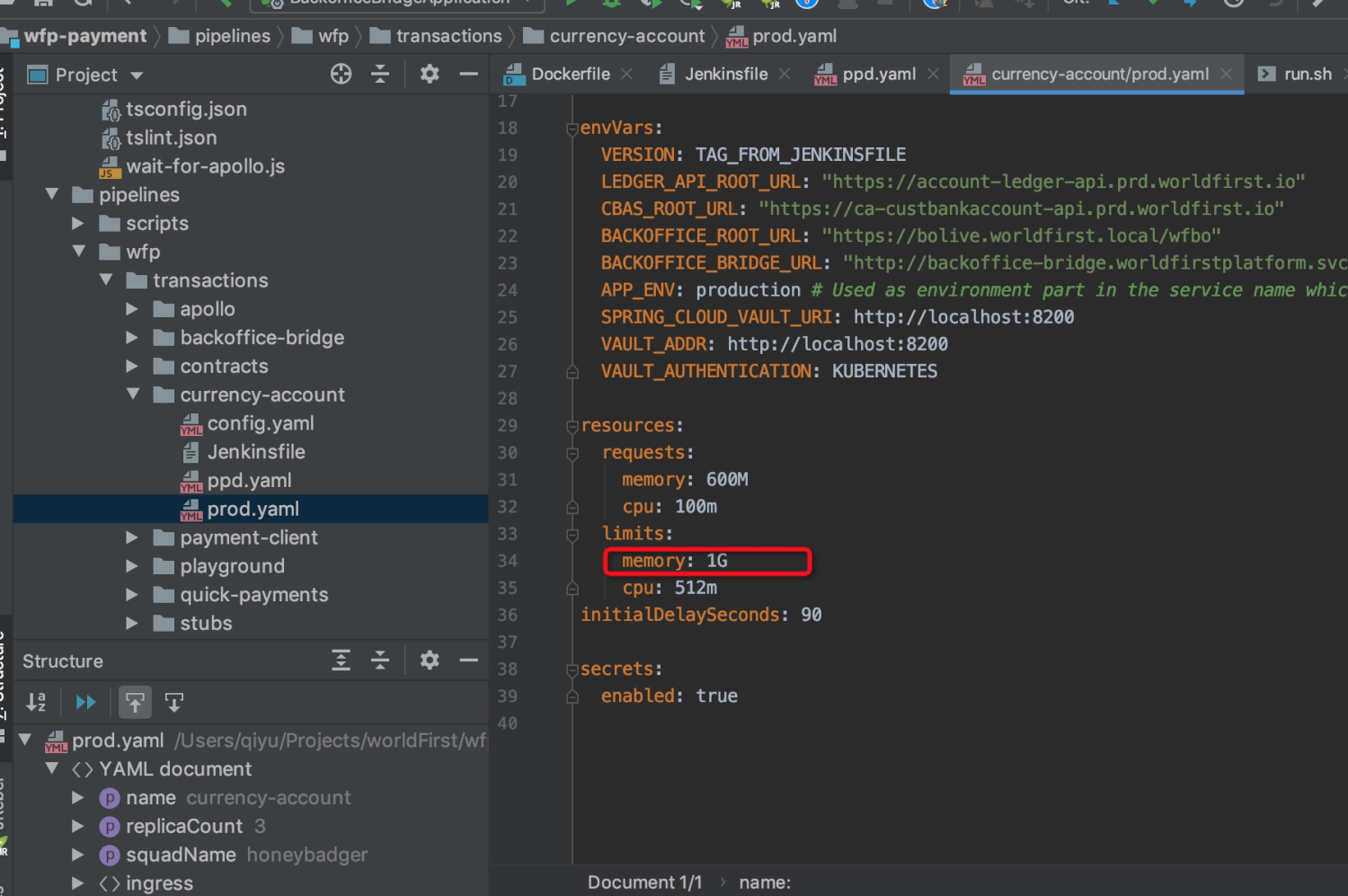

In the cerrencyAccount application start script run.sh specify the max heap memory size 1G

java -Xmx1G ${NEW_RELIC_JAVA_PARAMS} -jar app.jar

docker memory is specified to 1G as well:

Reason

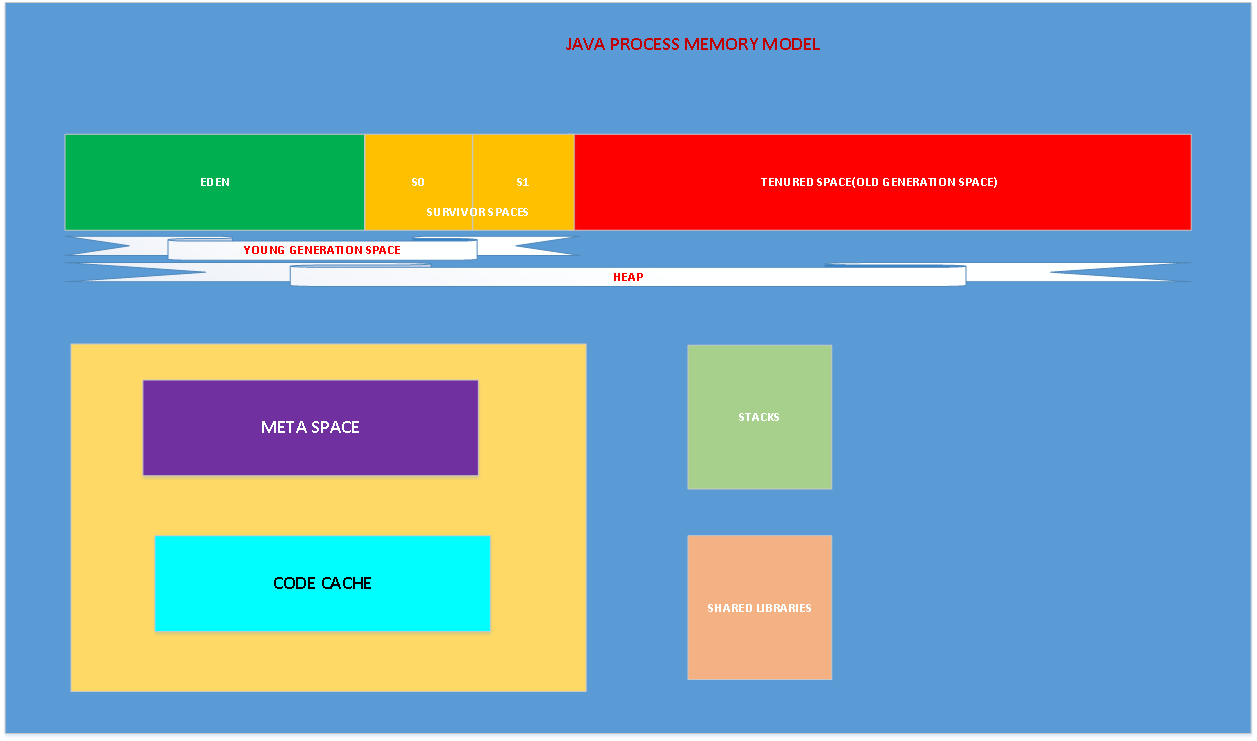

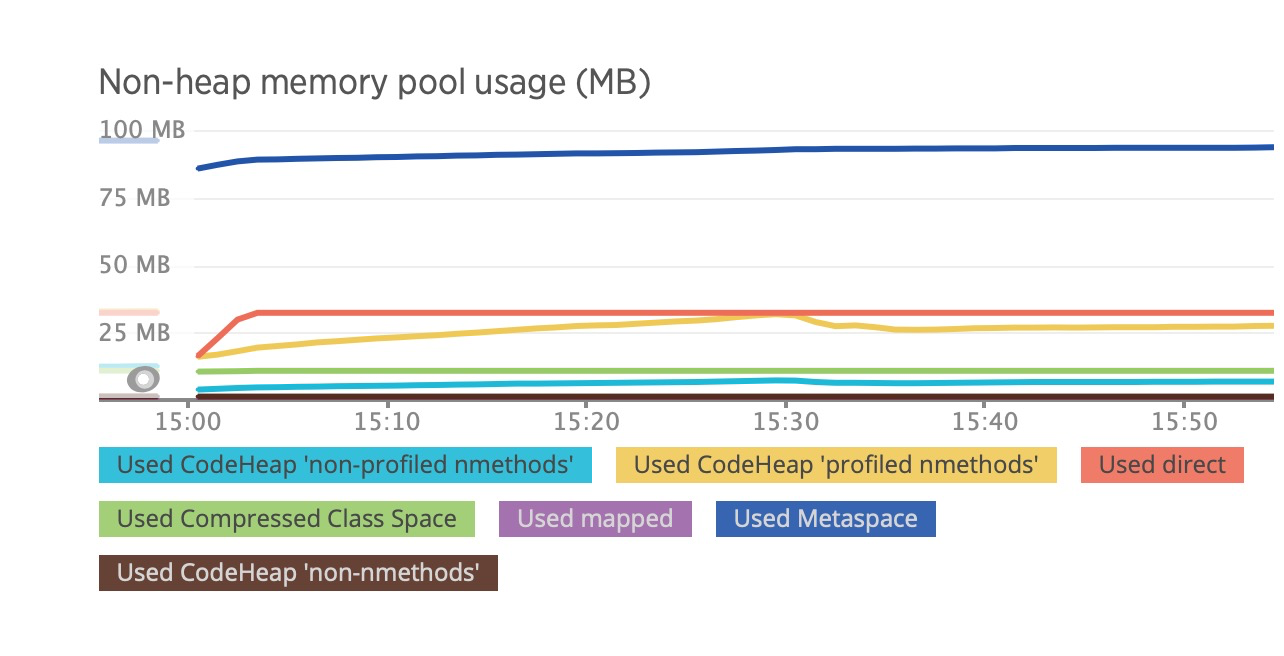

The problem is that Docker container memory limit is only 1G and the the Java max heap memory size also set to 1G. The JVM actually needs non-heap memoryaccording to the java memory model then it needs more than 1G.

Docker will then take action into its own hands and kill the process inside the container if too much memory is used! The Java process is ‘Killed’. This is not what we want…

Impact

- the Java process crash periodically, most of the WorldFirst K8S Java applications might be involved, we need to notify them to check and fix.

- Users probably get error sometime because the apllication crash will cause, It's not good user experience.

Solution

To fix this you will also need to specify to Java there is a maximum memory limit. In older Java versions (before 8u131) you needed to specify this inside your container by setting -Xmx flags to limit the heap size lower ( might be 3/4 of Docker memory). But this feels wrong, you'd rather not want to define these limits twice, nor do you want to define this ‘inside’ your container.

Luckily there are better ways to fix this now. From Java 9 onwards (and from 8u131+ onwards, backported) there are flags added to the JVM:

-XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap

These flags will force the JVM to look at the Linux cgroup configuration. This is where Docker containers specify their maximum memory settings. Now, if your application reaches the limit set by Docker (500MB), the JVM will see this limit. It’ll try to GC. If it still runs out of memory the JVM will do what it is supposed to do, throw an OutOfMemoryException. Basically this allows the JVM to ‘see’ the limit that has been set by Docker.

From Java 10 onwards these experimental flags are the new default and are enabled using the -XX:+UseContainerSupport flag (you can disable this behaviour by providing -XX:-UseContainerSupport).

From OpenJDK(Versions 8, 10 and 11) UseContainerSupport is now turned on by default , https://github.com/AdoptOpenJDK/openjdk-docker/pull/121, -XX:MinRAMPercentage=50 -XX:MaxRAMPercentage=80 should be specified.

Solution Test

If you run your Java application in a Linux container the JVM will automatically detect the Control Group memory limit with the UseContainerSupport option. You can then control the memory with the following options, InitialRAMPercentage, MaxRAMPercentage and MinRAMPercentage. As you can see we have percentage instead of fraction, which is nice and much more useful.

Let’s look at the default values for the java11

docker run -m 1GB openjdk:11-jdk-slim java \

> -XX:+PrintFlagsFinal -version \

> | grep -E "UseContainerSupport | InitialRAMPercentage | MaxRAMPercentage | MinRAMPercentage"

WARNING: Your kernel does not support swap limit capabilities or the cgroup is not mounted. Memory limited without swap.

double InitialRAMPercentage = 1.562500 {product} {default}

double MaxRAMPercentage = 25.000000 {product} {default}

double MinRAMPercentage = 50.000000 {product} {default}

bool UseContainerSupport = true {product} {default}

openjdk version "11.0.5" 2019-10-15

OpenJDK Runtime Environment 18.9 (build 11.0.5+10)

OpenJDK 64-Bit Server VM 18.9 (build 11.0.5+10, mixed mode)

We can see that UseContainerSupport is activated by default. It looks like MaxRAMPercentage is 25% and MinRAMPercentage is 50%. Let’s see what heap size is calculated when we give the container 1GB of memory.

docker run -m 1GB openjdk:11-jdk-slim java \

> -XshowSettings:vm \

> -version

WARNING: Your kernel does not support swap limit capabilities or the cgroup is not mounted. Memory limited without swap.

VM settings:

Max. Heap Size (Estimated): 247.50M

Using VM: OpenJDK 64-Bit Server VM

openjdk version "11.0.5" 2019-10-15

OpenJDK Runtime Environment 18.9 (build 11.0.5+10)

OpenJDK 64-Bit Server VM 18.9 (build 11.0.5+10, mixed mode)

The JVM calculated 247.50M when we have 1GB available and it’s because the default value for MaxRAMPercentage is 25%. Why they decided to use 25% is a mystery for me and MinRAMPercentage is 50%. Let’s bump up RAMPercentage a bit

docker run -m 1GB openjdk:11-jdk-slim java \

> -XX:MinRAMPercentage=50 \

> -XX:MaxRAMPercentage=80 \

> -XshowSettings:vm \

> -version

WARNING: Your kernel does not support swap limit capabilities or the cgroup is not mounted. Memory limited without swap.

VM settings:

Max. Heap Size (Estimated): 792.69M

Using VM: OpenJDK 64-Bit Server VM

openjdk version "11.0.5" 2019-10-15

OpenJDK Runtime Environment 18.9 (build 11.0.5+10)

OpenJDK 64-Bit Server VM 18.9 (build 11.0.5+10, mixed mode)

That is much better and with this configuration we managed to control our JVM to start at 500MB and then grow to maximum 792.69MB

https://rpm.newrelic.com/accounts/2391850/applications/195063831/instances

浙公网安备 33010602011771号

浙公网安备 33010602011771号