Kubernetes Pod and Node Affinity

1. Node affinity

[root@xksmaster1 basic]# kubectl explain pods.spec.affinity
KIND:     Pod
VERSION:  v1

RESOURCE: affinity <Object>

DESCRIPTION:
     If specified, the pod's scheduling constraints

     Affinity is a group of affinity scheduling rules.

FIELDS:
   nodeAffinity <Object>
     Describes node affinity scheduling rules for the pod.

   podAffinity <Object>
     Describes pod affinity scheduling rules (e.g. co-locate this pod in the
     same node, zone, etc. as some other pod(s)).

   podAntiAffinity <Object>
     Describes pod anti-affinity scheduling rules (e.g. avoid putting this pod
     in the same node, zone, etc. as some other pod(s)).

[root@xksmaster1 basic]# kubectl explain pods.spec.affinity.nodeAffinity
KIND:     Pod
VERSION:  v1

RESOURCE: nodeAffinity <Object>

DESCRIPTION:
     Describes node affinity scheduling rules for the pod.

     Node affinity is a group of node affinity scheduling rules.

FIELDS:
   preferredDuringSchedulingIgnoredDuringExecution <[]Object>
     The scheduler will prefer to schedule pods to nodes that satisfy the
     affinity expressions specified by this field, but it may choose a node
     that violates one or more of the expressions. The node that is most
     preferred is the one with the greatest sum of weights, i.e. for each node
     that meets all of the scheduling requirements (resource request,
     requiredDuringScheduling affinity expressions, etc.), compute a sum by
     iterating through the elements of this field and adding "weight" to the
     sum if the node matches the corresponding matchExpressions; the node(s)
     with the highest sum are the most preferred.

   requiredDuringSchedulingIgnoredDuringExecution <Object>
     If the affinity requirements specified by this field are not met at
     scheduling time, the pod will not be scheduled onto the node. If the
     affinity requirements specified by this field cease to be met at some
     point during pod execution (e.g. due to an update), the system may or may
     not try to eventually evict the pod from its node.

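preferred: the scheduler tries to place the pod on a node that satisfies the affinity defined here, but this is not a mandatory condition. This is soft affinity.

required: a node must satisfy the affinity defined here. This is a hard condition, i.e. hard affinity.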

Example 1: hard affinity with requiredDuringSchedulingIgnoredDuringExecution (pod-nodeaffinity-demo.yaml):

apiVersion: v1
kind: Pod
metadata:
  name: pod-node-affinity-demo
  namespace: default
  labels:
    app: myapp
    tier: frontend
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    imagePullPolicy: Never
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: zone
            operator: In
            values:
            - foo
            - bar

The scheduler checks whether any node carries the label zone with the value foo or bar. If such a node exists, the pod can be scheduled onto it.
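The matching rule above can be sketched in Python. This is a simplified model, not kube-scheduler's actual code: the entries of nodeSelectorTerms are ORed, the expressions inside one term are ANDed, only the In, NotIn, Exists and DoesNotExist operators are modeled, node labels are plain dicts, and the function names are hypothetical.

```python
from typing import Dict, List

Labels = Dict[str, str]
Expr = Dict[str, object]  # {"key": ..., "operator": ..., "values": [...]}


def expr_matches(labels: Labels, expr: Expr) -> bool:
    """Evaluate one matchExpressions entry against a node's labels."""
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not modeled in this sketch: {op}")


def node_matches_required(labels: Labels, node_selector_terms: List[List[Expr]]) -> bool:
    """Terms are ORed; the expressions inside a single term are ANDed."""
    return any(all(expr_matches(labels, e) for e in term) for term in node_selector_terms)


# The rule from pod-nodeaffinity-demo.yaml: zone In [foo, bar]
terms = [[{"key": "zone", "operator": "In", "values": ["foo", "bar"]}]]

print(node_matches_required({"kubernetes.io/hostname": "xksnode2"}, terms))  # False
print(node_matches_required({"kubernetes.io/hostname": "xksnode2", "zone": "foo"}, terms))  # True
```

In terms of the walkthrough that follows: before xksnode2 is labeled zone=foo no node passes this check, so the pod stays Pending; after labeling, xksnode2 passes.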

[root@xksmaster1 ~]# kubectl apply -f pod-nodeaffinity-demo.yaml

[root@xksmaster1 ~]# kubectl get pods -o wide | grep pod-node

pod-node-affinity-demo 0/1 Pending 0 <none>

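The STATUS is Pending, which means scheduling has not completed: no node has a zone label whose value is foo or bar, and because hard affinity is used, the condition must be met before the pod can be scheduled.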

[root@xksmaster1 ~]# kubectl label nodes xksnode2 zone=foo

Label the node xksnode2 with zone=foo, then check again:

[root@xksmaster1 ~]# kubectl get pods -o wide

The output is:

pod-node-affinity-demo 1/1 Running 0 xksnode2

Example 2: soft affinity with preferredDuringSchedulingIgnoredDuringExecution

[root@xksmaster1 basic]# cat pod-nodeaffinity-demo-2.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-node-affinity-demo-2
  namespace: default
  labels:
    app: myapp
    tier: frontend
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - preference:
          matchExpressions:
          - key: zone1
            operator: In
            values:
            - foo1
            - bar1
        weight: 60

[root@xksmaster1 ~]# kubectl apply -f pod-nodeaffinity-demo-2.yaml

[root@xksmaster1 ~]# kubectl get pods -o wide |grep demo-2

pod-node-affinity-demo-2 1/1 Running 0 xksnode2

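This shows that with soft affinity the pod still runs, even though no node defines the zone1 label.

In short, node affinity describes the relationship between a pod and nodes: the conditions a node must (or should) match when the pod is scheduled onto it.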
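The scoring rule quoted from kubectl explain (for each node that already meets the hard requirements, add each preference's weight when the node matches it; the highest sum wins) can be sketched in Python. Assumptions: only the In operator is modeled, each preference is a hypothetical (weight, key, values) tuple rather than the real API object, and the helper names are made up; the real scheduler also combines this score with other scoring plugins.

```python
from typing import Dict, List, Tuple

Labels = Dict[str, str]
# (weight, key, allowed values): a simplified preference using only the In operator
Preference = Tuple[int, str, List[str]]


def preference_score(labels: Labels, preferences: List[Preference]) -> int:
    """Sum the weights of all preferences this node matches."""
    return sum(w for w, key, values in preferences if labels.get(key) in values)


def pick_node(nodes: Dict[str, Labels], preferences: List[Preference]) -> str:
    """Return a highest-scoring node; soft affinity ranks nodes but never removes them."""
    return max(nodes, key=lambda name: preference_score(nodes[name], preferences))


# The preference from pod-nodeaffinity-demo-2.yaml
preferences = [(60, "zone1", ["foo1", "bar1"])]

# Node labels at this point of the article: nobody has zone1
nodes = {"xksnode1": {}, "xksnode2": {"zone": "foo"}}
print({n: preference_score(l, preferences) for n, l in nodes.items()})
# {'xksnode1': 0, 'xksnode2': 0}

# Hypothetical labels, for illustration only: xksnode1 now matches the preference
nodes_labeled = {"xksnode1": {"zone1": "foo1"}, "xksnode2": {"zone": "foo"}}
print(pick_node(nodes_labeled, preferences))  # xksnode1
```

When every node scores 0, the pod is still placed somewhere; in this sketch the tie is broken by dict order, which is only an artifact of the model.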

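2. Pod affinity

A pod's own affinity scheduling takes two forms.

podAffinity: pods prefer to stay close together. Related pods are placed in nearby locations, such as the same zone or the same rack, which makes communication between them more efficient. For example, if a cluster of 1000 hosts is spread across two data centers, we would want nginx and tomcat deployed on nodes in the same location to improve communication efficiency.

podAntiAffinity: pods prefer to stay apart. When two independent applications are deployed, anti-affinity keeps them away from each other so that they do not affect one another.

The first pod lands on whichever node the scheduler picks, and it then serves as the reference for deciding whether later pods may run in that node's location; this is pod affinity. That raises a question: which nodes count as the same location and which do not? Before defining pod affinity we need a criterion for location. If the node name is the criterion, nodes with the same name are the same location and nodes with different names are different locations, so every node is its own location (this is what topologyKey: kubernetes.io/hostname expresses in the examples below).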

requiredDuringSchedulingIgnoredDuringExecution: hard affinity

preferredDuringSchedulingIgnoredDuringExecution: soft affinity

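topologyKey: the key of the location topology. This field is required. How is "the same location" decided? Suppose the nodes carry labels such as:

rack=rack1

row=row1

With topologyKey: rack, nodes that have the same value for the rack key are in the same location.

With topologyKey: row, nodes that have the same value for the row key are in the same location.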
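How topologyKey turns node labels into "locations" (topology domains) can be sketched in Python. The node names and label values below are hypothetical, and skipping nodes that lack the key is a simplification of this sketch.

```python
from collections import defaultdict
from typing import Dict, List


def topology_domains(nodes: Dict[str, Dict[str, str]], topology_key: str) -> Dict[str, List[str]]:
    """Group node names by the value of topology_key; nodes lacking the key are left out."""
    domains: Dict[str, List[str]] = defaultdict(list)
    for name, labels in nodes.items():
        if topology_key in labels:
            domains[labels[topology_key]].append(name)
    return dict(domains)


# Hypothetical nodes and labels, for illustration only
nodes = {
    "node-a": {"rack": "rack1", "row": "row1"},
    "node-b": {"rack": "rack2", "row": "row1"},
    "node-c": {"rack": "rack2", "row": "row2"},
}

print(topology_domains(nodes, "rack"))  # {'rack1': ['node-a'], 'rack2': ['node-b', 'node-c']}
print(topology_domains(nodes, "row"))   # {'row1': ['node-a', 'node-b'], 'row2': ['node-c']}
```

The same three nodes form different locations depending on the key: node-a and node-b share a location under row but not under rack.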

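labelSelector: to decide which pods this pod has affinity with, we rely on labelSelector, which selects a group of pods that serve as the affinity targets.

namespaces: labelSelector selects a group of pods, but in which namespace? That is specified with namespaces.

If namespaces is not specified, the namespace of the pod being created is used.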
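How labelSelector and namespaces together pick the affinity targets can be sketched in Python. Assumptions: a single In expression is the only selector modeled (it is the only one used in the pod examples here), pods are plain dicts, only the namespaces field is considered, and the function names and the pod named other in namespace dev are hypothetical.

```python
from typing import Dict, List, Optional


def selector_matches(pod_labels: Dict[str, str], key: str, values: List[str]) -> bool:
    """A single 'key In values' matchExpression."""
    return pod_labels.get(key) in values


def affinity_targets(
    pods: List[dict],
    key: str,
    values: List[str],
    own_namespace: str,
    namespaces: Optional[List[str]] = None,
) -> List[str]:
    """Names of pods selected by the term; with no namespaces given, only own_namespace is searched."""
    scope = namespaces if namespaces else [own_namespace]
    return [
        p["name"]
        for p in pods
        if p["namespace"] in scope and selector_matches(p["labels"], key, values)
    ]


# Hypothetical pods: the same label exists in two namespaces
pods = [
    {"name": "pod-first", "namespace": "default", "labels": {"app2": "myapp2"}},
    {"name": "other", "namespace": "dev", "labels": {"app2": "myapp2"}},
]

print(affinity_targets(pods, "app2", ["myapp2"], own_namespace="default"))
# ['pod-first']
print(affinity_targets(pods, "app2", ["myapp2"], "default", ["default", "dev"]))
# ['pod-first', 'other']
```

Leaving namespaces empty confines the search to the new pod's own namespace, so a matching pod elsewhere is ignored.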

Example 1: pod affinity

Define two pods: the first pod is the reference, and the second pod follows it.

[root@xksmaster1 basic]# cat pod-required-affinity-demo.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-first
  labels:
    app2: myapp2
    tier: frontend
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-second
  labels:
    app: backend
    tier: db
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    imagePullPolicy: IfNotPresent
    command: ["sh","-c","sleep 3600"]
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - {key: app2, operator: In, values: ["myapp2"]}
        topologyKey: kubernetes.io/hostname

The manifest above says that pod-second must be placed on the same node as a pod labeled app2=myapp2.

[root@xksmaster1 ~]# kubectl apply -f pod-required-affinity-demo.yaml

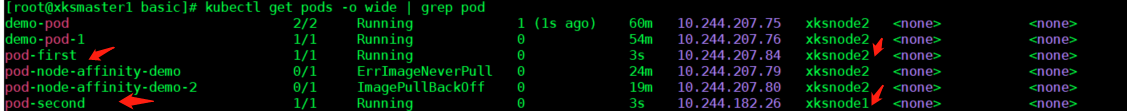

Running kubectl get pods -o wide shows:

pod-first Running xksnode1

pod-second Running xksnode1

This shows that wherever the first pod is scheduled, the second pod is scheduled to the same node. This is pod affinity.
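The required podAffinity check in this example can be sketched as a node filter in Python. Assumptions: a simplified model (not kube-scheduler code), a single In expression, node labels and running pods as plain dicts, and a hypothetical function name; the placement of pod-first on xksnode1 is taken from the output above.

```python
from typing import Dict, List

Labels = Dict[str, str]


def pod_affinity_ok(node: str, nodes: Dict[str, Labels], running: Dict[str, List[Labels]],
                    key: str, values: List[str], topology_key: str) -> bool:
    """True if some pod matching `key In values` runs in the same topology domain as `node`."""
    domain = nodes[node].get(topology_key)
    if domain is None:
        return False  # a node without the topology label cannot satisfy the rule
    for other, labels in nodes.items():
        if labels.get(topology_key) == domain:
            if any(p.get(key) in values for p in running.get(other, [])):
                return True
    return False


nodes = {
    "xksnode1": {"kubernetes.io/hostname": "xksnode1"},
    "xksnode2": {"kubernetes.io/hostname": "xksnode2"},
}
# pod-first (app2=myapp2) already runs on xksnode1, as in the output above
running = {"xksnode1": [{"app2": "myapp2", "tier": "frontend"}], "xksnode2": []}

feasible = [n for n in nodes
            if pod_affinity_ok(n, nodes, running, "app2", ["myapp2"], "kubernetes.io/hostname")]
print(feasible)  # ['xksnode1']
```

Because kubernetes.io/hostname is unique per node, each node is its own domain, so only the node hosting pod-first remains.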

[root@xksmaster1 ~]# kubectl delete -f pod-required-affinity-demo.yaml

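Note: on xksmaster1 you can list the node labels, including kubernetes.io/hostname, which the topologyKey above refers to: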

[root@xksmaster1 ~]# kubectl get nodes --show-labels

Example 2: pod anti-affinity

Define two pods: the first pod is the reference, and the second pod is scheduled to a different node from it.

[root@xksmaster1 basic]# cat pod-required-anti-affinity-demo.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-first
  labels:
    app1: myapp1
    tier: frontend
spec:
  containers:
  - name: myapp
    image: busybox:1.28
    imagePullPolicy: IfNotPresent
    command: ["sh","-c","sleep 3600"]
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-second
  labels:
    app: backend
    tier: db
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    imagePullPolicy: IfNotPresent
    command: ["sh","-c","sleep 3600"]
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - {key: app1, operator: In, values: ["myapp1"]}
        topologyKey: kubernetes.io/hostname
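The output of this example is not shown here; the required podAntiAffinity check it relies on can be sketched as the inverse of the affinity filter. Assumptions: a simplified model with a single In expression, a hypothetical function name, and a hypothetical placement of pod-first on xksnode1; treating a node without the topology label as conflict-free is also a simplification of this sketch.

```python
from typing import Dict, List

Labels = Dict[str, str]


def anti_affinity_ok(node: str, nodes: Dict[str, Labels], running: Dict[str, List[Labels]],
                     key: str, values: List[str], topology_key: str) -> bool:
    """False if a pod matching `key In values` runs in the same topology domain as `node`."""
    domain = nodes[node].get(topology_key)
    if domain is None:
        return True  # simplification: a node with no domain has nothing to conflict with
    same_domain = [n for n, labels in nodes.items() if labels.get(topology_key) == domain]
    return not any(p.get(key) in values for n in same_domain for p in running.get(n, []))


nodes = {
    "xksnode1": {"kubernetes.io/hostname": "xksnode1"},
    "xksnode2": {"kubernetes.io/hostname": "xksnode2"},
}
# Hypothetical placement: assume pod-first (app1=myapp1) landed on xksnode1
running = {"xksnode1": [{"app1": "myapp1", "tier": "frontend"}], "xksnode2": []}

feasible = [n for n in nodes
            if anti_affinity_ok(n, nodes, running, "app1", ["myapp1"], "kubernetes.io/hostname")]
print(feasible)  # ['xksnode2']
```

Under that assumption pod-second can only go to the node that does not host pod-first.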

Example 3: using a different topologyKey

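Give xksnode1 the same zone=foo label that xksnode2 received earlier, so that both nodes are in the same zone (--overwrite is required if xksnode1 already has a zone label with a different value):

[root@xksmaster1 ~]# kubectl label nodes xksnode1 zone=foo

[root@xksmaster1 ~]# kubectl label nodes xksnode1 zone=foo --overwrite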

[root@xksmaster1 basic]# cat pod-first-required-anti-affinity-demo-1.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-first
  labels:
    app3: myapp3
    tier: frontend
spec:
  containers:
  - name: myapp
    image: busybox:1.28
    imagePullPolicy: IfNotPresent
    command: ["sh","-c","sleep 3600"]

[root@xksmaster1 basic]# cat pod-second-required-anti-affinity-demo-1.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-second
  labels:
    app: backend
    tier: db
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    imagePullPolicy: IfNotPresent
    command: ["sh","-c","sleep 3600"]
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - {key: app3, operator: In, values: ["myapp3"]}
        topologyKey: zone

[root@xksmaster1 affinity]# kubectl apply -f pod-first-required-anti-affinity-demo-1.yaml

[root@xksmaster1 affinity]# kubectl apply -f pod-second-required-anti-affinity-demo-1.yaml

[root@xksmaster1 ~]# kubectl get pods -o wide

The output is:

pod-first Running xksnode1

pod-second Pending <none>

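The second pod is Pending. Both nodes carry zone=foo, so they are the same location, and no node in a different location is left. Because anti-affinity is required, the pod stays Pending. If required is changed to preferred in the anti-affinity rule, the pod will run.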
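Why this pod stays Pending with required, and why preferred lets it run, can be sketched in Python. In the API the preferred form wraps the same term in a podAffinityTerm next to a weight; the weight 100 below is hypothetical, and modeling preferred anti-affinity as "subtract the weight from a node's score when a matching pod shares its domain" is a simplification, not the scheduler's exact scoring.

```python
from typing import List

nodes = {"xksnode1": {"zone": "foo"}, "xksnode2": {"zone": "foo"}}
# pod-first (app3=myapp3) runs on xksnode1, as in the output above
running = {"xksnode1": [{"app3": "myapp3", "tier": "frontend"}], "xksnode2": []}


def domain_has_match(node: str, key: str, values: List[str], topology_key: str) -> bool:
    """True if a pod matching `key In values` runs anywhere in node's topology domain."""
    domain = nodes[node].get(topology_key)
    return domain is not None and any(
        p.get(key) in values
        for other, labels in nodes.items()
        if labels.get(topology_key) == domain
        for p in running.get(other, [])
    )


# required: nodes whose domain holds a matching pod are filtered out entirely
required_feasible = [n for n in nodes if not domain_has_match(n, "app3", ["myapp3"], "zone")]
print(required_feasible)  # [] -> pod-second stays Pending

# preferred (hypothetical weight 100): such nodes only lose score
WEIGHT = 100
scores = {n: -WEIGHT if domain_has_match(n, "app3", ["myapp3"], "zone") else 0 for n in nodes}
print(scores)  # {'xksnode1': -100, 'xksnode2': -100}
print(max(scores, key=scores.get))  # xksnode1, only because ties follow dict order here
```

With required, the single zone foo contains pod-first, so every node is rejected; with preferred, every node is penalized equally and the pod is still placed.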

podAffinity: pod affinity, i.e. which pods a pod prefers to be placed with

nodeAffinity: node affinity, i.e. which nodes a pod prefers to be placed on

浙公网安备 33010602011771号
