Kubernetes Rook + Ceph

1. Introduction

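Persistent storage for containers

Persistent storage is how a container's state is preserved. A storage plugin mounts a remote volume, backed by the network or some other mechanism, into the container, so that files created inside the container are actually stored on a remote storage server, or distributed across multiple nodes, with no binding to the current host. As a result, whichever host a new container starts on, it can request that the specified persistent volume be mounted and access the data stored in it.

Because Kubernetes is loosely coupled by design, most storage projects, such as Ceph, GlusterFS, and NFS, can provide persistent storage for Kubernetes.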

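The Ceph distributed storage system

Ceph is a highly scalable distributed storage solution that provides object, file, and block storage. Each storage node holds the filesystem in which Ceph stores its objects and runs a Ceph OSD (Object Storage Daemon) process. A Ceph cluster also runs Ceph MON (monitor) daemons, which keep the Ceph cluster highly available.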

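Rook

Rook is an open-source cloud-native storage orchestrator. It provides the platform, framework, and support that a variety of storage solutions need to integrate natively with cloud-native environments.

Rook turns storage software into self-managing, self-scaling, and self-healing storage services. It does so by automating deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management.

Rook relies on the facilities of the underlying cloud-native container management, scheduling, and orchestration platform to do its work. At the time of writing, Rook supports Ceph, NFS, Minio Object Store, and CockroachDB.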

2. Installation

Reference: https://rook.io/docs/rook/v1.4/ceph-quickstart.html

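2.1 Preparing the cluster environment

The cluster needs at least three available nodes to meet Ceph's high-availability requirements.

How Rook uses storage

By default Rook uses all devices on all nodes: the Rook operator automatically starts OSDs on every node. Rook monitors the nodes and discovers usable devices according to the following criteria:

- The device has no partitions.
- The device has no formatted filesystem.

Rook does not use devices that fail these criteria. The configuration file can also be edited to specify which nodes or devices are used.

Attach three new raw disks to the chosen nodes. The disks need no further preparation; just make sure they are attached to the nodes.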
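One way to check the two criteria above on a node is to inspect `lsblk` output: a usable disk has no child partitions and an empty FSTYPE. The following is a minimal sketch, not Rook's actual discovery logic. The helper name `is_raw_device` and the sample text are made up for illustration; the helper reads `lsblk -P -o NAME,TYPE,FSTYPE,PKNAME` output from stdin.

```shell
# is_raw_device DEV (hypothetical helper): reads `lsblk -P -o NAME,TYPE,FSTYPE,PKNAME`
# output on stdin and succeeds only if DEV is a disk with an empty FSTYPE
# and no partition lists DEV as its parent (PKNAME).
is_raw_device() {
  local dev="$1" line found=1
  while IFS= read -r line; do
    case "$line" in
      *"PKNAME=\"$dev\""*) return 1 ;;                        # DEV has a child partition
      "NAME=\"$dev\" TYPE=\"disk\" FSTYPE=\"\""*) found=0 ;;  # disk without a filesystem
    esac
  done
  return "$found"
}

# Made-up sample: sda carries an ext4 partition, sdb is an untouched disk.
sample='NAME="sda" TYPE="disk" FSTYPE="" PKNAME=""
NAME="sda1" TYPE="part" FSTYPE="ext4" PKNAME="sda"
NAME="sdb" TYPE="disk" FSTYPE="" PKNAME=""'

printf '%s\n' "$sample" | is_raw_device sdb && echo "sdb looks usable"

# On a real node:
#   lsblk -P -o NAME,TYPE,FSTYPE,PKNAME | is_raw_device sdb
```

With the sample input, `sdb` passes the check while `sda` is rejected because `sda1` names it as parent.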

2.2 Deploying Rook + Ceph

    git clone --single-branch --branch v1.4.6 https://github.com/rook/rook.git
    cd rook/cluster/examples/kubernetes/ceph
    kubectl create -f common.yaml
    kubectl create -f operator.yaml

Edit the cluster.yaml file:

    #################################################################################################################
    # Define the settings for the rook-ceph cluster with common settings for a production cluster.
    # All nodes with available raw devices will be used for the Ceph cluster. At least three nodes are required
    # in this example. See the documentation for more details on storage settings available.

    # For example, to create the cluster:
    #   kubectl create -f common.yaml
    #   kubectl create -f operator.yaml
    #   kubectl create -f cluster.yaml
    #################################################################################################################

    apiVersion: ceph.rook.io/v1
    kind: CephCluster
    metadata:
      name: rook-ceph
      namespace: rook-ceph
    spec:
      cephVersion:
        # The container image used to launch the Ceph daemon pods (mon, mgr, osd, mds, rgw).
        # v13 is mimic, v14 is nautilus, and v15 is octopus.
        # RECOMMENDATION: In production, use a specific version tag instead of the general v14 flag, which pulls the latest release and could result in different
        # versions running within the cluster. See tags available at https://hub.docker.com/r/ceph/ceph/tags/.
        # If you want to be more precise, you can always use a timestamp tag such ceph/ceph:v15.2.4-20200630
        # This tag might not contain a new Ceph version, just security fixes from the underlying operating system, which will reduce vulnerabilities
        image: ceph/ceph:v15.2.4
        # Whether to allow unsupported versions of Ceph. Currently `nautilus` and `octopus` are supported.
        # Future versions such as `pacific` would require this to be set to `true`.
        # Do not set to true in production.
        allowUnsupported: false
      # The path on the host where configuration files will be persisted. Must be specified.
      # Important: if you reinstall the cluster, make sure you delete this directory from each host or else the mons will fail to start on the new cluster.
      # In Minikube, the '/data' directory is configured to persist across reboots. Use "/data/rook" in Minikube environment.
      dataDirHostPath: /var/lib/rook
      # Whether or not upgrade should continue even if a check fails
      # This means Ceph's status could be degraded and we don't recommend upgrading but you might decide otherwise
      # Use at your OWN risk
      # To understand Rook's upgrade process of Ceph, read https://rook.io/docs/rook/master/ceph-upgrade.html#ceph-version-upgrades
      skipUpgradeChecks: false
      # Whether or not continue if PGs are not clean during an upgrade
      continueUpgradeAfterChecksEvenIfNotHealthy: false
      # set the amount of mons to be started
      mon:
        count: 1
        allowMultiplePerNode: false
      #mgr:
      #  modules:
        # Several modules should not need to be included in this list. The "dashboard" and "monitoring" modules
        # are already enabled by other settings in the cluster CR and the "rook" module is always enabled.
        # - name: pg_autoscaler
        #   enabled: true
      # enable the ceph dashboard for viewing cluster status
      dashboard:
        enabled: true
        # serve the dashboard under a subpath (useful when you are accessing the dashboard via a reverse proxy)
        # urlPrefix: /ceph-dashboard
        # serve the dashboard at the given port.
        # port: 8443
        # serve the dashboard using SSL
        ssl: true
      # enable prometheus alerting for cluster
      monitoring:
        # requires Prometheus to be pre-installed
        enabled: false
        # namespace to deploy prometheusRule in. If empty, namespace of the cluster will be used.
        # Recommended:
        # If you have a single rook-ceph cluster, set the rulesNamespace to the same namespace as the cluster or keep it empty.
        # If you have multiple rook-ceph clusters in the same k8s cluster, choose the same namespace (ideally, namespace with prometheus
        # deployed) to set rulesNamespace for all the clusters. Otherwise, you will get duplicate alerts with multiple alert definitions.
        rulesNamespace: rook-ceph
      network:
        # enable host networking
        #provider: host
        # EXPERIMENTAL: enable the Multus network provider
        #provider: multus
        #selectors:
          # The selector keys are required to be `public` and `cluster`.
          # Based on the configuration, the operator will do the following:
          #   1. if only the `public` selector key is specified both public_network and cluster_network Ceph settings will listen on that interface
          #   2. if both `public` and `cluster` selector keys are specified the first one will point to 'public_network' flag and the second one to 'cluster_network'
          #
          # In order to work, each selector value must match a NetworkAttachmentDefinition object in Multus
          #
          #public: public-conf --> NetworkAttachmentDefinition object name in Multus
          #cluster: cluster-conf --> NetworkAttachmentDefinition object name in Multus
        # Provide internet protocol version. IPv6, IPv4 or empty string are valid options. Empty string would mean IPv4
        #ipFamily: "IPv6"
      # enable the crash collector for ceph daemon crash collection
      crashCollector:
        disable: false
      cleanupPolicy:
        # cleanup should only be added to the cluster when the cluster is about to be deleted.
        # After any field of the cleanup policy is set, Rook will stop configuring the cluster as if the cluster is about
        # to be destroyed in order to prevent these settings from being deployed unintentionally.
        # To signify that automatic deletion is desired, use the value "yes-really-destroy-data". Only this and an empty
        # string are valid values for this field.
        confirmation: ""
        # sanitizeDisks represents settings for sanitizing OSD disks on cluster deletion
        sanitizeDisks:
          # method indicates if the entire disk should be sanitized or simply ceph's metadata
          # in both case, re-install is possible
          # possible choices are 'complete' or 'quick' (default)
          method: quick
          # dataSource indicate where to get random bytes from to write on the disk
          # possible choices are 'zero' (default) or 'random'
          # using random sources will consume entropy from the system and will take much more time then the zero source
          dataSource: zero
          # iteration overwrite N times instead of the default (1)
          # takes an integer value
          iteration: 1
        # allowUninstallWithVolumes defines how the uninstall should be performed
        # If set to true, cephCluster deletion does not wait for the PVs to be deleted.
        allowUninstallWithVolumes: false
      # To control where various services will be scheduled by kubernetes, use the placement configuration sections below.
      # The example under 'all' would have all services scheduled on kubernetes nodes labeled with 'role=storage-node' and
      # tolerate taints with a key of 'storage-node'.
    #  placement:
    #    all:
    #      nodeAffinity:
    #        requiredDuringSchedulingIgnoredDuringExecution:
    #          nodeSelectorTerms:
    #          - matchExpressions:
    #            - key: role
    #              operator: In
    #              values:
    #              - storage-node
    #      podAffinity:
    #      podAntiAffinity:
    #      topologySpreadConstraints:
    #      tolerations:
    #      - key: storage-node
    #        operator: Exists
    # The above placement information can also be specified for mon, osd, and mgr components
    #    mon:
    # Monitor deployments may contain an anti-affinity rule for avoiding monitor
    # collocation on the same node. This is a required rule when host network is used
    # or when AllowMultiplePerNode is false. Otherwise this anti-affinity rule is a
    # preferred rule with weight: 50.
    #    osd:
    #    mgr:
    #    cleanup:
      annotations:
    #    all:
    #    mon:
    #    osd:
    #    cleanup:
    #    prepareosd:
    # If no mgr annotations are set, prometheus scrape annotations will be set by default.
    #    mgr:
      labels:
    #    all:
    #    mon:
    #    osd:
    #    cleanup:
    #    mgr:
    #    prepareosd:
      resources:
    # The requests and limits set here, allow the mgr pod to use half of one CPU core and 1 gigabyte of memory
    #    mgr:
    #      limits:
    #        cpu: "500m"
    #        memory: "1024Mi"
    #      requests:
    #        cpu: "500m"
    #        memory: "1024Mi"
    # The above example requests/limits can also be added to the mon and osd components
    #    mon:
    #    osd:
    #    prepareosd:
    #    crashcollector:
    #    cleanup:
      # The option to automatically remove OSDs that are out and are safe to destroy.
      removeOSDsIfOutAndSafeToRemove: false
    #  priorityClassNames:
    #    all: rook-ceph-default-priority-class
    #    mon: rook-ceph-mon-priority-class
    #    osd: rook-ceph-osd-priority-class
    #    mgr: rook-ceph-mgr-priority-class
      storage: # cluster level storage configuration and selection
        useAllNodes: false
        useAllDevices: false
        #deviceFilter:
        config:
          # metadataDevice: "md0" # specify a non-rotational storage so ceph-volume will use it as block db device of bluestore.
          # databaseSizeMB: "1024" # uncomment if the disks are smaller than 100 GB
          # journalSizeMB: "1024"  # uncomment if the disks are 20 GB or smaller
          # osdsPerDevice: "1" # this value can be overridden at the node or device level
          # encryptedDevice: "true" # the default value for this option is "false"
        # Individual nodes and their config can be specified as well, but 'useAllNodes' above must be set to false. Then, only the named
        # nodes below will be used as storage resources. Each node's 'name' field should match their 'kubernetes.io/hostname' label.
        nodes:
        - name: "k8s-master1"
          devices: # specific devices to use for storage can be specified for each node
          - name: "sdb"
        - name: "k8s-master3"
          devices:
          - name: "sdb"
        - name: "node01"
          devices:
          - name: "sdb"
      # The section for configuring management of daemon disruptions during upgrade or fencing.
      disruptionManagement:
        # If true, the operator will create and manage PodDisruptionBudgets for OSD, Mon, RGW, and MDS daemons. OSD PDBs are managed dynamically
        # via the strategy outlined in the [design](https://github.com/rook/rook/blob/master/design/ceph/ceph-managed-disruptionbudgets.md). The operator will
        # block eviction of OSDs by default and unblock them safely when drains are detected.
        managePodBudgets: false
        # A duration in minutes that determines how long an entire failureDomain like `region/zone/host` will be held in `noout` (in addition to the
        # default DOWN/OUT interval) when it is draining. This is only relevant when `managePodBudgets` is `true`. The default value is `30` minutes.
        osdMaintenanceTimeout: 30
        # If true, the operator will create and manage MachineDisruptionBudgets to ensure OSDs are only fenced when the cluster is healthy.
        # Only available on OpenShift.
        manageMachineDisruptionBudgets: false
        # Namespace in which to watch for the MachineDisruptionBudgets.
        machineDisruptionBudgetNamespace: openshift-machine-api

      # healthChecks
      # Valid values for daemons are 'mon', 'osd', 'status'
      healthCheck:
        daemonHealth:
          mon:
            disabled: false
            interval: 45s
          osd:
            disabled: false
            interval: 60s
          status:
            disabled: false
            interval: 60s
        # Change pod liveness probe, it works for all mon,mgr,osd daemons
        livenessProbe:
          mon:
            disabled: false
          mgr:
            disabled: false
          osd:
            disabled: false

      # Do not use every node for storage
      storage: # cluster level storage configuration and selection
        useAllNodes: false
        useAllDevices: false
        # Nodes used for storage, each with its added raw disk; 'name' is taken from the node's 'kubernetes.io/hostname' label
        nodes:
        - name: "k8s-master1"
          devices: # specific devices to use for storage can be specified for each node
          - name: "sdb"
        - name: "k8s-master3"
          devices:
          - name: "sdb"
        - name: "node01"
          devices:
          - name: "sdb"
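Because each `name` under `nodes` has to match that node's `kubernetes.io/hostname` label, it helps to list the label values before editing the file. A minimal sketch: the helper name `list_node_hostnames` is made up for illustration, and `-L` simply adds the label as an extra column to `kubectl get nodes`.

```shell
# Show every node together with the value of its kubernetes.io/hostname label.
# list_node_hostnames is a hypothetical helper name.
list_node_hostnames() {
  kubectl get nodes -L kubernetes.io/hostname
}

# Usage (requires access to the cluster):
#   list_node_hostnames
```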

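Node affinity can also be used to choose the nodes on which the components are deployed.

Create the cluster:

    kubectl create -f cluster.yaml

Once the deployment finishes, the pods look like this: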

    # kubectl get pods -n rook-ceph
    NAME                                                    READY   STATUS      RESTARTS   AGE
    csi-cephfsplugin-5w6bd                                  3/3     Running     0          35m
    csi-cephfsplugin-ffg2g                                  3/3     Running     0          35m
    csi-cephfsplugin-m9c5p                                  3/3     Running     0          35m
    csi-cephfsplugin-provisioner-5c65b94c8d-mzq7c           6/6     Running     9          35m
    csi-cephfsplugin-provisioner-5c65b94c8d-sk292           6/6     Running     2          35m
    csi-cephfsplugin-xbr9d                                  3/3     Running     0          35m
    csi-rbdplugin-mlvf2                                     3/3     Running     0          35m
    csi-rbdplugin-provisioner-569c75558-fbxxg               6/6     Running     0          35m
    csi-rbdplugin-provisioner-569c75558-r5rtz               6/6     Running     10         35m
    csi-rbdplugin-qjc56                                     3/3     Running     0          35m
    csi-rbdplugin-tssf8                                     3/3     Running     0          35m
    csi-rbdplugin-x8hfs                                     3/3     Running     0          35m
    rook-ceph-crashcollector-k8s-master1-69f6c6484b-xgv8m   1/1     Running     1          17h
    rook-ceph-crashcollector-k8s-master2-845f75b57f-x7kb7   1/1     Running     1          10h
    rook-ceph-crashcollector-k8s-master3-78f56b85dd-8n7x2   1/1     Running     1          17h
    rook-ceph-crashcollector-node01-dccc44cf5-8tx7d         1/1     Running     1          17h
    rook-ceph-mgr-a-6758f456d-qv4tz                         1/1     Running     1          11h
    rook-ceph-mon-a-775b697f6-ghrnz                         1/1     Running     43         11h
    rook-ceph-operator-69d45cb679-2g7vf                     1/1     Running     2          18h
    rook-ceph-osd-0-6fcdc8b979-z8zrc                        1/1     Running     3          17h
    rook-ceph-osd-1-856bc66fb8-v2lpn                        1/1     Running     3          17h
    rook-ceph-osd-2-94ffbf9b4-bmhqr                         1/1     Running     3          17h
    rook-ceph-osd-prepare-k8s-master1-f8bct                 0/1     Completed   0          9m43s
    rook-ceph-osd-prepare-k8s-master3-x8xn2                 0/1     Completed   0          9m25s
    rook-ceph-osd-prepare-node01-dmppz                      0/1     Completed   0          9m22s
    rook-discover-5xgpz                                     1/1     Running     1          18h
    rook-discover-6mkgh                                     1/1     Running     1          18h
    rook-discover-7czrl                                     1/1     Running     2          18h
    rook-discover-cg6fb                                     1/1     Running     2          18h
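In a listing this long, a small filter makes it easier to spot pods that are neither `Running` nor `Completed`. The sketch below parses `kubectl get pods --no-headers` output from stdin. The helper name `unhealthy_pods` and the third sample row are made up for illustration; the first two rows are taken from the listing above.

```shell
# Reads `kubectl get pods --no-headers` output on stdin and prints the names of
# pods whose STATUS column (3rd field) is neither Running nor Completed.
unhealthy_pods() {
  awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

# Sample input: two rows from the listing above plus one made-up failing pod.
sample='rook-ceph-mgr-a-6758f456d-qv4tz 1/1 Running 1 11h
rook-ceph-osd-prepare-node01-dmppz 0/1 Completed 0 9m22s
example-failing-pod 0/1 CrashLoopBackOff 7 12m'

printf '%s\n' "$sample" | unhealthy_pods   # prints: example-failing-pod

# On a real cluster:
#   kubectl -n rook-ceph get pods --no-headers | unhealthy_pods
```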

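The deployment creates the following resources:

- namespace: rook-ceph; every pod belonging to the Ceph cluster is created in this namespace
- serviceaccount: ServiceAccount resources used by the pods of the Ceph cluster
- role & rolebinding: RBAC resources that control access
- cluster: rook-ceph, the Ceph cluster that was created

The deployed Ceph cluster consists of:

- Ceph Monitors: three ceph-mon daemons are started by default; the number is configurable in cluster.yaml (the file above sets `count: 1`)
- Ceph Mgr: one is started by default; configurable in cluster.yaml
- Ceph OSDs: started according to the settings in cluster.yaml; by default on every available node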

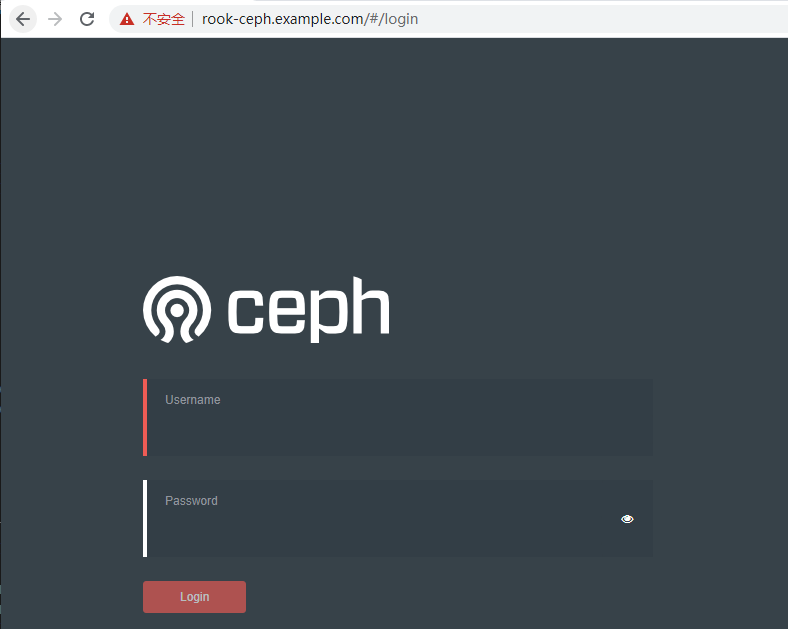

3. Dashboard

Expose the dashboard through an Ingress (this assumes an ingress controller is already deployed). Without an ingress controller, a NodePort Service can be used instead.

    # pwd
    /data/rook/rook/cluster/examples/kubernetes/ceph
    # kubectl create -f dashboard-ingress-https.yaml

Check the Service and the Ingress:

    # kubectl get svc -n rook-ceph | grep dashboard
    rook-ceph-mgr-dashboard   ClusterIP   10.108.53.200   <none>   8443/TCP   18h
    # kubectl get ingress -n rook-ceph
    Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress
    NAME                      CLASS    HOSTS                   ADDRESS         PORTS     AGE
    rook-ceph-mgr-dashboard   <none>   rook-ceph.example.com   10.101.32.165   80, 443   18h

Retrieve the dashboard password:

    kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo
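Where no ingress controller is available, a NodePort Service is one way to reach the dashboard from outside the cluster. The sketch below only writes such a manifest to a temporary file. The Service name and file path are made up for illustration, and the selector labels `app: rook-ceph-mgr` and `rook_cluster: rook-ceph` are assumptions about how the mgr pod is labeled; `kubectl -n rook-ceph get pod --show-labels` shows the actual labels. Port 8443 matches the `rook-ceph-mgr-dashboard` Service listed above.

```shell
# Write a NodePort Service manifest for the dashboard to a temporary file.
# Assumptions: the Service name is hypothetical, and the selector labels are
# assumed to match the mgr pod; verify them before applying.
manifest="${TMPDIR:-/tmp}/dashboard-nodeport.yaml"
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: rook-ceph-mgr-dashboard-nodeport
  namespace: rook-ceph
spec:
  type: NodePort
  selector:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
  ports:
  - name: dashboard
    port: 8443
    targetPort: 8443
    protocol: TCP
EOF
echo "wrote $manifest"

# Then, against a real cluster:
#   kubectl create -f "$manifest"
#   kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard-nodeport
```

The second command shows the node port that Kubernetes assigned; the dashboard should then be reachable over HTTPS on that port of any node.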

4. Installing the Toolbox

A Ceph cluster started with the default settings has Ceph authentication enabled, so logging in to a pod that runs a Ceph component does not let you query the cluster status or run CLI commands. Deploy the Ceph toolbox for that purpose.

    # pwd
    /data/rook/rook/cluster/examples/kubernetes/ceph
    # kubectl create -f toolbox.yaml
    deployment.apps/rook-ceph-tools created

Test the toolbox:

    # kubectl exec -it rook-ceph-tools-5ccd9c8d5b-8bgkm -n rook-ceph -- bash
    # ceph status
      cluster:
        id:     908157b5-fbf4-408c-b32c-f1542b92a4aa
        health: HEALTH_WARN
                43 daemons have recently crashed

      services:
        mon: 1 daemons, quorum a (age 77m)
        mgr: a(active, since 49m)
        osd: 3 osds: 3 up (since 73m), 3 in (since 11h)

      data:
        pools:   1 pools, 1 pgs
        objects: 3 objects, 0 B
        usage:   3.0 GiB used, 21 GiB / 24 GiB avail
        pgs:     1 active+clean
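The toolbox pod name changes whenever the pod is recreated, so it can be convenient to address the Deployment instead of the pod. A sketch, assuming the toolbox Deployment is named `rook-ceph-tools` in the `rook-ceph` namespace as created above; the wrapper name `ceph_tools` is made up for illustration.

```shell
# Run a ceph CLI command inside the toolbox without looking up the pod name.
# kubectl resolves deploy/rook-ceph-tools to one of the Deployment's pods.
# ceph_tools is a hypothetical helper name.
ceph_tools() {
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph "$@"
}

# Usage (requires access to the cluster):
#   ceph_tools status
#   ceph_tools osd tree
```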

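5. Storage

- Block: block storage consumed by a Pod
- Object: an object store accessible from inside or outside the Kubernetes cluster
- Shared Filesystem: a filesystem shared among multiple Pods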

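5.1 Block storage

Before block storage can be provisioned, a StorageClass and a storage pool must be created. Kubernetes needs both resources to interact with Rook and allocate persistent volumes (PVs).

ceph-block-pool.yaml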

    apiVersion: ceph.rook.io/v1
    kind: CephBlockPool
    metadata:
      name: replicapool
      namespace: rook-ceph
    spec:
      failureDomain: host
      replicated:
        size: 1

Create the storage pool:

    # kubectl create -f ceph-block-pool.yaml
    # kubectl get cephblockpool -n rook-ceph
    NAME          AGE
    replicapool   65s

- cephblockpool: a custom resource kind defined by Rook

Create the StorageClass:

ceph-block-storageClass.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: rook-ceph-block
    # Change "rook-ceph" provisioner prefix to match the operator namespace if needed
    provisioner: rook-ceph.rbd.csi.ceph.com
    parameters:
      # clusterID is the namespace where the rook cluster is running
      clusterID: rook-ceph
      # Ceph pool into which the RBD image shall be created
      pool: replicapool

      # RBD image format. Defaults to "2".
      imageFormat: "2"

      # RBD image features. Available for imageFormat: "2". CSI RBD currently supports only `layering` feature.
      imageFeatures: layering

      # The secrets contain Ceph admin credentials.
      csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
      csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
      csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
      csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
      csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
      csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph

      # Specify the filesystem type of the volume. If not specified, csi-provisioner
      # will set default as `ext4`. Note that `xfs` is not recommended due to potential deadlock
      # in hyperconverged settings where the volume is mounted on the same node as the osds.
      csi.storage.k8s.io/fstype: ext4

    # Delete the rbd volume when a PVC is deleted
    reclaimPolicy: Delete

Create it:

    # kubectl create -f ceph-block-storageClass.yaml
    # kubectl get sc
    NAME              PROVISIONER                  RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    rook-ceph-block   rook-ceph.rbd.csi.ceph.com   Delete          Immediate           false                  2m21s
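Before wiring the StorageClass into a workload, a standalone PVC is a quick way to confirm that dynamic provisioning works. The sketch below only writes the manifest to a temporary file. The claim name `test-block-pvc` and the file path are made up for illustration, while `rook-ceph-block` is the StorageClass created above.

```shell
# Write a minimal PVC manifest that requests 1Gi from the rook-ceph-block StorageClass.
# The claim name and the file path are hypothetical.
manifest="${TMPDIR:-/tmp}/test-block-pvc.yaml"
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-block-pvc
  namespace: default
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: rook-ceph-block
EOF
echo "wrote $manifest"

# Then, against a real cluster:
#   kubectl create -f "$manifest"
#   kubectl get pvc test-block-pvc      # STATUS should become Bound
#   kubectl delete pvc test-block-pvc   # reclaimPolicy Delete also removes the RBD image
```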

5.2 Creating a StatefulSet

test-sts.yaml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      labels:
        app: test-block-sc
      name: test-block-sc
      namespace: default
    spec:
      replicas: 2
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app: test-block-sc
      serviceName: test-block-sc
      template:
        metadata:
          labels:
            app: test-block-sc
        spec:
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - podAffinityTerm:
                  labelSelector:
                    matchExpressions:
                    - key: app
                      operator: In
                      values:
                      - test-block-sc
                  topologyKey: kubernetes.io/hostname
                weight: 100
          containers:
          - env:
            - name: TZ
              value: Asia/Shanghai
            - name: LANG
              value: C.UTF-8
            image: nginx:1.15.2
            imagePullPolicy: Always
            lifecycle: {}
            livenessProbe:
              failureThreshold: 2
              httpGet:
                path: /
                port: 80
                scheme: HTTP
              initialDelaySeconds: 10
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 2
            name: test-block-sc
            ports:
            - containerPort: 80
              name: nginx-web
              protocol: TCP
            readinessProbe:
              failureThreshold: 2
              httpGet:
                path: /
                port: 80
                scheme: HTTP
              initialDelaySeconds: 10
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 2
            resources:
              limits:
                cpu: 100m
                memory: 100Mi
              requests:
                cpu: 10m
                memory: 10Mi
            tty: true
            volumeMounts:
            - mountPath: /usr/share/zoneinfo/Asia/Shanghai
              name: tz-config
            - mountPath: /etc/localtime
              name: tz-config
            - mountPath: /usr/share/nginx/html/items
              name: test-block-sts
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          terminationGracePeriodSeconds: 30
          volumes:
          - hostPath:
              path: /usr/share/zoneinfo/Asia/Shanghai
              type: ""
            name: tz-config
      updateStrategy:
        rollingUpdate:
          partition: 0
        type: RollingUpdate
      volumeClaimTemplates:
      - apiVersion: v1
        kind: PersistentVolumeClaim
        metadata:
          name: test-block-sts
        spec:
          accessModes:
          - ReadWriteOnce
          resources:
            limits:
              storage: 5Gi
            requests:
              storage: 1Gi
          storageClassName: rook-ceph-block
          volumeMode: Filesystem
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: test-block-sc
      name: test-block-sc
      namespace: default
    spec:
      clusterIP: None
      ports:
      - name: container-1-nginx-web-1
        port: 80
        protocol: TCP
        targetPort: 80
      selector:
        app: test-block-sc
      sessionAffinity: None
      type: ClusterIP

Check the PVCs and PVs:

    # kubectl get pvc
    NAME                             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
    test-block-sts-test-block-sc-0   Bound    pvc-cff04799-5735-4243-aef0-8ed6725521d1   1Gi        RWO            rook-ceph-block   48m
    test-block-sts-test-block-sc-1   Bound    pvc-28d34f2c-21ac-4f58-b4f1-3923bea1de91   1Gi        RWO            rook-ceph-block   45m

    # kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                                    STORAGECLASS      REASON   AGE
    pvc-28d34f2c-21ac-4f58-b4f1-3923bea1de91   1Gi        RWO            Delete           Bound    default/test-block-sts-test-block-sc-1   rook-ceph-block            45m
    pvc-cff04799-5735-4243-aef0-8ed6725521d1   1Gi        RWO            Delete           Bound    default/test-block-sts-test-block-sc-0   rook-ceph-block            48m

