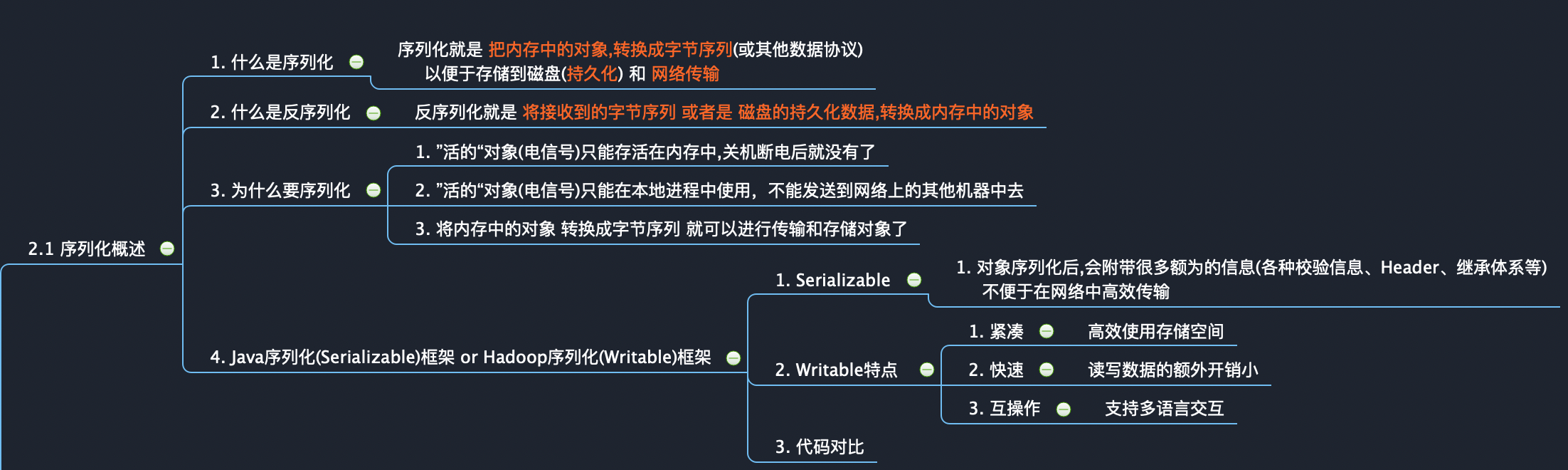

02_Hadoop Serialization_2.1 Serialization Overview

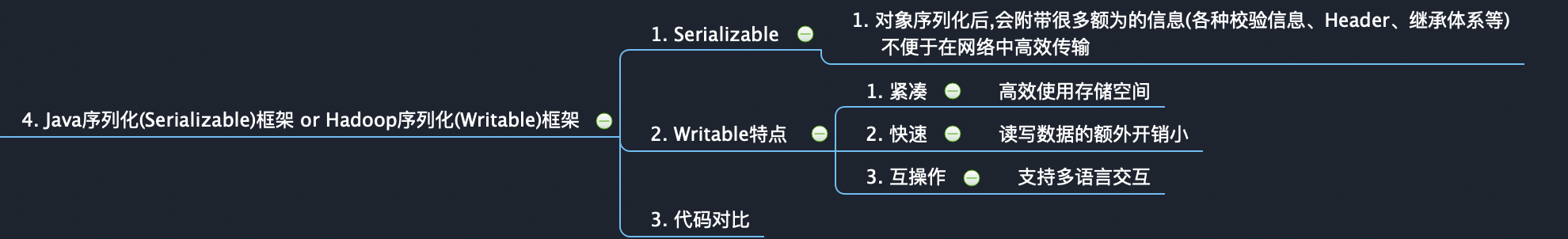

4. Java serialization (Serializable) framework vs. Hadoop serialization (Writable) framework

Code example

    package twoPk {

      import java.io._
      import java.util.Date

      import org.apache.hadoop.io.Writable

      // Compare Hadoop Writable with Java Serializable
      object WritableVsSerializable extends App {
        private val begin: Long = new Date().getTime

        // 1. Create the FileOutputStream
        val outPath = "src/main/data/output/Person.data"
        private val file = new File(outPath)
        private val fileOutputStream = new FileOutputStream(file)

        // 2. Create the object
        val person = new Person
        person.id = 1

        // 3. Serialize the object (enable one of the two calls)
        // WritableFun(person, fileOutputStream)   // elapsed: 1 ms, size: 143 bytes
        SerializableFun(person, fileOutputStream)  // elapsed: 18 ms, size: 235 bytes

        private val end: Long = new Date().getTime

        // 4. Print the result
        println(s"elapsed: ${end - begin} ms, size: ${file.length} bytes")

        // 1. Serialize the object with Hadoop Writable
        def WritableFun(per: Person, fopstream: FileOutputStream): Unit = {
          per.write(new DataOutputStream(fopstream))
          fopstream.close()
        }

        // 2. Serialize the object with Java Serializable
        def SerializableFun(per: Person, fopstream: FileOutputStream): Unit = {
          val objectOutputStream = new ObjectOutputStream(fopstream)
          objectOutputStream.writeObject(per)
          objectOutputStream.close()
        }
      }

      // Hadoop Writable framework (serialization)
      object WritableWriteTest extends App {
        val outPath = "src/main/data/output/Person.data"

        // 1. Create the DataOutputStream
        private val dataOutputStream = new DataOutputStream(new FileOutputStream(outPath))

        // 2. Create the object
        val person = new Person
        person.id = 1
        person.name = "小王"

        // 3. Persist it to disk
        person.write(dataOutputStream)

        // 4. Release the resource
        dataOutputStream.close()
      }

      // Hadoop Writable framework (deserialization)
      object WritableReadTest extends App {
        val outPath = "src/main/data/output/Person.data"

        // 1. Create the DataInputStream
        private val dataInputStream = new DataInputStream(new FileInputStream(outPath))

        // 2. Create an empty object to populate
        val person = new Person

        // 3. Read the fields back from disk
        person.readFields(dataInputStream)

        // 4. Release the resource
        dataInputStream.close()

        // 5. Print the object
        println(person)
      }

      // Java Serializable: serialize an object
      object ObjectOutputStreamTest extends App {
        // 1. Create the stream
        val outPath = "sparkcore/src/main/data/Person.data"
        private val outputStream = new ObjectOutputStream(new FileOutputStream(outPath))

        // 2. Serialize the object and persist it to disk
        outputStream.writeObject(new Person)

        // 3. Close the stream
        outputStream.close()
        println("Serialization complete")
      }

      // Java Serializable: deserialize an object
      object ObjectInputStreamTest extends App {
        // 1. Create the stream
        val outPath = "sparkcore/src/main/data/Person.data"
        private val inputStream = new ObjectInputStream(new FileInputStream(outPath))

        // 2. Read the bytes and deserialize the object into memory
        private val readObject = inputStream.readObject.asInstanceOf[Person]
        println(readObject)
        readObject.show

        // 3. Close the stream
        inputStream.close()
      }

      // The Person class supports both frameworks
      class Person extends Serializable with Writable {
        var id = 10
        var name = "谁谓河广?一苇杭之。谁谓宋远?跂予望之。\n谁谓河广?曾不容刀。谁谓宋远?曾不崇朝。"
        var sum = 19.8
        // Note: this is an ordinary instance field and is serialized as data.
        // To set the JVM serial version UID in Scala, annotate the class
        // with @SerialVersionUID(888L) instead.
        var serialVersionUID: Long = 888L

        def show = println("Person - show")

        override def toString = s"Person($id, $name, $sum)"

        // Writable: write the fields in a fixed order
        override def write(out: DataOutput): Unit = {
          out.writeInt(id)
          out.writeUTF(name)
          out.writeDouble(sum)
          out.writeLong(serialVersionUID)
        }

        // Writable: read the fields back in the same order they were written
        override def readFields(in: DataInput): Unit = {
          id = in.readInt
          name = in.readUTF
          sum = in.readDouble
          serialVersionUID = in.readLong
        }
      }
    }
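The size gap between the two frameworks can also be seen without touching the file system or adding the Hadoop dependency. The following is a minimal sketch under stated assumptions: `User` and `SizeComparison` are hypothetical names that do not appear in the code above, and `User` only mirrors the `write`/`readFields` contract of `Writable` instead of implementing the real interface. Both encodings are written to in-memory byte arrays so their lengths can be compared directly.

```scala
import java.io._

// Hypothetical stand-in for the Person class above. It mirrors the
// Writable contract (write/readFields) without depending on Hadoop.
class User(var id: Int, var name: String, var score: Double) extends java.io.Serializable {
  def this() = this(0, "", 0.0)

  // Writable-style: write only the raw field values, in a fixed order.
  def write(out: DataOutput): Unit = {
    out.writeInt(id)
    out.writeUTF(name)
    out.writeDouble(score)
  }

  // Fields must be read back in exactly the order they were written.
  def readFields(in: DataInput): Unit = {
    id = in.readInt()
    name = in.readUTF()
    score = in.readDouble()
  }

  override def toString: String = s"User($id, $name, $score)"
}

object SizeComparison {
  // Bytes produced by the Writable-style, field-by-field encoding.
  def writableBytes(u: User): Array[Byte] = {
    val buf = new ByteArrayOutputStream()
    val out = new DataOutputStream(buf)
    u.write(out)
    out.close()
    buf.toByteArray
  }

  // Bytes produced by Java's built-in object serialization, which also
  // stores a stream header and a description of the class.
  def javaBytes(u: User): Array[Byte] = {
    val buf = new ByteArrayOutputStream()
    val out = new ObjectOutputStream(buf)
    out.writeObject(u)
    out.close()
    buf.toByteArray
  }

  // Rebuild a User from the Writable-style bytes.
  def fromWritableBytes(bytes: Array[Byte]): User = {
    val u = new User()
    u.readFields(new DataInputStream(new ByteArrayInputStream(bytes)))
    u
  }

  def main(args: Array[String]): Unit = {
    val user = new User(1, "Wang", 19.8)
    val w = writableBytes(user)
    val j = javaBytes(user)
    println(s"Writable-style: ${w.length} bytes, Java Serializable: ${j.length} bytes")
    println(fromWritableBytes(w))
  }
}
```

For this `User`, the Writable-style encoding is 18 bytes: 4 for the `Int`, 2 + 4 for the length-prefixed string `"Wang"`, and 8 for the `Double`. The Java serialization output is larger because it carries class metadata alongside the field values, which is consistent with the sizes noted in the comparison above.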

