Kubernetes Part 2: Deploying a k8s Cluster from Binaries

Deploying a k8s cluster from binaries

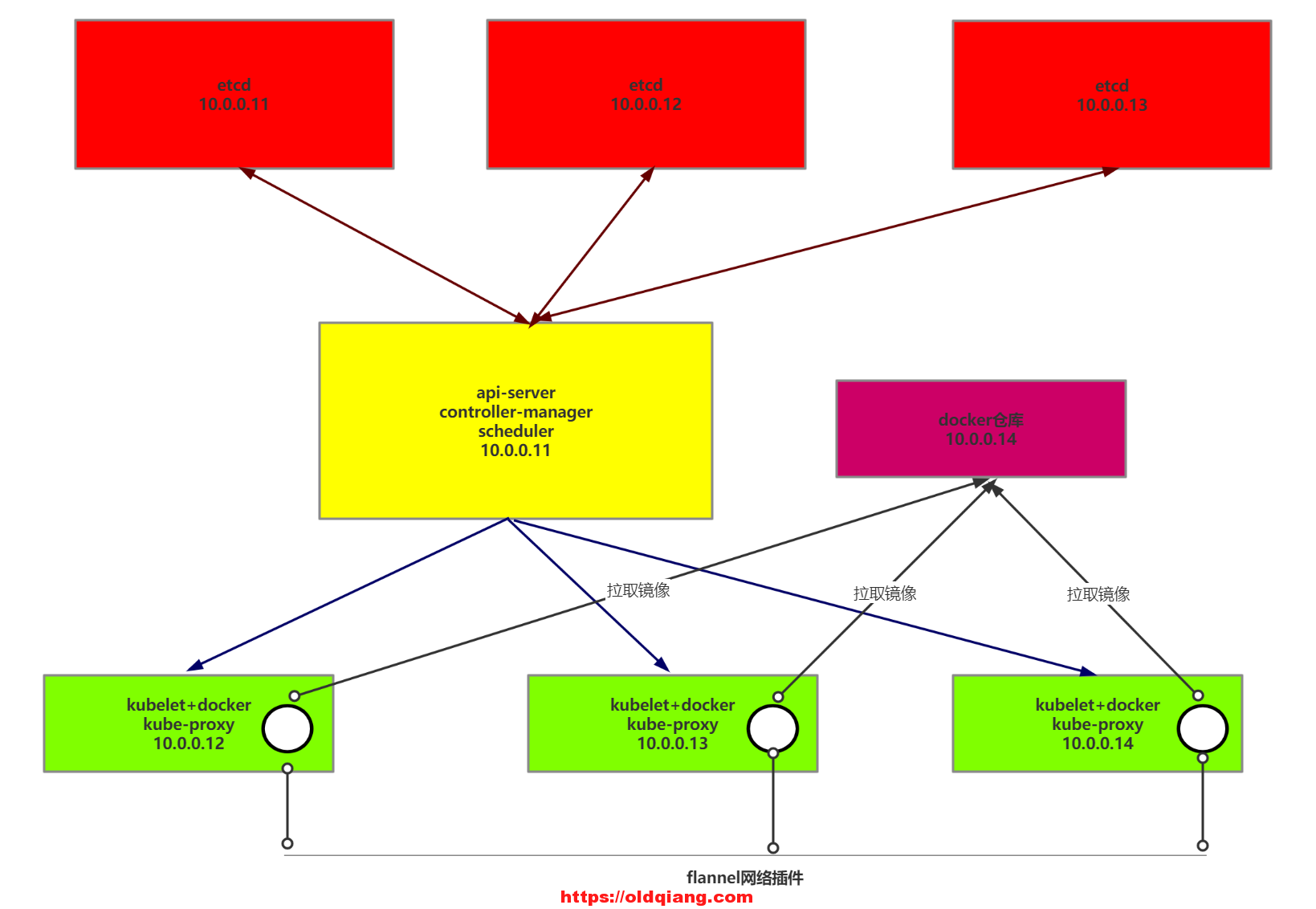

Cluster architecture

| Service | Port |
|---|---|
| etcd | 127.0.0.1:2379,2380 |
| kubelet | 10250,10255 |
| kube-proxy | 10256 |
| kube-apiserver | 6443,127.0.0.1:8080 |
| kube-scheduler | 10251,10259 |
| kube-controller-manager | 10252,10257 |

Environment preparation

| Host | IP | Memory | Software |
|---|---|---|---|
| k8s-master | 10.0.0.11 | 1G | etcd,api-server,controller-manager,scheduler |
| k8s-node1 | 10.0.0.12 | 2G | etcd,kubelet,kube-proxy,docker,flannel |
| k8s-node2 | 10.0.0.13 | 2G | etcd,kubelet,kube-proxy,docker,flannel |
| k8s-node3 | 10.0.0.14 | 2G | kubelet,kube-proxy,docker,flannel |

- Disable SELinux, firewalld, NetworkManager and postfix (optional)
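A minimal sketch of the disable step, assuming CentOS 7 defaults: the persistent SELinux mode lives in /etc/selinux/config, and setenforce and systemctl are available. Both function names are hypothetical; the config path is a parameter so the edit can be tried on a copy first.

```shell
# Hypothetical helper: persistently disable SELinux by rewriting the
# SELINUX= line of the given config file (default: /etc/selinux/config).
# SELINUXTYPE= is left alone because the pattern requires "=" right after SELINUX.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Hypothetical helper: switch the running system to permissive mode and
# stop/disable the services listed above (postfix is optional). Run as root.
disable_services() {
  setenforce 0 || true    # fails harmlessly if SELinux is already disabled
  systemctl stop firewalld NetworkManager postfix
  systemctl disable firewalld NetworkManager postfix
}

# Usage on every host:
#   disable_selinux_config && disable_services
```

The config change only takes full effect after a reboot; setenforce 0 covers the current boot.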

- Change the IP address and hostname

hostnamectl set-hostname <hostname>

sed -i 's/200/IP/g' /etc/sysconfig/network-scripts/ifcfg-eth0

- Add hosts entries

cat > /etc/hosts <<EOF

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.11 k8s-master

10.0.0.12 k8s-node1

10.0.0.13 k8s-node2

10.0.0.14 k8s-node3

EOF
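The heredoc above overwrites /etc/hosts completely. If a host already has entries worth keeping, a hedged alternative is to append only the lines that are missing; the helper below is a hypothetical sketch with the hosts file path as a parameter, so running it twice does not create duplicates.

```shell
# Hypothetical helper: append "IP hostname" lines to a hosts file only if
# that exact line is not already present (safe to run more than once).
add_hosts_entries() {
  local hosts_file="$1"; shift
  local entry
  for entry in "$@"; do
    grep -qxF "$entry" "$hosts_file" || echo "$entry" >> "$hosts_file"
  done
}

# Usage:
#   add_hosts_entries /etc/hosts "10.0.0.11 k8s-master" "10.0.0.12 k8s-node1" \
#     "10.0.0.13 k8s-node2" "10.0.0.14 k8s-node3"
```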

- Create the k8s configuration directory

mkdir /etc/kubernetes

- On k8s-node3, set up SSH key-based (passwordless) login to all nodes

ssh-keygen

ssh-copy-id k8s-master

ssh-copy-id k8s-node1

ssh-copy-id k8s-node2

ssh-copy-id k8s-node3

Note: if SSH does not listen on the default port 22, set the port in the SSH client config (12345 is an example):

cat > ~/.ssh/config <<EOF
Port 12345
EOF

Issuing HTTPS certificates

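Certificates can be divided into three types according to what they authenticate:

- Server certificate (server cert): used by the server; clients use it to verify the server's identity, e.g. the docker daemon or kube-apiserver.

- Client certificate (client cert): used by the server to authenticate clients, e.g. etcdctl, etcd proxy, fleetctl or the docker client.

- Peer certificate (peer cert, both a server cert and a client cert): a two-way certificate used for communication between etcd cluster members.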

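The Kubernetes cluster needs the following certificates:

An etcd node needs a server cert that identifies its own service, and also a client cert to talk to the other etcd members, so it uses a peer cert. The master node needs a server cert for the apiserver and a client cert for connecting to the etcd cluster; two separate certificates are specified here. kubectl, calico and kube-proxy only need client certs, so the hosts field in their certificate requests can be empty. The kubelet certificate is special: normally it is not generated by hand. The node requests it from the apiserver through TLS bootstrapping, and the controller-manager on the master signs it automatically; it consists of a client cert and a server cert.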

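Certificates used in this architecture (see the reference document):

- One set of peer certificates (etcd-peer): etcd<-->etcd<-->etcd

- Client certificate (client): api-server-->etcd and flanneld-->etcd

- Server certificate (apiserver): -->api-server

- Server certificate (kubelet): api-server-->kubelet

- Client certificate (kube-proxy-client): kube-proxy-->api-server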

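Connections that do not use certificates:

- Local etcd access: if certificates were used, every access to etcd would have to specify them, so for convenience etcd also listens on http://127.0.0.1:2379 and local access uses no certificate.

- controller-manager-->api-server (over the insecure local port 127.0.0.1:8080)

- scheduler-->api-server (over the insecure local port 127.0.0.1:8080)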

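On k8s-node3, use the CFSSL tool to create the CA certificate, the server certificates and the client certificates.

CFSSL is CloudFlare's open-source PKI/TLS toolkit. It includes a command-line tool and an HTTP API server for signing, verifying and bundling TLS certificates, and it is written in Go.

GitHub: https://github.com/cloudflare/cfssl

Downloads: https://pkg.cfssl.org/

Reference: http://blog.51cto.com/liuzhengwei521/2120535?utm_source=oschina-app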

- Install the CFSSL certificate tools

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssl-certinfo /usr/local/bin/cfssljson

- Create the CA signing configuration file

mkdir /opt/certs && cd /opt/certs

cat > /opt/certs/ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"server": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth"

]

},

"client": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"client auth"

]

},

"peer": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF
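Every profile above uses an expiry of 175200h. The shell arithmetic below only converts hours to years (ignoring leap days) to show that this means 20 years; the function name is illustrative.

```shell
# Illustrative helper: convert a cfssl expiry given in hours (e.g. "175200h")
# into whole years, ignoring leap days.
hours_to_years() {
  local hours="${1%h}"          # strip the trailing "h" used by cfssl
  echo $(( hours / 24 / 365 ))  # 175200 / 24 = 7300 days; 7300 / 365 = 20
}

hours_to_years 175200h   # prints 20
```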

- Create the CA certificate signing request (CSR) file

cat > /opt/certs/ca-csr.json <<EOF

{

"CN": "kubernetes-ca",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

],

"ca": {

"expiry": "175200h"

}

}

EOF

- Generate the CA certificate and private key

[root@k8s-node3 certs]# cfssl gencert -initca ca-csr.json|cfssljson -bare ca -

2020/12/14 09:59:31 [INFO] generating a new CA key and certificate from CSR

2020/12/14 09:59:31 [INFO] generate received request

2020/12/14 09:59:31 [INFO] received CSR

2020/12/14 09:59:31 [INFO] generating key: rsa-2048

2020/12/14 09:59:31 [INFO] encoded CSR

2020/12/14 09:59:31 [INFO] signed certificate with serial number 541033833394022225124150924404905984331621873569

[root@k8s-node3 certs]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem
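To double-check what was generated, the certificate can be inspected. cfssl-certinfo -cert ca.pem (installed above) prints it as JSON; the sketch below instead assumes openssl is installed and wraps its standard x509 subcommand in a hypothetical helper.

```shell
# Hypothetical helper: print the subject, issuer and expiry date (notAfter=...)
# of a PEM-encoded certificate.
show_cert() {
  openssl x509 -in "$1" -noout -subject -issuer -enddate
}

# Usage on k8s-node3:
#   show_cert /opt/certs/ca.pem
# For a self-signed CA such as ca.pem, subject and issuer should be identical.
```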

Deploying the etcd cluster

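| Hostname | IP | Role |
|---|---|---|
| k8s-master | 10.0.0.11 | etcd member (node1) |
| k8s-node1 | 10.0.0.12 | etcd member (node2) |
| k8s-node2 | 10.0.0.13 | etcd member (node3) |

The leader is not assigned statically: the members elect one through Raft, and in the member list output further below it happens to be node3.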

- On k8s-node3, issue the certificate used for communication between etcd nodes

cat > /opt/certs/etcd-peer-csr.json <<EOF

{

"CN": "etcd-peer",

"hosts": [

"10.0.0.11",

"10.0.0.12",

"10.0.0.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

EOF

[root@k8s-node3 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssljson -bare etcd-peer

2020/12/14 10:05:22 [INFO] generate received request

2020/12/14 10:05:22 [INFO] received CSR

2020/12/14 10:05:22 [INFO] generating key: rsa-2048

2020/12/14 10:05:23 [INFO] encoded CSR

2020/12/14 10:05:23 [INFO] signed certificate with serial number 300469497136552423377618640775350926134698270185

2020/12/14 10:05:23 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-node3 certs]# ls etcd-peer*

etcd-peer.csr etcd-peer-csr.json etcd-peer-key.pem etcd-peer.pem

- Install etcd on k8s-master, k8s-node1 and k8s-node2

yum -y install etcd

- From k8s-node3, copy the certificates to /etc/etcd on k8s-master, k8s-node1 and k8s-node2

cd /opt/certs

scp -rp *.pem root@10.0.0.11:/etc/etcd/

scp -rp *.pem root@10.0.0.12:/etc/etcd/

scp -rp *.pem root@10.0.0.13:/etc/etcd/

- On k8s-master, k8s-node1 and k8s-node2, change the owner and group of the certificates to etcd

chown -R etcd:etcd /etc/etcd/*.pem

- Configure etcd on k8s-master

cat > /etc/etcd/etcd.conf <<EOF

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="https://10.0.0.11:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.0.0.11:2379,http://127.0.0.1:2379"

ETCD_NAME="node1"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.0.0.11:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.0.0.11:2379,http://127.0.0.1:2379"

ETCD_INITIAL_CLUSTER="node1=https://10.0.0.11:2380,node2=https://10.0.0.12:2380,node3=https://10.0.0.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_CERT_FILE="/etc/etcd/etcd-peer.pem"

ETCD_KEY_FILE="/etc/etcd/etcd-peer-key.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ca.pem"

ETCD_AUTO_TLS="true"

ETCD_PEER_CERT_FILE="/etc/etcd/etcd-peer.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/etcd-peer-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ca.pem"

ETCD_PEER_AUTO_TLS="true"

EOF

- Configure etcd on k8s-node1

cat > /etc/etcd/etcd.conf <<EOF

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="https://10.0.0.12:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.0.0.12:2379,http://127.0.0.1:2379"

ETCD_NAME="node2"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.0.0.12:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.0.0.12:2379,http://127.0.0.1:2379"

ETCD_INITIAL_CLUSTER="node1=https://10.0.0.11:2380,node2=https://10.0.0.12:2380,node3=https://10.0.0.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_CERT_FILE="/etc/etcd/etcd-peer.pem"

ETCD_KEY_FILE="/etc/etcd/etcd-peer-key.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ca.pem"

ETCD_AUTO_TLS="true"

ETCD_PEER_CERT_FILE="/etc/etcd/etcd-peer.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/etcd-peer-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ca.pem"

ETCD_PEER_AUTO_TLS="true"

EOF

- Configure etcd on k8s-node2

cat > /etc/etcd/etcd.conf <<EOF

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="https://10.0.0.13:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.0.0.13:2379,http://127.0.0.1:2379"

ETCD_NAME="node3"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.0.0.13:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.0.0.13:2379,http://127.0.0.1:2379"

ETCD_INITIAL_CLUSTER="node1=https://10.0.0.11:2380,node2=https://10.0.0.12:2380,node3=https://10.0.0.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_CERT_FILE="/etc/etcd/etcd-peer.pem"

ETCD_KEY_FILE="/etc/etcd/etcd-peer-key.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ca.pem"

ETCD_AUTO_TLS="true"

ETCD_PEER_CERT_FILE="/etc/etcd/etcd-peer.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/etcd-peer-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ca.pem"

ETCD_PEER_AUTO_TLS="true"

EOF
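The three etcd.conf files differ only in the member name and the node IP. A hedged sketch of generating them from one template; the function name and the optional output-path argument are hypothetical, and every value mirrors the files above.

```shell
# Hypothetical helper: write etcd.conf for one member.
#   $1 = member name (node1/node2/node3)  $2 = this node's IP  $3 = output file
gen_etcd_conf() {
  local name="$1" ip="$2" out="${3:-/etc/etcd/etcd.conf}"
  local cluster="node1=https://10.0.0.11:2380,node2=https://10.0.0.12:2380,node3=https://10.0.0.13:2380"
  cat > "$out" <<EOF
ETCD_DATA_DIR="/var/lib/etcd/"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,http://127.0.0.1:2379"
ETCD_NAME="${name}"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379,http://127.0.0.1:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_CERT_FILE="/etc/etcd/etcd-peer.pem"
ETCD_KEY_FILE="/etc/etcd/etcd-peer-key.pem"
ETCD_CLIENT_CERT_AUTH="true"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ca.pem"
ETCD_AUTO_TLS="true"
ETCD_PEER_CERT_FILE="/etc/etcd/etcd-peer.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/etcd-peer-key.pem"
ETCD_PEER_CLIENT_CERT_AUTH="true"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ca.pem"
ETCD_PEER_AUTO_TLS="true"
EOF
}

# Usage, e.g. on k8s-node2 (10.0.0.13):
#   gen_etcd_conf node3 10.0.0.13
```

Keeping one template avoids copy-paste slips such as a heading or IP that does not match the node being configured.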

- Start etcd on k8s-master, k8s-node1 and k8s-node2 at the same time, and enable it at boot

systemctl start etcd

systemctl enable etcd

- Verify the etcd cluster on k8s-master

[root@k8s-master ~]# etcdctl member list

55fcbe0adaa45350: name=node3 peerURLs=https://10.0.0.13:2380 clientURLs=http://127.0.0.1:2379,https://10.0.0.13:2379 isLeader=true

cebdf10928a06f3c: name=node1 peerURLs=https://10.0.0.11:2380 clientURLs=http://127.0.0.1:2379,https://10.0.0.11:2379 isLeader=false

f7a9c20602b8532e: name=node2 peerURLs=https://10.0.0.12:2380 clientURLs=http://127.0.0.1:2379,https://10.0.0.12:2379 isLeader=false
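With three members, exactly one should report isLeader=true. A small, hypothetical parser for the etcdctl (v2 API) member list output shown above:

```shell
# Hypothetical helper: read `etcdctl member list` output on stdin and print
# the name of every member that reports isLeader=true.
etcd_leaders() {
  grep 'isLeader=true' | sed -n 's/.* name=\([^ ]*\) .*/\1/p'
}

# Usage on k8s-master:
#   etcdctl member list | etcd_leaders       # expected: exactly one name
#   [ "$(etcdctl member list | etcd_leaders | wc -l)" -eq 1 ] && echo "one leader"
```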

Installing the master node

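- On k8s-node3, download and extract the binary package, then push the binaries the master node needs to k8s-master

This architecture uses the v1.15.4 kubernetes-server binary package.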

mkdir /opt/softs && cd /opt/softs

# v1.16.1 is also available at https://storage.googleapis.com/kubernetes-release/release/v1.16.1/kubernetes-server-linux-amd64.tar.gz
wget -O kubernetes-server-linux-amd64-v1.15.4.tar.gz https://storage.googleapis.com/kubernetes-release/release/v1.15.4/kubernetes-server-linux-amd64.tar.gz

tar xf kubernetes-server-linux-amd64-v1.15.4.tar.gz

cd /opt/softs/kubernetes/server/bin/

scp -rp kube-apiserver kube-controller-manager kube-scheduler kubectl root@10.0.0.11:/usr/sbin/

- On k8s-node3, issue the client certificate

cd /opt/certs/

cat > /opt/certs/client-csr.json <<EOF

{

"CN": "k8s-node",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

EOF

[root@k8s-node3 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json|cfssljson -bare client

2020/12/14 11:24:13 [INFO] generate received request

2020/12/14 11:24:13 [INFO] received CSR

2020/12/14 11:24:13 [INFO] generating key: rsa-2048

2020/12/14 11:24:13 [INFO] encoded CSR

2020/12/14 11:24:13 [INFO] signed certificate with serial number 558115824565037436109754375250535796590542635717

2020/12/14 11:24:13 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-node3 certs]# ls client*

client.csr client-csr.json client-key.pem client.pem

- On k8s-node3, issue the kube-apiserver certificate

cat > /opt/certs/apiserver-csr.json <<EOF

{

"CN": "apiserver",

"hosts": [

"127.0.0.1",

"10.254.0.1",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"10.0.0.11",

"10.0.0.12",

"10.0.0.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

EOF

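Note: when a pod is created, the first IP of the Service clusterIP range (10.254.0.1) is injected through environment variables as the internal address the pod uses to reach the api-server, which enables automatic discovery. That is why this IP must be in the certificate's hosts list.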

[root@k8s-node3 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json | cfssljson -bare apiserver

2020/12/14 11:31:42 [INFO] generate received request

2020/12/14 11:31:42 [INFO] received CSR

2020/12/14 11:31:42 [INFO] generating key: rsa-2048

2020/12/14 11:31:42 [INFO] encoded CSR

2020/12/14 11:31:42 [INFO] signed certificate with serial number 418646719184970675117735868438071556604394393673

2020/12/14 11:31:42 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-node3 certs]# ls apiserver*

apiserver.csr apiserver-csr.json apiserver-key.pem apiserver.pem
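The 10.254.0.1 in the hosts list is simply the first host address of --service-cluster-ip-range 10.254.0.0/16 (configured for kube-apiserver below). A hedged helper that derives it, so the CSR stays consistent if the range is ever changed; it assumes an IPv4 CIDR, and the function name is hypothetical.

```shell
# Hypothetical helper: print the first host address (network address + 1)
# of an IPv4 CIDR, e.g. 10.254.0.0/16 -> 10.254.0.1.
first_service_ip() {
  local ip="${1%/*}" prefix="${1#*/}"
  local a b c d
  IFS=. read -r a b c d <<< "$ip"
  local n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))   # network address, then the first host after it
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

first_service_ip 10.254.0.0/16   # prints 10.254.0.1
```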

- Push the certificates from k8s-node3 to k8s-master

scp -rp ca*pem apiserver*pem client*pem root@10.0.0.11:/etc/kubernetes

Installing the api-server service

- Check the certificates on the master node

[root@k8s-master kubernetes]# ls /etc/kubernetes

apiserver-key.pem apiserver.pem ca-key.pem ca.pem client-key.pem client.pem

- Configure the api-server audit log policy on the master node

cat > /etc/kubernetes/audit.yaml <<EOF
apiVersion: audit.k8s.io/v1beta1 # This is required.
kind: Policy
# Don't generate audit events for all requests in RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Log pod changes at RequestResponse level
  - level: RequestResponse
    resources:
    - group: ""
      # Resource "pods" doesn't match requests to any subresource of pods,
      # which is consistent with the RBAC policy.
      resources: ["pods"]
  # Log "pods/log", "pods/status" at Metadata level
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]
  # Don't log requests to a configmap called "controller-leader"
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]
  # Don't log watch requests by the "system:kube-proxy" on endpoints or services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: "" # core API group
      resources: ["endpoints", "services"]
  # Don't log authenticated requests to certain non-resource URL paths.
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*" # Wildcard matching.
    - "/version"
  # Log the request body of configmap changes in kube-system.
  - level: Request
    resources:
    - group: "" # core API group
      resources: ["configmaps"]
    # This rule only applies to resources in the "kube-system" namespace.
    # The empty string "" can be used to select non-namespaced resources.
    namespaces: ["kube-system"]
  # Log configmap and secret changes in all other namespaces at the Metadata level.
  - level: Metadata
    resources:
    - group: "" # core API group
      resources: ["secrets", "configmaps"]
  # Log all other resources in core and extensions at the Request level.
  - level: Request
    resources:
    - group: "" # core API group
    - group: "extensions" # Version of group should NOT be included.
  # A catch-all rule to log all other requests at the Metadata level.
  - level: Metadata
    # Long-running requests like watches that fall under this rule will not
    # generate an audit event in RequestReceived.
    omitStages:
      - "RequestReceived"
EOF

- Configure kube-apiserver.service on the master node

cat > /usr/lib/systemd/system/kube-apiserver.service <<EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=etcd.service

[Service]
ExecStart=/usr/sbin/kube-apiserver \\
  --audit-log-path /var/log/kubernetes/audit-log \\
  --audit-policy-file /etc/kubernetes/audit.yaml \\
  --authorization-mode RBAC \\
  --client-ca-file /etc/kubernetes/ca.pem \\
  --requestheader-client-ca-file /etc/kubernetes/ca.pem \\
  --enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \\
  --etcd-cafile /etc/kubernetes/ca.pem \\
  --etcd-certfile /etc/kubernetes/client.pem \\
  --etcd-keyfile /etc/kubernetes/client-key.pem \\
  --etcd-servers https://10.0.0.11:2379,https://10.0.0.12:2379,https://10.0.0.13:2379 \\
  --service-account-key-file /etc/kubernetes/ca-key.pem \\
  --service-cluster-ip-range 10.254.0.0/16 \\
  --service-node-port-range 30000-59999 \\
  --kubelet-client-certificate /etc/kubernetes/client.pem \\
  --kubelet-client-key /etc/kubernetes/client-key.pem \\
  --log-dir /var/log/kubernetes/ \\
  --logtostderr=false \\
  --tls-cert-file /etc/kubernetes/apiserver.pem \\
  --tls-private-key-file /etc/kubernetes/apiserver-key.pem \\
  --v 2
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

To keep things simple, the apiserver uses the same client cert both for talking to etcd and for talking to the kubelet. Meaning of the options:

- --audit-log-path /var/log/kubernetes/audit-log: audit log path
- --audit-policy-file /etc/kubernetes/audit.yaml: audit policy file
- --authorization-mode RBAC: authorization mode RBAC
- --client-ca-file /etc/kubernetes/ca.pem: CA certificate used to verify client certificates
- --requestheader-client-ca-file /etc/kubernetes/ca.pem: CA certificate used to verify request-header client certificates
- --enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota: admission plugins to enable
- --etcd-cafile /etc/kubernetes/ca.pem: CA certificate for etcd communication
- --etcd-certfile /etc/kubernetes/client.pem: client certificate for etcd communication
- --etcd-keyfile /etc/kubernetes/client-key.pem: client private key for etcd communication
- --etcd-servers https://10.0.0.11:2379,https://10.0.0.12:2379,https://10.0.0.13:2379: etcd endpoints
- --service-account-key-file /etc/kubernetes/ca-key.pem: CA private key, used here to verify service account tokens
- --service-cluster-ip-range 10.254.0.0/16: Service ClusterIP (VIP) range
- --service-node-port-range 30000-59999: NodePort range
- --kubelet-client-certificate /etc/kubernetes/client.pem: client certificate for kubelet communication
- --kubelet-client-key /etc/kubernetes/client-key.pem: client private key for kubelet communication
- --log-dir /var/log/kubernetes/: log directory
- --logtostderr=false: do not log to stderr, so logs are written to files
- --tls-cert-file /etc/kubernetes/apiserver.pem: api-server certificate
- --tls-private-key-file /etc/kubernetes/apiserver-key.pem: api-server private key
- --v 2: log level 2
- Restart=on-failure: restart the service when it fails

- On the master node, create the log directory, then start kube-apiserver and enable it at boot

mkdir /var/log/kubernetes

systemctl daemon-reload

systemctl start kube-apiserver.service

systemctl enable kube-apiserver.service

- Verify on the master node

[root@k8s-master kubernetes]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused

controller-manager Unhealthy Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

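etcd-0 Healthy {"health":"true"}

At this point scheduler and controller-manager report Unhealthy simply because they have not been installed yet.

Installing the controller-manager service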

- Configure kube-controller-manager.service on the master node

cat > /usr/lib/systemd/system/kube-controller-manager.service <<EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=kube-apiserver.service

[Service]
ExecStart=/usr/sbin/kube-controller-manager \\
  --cluster-cidr 172.18.0.0/16 \\
  --log-dir /var/log/kubernetes/ \\
  --master http://127.0.0.1:8080 \\
  --service-account-private-key-file /etc/kubernetes/ca-key.pem \\
  --service-cluster-ip-range 10.254.0.0/16 \\
  --root-ca-file /etc/kubernetes/ca.pem \\
  --logtostderr=false \\
  --v 2
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

- On the master node, start controller-manager and enable it at boot

systemctl daemon-reload

systemctl enable kube-controller-manager.service

systemctl start kube-controller-manager.service

Installing the scheduler service

- Configure kube-scheduler.service on the master node

cat > /usr/lib/systemd/system/kube-scheduler.service <<EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=kube-apiserver.service

[Service]
ExecStart=/usr/sbin/kube-scheduler \\
  --log-dir /var/log/kubernetes/ \\
  --master http://127.0.0.1:8080 \\
  --logtostderr=false \\
  --v 2
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

- On the master node, start the scheduler and enable it at boot

systemctl daemon-reload

systemctl enable kube-scheduler.service

systemctl start kube-scheduler.service

- Verify on the master node

[root@k8s-master kubernetes]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

Installing the worker nodes

Installing the kubelet service

- On k8s-node3, issue the kubelet certificate

cd /opt/certs/

cat > kubelet-csr.json <<EOF

{

"CN": "kubelet-node",

"hosts": [

"127.0.0.1",

"10.0.0.11",

"10.0.0.12",

"10.0.0.13",

"10.0.0.14",

"10.0.0.15"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

EOF

[root@k8s-node3 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssljson -bare kubelet

2020/12/14 14:55:00 [INFO] generate received request

2020/12/14 14:55:00 [INFO] received CSR

2020/12/14 14:55:00 [INFO] generating key: rsa-2048

2020/12/14 14:55:00 [INFO] encoded CSR

2020/12/14 14:55:00 [INFO] signed certificate with serial number 110678673830256746819664644693971611232380342377

2020/12/14 14:55:00 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-node3 certs]# ls kubelet*

kubelet.csr kubelet-csr.json kubelet-key.pem kubelet.pem

- **On k8s-node3, generate the kubelet client credential file kubelet.kubeconfig**

ln -s /opt/softs/kubernetes/server/bin/kubectl /usr/sbin/

# Set the cluster parameters
kubectl config set-cluster myk8s \
  --certificate-authority=/opt/certs/ca.pem \
  --embed-certs=true \
  --server=https://10.0.0.11:6443 \
  --kubeconfig=kubelet.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials k8s-node --client-certificate=/opt/certs/client.pem --client-key=/opt/certs/client-key.pem --embed-certs=true --kubeconfig=kubelet.kubeconfig

# Set the context parameters
kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=k8s-node \
  --kubeconfig=kubelet.kubeconfig

# Switch to this context
kubectl config use-context myk8s-context --kubeconfig=kubelet.kubeconfig

# cat kubelet.kubeconfig
apiVersion: v1
clusters:
- cluster:                              # cluster
    certificate-authority-data: ... ... # CA certificate
    server: https://10.0.0.11:6443      # apiserver address
  name: myk8s                           # cluster name
contexts:
- context:                              # context
    cluster: myk8s
    user: k8s-node
  name: myk8s-context
current-context: myk8s-context          # current context
kind: Config
preferences: {}
users:
- name: k8s-node                        # user name
  user:
    client-certificate-data: ... ...    # client certificate
    client-key-data: ... ...            # client private key

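- On the master node, create the RBAC ClusterRoleBinding that grants the k8s-node user the system:node role (only needs to be created once)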

cat > k8s-node.yaml <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node
EOF

kubectl create -f k8s-node.yaml

- On the worker nodes, install docker-ce, start it and enable it at boot, then configure a registry mirror and use systemd as the cgroup driver

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/centos/docker-ce.repo

yum install docker-ce -y

systemctl enable docker

systemctl start docker

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://registry.docker-cn.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

systemctl restart docker.service

docker info

- From k8s-node3, push the kubelet binary, the client credential file and the required certificates to the worker nodes

cd /opt/certs/

scp -rp kubelet.kubeconfig ca*pem kubelet*pem root@10.0.0.12:/etc/kubernetes

scp -rp /opt/softs/kubernetes/server/bin/kubelet root@10.0.0.12:/usr/bin/

scp -rp kubelet.kubeconfig ca*pem kubelet*pem root@10.0.0.13:/etc/kubernetes

scp -rp /opt/softs/kubernetes/server/bin/kubelet root@10.0.0.13:/usr/bin/

- On each worker node, configure kubelet.service, start it and enable it at boot

mkdir /var/log/kubernetes

cat > /usr/lib/systemd/system/kubelet.service <<EOF
[Unit]
Description=Kubernetes Kubelet
After=docker.service multi-user.target
Requires=docker.service

[Service]
ExecStart=/usr/bin/kubelet \\
  --anonymous-auth=false \\
  --cgroup-driver systemd \\
  --cluster-dns 10.254.230.254 \\
  --cluster-domain cluster.local \\
  --runtime-cgroups=/systemd/system.slice \\
  --kubelet-cgroups=/systemd/system.slice \\
  --fail-swap-on=false \\
  --client-ca-file /etc/kubernetes/ca.pem \\
  --tls-cert-file /etc/kubernetes/kubelet.pem \\
  --tls-private-key-file /etc/kubernetes/kubelet-key.pem \\
  --hostname-override 10.0.0.12 \\
  --image-gc-high-threshold 90 \\
  --image-gc-low-threshold 70 \\
  --kubeconfig /etc/kubernetes/kubelet.kubeconfig \\
  --log-dir /var/log/kubernetes/ \\
  --pod-infra-container-image t29617342/pause-amd64:3.0 \\
  --logtostderr=false \\
  --v=2
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload

systemctl enable kubelet.service

systemctl start kubelet.service

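Requires=docker.service: kubelet depends on docker. Meaning of the ExecStart options:

- --anonymous-auth=false: disable anonymous authentication
- --cgroup-driver systemd: use systemd as the cgroup driver, matching docker's native.cgroupdriver=systemd
- --cluster-dns 10.254.230.254: cluster DNS address
- --cluster-domain cluster.local: DNS domain; must match the one configured in the DNS service resources
- --runtime-cgroups / --kubelet-cgroups=/systemd/system.slice: cgroups for the container runtime and for kubelet
- --fail-swap-on=false: do not refuse to start when swap is enabled
- --client-ca-file /etc/kubernetes/ca.pem: CA certificate
- --tls-cert-file /etc/kubernetes/kubelet.pem: kubelet certificate
- --tls-private-key-file /etc/kubernetes/kubelet-key.pem: kubelet private key
- --hostname-override 10.0.0.12: kubelet node name; different on each node (e.g. 10.0.0.13 on k8s-node2)
- --image-gc-high-threshold 90: when disk usage exceeds 90%, image garbage collection always runs
- --image-gc-low-threshold 70: when disk usage is below 70%, image garbage collection never runs
- --kubeconfig /etc/kubernetes/kubelet.kubeconfig: client credential file
- --pod-infra-container-image t29617342/pause-amd64:3.0: pod infrastructure (pause) container image

Note: the pause image used here is a public image published by the user t29617342 on the official registry (Docker Hub)!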
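Only --hostname-override changes from node to node, so the unit file can be produced by a small generator instead of being edited by hand on each node. A hedged sketch: the function name and the optional output-path argument are hypothetical; the flags are the ones from the kubelet.service unit above, including its 90/70 image GC thresholds.

```shell
# Hypothetical helper: write kubelet.service for the node whose IP is $1.
#   $1 = node IP used as --hostname-override   $2 = output file
gen_kubelet_unit() {
  local node_ip="$1" out="${2:-/usr/lib/systemd/system/kubelet.service}"
  cat > "$out" <<EOF
[Unit]
Description=Kubernetes Kubelet
After=docker.service multi-user.target
Requires=docker.service

[Service]
ExecStart=/usr/bin/kubelet \\
  --anonymous-auth=false \\
  --cgroup-driver systemd \\
  --cluster-dns 10.254.230.254 \\
  --cluster-domain cluster.local \\
  --runtime-cgroups=/systemd/system.slice \\
  --kubelet-cgroups=/systemd/system.slice \\
  --fail-swap-on=false \\
  --client-ca-file /etc/kubernetes/ca.pem \\
  --tls-cert-file /etc/kubernetes/kubelet.pem \\
  --tls-private-key-file /etc/kubernetes/kubelet-key.pem \\
  --hostname-override ${node_ip} \\
  --image-gc-high-threshold 90 \\
  --image-gc-low-threshold 70 \\
  --kubeconfig /etc/kubernetes/kubelet.kubeconfig \\
  --log-dir /var/log/kubernetes/ \\
  --pod-infra-container-image t29617342/pause-amd64:3.0 \\
  --logtostderr=false \\
  --v=2
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
}

# Usage on k8s-node2:
#   gen_kubelet_unit 10.0.0.13 && systemctl daemon-reload && systemctl restart kubelet
```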

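- Repeat steps 4, 5 and 6 above (install docker, push the kubelet files, configure kubelet.service) on the other worker nodes, changing the scp target IP and --hostname-override accordingly

- Verify on the master node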

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.0.0.12 Ready <none> 4m19s v1.15.4

10.0.0.13 Ready <none> 13s v1.15.4

Installing the kube-proxy service

- On k8s-node3, issue the kube-proxy-client certificate

cd /opt/certs/

cat > /opt/certs/kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

EOF

[root@k8s-node3 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json | cfssljson -bare kube-proxy-client

2020/12/14 16:20:46 [INFO] generate received request

2020/12/14 16:20:46 [INFO] received CSR

2020/12/14 16:20:46 [INFO] generating key: rsa-2048

2020/12/14 16:20:46 [INFO] encoded CSR

2020/12/14 16:20:46 [INFO] signed certificate with serial number 364147028440857189661095322729307531340019233888

2020/12/14 16:20:46 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-node3 certs]# ls kube-proxy-c*

kube-proxy-client.csr kube-proxy-client-key.pem kube-proxy-client.pem kube-proxy-csr.json

- On k8s-node3, generate the kube-proxy client credential file kube-proxy.kubeconfig

kubectl config set-cluster myk8s \
  --certificate-authority=/opt/certs/ca.pem \
  --embed-certs=true \
  --server=https://10.0.0.11:6443 \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/certs/kube-proxy-client.pem \
  --client-key=/opt/certs/kube-proxy-client-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig

- From k8s-node3, push the kube-proxy binary and its client credential file to the worker nodes

scp -rp /opt/certs/kube-proxy.kubeconfig root@10.0.0.12:/etc/kubernetes/

scp -rp /opt/certs/kube-proxy.kubeconfig root@10.0.0.13:/etc/kubernetes/

scp -rp /opt/softs/kubernetes/server/bin/kube-proxy root@10.0.0.12:/usr/bin/

scp -rp /opt/softs/kubernetes/server/bin/kube-proxy root@10.0.0.13:/usr/bin/

- On each worker node, configure kube-proxy.service, start it and enable it at boot (remember to change --hostname-override)

cat > /usr/lib/systemd/system/kube-proxy.service <<EOF
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
ExecStart=/usr/bin/kube-proxy \\
  --kubeconfig /etc/kubernetes/kube-proxy.kubeconfig \\
  --cluster-cidr 172.18.0.0/16 \\
  --hostname-override 10.0.0.12 \\
  --logtostderr=false \\
  --v=2
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload

systemctl enable kube-proxy.service

systemctl start kube-proxy.service

In this unit, --cluster-cidr 172.18.0.0/16 is the pod IP range.

Configuring the flannel network

- Install flannel on all nodes (including the master, which makes testing easier)

yum install flannel -y

mkdir -p /opt/certs/

- On k8s-node3, reuse the client certificate for flannel instead of issuing a new one, and push it to all other nodes

cd /opt/certs/

scp -rp ca.pem client*pem root@10.0.0.11:/opt/certs/

scp -rp ca.pem client*pem root@10.0.0.12:/opt/certs/

scp -rp ca.pem client*pem root@10.0.0.13:/opt/certs/

- On an etcd node, create the flannel key

# This key defines the pod IP address range

etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16","Backend": {"Type": "vxlan"} }'

Note: this may fail with

Error: x509: certificate signed by unknown authority

Retrying a few times usually works.
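Assuming flannel's default per-node SubnetLen of 24 (consistent with the --bip=172.18.28.1/24 that flannel writes for docker further below), the 172.18.0.0/16 network can be split into at most 2^(24-16) = 256 node subnets. Flannel by default starts allocating at the second subnet, so treat this as an upper bound on the node count. The helper name is illustrative.

```shell
# Illustrative helper: how many subnets of prefix length $2 fit into a
# network of prefix length $1 (each node gets one such subnet).
subnet_count() {
  local network_prefix="$1" subnet_len="$2"
  echo $(( 1 << (subnet_len - network_prefix) ))
}

subnet_count 16 24   # prints 256
```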

- On all nodes, configure flanneld, start it and enable it at boot

cat > /etc/sysconfig/flanneld <<EOF

FLANNEL_ETCD_ENDPOINTS="https://10.0.0.11:2379,https://10.0.0.12:2379,https://10.0.0.13:2379"

FLANNEL_ETCD_PREFIX="/atomic.io/network"

FLANNEL_OPTIONS="-etcd-cafile=/opt/certs/ca.pem -etcd-certfile=/opt/certs/client.pem -etcd-keyfile=/opt/certs/client-key.pem"

EOF

systemctl enable flanneld.service

systemctl start flanneld.service

- On k8s-node1 and k8s-node2, modify docker.service: pass the flannel parameters to dockerd and set the iptables FORWARD policy to ACCEPT

sed -i '/ExecStart/c ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock' /usr/lib/systemd/system/docker.service

sed -i '/ExecStart/i ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT' /usr/lib/systemd/system/docker.service

systemctl daemon-reload

systemctl restart docker

When docker starts, it must use the DOCKER_NETWORK_OPTIONS parameters generated by flannel so that docker0 and flannel use the same subnet.

[root@k8s-node1 ~]# cat /run/flannel/docker
DOCKER_OPT_BIP="--bip=172.18.28.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=true"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_NETWORK_OPTIONS=" --bip=172.18.28.1/24 --ip-masq=true --mtu=1450"
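/run/flannel/docker is a plain KEY="value" environment file, so the options docker will receive can be checked by sourcing it in a subshell. On CentOS the flannel package is expected to hook this file into docker.service through a systemd drop-in (EnvironmentFile=); treat that as an assumption and confirm it with systemctl cat docker. The helper name is hypothetical.

```shell
# Hypothetical helper: print DOCKER_NETWORK_OPTIONS from a flannel env file
# (default /run/flannel/docker). The subshell keeps the variables out of the caller.
flannel_docker_opts() {
  ( . "${1:-/run/flannel/docker}" && echo "$DOCKER_NETWORK_OPTIONS" )
}

# Usage on a worker node:
#   flannel_docker_opts          # e.g. " --bip=172.18.28.1/24 --ip-masq=true --mtu=1450"
#   ip -4 addr show docker0      # docker0 should carry the --bip address
```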

- On the master, verify connectivity between the nodes

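# docker0 and flannel.1 are in the same 172.18 network
ifconfig

# Start a container on each worker node
docker run -it alpine

# Check the container IP
ifconfig

# From the master, ping the containers started on every node to verify connectivity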

- Verify the k8s cluster on the master

① Create pod resources

kubectl run nginx --image=nginx:1.13 --replicas=2

kubectl get pod -o wide -A

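Creating deployments with kubectl run is deprecated and will be removed in the future; use this instead:

kubectl create deployment test --image=nginx:1.13

Newer k8s versions support the -A flag:

-A, --all-namespaces  # if present, list the requested objects across all namespaces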

② Create the svc resource

kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

kubectl get svc

③ Verify access

[root@k8s-master ~]# curl -I 10.0.0.12:55531

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Mon, 14 Dec 2020 09:27:20 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

[root@k8s-master ~]# curl -I 10.0.0.13:55531

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Mon, 14 Dec 2020 09:27:23 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

Enable TAB completion for kubectl:

echo "source <(kubectl completion bash)" >> ~/.bashrc

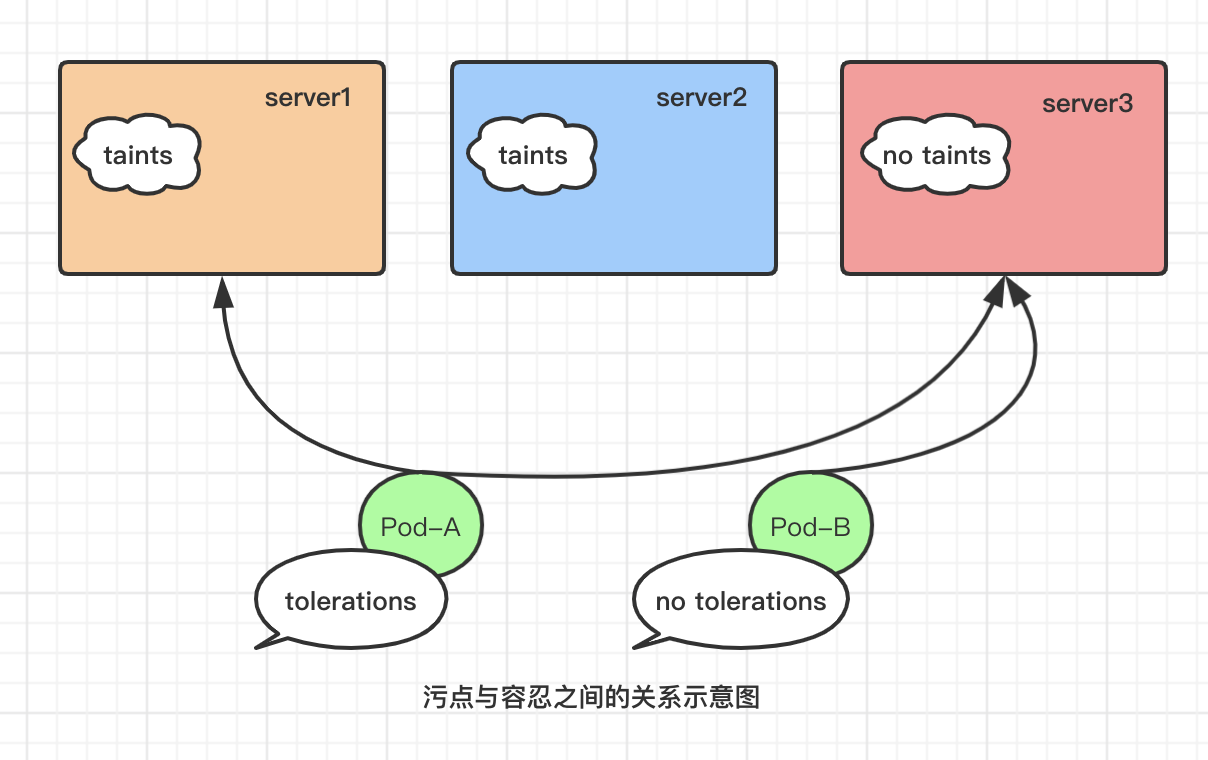

Taints and tolerations

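Node and pod affinity attract pods to a set of nodes [based on topology domains], either as a preference or as a hard requirement.

Taints do the opposite: they are applied to nodes and allow a node to repel a set of pods.

A taint is a key=value:effect defined on a node; it makes the node refuse to run pods unless a pod has a toleration for that taint.

Tolerations are applied to pods and allow (but do not require) pods to be scheduled onto nodes with matching taints.

Tolerations are key-value attributes defined on a pod object that declare which node taints it tolerates; the scheduler can only place a pod on nodes whose taints it tolerates.

Taints and tolerations work together to ensure that pods are not scheduled onto inappropriate nodes. One or more taints are applied to a node; this marks the node as not accepting any pod that does not tolerate those taints.

Note: in everyday use, pods are normally not scheduled onto the k8s master node precisely because the master carries a taint.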

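Evaluating multiple taints and multiple tolerations:

A node can carry several taints, and a pod can carry several tolerations.

Kubernetes processes them like a filter: start with all of the node's taints, then ignore the ones matched by one of the pod's tolerations; the remaining, un-ignored taints have the indicated effects on the pod:

- If at least one un-ignored taint has effect NoSchedule, Kubernetes will not schedule the pod onto that node.
- If there is no un-ignored NoSchedule taint but at least one un-ignored PreferNoSchedule taint, Kubernetes tries not to schedule the pod onto the node.
- If at least one un-ignored taint has effect NoExecute, the pod is evicted from the node (if it is already running there) and is not scheduled onto it.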

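Taints

Taints are a property of the node, set with kubectl taint using a key=value:effect syntax similar to labels.

Taint effects:

- NoSchedule: do not schedule any more pods onto this node; pods already running on it are unaffected.

- PreferNoSchedule: a soft version; the scheduler prefers other nodes and uses this one only as a fallback.

- NoExecute: clear the node out: new pods may not come, and existing pods are evicted. Suitable for taking a node offline.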

污点(Taints)的 effect 值 NoExecute,它会影响已经在节点上运行的 pod:

- 如果 pod 不能容忍 effect 值为 NoExecute 的 taint,那么 pod 将马上被驱逐

- 如果 pod 能够容忍 effect 值为 NoExecute 的 taint,且在 toleration 定义中没有指定 tolerationSeconds,则 pod 会一直在这个节点上运行。

- 如果 pod 能够容忍 effect 值为 NoExecute 的 taint,但是在toleration定义中指定了 tolerationSeconds,则表示 pod 还能在这个节点上继续运行的时间长度。
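The three NoExecute cases above reduce to a small decision function. This is a minimal sketch; eviction_delay is a hypothetical helper, not a Kubernetes API. It also assumes the documented Kubernetes behavior that a zero or negative tolerationSeconds means immediate eviction.

```python
from typing import Optional


def eviction_delay(tolerated: bool, toleration_seconds: Optional[int]) -> Optional[int]:
    """How long a running Pod stays after a NoExecute taint is added.

    Returns 0 for immediate eviction, None for "never evicted by this taint",
    otherwise the number of seconds before eviction.
    """
    if not tolerated:
        return 0                       # no matching toleration: evict now
    if toleration_seconds is None:
        return None                    # tolerated with no time limit
    return max(toleration_seconds, 0)  # tolerated for a bounded time


print(eviction_delay(True, 3600))  # 3600
```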

- View the node labels

[root@k8s-master ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

10.0.0.12 NotReady <none> 17h v1.15.4 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=10.0.0.12,kubernetes.io/os=linux

10.0.0.13 NotReady <none> 17h v1.15.4 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=10.0.0.13,kubernetes.io/os=linux

- Add a label: node role

kubectl label nodes 10.0.0.12 node-role.kubernetes.io/node=

- View the node labels: the ROLES column of 10.0.0.12 now shows node

[root@k8s-master ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

10.0.0.12 NotReady node 17h v1.15.4 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=10.0.0.12,kubernetes.io/os=linux,node-role.kubernetes.io/node=

10.0.0.13 NotReady <none> 17h v1.15.4 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=10.0.0.13,kubernetes.io/os=linux

- Remove the label

kubectl label nodes 10.0.0.12 node-role.kubernetes.io/node-

- Add a label: disk type

kubectl label nodes 10.0.0.12 disk=ssd

kubectl label nodes 10.0.0.13 disk=sata

- Clear out the other pods

kubectl delete deployments --all

- View the current pods: there are 2

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6459cd46fd-dl2ct 1/1 Running 1 16h 172.18.28.3 10.0.0.12 <none> <none>

nginx-6459cd46fd-zfwbg 1/1 Running 0 16h 172.18.98.4 10.0.0.13 <none> <none>

NoSchedule

- Add a taint: NoSchedule, keyed on the disk type

kubectl taint node 10.0.0.12 disk=ssd:NoSchedule

- View the taint

kubectl describe nodes 10.0.0.12|grep Taint

- Change the replica count

kubectl scale deployment nginx --replicas=5

- Check the pods: all new pods are created on 10.0.0.13

kubectl get pod -o wide

- Remove the taint

kubectl taint node 10.0.0.12 disk-

NoExecute

- Add a taint: NoExecute, keyed on the disk type

kubectl taint node 10.0.0.12 disk=ssd:NoExecute

- Check the pods: all pods now run on 10.0.0.13, and the pods previously on 10.0.0.12 have been evicted and recreated on 10.0.0.13

kubectl get pod -o wide

- Remove the taint

kubectl taint node 10.0.0.12 disk-

PreferNoSchedule

- Add a taint: PreferNoSchedule, keyed on the disk type

kubectl taint node 10.0.0.12 disk=ssd:PreferNoSchedule

- Change the replica count

kubectl scale deployment nginx --replicas=2

kubectl scale deployment nginx --replicas=5

- Check the pods: some pods are still created on 10.0.0.12

kubectl get pod -o wide

- Remove the taint

kubectl taint node 10.0.0.12 disk-

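Tolerations

Tolerations: a field of pod.spec. A Pod with a matching toleration tolerates the taint and can be scheduled onto the tainted node.

- View the field documentation

kubectl explain pod.spec.tolerations

- Write a Deployment YAML file whose Pods tolerate the NoExecute taint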

mkdir -p /root/k8s_yaml/deploy && cd /root/k8s_yaml/deploy

cat > /root/k8s_yaml/deploy/k8s_deploy.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      tolerations:
      - key: "disk"
        operator: "Equal"
        value: "ssd"
        effect: "NoExecute"
      containers:
      - name: nginx
        image: nginx:1.13
        ports:
        - containerPort: 80
EOF

- 创建deploy资源

kubectl delete deployments nginx

kubectl create -f k8s_deploy.yaml

- 查看当前pod

kubectl get pod -o wide

- 添加污点:基于硬盘类型的NoExecute

kubectl taint node 10.0.0.12 disk=ssd:NoExecute

- 调整副本数

kubectl scale deployment nginx --replicas=5

- 查看pod验证:有部分pod都在10.0.0.12上创建,容忍了污点

kubectl get pod -o wide

- 删除污点

kubectl taint node 10.0.0.12 disk-

pod.spec.tolerations examples

tolerations:
- key: "key"
  operator: "Equal"
  value: "value"
  effect: "NoSchedule"
---
tolerations:
- key: "key"
  operator: "Exists"
  effect: "NoSchedule"
---
tolerations:
- key: "key"
  operator: "Equal"
  value: "value"
  effect: "NoExecute"
  tolerationSeconds: 3600

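Notes:

- key, value and effect must match the taint set on the node

- When operator is Exists, no value is given (the API rejects one); a matching key and effect are enough

- tolerationSeconds only applies to tolerations of NoExecute taints: it is how long the Pod may keep running on the node after the taint is added

With no key and no effect, and operator set to Exists, the toleration tolerates every taint (it matches all keys, values and effects):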

tolerations:
- operator: "Exists"

With no effect specified, the toleration matches every effect of the taints with that key:

tolerations:
- key: "key"
  operator: "Exists"
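The notes above can be checked with a small Python model of how one toleration matches one taint. It includes the two validation rules the apiserver enforces: a value is not allowed with Exists, and an empty key requires Exists. matches and validate are hypothetical helpers; tolerations and taints are plain dicts shaped like the YAML above.

```python
def validate(tol: dict) -> None:
    """Reject toleration combinations that the API server refuses."""
    op = tol.get("operator", "Equal")
    if op not in ("Equal", "Exists"):
        raise ValueError(f"unknown operator {op!r}")
    if op == "Exists" and tol.get("value"):
        raise ValueError("value must be empty when operator is Exists")
    if not tol.get("key") and op != "Exists":
        raise ValueError("an empty key requires operator Exists")


def matches(tol: dict, taint: dict) -> bool:
    """Does one toleration (as written in YAML) match one node taint?"""
    validate(tol)
    if tol.get("effect") and tol["effect"] != taint["effect"]:
        return False                 # effect given: it must be equal
    if not tol.get("key"):
        return True                  # empty key + Exists: every taint
    if tol["key"] != taint["key"]:
        return False
    if tol.get("operator", "Equal") == "Exists":
        return True                  # value is not compared
    return tol.get("value", "") == taint.get("value", "")


taint = {"key": "key", "value": "value", "effect": "NoSchedule"}
print(matches({"key": "key", "operator": "Exists"}, taint))  # True
```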

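When there are several masters, the following setting avoids wasting their resources: with PreferNoSchedule, Pods are placed on the masters only as a fallback.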

kubectl taint nodes Node-name node-role.kubernetes.io/master=:PreferNoSchedule

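Common resources

Pod resources

A Pod consists of at least two containers: the infrastructure (pause) container plus the application container(s).

- Dynamic pods: the kubelet gets their definitions from the API server (which stores them in etcd).

- Static pods: the kubelet reads their YAML files from a local directory.

- On k8s-node1, edit kubelet.service to set the static pod path; this directory must contain only static pod YAML files

# '22a' appends the text after line 22 of the kubelet.service written earlier; check that line number in your copy

sed -i '22a \ \ --pod-manifest-path /etc/kubernetes/manifest \\' /usr/lib/systemd/system/kubelet.service

mkdir /etc/kubernetes/manifest

systemctl daemon-reload

systemctl restart kubelet.service

- On k8s-node1, create the static pod YAML file: the static pod is created immediately, and its name gets the node name (here 10.0.0.12) as a suffix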

cat > /etc/kubernetes/manifest/k8s_pod.yaml <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: static-pod
spec:
  containers:
  - name: nginx
    image: nginx:1.13
    ports:
    - containerPort: 80
EOF

- View the pods on the master

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-6459cd46fd-dl2ct 1/1 Running 0 51m

nginx-6459cd46fd-zfwbg 1/1 Running 0 51m

test-8c7c68d6d-x79hf 1/1 Running 0 51m

static-pod-10.0.0.12 1/1 Running 0 3s

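kubeadm deploys the k8s control-plane components as static pods.

Static pods:

Create the YAML file, and the pod is created automatically right away.

Remove the YAML file, and the pod is removed automatically right away.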
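The create-on-add and remove-on-delete behavior is a reconciliation loop. The kubelet periodically compares the files in the manifest directory with the static pods it runs.

Below is a minimal sketch of one pass. The names sync and mirror_name are hypothetical, and YAML parsing is left out. The name suffix follows the static-pod-10.0.0.12 output shown above.

```python
from typing import Dict, Set, Tuple


def mirror_name(pod_name: str, node_name: str) -> str:
    """Name under which the API server shows a static pod: <name>-<nodeName>."""
    return f"{pod_name}-{node_name}"


def sync(manifests: Dict[str, str], running: Set[str], node_name: str) -> Tuple[Set[str], Set[str]]:
    """One kubelet-style sync pass over the static pod directory.

    manifests maps each YAML file in the directory to its metadata.name
    (parsing the YAML is left out of this sketch).
    Returns (pods to start, pods to stop).
    """
    desired = {mirror_name(name, node_name) for name in manifests.values()}
    return desired - running, running - desired


# File added: the pod must be started
print(sync({"k8s_pod.yaml": "static-pod"}, set(), "10.0.0.12"))
# File removed: the pod must be stopped
print(sync({}, {"static-pod-10.0.0.12"}, "10.0.0.12"))
```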

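Secret resources

A Secret is a namespaced resource holding sensitive data such as passwords, keys and certificates. The values are only base64-encoded, not encrypted, unless encryption at rest is configured on the apiserver.

Integrating k8s with Harbor:

First set up a Harbor Docker image registry with HTTPS enabled, and create a private project.

Then store the registry credentials in a Secret, which is used to authenticate when pulling images.

First approach: the Deployment references the Secret when pulling images

- Create the Secret regcred

kubectl create secret docker-registry regcred --docker-server=blog.oldqiang.com --docker-username=admin --docker-password=a123456 --docker-email=296917342@qq.com

- View the Secrets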

[root@k8s-master ~]# kubectl get secrets

NAME TYPE DATA AGE

default-token-vgc4l kubernetes.io/service-account-token 3 2d19h

regcred kubernetes.io/dockerconfigjson 1 114s
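A kubernetes.io/dockerconfigjson Secret stores a Docker config.json under the key .dockerconfigjson. The Python sketch below builds roughly what kubectl create secret docker-registry generates. The exact field set may vary between kubectl versions, and docker_config_json and secret_data are hypothetical helpers. Decoding the result shows the password is only base64-encoded.

```python
import base64
import json


def docker_config_json(server: str, username: str, password: str, email: str = "") -> str:
    """Build a Docker config.json with one registry entry."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    entry = {"username": username, "password": password, "auth": auth}
    if email:
        entry["email"] = email
    return json.dumps({"auths": {server: entry}})


def secret_data(server: str, username: str, password: str, email: str = "") -> dict:
    """The Secret's data map: values are base64-encoded, not encrypted."""
    raw = docker_config_json(server, username, password, email)
    return {".dockerconfigjson": base64.b64encode(raw.encode()).decode()}


data = secret_data("blog.oldqiang.com", "admin", "a123456", "296917342@qq.com")
decoded = json.loads(base64.b64decode(data[".dockerconfigjson"]))
print(decoded["auths"]["blog.oldqiang.com"]["password"])  # a123456
```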

- The Deployment uses the credentials in the Secret to pull the image

cd /root/k8s_yaml/deploy

cat > /root/k8s_yaml/deploy/k8s_deploy_secrets.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      imagePullSecrets:
      - name: regcred
      containers:
      - name: nginx
        image: blog.oldqiang.com/oldboy/nginx:1.13
        ports:
        - containerPort: 80
EOF

- Create the Deployment

kubectl delete deployments nginx

kubectl create -f k8s_deploy_secrets.yaml

- View the current pods: the resources were created successfully

kubectl get pod -o wide

RBAC approach: the Pod pulls the image through a user (ServiceAccount) that references the Secret

- Create the Secret harbor-secret

kubectl create secret docker-registry harbor-secret --namespace=default --docker-username=admin --docker-password=a123456 --docker-server=blog.oldqiang.com

- Write the YAML files for the user and the Pod

cd /root/k8s_yaml/deploy

# Create the user (ServiceAccount)

cat > /root/k8s_yaml/deploy/k8s_sa_harbor.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: docker-image
  namespace: default
imagePullSecrets:
- name: harbor-secret
EOF

# Create the Pod

cat > /root/k8s_yaml/deploy/k8s_pod.yaml <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: static-pod
spec:
  serviceAccount: docker-image
  containers:
  - name: nginx
    image: blog.oldqiang.com/oldboy/nginx:1.13
    ports:
    - containerPort: 80
EOF

- Create the resources

kubectl delete deployments nginx

kubectl create -f k8s_sa_harbor.yaml

kubectl create -f k8s_pod.yaml

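- View the current pods: the resources were created successfully

kubectl get pod -o wide

ConfigMap resources

A ConfigMap stores configuration files, which can be mounted into a Pod's containers.

- Create the configuration file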

cat > /root/k8s_yaml/deploy/81.conf <<EOF
server {
    listen       81;
    server_name  localhost;
    root         /html;
    index        index.html index.htm;
    location / {
    }
}
EOF

- Create the ConfigMap (--from-file can be given several times)

kubectl create configmap 81.conf --from-file=/root/k8s_yaml/deploy/81.conf

- View the ConfigMap

kubectl get cm

kubectl get cm 81.conf -o yaml
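With --from-file=<path>, kubectl uses the file's base name as the key and the file content as the value. This is why the key 81.conf appears in kubectl get cm 81.conf -o yaml and in items[].key of the Deployments.

A minimal Python sketch of that mapping for plain files follows. configmap_data is a hypothetical helper, and the --from-file=<key>=<path> form and directory arguments are not covered.

```python
import os
import tempfile
from typing import Dict, Iterable


def configmap_data(paths: Iterable[str]) -> Dict[str, str]:
    """Mimic --from-file=PATH for plain files: key = base name, value = content."""
    data: Dict[str, str] = {}
    for path in paths:
        key = os.path.basename(path)
        if key in data:
            raise ValueError(f"duplicate key {key!r}")
        with open(path, encoding="utf-8") as fh:
            data[key] = fh.read()
    return data


with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "81.conf")
    with open(path, "w", encoding="utf-8") as fh:
        fh.write("server { listen 81; }\n")
    print(configmap_data([path]))  # {'81.conf': 'server { listen 81; }\n'}
```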

- Mount the ConfigMap in a Deployment

cd /root/k8s_yaml/deploy

cat > /root/k8s_yaml/deploy/k8s_deploy_cm.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
      - name: nginx-config
        configMap:
          name: 81.conf
          items:
          - key: 81.conf  # pick one of the files stored in the ConfigMap
            path: 81.conf
      containers:
      - name: nginx
        image: nginx:1.13
        volumeMounts:
        - name: nginx-config
          mountPath: /etc/nginx/conf.d
        ports:
        - containerPort: 80
          name: port1
        - containerPort: 81
          name: port2
EOF

- Create the Deployment

kubectl delete deployments nginx

kubectl create -f k8s_deploy_cm.yaml

- View the current pods

kubectl get pod -o wide

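- However, a volumeMount without subPath mounts over the whole /etc/nginx/conf.d directory. This hides the image's original default.conf, so port 80 no longer responds.

initContainers

Before a Pod's application containers start, its initContainers run one after another, each to completion, to perform initialization.

- View the field documentation

kubectl explain pod.spec.initContainers

- Mount the ConfigMap in a Deployment, with init containers preparing the files

Initialization steps:

- Init container 1: mounts the persistent hostPath and the ConfigMap, and copies 81.conf into the persistent directory

- Init container 2: mounts the persistent hostPath and copies default.conf (from the nginx image) into the persistent directory

Finally the Deployment's main container starts and mounts the persistent directory.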
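The ordering guarantee is what makes this work. Init containers run one at a time in the order listed, and each must exit successfully before the next starts. The nginx container starts only after all of them have finished.

A toy Python simulation of that sequencing follows. start_pod and the dict standing in for the /mnt hostPath are hypothetical. Real Pods retry a failed init container according to restartPolicy instead of simply stopping.

```python
from typing import Callable, List, Tuple


def start_pod(init_steps: List[Tuple[str, Callable[[], bool]]],
              start_app: Callable[[], None]) -> List[str]:
    """Run init containers one at a time, in order; start the app only if all succeed."""
    log = []
    for name, step in init_steps:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            return log  # simplification: Kubernetes would retry this init container
    start_app()
    log.append("app: started")
    return log


volume = {}  # stands in for the hostPath directory /mnt


def cp1() -> bool:
    volume["81.conf"] = "copied from the ConfigMap"
    return True


def cp2() -> bool:
    volume["default.conf"] = "copied from the nginx image"
    return True


log = start_pod([("cp1", cp1), ("cp2", cp2)], lambda: None)
print(log)  # ['cp1: ok', 'cp2: ok', 'app: started']
```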

cd /root/k8s_yaml/deploy

cat > /root/k8s_yaml/deploy/k8s_deploy_init.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
      - name: config
        hostPath:
          path: /mnt
      - name: tmp
        configMap:
          name: 81.conf
          items:
          - key: 81.conf
            path: 81.conf
      initContainers:
      - name: cp1
        image: nginx:1.13
        volumeMounts:
        - name: config
          mountPath: /nginx_config
        - name: tmp
          mountPath: /tmp
        command: ["cp","/tmp/81.conf","/nginx_config/"]
      - name: cp2
        image: nginx:1.13
        volumeMounts:
        - name: config
          mountPath: /nginx_config
        command: ["cp","/etc/nginx/conf.d/default.conf","/nginx_config/"]
      containers:
      - name: nginx
        image: nginx:1.13
        volumeMounts:
        - name: config
          mountPath: /etc/nginx/conf.d
        ports:
        - containerPort: 80
          name: port1
        - containerPort: 81
          name: port2
EOF

- Create the Deployment

kubectl delete deployments nginx

kubectl create -f k8s_deploy_init.yaml

- View the current pods

kubectl get pod -o wide -l app=nginx

- Check that both configuration files exist: 81.conf and default.conf

kubectl exec -ti nginx-7879567f94-25g5s /bin/bash

ls /etc/nginx/conf.d

Common services

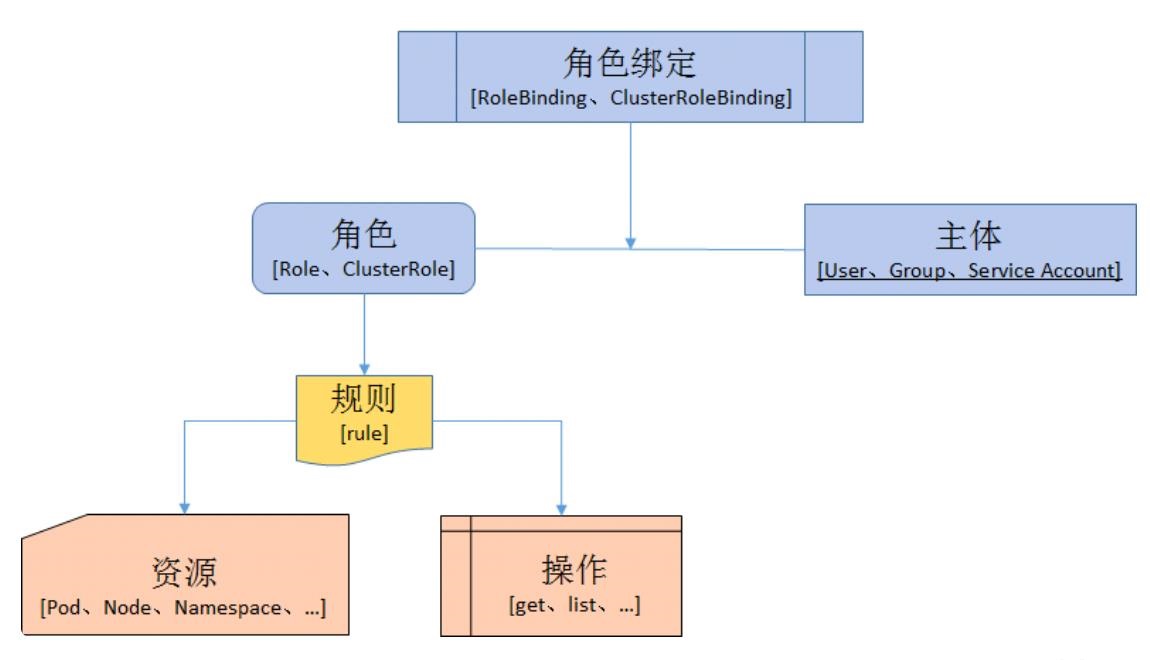

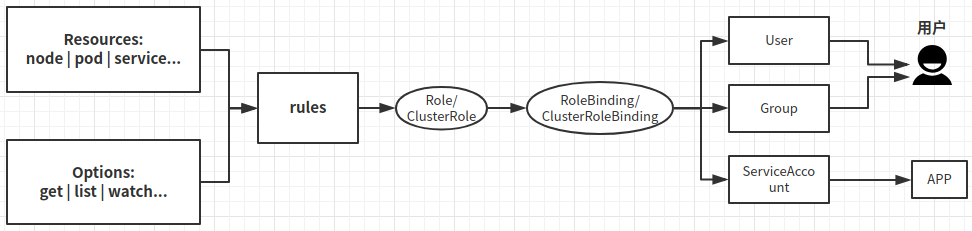

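RBAC

RBAC: role-based access control

RBAC is the Kubernetes authorization mechanism. It is enabled by starting the apiserver with --authorization-mode=RBAC.

Granting access with RBAC takes two steps:

1) Define a role: the role specifies the access-control rules for resources;

2) Bind the role: bind a subject to the role to grant it access.

User: sa (ServiceAccount)

Role: role

- Namespaced role: Role

- Role binding (grant): RoleBinding

- Cluster-wide role: ClusterRole

- Cluster role binding (grant): ClusterRoleBinding

Usage flow

- For people: if a user needs permissions, bind the Role to a User (or Group). Users and groups are not API objects; they come from the authentication layer, e.g. client certificates.

- For programs: if a program needs permissions, bind the Role to a ServiceAccount. This requires creating the ServiceAccount and specifying it in the Deployment.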
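Once roles are bound, the apiserver authorizes each request by checking it against the rules of every role bound to the subject. RBAC only grants permissions; it never denies.

Below is a simplified Python model of that check. PolicyRule and allowed are hypothetical names, and subresources, non-resource URLs and aggregated roles are ignored. It is exercised with the system:coredns ClusterRole rules from the CoreDNS manifest in the next section.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PolicyRule:
    api_groups: List[str]
    resources: List[str]
    verbs: List[str]
    resource_names: List[str] = field(default_factory=list)  # empty = any name


def _covers(allowed_values: List[str], value: str) -> bool:
    return "*" in allowed_values or value in allowed_values


def allowed(rules: List[PolicyRule], api_group: str, resource: str,
            verb: str, name: str = "") -> bool:
    """Additive check: allowed if any rule of any bound role covers the request."""
    for r in rules:
        if (_covers(r.api_groups, api_group) and _covers(r.resources, resource)
                and _covers(r.verbs, verb)
                and (not r.resource_names or name in r.resource_names)):
            return True
    return False


coredns_rules = [
    PolicyRule([""], ["endpoints", "services", "pods", "namespaces"], ["list", "watch"]),
    PolicyRule([""], ["nodes"], ["get"]),
]
print(allowed(coredns_rules, "", "services", "watch"))   # True
print(allowed(coredns_rules, "", "services", "delete"))  # False
```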

Deploy the DNS service

Deploy CoreDNS (see the official documentation)

- On the master, create coredns.yaml (the Pod is pinned to node2 via nodeName)

mkdir -p /root/k8s_yaml/dns && cd /root/k8s_yaml/dns

cat > /root/k8s_yaml/dns/coredns.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            upstream
            fallthrough in-addr.arpa ip6.arpa
            ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        beta.kubernetes.io/os: linux
      nodeName: 10.0.0.13
      containers:
      - name: coredns
        image: coredns/coredns:1.3.1
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 100Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        - name: tmp
          mountPath: /tmp
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
      dnsPolicy: Default
      volumes:
      - name: tmp
        emptyDir: {}
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.254.230.254
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
EOF

- Create the resources on the master (pull the image coredns/coredns:1.3.1 beforehand)

kubectl create -f coredns.yaml

- On the master, check the pod and the user (ServiceAccount) it runs as

kubectl get pod -n kube-system

kubectl get pod -n kube-system coredns-6cf5d7fdcf-dvp8r -o yaml | grep -i ServiceAccount

- On the master, check the ClusterRole, the ClusterRoleBinding and the ServiceAccount of the coredns user

kubectl get clusterrole | grep coredns

kubectl get clusterrolebindings | grep coredns

kubectl get sa -n kube-system | grep coredns

- On the master, write the YAML files for the tomcat + mysql Deployments and Services

mkdir -p /root/k8s_yaml/tomcat_deploy && cd /root/k8s_yaml/tomcat_deploy

cat > /root/k8s_yaml/tomcat_deploy/mysql-deploy.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  namespace: tomcat
  name: mysql
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: mysql:5.7
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: '123456'
EOF

cat > /root/k8s_yaml/tomcat_deploy/mysql-svc.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  namespace: tomcat
  name: mysql
spec:
  ports:
  - port: 3306
    targetPort: 3306
  selector:
    app: mysql
EOF

cat > /root/k8s_yaml/tomcat_deploy/tomcat-deploy.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  namespace: tomcat
  name: myweb
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: kubeguide/tomcat-app:v2
        ports:
        - containerPort: 8080
        env:
        - name: MYSQL_SERVICE_HOST
          value: 'mysql'
        - name: MYSQL_SERVICE_PORT
          value: '3306'
EOF

cat > /root/k8s_yaml/tomcat_deploy/tomcat-svc.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  namespace: tomcat
  name: myweb
spec:
  type: NodePort
  ports:
  - port: 8080
    nodePort: 30008
  selector:
    app: myweb
EOF
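The variable names MYSQL_SERVICE_HOST and MYSQL_SERVICE_PORT set explicitly in tomcat-deploy.yaml follow the naming scheme the kubelet uses for Service environment variables. The kubelet injects these for Services that already exist in the Pod's namespace when the Pod starts.

Here the value 'mysql' is a DNS name rather than an IP, which is why the CoreDNS service deployed above is needed. Below is a sketch of the naming rule. service_env is a hypothetical helper, and the kubelet also injects further *_PORT_* variables that are not shown.

```python
from typing import Dict


def service_env(name: str, cluster_ip: str, port: int) -> Dict[str, str]:
    """Build the <NAME>_SERVICE_HOST / <NAME>_SERVICE_PORT pair for a Service."""
    prefix = name.upper().replace("-", "_")
    return {
        f"{prefix}_SERVICE_HOST": cluster_ip,
        f"{prefix}_SERVICE_PORT": str(port),
    }


print(service_env("mysql", "10.254.94.77", 3306))
# {'MYSQL_SERVICE_HOST': '10.254.94.77', 'MYSQL_SERVICE_PORT': '3306'}
```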

- Create the resources on the master (pull the images mysql:5.7 and kubeguide/tomcat-app:v2 beforehand)

kubectl create namespace tomcat

kubectl create -f .

- Verify on the master

[root@k8s-master tomcat_demo]# kubectl get pod -n tomcat

NAME READY STATUS RESTARTS AGE

mysql-94f6bbcfd-6nng8 1/1 Running 0 5s

myweb-5c8956ff96-fnhjh 1/1 Running 0 5s

[root@k8s-master tomcat_deploy]# kubectl -n tomcat exec -ti myweb-5c8956ff96-fnhjh /bin/bash

root@myweb-5c8956ff96-fnhjh:/usr/local/tomcat# ping mysql

PING mysql.tomcat.svc.cluster.local (10.254.94.77): 56 data bytes

^C--- mysql.tomcat.svc.cluster.local ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

root@myweb-5c8956ff96-fnhjh:/usr/local/tomcat# exit

exit

- Verify DNS

- On the master

[root@k8s-master deploy]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-6cf5d7fdcf-dvp8r 1/1 Running 0 177m 172.18.98.2 10.0.0.13 <none> <none>

yum install bind-utils -y

dig @172.18.98.2 kubernetes.default.svc.cluster.local +short

- On a node (the Service IP is reachable there through kube-proxy)

yum install bind-utils -y

dig @10.254.230.254 kubernetes.default.svc.cluster.local +short
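The earlier ping from the myweb pod loses every packet because a ClusterIP is a virtual IP that typically does not answer ICMP. What the test shows is that the short name mysql resolved to mysql.tomcat.svc.cluster.local through the pod's DNS search list.

A simplified Python sketch of that expansion follows. search_list and candidates are hypothetical helpers. The sketch assumes the cluster domain cluster.local from the Corefile and the ndots:5 option the kubelet normally writes for ClusterFirst pods.

```python
from typing import List


def search_list(namespace: str, domain: str = "cluster.local") -> List[str]:
    # Search domains in a ClusterFirst pod's /etc/resolv.conf
    return [f"{namespace}.svc.{domain}", f"svc.{domain}", domain]


def candidates(name: str, namespace: str, ndots: int = 5) -> List[str]:
    """Order in which a glibc-style resolver tries names (simplified)."""
    if name.endswith("."):
        return [name.rstrip(".")]  # already fully qualified
    expanded = [f"{name}.{d}" for d in search_list(namespace)]
    if name.count(".") >= ndots:
        return [name] + expanded   # enough dots: try as-is first
    return expanded + [name]


print(candidates("mysql", "tomcat")[0])  # mysql.tomcat.svc.cluster.local
```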

Deploy the dashboard service

- The official manifest, slightly modified

For k8s 1.15, dashboard 1.10.1 is recommended: kubernetes-dashboard.yaml

mkdir -p /root/k8s_yaml/dashboard && cd /root/k8s_yaml/dashboard

cat > /root/k8s_yaml/dashboard/kubernetes-dashboard.yaml <<EOF
# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# ------------------- Dashboard Secret ------------------- #
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque
---
# ------------------- Dashboard Service Account ------------------- #
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
---
# ------------------- Dashboard Role & Role Binding ------------------- #
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system
---
# ------------------- Dashboard Deployment ------------------- #
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: registry.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
---
# ------------------- Dashboard Service ------------------- #
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      nodePort: 30001
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
EOF

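# Changes from the official file:
# the image is switched to a mirror reachable from China
image: registry.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1
# the Service type is changed to NodePort, with a fixed host port
spec:
  type: NodePort
  ports:
    - port: 443
      nodePort: 30001
      targetPort: 8443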

- Create the resources (pull the image registry.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1 beforehand)

kubectl create -f kubernetes-dashboard.yaml

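- List the existing admin roles

kubectl get clusterrole | grep admin

- Create a user and bind it to the existing cluster-admin role (the default dashboard user has only minimal permissions)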

cat > /root/k8s_yaml/dashboard/dashboard_rbac.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-admin
  namespace: kube-system
EOF

- Create the resources

kubectl create -f dashboard_rbac.yaml

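- View the admin user's token (the Secret name ends with a random suffix; find it with kubectl get secrets -n kube-system)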

[root@k8s-master dashboard]# kubectl describe secrets -n kube-system kubernetes-admin-token-tpqs6

Name: kubernetes-admin-token-tpqs6

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-admin

kubernetes.io/service-account.uid: 17f1f684-588a-4639-8ec6-a39c02361d0e

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1354 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWFkbWluLXRva2VuLXRwcXM2Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6Imt1YmVybmV0ZXMtYWRtaW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIxN2YxZjY4NC01ODhhLTQ2MzktOGVjNi1hMzljMDIzNjFkMGUiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06a3ViZXJuZXRlcy1hZG1pbiJ9.JMvv-W50Zala4I0uxe488qjzDZ2m05KN0HMX-RCHFg87jHq49JGyqQJQDFgujKCyecAQSYRFm4uZWnKiWR81Xd7IZr16pu5exMpFaAryNDeAgTAsvpJhaAuumopjiXXYgip-7pNKxJSthmboQkQ4OOmzSHRv7N6vOsyDQOhwGcgZ01862dsjowP3cCPL6GSQCeXT0TX968MyeKZ-2JV4I2XdbkPoZYCRNvwf9F3u74xxPlC9vVLYWdNP8rXRBXi3W_DdQyXntN-jtMXHaN47TWuqKIgyWmT3ZzTIKhKART9_7YeiOAA6LVGtYq3kOvPqyGHvQulx6W2ADjCTAAPovA
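The token above is a JWT made of three base64url segments (header.payload.signature). The payload is only encoded, not encrypted, so anyone holding the token can read which ServiceAccount it belongs to. The apiserver accepts the token as a bearer credential.

A small Python sketch for inspecting the claims follows. jwt_claims is a hypothetical helper, and it deliberately skips signature verification, so use it only for inspection. For the token above, the sub claim reads system:serviceaccount:kube-system:kubernetes-admin.

```python
import base64
import json


def b64url_decode(part: str) -> bytes:
    # JWT segments are base64url without padding; restore the padding first
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def jwt_claims(token: str) -> dict:
    """Decode (without verifying!) the payload of a ServiceAccount token."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


# Usage: print(jwt_claims(token)["sub"]) for a token copied from kubectl describe secrets
```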

- Open https://10.0.0.12:30001 in Firefox and log in with the token

- Generate a certificate so that Chrome can open the kubernetes dashboard

mkdir /root/k8s_yaml/dashboard/key && cd /root/k8s_yaml/dashboard/key

openssl genrsa -out dashboard.key 2048

openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=10.0.0.11'

openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt

- Delete the original certificate Secret

kubectl delete secret kubernetes-dashboard-certs -n kube-system

- Create a new certificate Secret

kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kube-system

- Delete the pod; the automatically recreated pod picks up the new certificate

[root@k8s-master key]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6cf5d7fdcf-dvp8r 1/1 Running 0 4h19m

kubernetes-dashboard-5dc4c54b55-sn8sv 1/1 Running 0 41m

kubectl delete pod -n kube-system kubernetes-dashboard-5dc4c54b55-sn8sv

- Open https://10.0.0.12:30001 in Chrome and log in with the token

- Build a kubeconfig from the token, to work around token logins timing out quickly

DASH_TOKEN='eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWFkbWluLXRva2VuLXRwcXM2Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6Imt1YmVybmV0ZXMtYWRtaW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIxN2YxZjY4NC01ODhhLTQ2MzktOGVjNi1hMzljMDIzNjFkMGUiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06a3ViZXJuZXRlcy1hZG1pbiJ9.JMvv-W50Zala4I0uxe488qjzDZ2m05KN0HMX-RCHFg87jHq49JGyqQJQDFgujKCyecAQSYRFm4uZWnKiWR81Xd7IZr16pu5exMpFaAryNDeAgTAsvpJhaAuumopjiXXYgip-7pNKxJSthmboQkQ4OOmzSHRv7N6vOsyDQOhwGcgZ01862dsjowP3cCPL6GSQCeXT0TX968MyeKZ-2JV4I2XdbkPoZYCRNvwf9F3u74xxPlC9vVLYWdNP8rXRBXi3W_DdQyXntN-jtMXHaN47TWuqKIgyWmT3ZzTIKhKART9_7YeiOAA6LVGtYq3kOvPqyGHvQulx6W2ADjCTAAPovA'

kubectl config set-cluster kubernetes --server=https://10.0.0.11:6443 --kubeconfig=/root/dashbord-admin.conf

kubectl config set-credentials admin --token=$DASH_TOKEN --kubeconfig=/root/dashbord-admin.conf

kubectl config set-context admin --cluster=kubernetes --user=admin --kubeconfig=/root/dashbord-admin.conf

kubectl config use-context admin --kubeconfig=/root/dashbord-admin.conf
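The four kubectl config commands above only write a small YAML file. As a rough Python sketch, the same structure can be produced as JSON, which is also valid YAML; token_kubeconfig is a hypothetical helper.

The file carries no CA data. That is fine for the dashboard login, which reads the user's token from it. kubectl itself, however, would likely refuse the apiserver's certificate unless certificate-authority data or insecure-skip-tls-verify were added.

```python
import json


def token_kubeconfig(server: str, token: str,
                     cluster: str = "kubernetes", user: str = "admin") -> str:
    """Build the cluster/user/context structure written by the kubectl config commands."""
    cfg = {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{"name": cluster, "cluster": {"server": server}}],
        "users": [{"name": user, "user": {"token": token}}],
        "contexts": [{"name": user, "context": {"cluster": cluster, "user": user}}],
        "current-context": user,
    }
    return json.dumps(cfg, indent=2)


print(token_kubeconfig("https://10.0.0.11:6443", "<token>"))
```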

- Download it to your workstation for future logins

cd ~

sz dashbord-admin.conf

- Open https://10.0.0.12:30001 in Chrome and log in with the kubeconfig file; exec into containers now works

Networking

Mapping (Endpoints resources)

- On the master, view the Endpoints resources

[root@k8s-master ~]# kubectl get endpoints

NAME ENDPOINTS AGE

kubernetes 10.0.0.11:6443 28h

... ...

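Endpoints can map an external service into the cluster. Each Service is associated with an Endpoints object of the same name. For a Service with a label selector, that Endpoints object is maintained automatically from the matching Pods. For a Service without a selector, you create the same-named Endpoints object yourself.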
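How a Service finds its backends can be modeled in a few lines. This is a simplified Python sketch; service_backends and the pod records are hypothetical, and the pod IPs are made up.

With a selector, the endpoints controller fills the same-named Endpoints object from ready Pods with matching labels. Without a selector, the hand-written Endpoints object (here pointing at the MariaDB on 10.0.0.13) is used as-is.

```python
from typing import Dict, List, Optional


def service_backends(selector: Optional[Dict[str, str]], pods: List[dict],
                     manual_endpoints: List[str]) -> List[str]:
    """Where a Service's traffic goes (simplified)."""
    if selector:
        # Managed by the endpoints controller: ready pods whose labels match
        return [p["ip"] for p in pods
                if p.get("ready") and all(p["labels"].get(k) == v for k, v in selector.items())]
    # No selector: the same-named, hand-written Endpoints object is used
    return list(manual_endpoints)


pods = [
    {"ip": "172.18.98.5", "ready": True, "labels": {"app": "mysql"}},
    {"ip": "172.18.28.3", "ready": True, "labels": {"app": "myweb"}},
]
print(service_backends({"app": "mysql"}, pods, ["10.0.0.13"]))  # ['172.18.98.5']
print(service_backends(None, pods, ["10.0.0.13"]))              # ['10.0.0.13']
```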

- Prepare an external database on k8s-node2

yum install mariadb-server -y

systemctl start mariadb

mysql_secure_installation

n

y

y

y

y

mysql -e "grant all on *.* to root@'%' identified by '123456';"

This project's Tomcat index page has the database connection hard-coded: user root, password 123456.

- On the master, write the YAML file for the Endpoints and Service resources

cd /root/k8s_yaml/tomcat_deploy

cat > /root/k8s_yaml/tomcat_deploy/mysql_endpoint_svc.yaml <<EOF
apiVersion: v1
kind: Endpoints
metadata:
  name: mysql
  namespace: tomcat
subsets:
- addresses:
  - ip: 10.0.0.13
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: mysql
  namespace: tomcat
spec:
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
    targetPort: 3306
  type: ClusterIP
EOF

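# For reference, see the objects the system creates by default:

kubectl get endpoints kubernetes -o yaml

kubectl get svc kubernetes -o yaml

Note: the Service must not use a label selector here! Otherwise the endpoints controller manages the Endpoints object and overwrites the manual one.

- Create the resources on the master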

kubectl delete deployment mysql -n tomcat

kubectl delete svc mysql -n tomcat

kubectl create -f mysql_endpoint_svc.yaml

- On the master, view the Endpoints and how they are associated with the Service

kubectl get endpoints -n tomcat

kubectl describe svc -n tomcat

- Verify the data in the database on k8s-node2

[root@k8s-node2 ~]# mysql -e 'show databases;'

+--------------------+

| Database |

+--------------------+

| information_schema |

| HPE_APP |

| mysql |

| performance_schema |

+--------------------+

[root@k8s-node2 ~]# mysql -e 'use HPE_APP;select * from T_USERS;'

+----+-----------+-------+

| ID | USER_NAME | LEVEL |

+----+-----------+-------+

| 1 | me | 100 |

| 2 | our team | 100 |

| 3 | HPE | 100 |

| 4 | teacher | 100 |

| 5 | docker | 100 |

| 6 | google | 100 |

+----+-----------+-------+

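kube-proxy IPVS mode

- On each node, install the required tools

yum install ipvsadm conntrack-tools -y

- On each node, rewrite kube-proxy.service to add the parameter (set --hostname-override to that node's own IP)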

cat > /usr/lib/systemd/system/kube-proxy.service <<EOF
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
ExecStart=/usr/bin/kube-proxy \\
  --kubeconfig /etc/kubernetes/kube-proxy.kubeconfig \\
  --cluster-cidr 172.18.0.0/16 \\
  --hostname-override 10.0.0.12 \\
  --proxy-mode ipvs \\
  --logtostderr=false \\
  --v=2
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

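--proxy-mode ipvs # enable IPVS (LVS) mode; the LVS rules use NAT mode by default. If the IPVS requirements (e.g. kernel modules) are not met, kube-proxy falls back to iptables mode.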
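In IPVS mode, kube-proxy turns each Service ClusterIP:port into an IPVS virtual server whose real servers are the Service's Pod endpoints; these are what ipvsadm -L -n lists. kube-proxy's default IPVS scheduler is rr (round robin, changeable with --ipvs-scheduler).

Below is a toy Python model of rr. round_robin is a hypothetical helper, and the two nginx pod IPs from the earlier kubectl get pod output serve as example real servers.

```python
from itertools import cycle
from typing import Iterator, List


def round_robin(real_servers: List[str]) -> Iterator[str]:
    """IPVS 'rr' scheduler: hand out real servers in a fixed rotation."""
    if not real_servers:
        raise ValueError("virtual service has no real servers")
    return cycle(list(real_servers))


rr = round_robin(["172.18.28.3:80", "172.18.98.4:80"])
print([next(rr) for _ in range(3)])
# ['172.18.28.3:80', '172.18.98.4:80', '172.18.28.3:80']
```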

- On each node, restart kube-proxy and check the LVS rules

systemctl daemon-reload

systemctl restart kube-proxy.service

ipvsadm -L -n

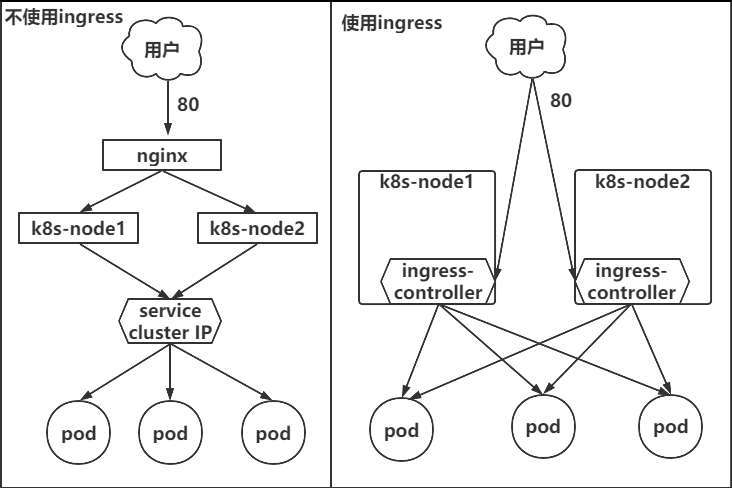

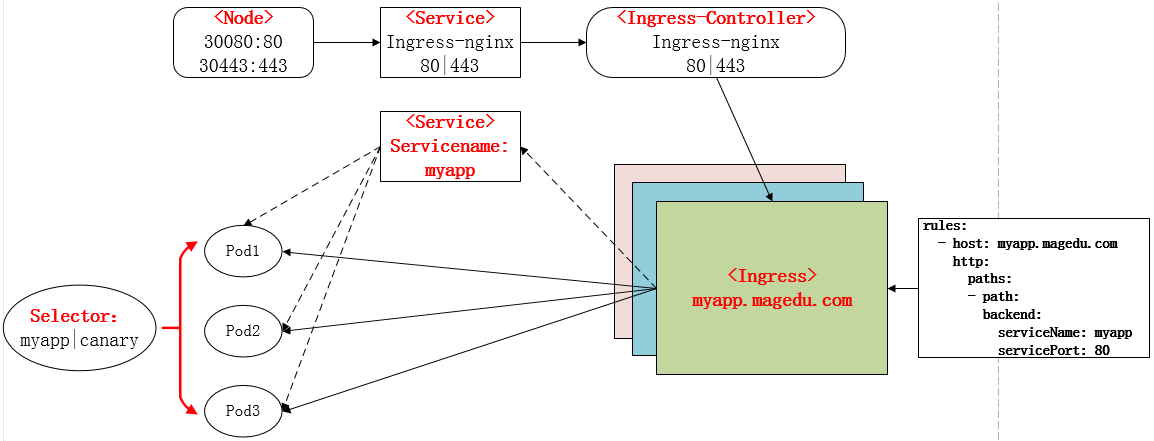

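Layer-7 load balancing (ingress-traefik)

Ingress has two main components: the Ingress Controller and the Ingress resource.

The ingress controller (here traefik) is the service component; in this setup it uses the host network directly. An Ingress resource is a set of forwarding rules that route requests to a given Service based on the DNS name (host) or the URL path.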
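Conceptually, the controller evaluates Ingress rules by host first and then by path. Below is a generic Python sketch of that routing decision, shown with the tomcat.oldqiang.com rule used later in this section and a fallback backend.

route is a hypothetical helper. It uses plain string-prefix matching and does not reproduce traefik's or ingress-nginx's exact matching rules.

```python
from typing import List, Optional, Tuple

Rule = Tuple[str, str, str]  # (host, path prefix, "service:port"); empty host = any host


def route(rules: List[Rule], host: str, path: str,
          default: Optional[str] = None) -> Optional[str]:
    """Pick a backend by exact host, then by the longest matching path prefix."""
    best, best_len = None, -1
    hostname = host.split(":")[0]  # ignore a :port in the Host header
    for rule_host, prefix, backend in rules:
        if rule_host and rule_host != hostname:
            continue
        if path.startswith(prefix) and len(prefix) > best_len:
            best, best_len = backend, len(prefix)
    return best if best is not None else default


rules = [("tomcat.oldqiang.com", "/", "myweb:8080")]
print(route(rules, "tomcat.oldqiang.com", "/demo/"))                          # myweb:8080
print(route(rules, "other.example.com", "/", default="default-http-backend"))  # default-http-backend
```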

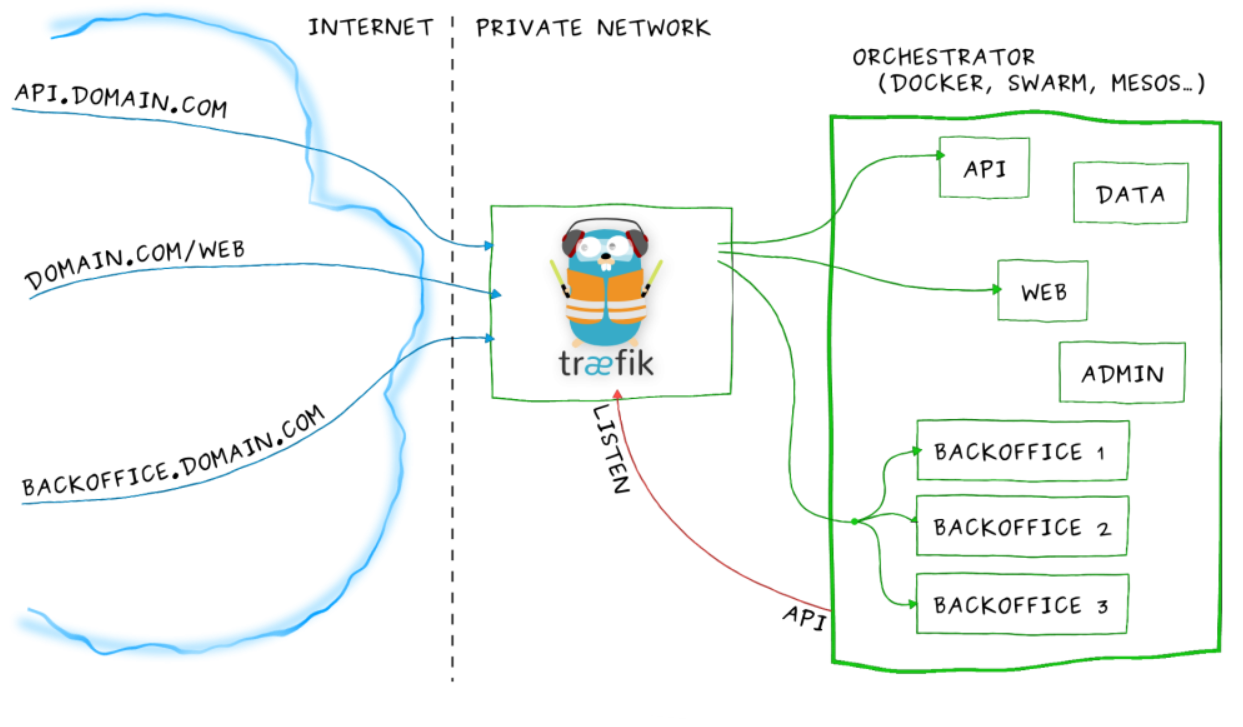

Ingress-Traefik

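Traefik is an open-source reverse proxy and load balancer. Its biggest advantage is that it integrates directly with common microservice systems and configures itself automatically and dynamically. It currently supports backends such as Docker, Swarm, Mesos/Marathon, Mesos, Kubernetes, Consul, Etcd, Zookeeper, BoltDB and a REST API.

Create the RBAC resources

- Write the RBAC YAML file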

mkdir -p /root/k8s_yaml/ingress && cd /root/k8s_yaml/ingress

cat > /root/k8s_yaml/ingress/ingress_rbac.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount
  name: traefik-ingress-controller
  namespace: kube-system
EOF

- Create the resources

kubectl create -f ingress_rbac.yaml

- View the resources

kubectl get serviceaccounts -n kube-system | grep traefik-ingress-controller

kubectl get clusterrole -n kube-system | grep traefik-ingress-controller

kubectl get clusterrolebindings.rbac.authorization.k8s.io -n kube-system | grep traefik-ingress-controller

Deploy the traefik service

- Write the YAML file for the traefik DaemonSet

cat > /root/k8s_yaml/ingress/ingress_traefik.yaml <<EOF
kind: DaemonSet
apiVersion: extensions/v1beta1
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress-lb
spec:
  selector:
    matchLabels:
      k8s-app: traefik-ingress-lb
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress-lb
        name: traefik-ingress-lb
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      tolerations:
      - operator: "Exists"
      #nodeSelector:
        #kubernetes.io/hostname: master
      # use the host network; ports are exposed on the host via hostPort
      hostNetwork: true
      containers:
      - image: traefik:v1.7.2
        imagePullPolicy: IfNotPresent
        name: traefik-ingress-lb
        ports:
        - name: http
          containerPort: 80
          hostPort: 80
        - name: admin
          containerPort: 8080
          hostPort: 8080
        args:
        - --api
        - --kubernetes
        - --logLevel=DEBUG
---
kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress-lb
  ports:
    - protocol: TCP
      port: 80
      name: web
    - protocol: TCP
      port: 8080
      name: admin
  type: NodePort
EOF

- Create the resources (pull the image traefik:v1.7.2 beforehand)

kubectl create -f ingress_traefik.yaml

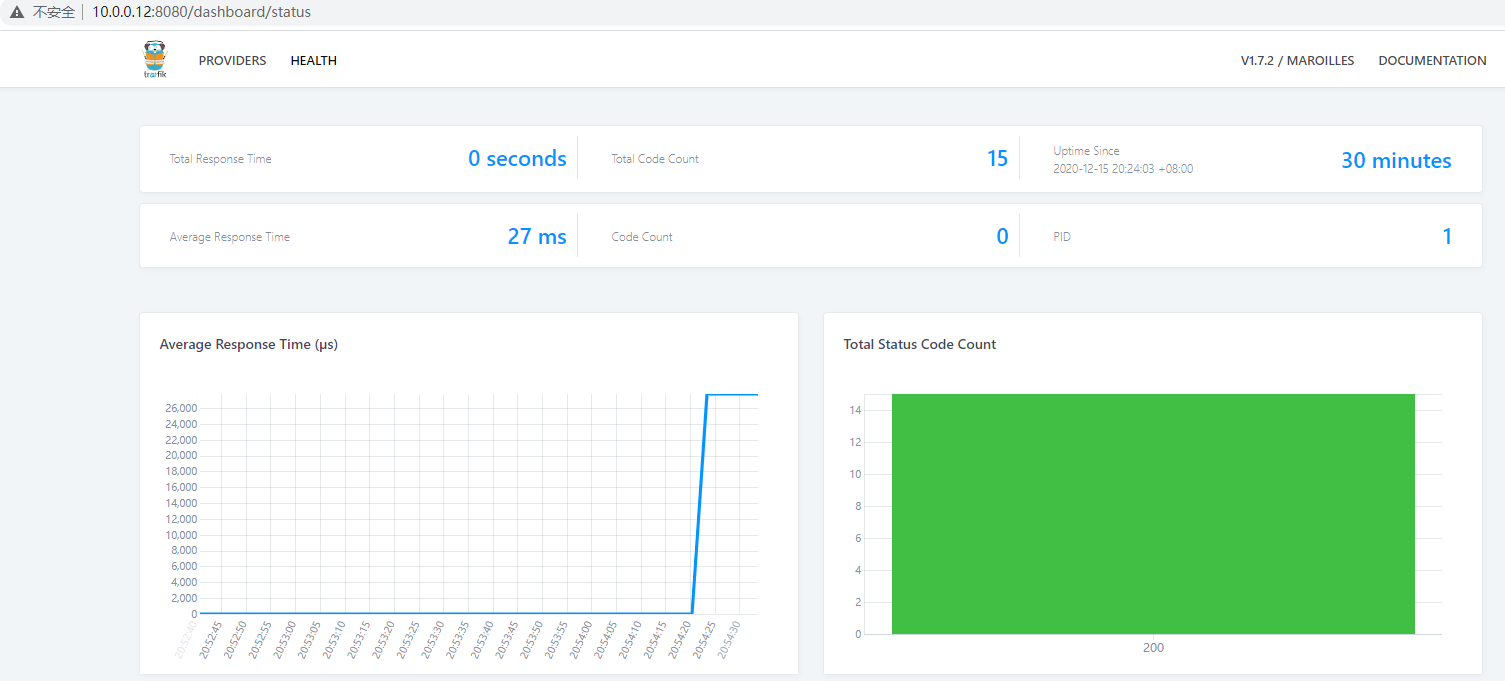

- Open the traefik dashboard at http://10.0.0.12:8080 in a browser; there are no servers yet.

Create the Ingress resource

- Look up the NAME and PORT of the Service to proxy

[root@k8s-master ingress]# kubectl get svc -n tomcat

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mysql ClusterIP 10.254.71.221 <none> 3306/TCP 4h2m

myweb NodePort 10.254.130.141 <none> 8080:30008/TCP 8h

- Write the Ingress YAML file

cat > /root/k8s_yaml/ingress/ingress.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-myweb
  namespace: tomcat
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: tomcat.oldqiang.com
    http:
      paths:
      - backend:
          serviceName: myweb
          servicePort: 8080
EOF

- Create the resource

kubectl create -f ingress.yaml

- Check the resource

kubectl get ingress -n tomcat

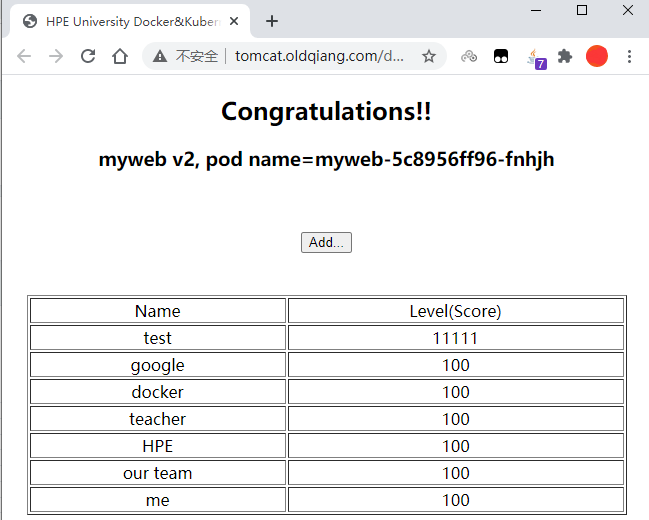
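When several Services need the same kind of host rule, the ingress.yaml above can be turned into a small template.

The sketch below assumes a POSIX shell. `render_ingress` is a hypothetical helper name, and the manifest keeps the same extensions/v1beta1 fields and traefik annotation as the file above.

```shell
#!/bin/sh
# Sketch: print an Ingress manifest shaped like ingress.yaml for any
# name/namespace/host/Service/port. render_ingress is a hypothetical helper.
render_ingress() {
  name="$1"; namespace="$2"; host="$3"; svc="$4"; port="$5"
  cat <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ${name}
  namespace: ${namespace}
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: ${host}
    http:
      paths:
      - backend:
          serviceName: ${svc}
          servicePort: ${port}
EOF
}

# Reproduces the traefik-myweb Ingress used in this section
render_ingress traefik-myweb tomcat tomcat.oldqiang.com myweb 8080
```

The output can be sent straight to the cluster with `kubectl create -f -`. For example: `render_ingress traefik-myweb tomcat tomcat.oldqiang.com myweb 8080 | kubectl create -f -`.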

Test access

- On Windows, add the line 10.0.0.12 tomcat.oldqiang.com to the file C:\Windows\System32\drivers\etc\hosts

- Open tomcat directly in a browser: http://tomcat.oldqiang.com/demo/

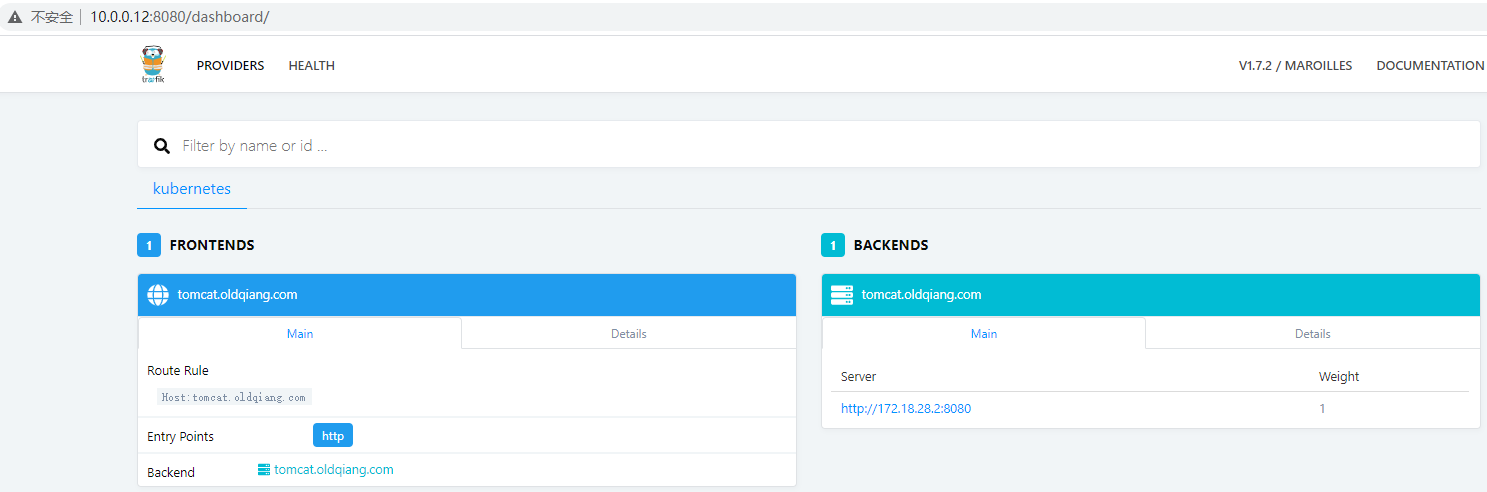

- Open http://10.0.0.12:8080 in the browser again. The BACKENDS section now lists a server.

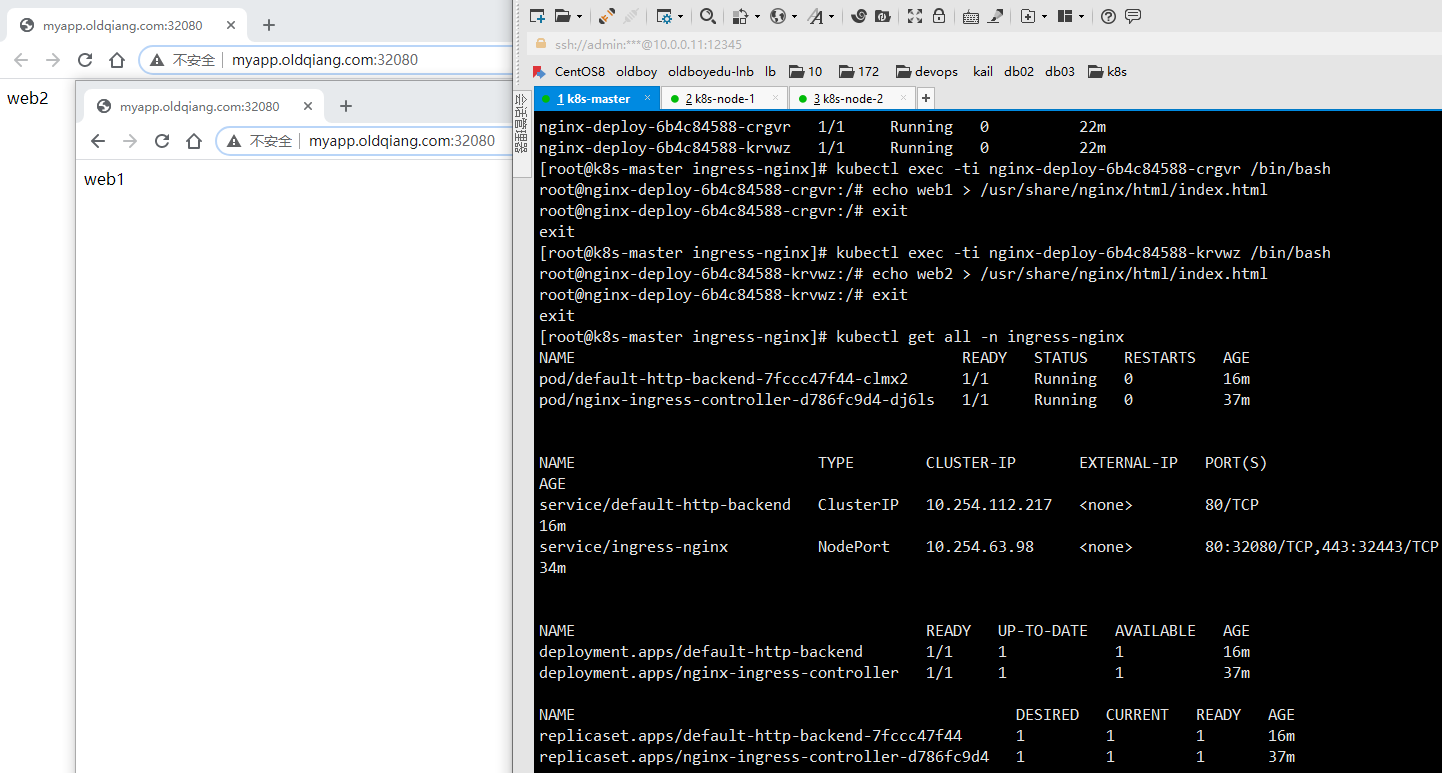
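The Windows hosts-file step has an equivalent on a Linux or macOS client.

The sketch below assumes a POSIX shell. `add_hosts_entry` and the `HOSTS_FILE` override are hypothetical names, and writing the real /etc/hosts requires root.

```shell
#!/bin/sh
# Sketch: append "IP hostname" to a hosts file unless that hostname is already
# mapped. add_hosts_entry and HOSTS_FILE are hypothetical names.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"   # editing /etc/hosts requires root

add_hosts_entry() {
  ip="$1"
  host="$2"
  # Escape dots so the hostname is matched literally in the regex
  pattern=$(printf '%s' "$host" | sed 's/\./\\./g')
  # Match the hostname as a whole field, ignoring anything after a '#'
  if grep -Eq "^[^#]*[[:space:]]${pattern}([[:space:]]|\$)" "$HOSTS_FILE"; then
    echo "exists: $host"
  else
    printf '%s %s\n' "$ip" "$host" >> "$HOSTS_FILE"
    echo "added: $ip $host"
  fi
}
```

With the function defined in a root shell, `add_hosts_entry 10.0.0.12 tomcat.oldqiang.com` adds the mapping once, and running it again leaves the file unchanged.

To check the route without editing any hosts file, run `curl -H 'Host: tomcat.oldqiang.com' http://10.0.0.12/demo/`. This sends the same Host header that traefik matches on.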

Layer-7 load balancing (ingress-nginx)

The basic YAML manifests:

- Namespace

- ConfigMap

- RBAC

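- Service: adds a NodePort

- Deployment: the default 404 backend, switched to a mirror image on Alibaba Cloud's China registry

- Deployment: ingress-controller, switched to a mirror image on Alibaba Cloud's China registry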
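These manifests have to be created in dependency order. The ingress-nginx Namespace must exist before the ConfigMap, Deployments and Services that live in it.

kubectl processes a directory's files in lexical order, so `kubectl create -f .` would likely reach configmap.yaml before namespace.yaml. An explicit, ordered loop avoids that.

In the sketch below, `create_in_order` and the `KUBECTL` override are hypothetical conveniences, not kubectl features.

```shell
#!/bin/sh
# Sketch: run "kubectl create -f" on each file in the order given and stop
# at the first failure. create_in_order is a hypothetical helper; setting
# KUBECTL=echo previews the commands without contacting a cluster.
KUBECTL="${KUBECTL:-kubectl}"

create_in_order() {
  for f in "$@"; do
    # Earlier files (e.g. the Namespace) must succeed before later ones run
    $KUBECTL create -f "$f" || { echo "failed on $f" >&2; return 1; }
  done
}
```

Typical use: `create_in_order namespace.yaml configmap.yaml default-backend.yaml rbac.yaml`, followed by the Service and ingress-controller Deployment manifests.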

- Prepare the configuration files

mkdir /root/k8s_yaml/ingress-nginx && cd /root/k8s_yaml/ingress-nginx

# Create the ingress-nginx namespace
cat > /root/k8s_yaml/ingress-nginx/namespace.yaml <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
EOF

# Create the ConfigMap
cat > /root/k8s_yaml/ingress-nginx/configmap.yaml <<EOF
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
EOF

# Requests for a host name that matches no rule are forwarded by default to the default-http-backend Service, which simply returns 404:
cat > /root/k8s_yaml/ingress-nginx/default-backend.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: default-http-backend
  labels:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx
  namespace: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: default-http-backend
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: default-http-backend
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: default-http-backend
        # Any image is permissible as long as:
        # 1. It serves a 404 page at /
        # 2. It serves 200 on a /healthz endpoint
        # Use a mirror image on Alibaba Cloud's China registry instead
        image: registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi
---
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx
spec:
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx
EOF

# Create the RBAC authorization for the Ingress controller, including:
# ServiceAccount, ClusterRole, Role, RoleBinding, ClusterRoleBinding
cat > /root/k8s_yaml/ingress-nginx/rbac.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames: