Scraping a site's magnet links with Scrapy (only 85 pages can be fetched; break that limit if you can)

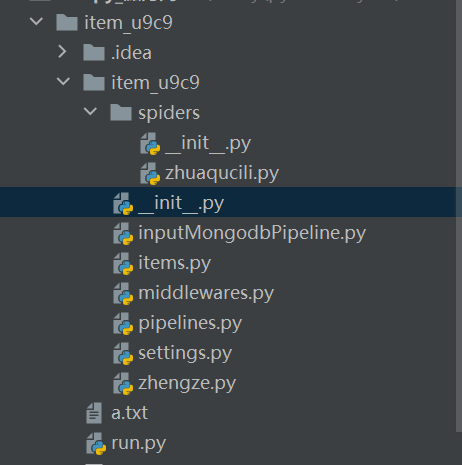

This is the directory layout. First create the project item_u9c9 (`scrapy startproject item_u9c9`).

Inside the spiders directory, create zhuaqucili.py with the following code:

```python
"""Spider for the Video listing pages of u9c9.com."""
import scrapy

from item_u9c9.items import ItemU9C9Item


class item_u9c9(scrapy.Spider):
    name = 'cili'
    start_urls = ['https://u9c9.com/?type=Video&p=1']
    # Listing URL without the page number; 'p=<n>' is appended to it
    list_prefix = 'https://u9c9.com/?type=Video&'
    # Highest page number the original code requests (per the title,
    # only the first 85 pages actually return results)
    max_pages = 2007

    def parse(self, response):
        # Page 1 has already been downloaded, so extract its rows directly
        yield from self.fenlei(response)
        # Schedule the remaining pages once. The original re-yielded every
        # page URL from every page and relied on the dupe filter to drop them
        for page in range(2, self.max_pages + 1):
            url = self.list_prefix + 'p=' + str(page)
            yield scrapy.Request(url, callback=self.fenlei)

    def fenlei(self, response):
        rows = "//tr[@class='default']"
        IP = response.xpath(rows + "/td[2]/a//@title").extract()      # title
        port = response.xpath(rows + "/td[3]/a[2]//@href").extract()  # magnet link
        daxiao = response.xpath(rows + "/td[4]//text()").extract()    # size
        dates = response.xpath(rows + "/td[5]//text()").extract()     # date

        # Build a new item per row: reusing one item object lets later rows
        # overwrite earlier ones before the pipelines process them
        for title, magnet, size, date in zip(IP, port, daxiao, dates):
            item = ItemU9C9Item()
            item['IP'] = title
            item['port'] = magnet
            item['daxiao'] = size
            item['time'] = date
            yield item

            # Optional, "use it if you can" (needs hashlib and
            # self.conn = Redis(host='127.0.0.1', port=6379)): fingerprint the
            # item and yield it only when SADD returns 1, i.e. it is new.
            # source = item['IP'] + item['port'] + item['daxiao'] + item['time']
            # hashValue = hashlib.sha256(source.encode()).hexdigest()
            # if self.conn.sadd('hashValue', hashValue) == 1: yield item
            # else: print('no new data')
```
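
The disabled Redis block fingerprints each item with SHA-256 and skips items whose fingerprint is already in a Redis set. Below is a minimal sketch of the same idea without Redis. It assumes an in-memory set is enough for a single crawl, and `item_fingerprint` and `SeenItems` are hypothetical names. One design change is worth noting. The original concatenates the fields with no separator, so ("ab", "c") and ("a", "bc") would collide. Joining with a separator character avoids that.

```python
import hashlib


def item_fingerprint(item, fields=("IP", "port", "daxiao", "time")):
    """Return a SHA-256 hex digest over the chosen fields of a dict-like item."""
    # The unit-separator character keeps ("ab", "c") and ("a", "bc") distinct
    source = "\x1f".join(str(item.get(f, "")) for f in fields)
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


class SeenItems:
    """In-memory stand-in for the Redis set used in the spider's comment."""

    def __init__(self):
        self._seen = set()

    def add(self, item):
        """Return True if the item is new (like Redis SADD returning 1)."""
        fp = item_fingerprint(item)
        if fp in self._seen:
            return False
        self._seen.add(fp)
        return True


seen = SeenItems()
row = {"IP": "title", "port": "magnet:?xt=urn:btih:0000", "daxiao": "1 GB", "time": "2021-01-01"}
print(seen.add(row))  # True
print(seen.add(row))  # False
```

In a Scrapy pipeline you would call `seen.add(item)` in `process_item` and raise `scrapy.exceptions.DropItem` when it returns False.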

To store the results in MongoDB, create inputMongodbPipeline.py in the item_u9c9 package, next to pipelines.py:

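```python
"""
Purpose: demonstrates storing Scrapy items in MongoDB.
Environment (original tutorial): Win7 64-bit + Python 3.6 + Scrapy 1.4 + MongoDB 3.4 + pymongo 3.6.0
How to run: from the InputMongodb directory (the one containing scrapy.cfg), run
    scrapy crawl IntoMongodbSpider
(for this project the spider is named 'cili': scrapy crawl cili)
Details: http://www.scrapyd.cn/jiaocheng/171.html
Note: make sure MongoDB is installed and running before you start.
Created: 2018-01-31 12:08:02
Author: Scrapy Chinese site (http://www.scrapyd.cn)
"""
import pymongo


class InputmongodbPipeline(object):
    def open_spider(self, spider):
        # Connect to the local MongoDB server
        self.client = pymongo.MongoClient('127.0.0.1', 27017)
        # Database name: ScrapyChina
        db = self.client['ScrapyChina']
        # Collection (roughly, a table) named '磁力' ("magnet")
        self.post = db['磁力']

    def close_spider(self, spider):
        self.client.close()

    def process_item(self, item, spider):
        postItem = dict(item)  # convert the item to a plain dict
        # insert() was removed in pymongo 4; insert_one() works in 3.x and 4.x
        self.post.insert_one(postItem)
        return item  # pass the item on (it also shows up in the console log)
```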
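
Inserting every item means a second crawl stores every record again. One alternative is an upsert. This sketch assumes the magnet link in `port` uniquely identifies a record. It builds a filter/update pair for pymongo's `update_one(filter, update, upsert=True)`. `build_upsert` is a hypothetical helper. It is kept free of any database call so it can be checked without a running MongoDB.

```python
def build_upsert(item, key="port"):
    """Return (filter, update) for collection.update_one(..., upsert=True)."""
    doc = dict(item)  # works for scrapy.Item objects and plain dicts
    filter_doc = {key: doc[key]}
    # $set overwrites the stored fields with the latest scraped values
    update_doc = {"$set": doc}
    return filter_doc, update_doc


item = {"IP": "title", "port": "magnet:?xt=urn:btih:0000", "daxiao": "1 GB", "time": "2021-01-01"}
flt, upd = build_upsert(item)
print(flt)  # {'port': 'magnet:?xt=urn:btih:0000'}
# Inside process_item: self.post.update_one(flt, upd, upsert=True)
```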

If you don't want to write to a database, modify pipelines.py instead so that items are appended to a text file:

```python
# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html


# useful for handling different item types with a single interface
from itemadapter import ItemAdapter


class ItemU9C9Pipeline:
    def process_item(self, item, spider):
        fileName = 'video.txt'
        # Append mode; `with` closes the file even if a write fails
        with open(fileName, 'a+', encoding='utf-8') as f:
            # Each field on its own line, followed by a comma (original format)
            for field in ('IP', 'port', 'daxiao', 'time'):
                f.write(item[field] + ',')
                f.write('\n')
        return item
```
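
The text pipeline writes each field on its own line with a trailing comma, so one record spans four lines. If you prefer one record per line, Python's `csv` module can do it, and it also quotes titles that contain commas. This is a sketch that assumes the four fields of `ItemU9C9Item`. `write_row` is a hypothetical helper that takes any text stream, so it can be tried with `io.StringIO`.

```python
import csv
import io

FIELDS = ["IP", "port", "daxiao", "time"]


def write_row(stream, item):
    """Append one item as a single CSV line (missing fields become '')."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writerow({f: item.get(f, "") for f in FIELDS})


buf = io.StringIO()
write_row(buf, {"IP": "a, b", "port": "magnet:?xt=urn:btih:0000", "daxiao": "1 GB", "time": "2021-01-01"})
print(buf.getvalue())
```

In the pipeline, open the file with `open('video.csv', 'a', newline='', encoding='utf-8')` and pass it to `write_row`. `newline=''` is what the `csv` docs recommend for files handed to a writer.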

Next, to give each request a random User-Agent header and a random proxy IP, add the following code to middlewares.py:

```python
import random


class NovelUserAgentMiddleWare(object):  # random User-Agent
    def __init__(self):
        self.user_agent_list = [
            # A comma was missing after the first string, which silently
            # merged the first two User-Agents into one
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
            "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
            "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        ]

    def process_request(self, request, spider):
        ua = random.choice(self.user_agent_list)
        print('User-Agent:' + ua)
        # Assign instead of setdefault(): the built-in UserAgentMiddleware
        # (priority 500) has already set a default User-Agent, so
        # setdefault() at priority 544 would change nothing
        request.headers['User-Agent'] = ua


# Also add this class to middlewares.py
class NovelProxyMiddleWare(object):  # random proxy IP
    def process_request(self, request, spider):
        proxy = self.get_random_proxy()
        print("Request proxy is {}".format(proxy))
        request.meta["proxy"] = "http://" + proxy

    def get_random_proxy(self):
        # Requires a.txt in the working directory, one ip:port per line;
        # blank lines are skipped so they cannot produce "http://"
        with open('a.txt', 'r', encoding="utf-8") as f:
            proxies = [line.strip() for line in f if line.strip()]
        return random.choice(proxies)
```
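
`get_random_proxy` reads `a.txt` again on every request. Unless blank lines are filtered out, a trailing blank line in that file can also produce the invalid proxy `http://`. This sketch parses the file contents once instead. `parse_proxy_list` is a hypothetical helper. It assumes one `ip:port` per line, with optional `#` comment lines and an optional explicit scheme.

```python
import random


def parse_proxy_list(text):
    """Turn 'ip:port' lines into proxy URLs, skipping blanks and # comments."""
    proxies = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Keep an explicit scheme if the line already has one
        proxies.append(line if "://" in line else "http://" + line)
    return proxies


proxies = parse_proxy_list("1.2.3.4:8080\n\n# dead\nhttp://5.6.7.8:3128\n")
print(proxies)  # ['http://1.2.3.4:8080', 'http://5.6.7.8:3128']
chosen = random.choice(proxies)
```

A middleware could call this once in `__init__` and then set `request.meta["proxy"] = random.choice(self.proxies)`. Do not prepend `http://` a second time.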

After adding the code above, register both middlewares in settings.py:

```python
DOWNLOADER_MIDDLEWARES = {
    'item_u9c9.middlewares.NovelUserAgentMiddleWare': 544,  # random User-Agent
    'item_u9c9.middlewares.NovelProxyMiddleWare': 543,      # random proxy IP; use your own project's module path
}
```
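
Downloader middlewares run `process_request` in ascending order of these numbers. Scrapy's built-in `UserAgentMiddleware` sits at 500 and fills `User-Agent` with `setdefault`. A custom middleware at 544 that also calls `setdefault` therefore leaves the default header in place. That is why the random-UA middleware should assign the header directly. The toy simulation below only mimics that ordering with plain dicts. It is not Scrapy code, the function names are hypothetical, and the default UA string is a placeholder.

```python
def builtin_user_agent(headers):
    # Mimics Scrapy's UserAgentMiddleware (priority 500); placeholder UA
    headers.setdefault("User-Agent", "Scrapy/x.y (+https://scrapy.org)")


def random_ua_setdefault(headers):
    headers.setdefault("User-Agent", "Mozilla/5.0 (random)")


def random_ua_assign(headers):
    headers["User-Agent"] = "Mozilla/5.0 (random)"


def run_chain(chain):
    """Call process_request-like functions in ascending priority order."""
    headers = {}
    for _, func in sorted(chain, key=lambda pair: pair[0]):
        func(headers)
    return headers["User-Agent"]


print(run_chain([(544, random_ua_setdefault), (500, builtin_user_agent)]))
# Scrapy/x.y (+https://scrapy.org)
print(run_chain([(544, random_ua_assign), (500, builtin_user_agent)]))
# Mozilla/5.0 (random)
```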

Replace the contents of items.py with:

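```python
import scrapy


class ItemU9C9Item(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    IP = scrapy.Field()      # title (column 2, link title)
    port = scrapy.Field()    # magnet link (column 3, second link)
    daxiao = scrapy.Field()  # size (column 4)
    time = scrapy.Field()    # date (column 5)
```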
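
Recent Scrapy versions (2.2 and later, through the `itemadapter` package) also accept dataclass objects as items. Here is a sketch of an equivalent item, assuming you prefer typed fields with defaults. The class name `U9C9Record` is hypothetical, and the field names are kept unchanged.

```python
from dataclasses import asdict, dataclass


@dataclass
class U9C9Record:
    IP: str = ""      # title
    port: str = ""    # magnet link
    daxiao: str = ""  # size
    time: str = ""    # date


rec = U9C9Record(IP="title", port="magnet:?xt=urn:btih:0000", daxiao="1 GB", time="2021-01-01")
print(asdict(rec))
```

A spider would then `yield U9C9Record(...)`. Dataclass instances do not support `item['IP']` or `dict(item)`, so pipelines should wrap them with `ItemAdapter(item)`, for example `ItemAdapter(item).asdict()`.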

Finally, configure settings.py:

```python
BOT_NAME = 'item_u9c9'

SPIDER_MODULES = ['item_u9c9.spiders']
NEWSPIDER_MODULE = 'item_u9c9.spiders'

#DOWNLOAD_DELAY = 0
CONCURRENT_REQUESTS = 100
CONCURRENT_REQUESTS_PER_DOMAIN = 100
CONCURRENT_REQUESTS_PER_IP = 100
COOKIES_ENABLED = False

RETRY_ENABLED = True                # enable retries
RETRY_TIMES = 3                     # number of retries
DOWNLOAD_TIMEOUT = 3                # timeout in seconds
RETRY_HTTP_CODES = [429, 404, 403]  # status codes that trigger a retry

###### scrapy-redis settings (disabled) ######
#SCHEDULER = "scrapy_redis.scheduler.Scheduler"
# Ensure all spiders share same duplicates filter through redis.
#DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
# Specify the full Redis URL for connecting (optional).
# If set, this takes precedence over the REDIS_HOST and REDIS_PORT settings.
#REDIS_URL = 'redis://:123123@192.168.229.128:8889'
# Don't cleanup redis queues, allows to pause/resume crawls.
#SCHEDULER_PERSIST = True
###### scrapy-redis settings end ######

#LOG_LEVEL = 'ERROR'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'item_u9c9 (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False  # ignore robots.txt

# Random proxy IP and random User-Agent middlewares
DOWNLOADER_MIDDLEWARES = {
    'item_u9c9.middlewares.NovelUserAgentMiddleWare': 544,  # random User-Agent
    'item_u9c9.middlewares.NovelProxyMiddleWare': 543,      # random proxy IP; use your own project's module path
}

# Configure item pipelines
# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    # Writes to MongoDB. To write a text file instead, change this to
    # 'item_u9c9.pipelines.ItemU9C9Pipeline'
    'item_u9c9.inputMongodbPipeline.InputmongodbPipeline': 1,
}
```
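
With these settings, a URL that keeps timing out is attempted 1 + `RETRY_TIMES` times, and each attempt is bounded by `DOWNLOAD_TIMEOUT`. Because 404 is in `RETRY_HTTP_CODES`, pages that do not exist are retried too. The hypothetical helper below is a back-of-the-envelope estimate of the worst-case download time one URL can consume. It ignores download delays and time spent waiting in the scheduler queue.

```python
def worst_case_seconds(retry_times, download_timeout):
    """Upper bound on download time for a URL that times out on every attempt."""
    attempts = 1 + retry_times  # the first try plus each retry
    return attempts * download_timeout


print(worst_case_seconds(3, 3))  # 12
```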


浙公网安备 33010602011771号
