Scrapy + Pyppeteer: keyword-search crawling of dynamically rendered Toutiao pages

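I. Introduction

Toutiao now renders its pages dynamically with JavaScript as an anti-scraping measure and adds several kinds of JS-based encryption on top, so scraping it with plain requests is very difficult.

Puppeteer is a tool developed by Google on top of Node.js. It lets us control the Chrome browser from JavaScript, and it can also be used for web scraping; its API is very complete and powerful. Pyppeteer is a Python implementation of Puppeteer. It is not developed by Google: it is an unofficial port written by an engineer from Japan, based on Puppeteer's features.

For detailed usage, see the official documentation: https://miyakogi.github.io/pyppeteer/reference.html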

II. Basic usage

    import asyncio

    from pyppeteer import launch


    async def main():
        # browser = await launch()
        browser = await launch(headless=True)
        page = await browser.newPage()
        await page.setViewport(viewport={'width': 1366, 'height': 768})
        # await page.setUserAgent('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3494.0 Safari/537.36')
        await page.goto('https://www.toutiao.com')
        # Enable JavaScript
        await page.setJavaScriptEnabled(enabled=True)
        await page.evaluate(
            '''() =>{ Object.defineProperties(navigator,{ webdriver:{ get: () => false } }) }''')
        # Inject JS that overrides the values a site's detection scripts
        # read to recognise an automated browser.
        await page.evaluate('''() =>{ window.navigator.chrome = { runtime: {}, }; }''')
        await page.evaluate(
            '''() =>{ Object.defineProperty(navigator, 'languages', { get: () => ['en-US', 'en'] }); }''')
        await page.evaluate(
            '''() =>{ Object.defineProperty(navigator, 'plugins', { get: () => [1, 2, 3, 4, 5,6], }); }''')
        await page.goto('https://www.toutiao.com/search/?keyword=%E5%B0%8F%E7%B1%B310')
        # Print the page's cookies
        print(await page.cookies())
        await asyncio.sleep(5)
        # Print the page's HTML
        print(await page.content())
        # Print the current page's title
        print(await page.title())
        # Save the rendered HTML to disk
        with open('toutiao.html', 'w', encoding='utf-8') as f:
            f.write(await page.content())
        await browser.close()


    asyncio.get_event_loop().run_until_complete(main())
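
One caveat about the `page.evaluate` calls above: they run only in the document that is currently loaded, so the overridden `navigator` properties are gone once the page navigates to a new URL. A minimal sketch of an alternative, assuming Pyppeteer's `page.evaluateOnNewDocument`, which registers a script to run before any page script on every new document. The names `STEALTH_JS` and `apply_stealth` are hypothetical helpers introduced here, not part of the original project.

```python
import asyncio

# One function that applies the same four overrides as the example above.
STEALTH_JS = """() => {
    Object.defineProperty(navigator, 'webdriver', { get: () => false });
    window.navigator.chrome = { runtime: {} };
    Object.defineProperty(navigator, 'languages', { get: () => ['en-US', 'en'] });
    Object.defineProperty(navigator, 'plugins', { get: () => [1, 2, 3, 4, 5, 6] });
}"""


async def apply_stealth(page):
    """Register the overrides so they are re-applied on every navigation.

    `page` is expected to be a pyppeteer Page (assumption); call this once,
    right after browser.newPage() and before the first page.goto().
    """
    await page.evaluateOnNewDocument(STEALTH_JS)
```

Usage would look like `page = await browser.newPage()`, then `await apply_stealth(page)`, then `await page.goto(url)`. Whether these overrides are enough to pass a given site's checks is not something this sketch can promise.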

III. Making multiple requests

    import asyncio

    from pyppeteer import launch


    async def main(url):
        browser = await launch(headless=True)
        page = await browser.newPage()
        await page.setViewport(viewport={'width': 1366, 'height': 768})
        await page.setUserAgent(
            'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3494.0 Safari/537.36')
        # Enable JavaScript
        await page.setJavaScriptEnabled(enabled=True)
        await page.evaluate(
            '''() =>{ Object.defineProperties(navigator,{ webdriver:{ get: () => false } }) }''')
        # Inject JS that overrides the values a site's detection scripts
        # read to recognise an automated browser.
        await page.evaluate('''() =>{ window.navigator.chrome = { runtime: {}, }; }''')
        await page.evaluate(
            '''() =>{ Object.defineProperty(navigator, 'languages', { get: () => ['en-US', 'en'] }); }''')
        await page.evaluate(
            '''() =>{ Object.defineProperty(navigator, 'plugins', { get: () => [1, 2, 3, 4, 5,6], }); }''')
        await page.goto(url, options={'timeout': 5000})
        # await asyncio.sleep(5)
        # Grab the rendered HTML, then close the browser so it is not leaked
        content = await page.content()
        await browser.close()
        return content


    tlist = [
        "https://www.toutiao.com/a6794863795366789636/",
        "https://www.toutiao.com/a6791790405059871236/",
        "https://www.toutiao.com/a6792756350095983104/",
        "https://www.toutiao.com/a6792852490845946376/",
        "https://www.toutiao.com/a6795883286729064964/",
    ]

    # One coroutine per URL, all run concurrently
    task = [main(url) for url in tlist]
    loop = asyncio.get_event_loop()
    results = loop.run_until_complete(asyncio.gather(*task))
    for res in results:
        print(res)
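
The code above starts one headless browser per URL, all at the same time, which gets heavy as the list grows. A minimal sketch of capping the concurrency with `asyncio.Semaphore`, using only the standard library. `fetch_all` and its `limit` parameter are hypothetical names; `fetch` stands for any coroutine function that takes a URL, such as `main` above.

```python
import asyncio


async def fetch_all(urls, fetch, limit=2):
    """Run fetch(url) for every URL, at most `limit` at a time.

    Results come back in the same order as `urls`, as with asyncio.gather.
    """
    semaphore = asyncio.Semaphore(limit)

    async def bounded(url):
        # Wait for a free slot before starting the real fetch
        async with semaphore:
            return await fetch(url)

    return await asyncio.gather(*(bounded(url) for url in urls))
```

With the example above this would be called as `loop.run_until_complete(fetch_all(tlist, main, limit=2))`. The value 2 is only an illustration; a sensible limit depends on the machine's memory and CPU.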

IV. Scrapy + Pyppeteer

GitHub repository: https://github.com/fengfumin/Toutiao_pyppeteer

Main features:

1. Specify the search keyword

    from scrapy.cmdline import execute

    execute(['scrapy', 'crawl', 'toutiao', '-a', 'keyword=小米10'])

2. Scrape the page content and save it to a CSV file
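
The keyword passed with `-a` ends up in the search URL, where non-ASCII characters are percent-encoded as UTF-8. A small sketch of that encoding step with the standard library; `build_search_url` is a hypothetical helper, and the URL template is the one used by the spider in this post.

```python
from urllib.parse import quote

SEARCH_URL = 'https://www.toutiao.com/search/?keyword={keyword}'


def build_search_url(keyword):
    """Percent-encode the keyword (UTF-8) and insert it into the search URL."""
    return SEARCH_URL.format(keyword=quote(keyword))


print(build_search_url('小米10'))
# prints https://www.toutiao.com/search/?keyword=%E5%B0%8F%E7%B1%B310
```

The result matches the hard-coded search URL used in the basic example earlier.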

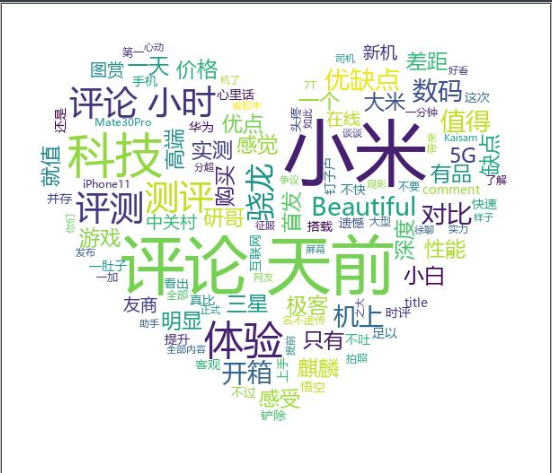

3. Generate a word-cloud image

4. Main code: the Scrapy downloader middleware

    from scrapy import signals
    import pyppeteer
    import asyncio
    import os
    from scrapy.http import HtmlResponse

    pyppeteer.DEBUG = False


    class ToutiaoPyppeteerDownloaderMiddleware(object):
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the downloader middleware does not modify the
        # passed objects.

        def __init__(self):
            print("Init downloaderMiddleware use pyppeteer.")
            os.environ['PYPPETEER_CHROMIUM_REVISION'] = '588429'
            # pyppeteer.DEBUG = False
            print(os.environ.get('PYPPETEER_CHROMIUM_REVISION'))
            # Launch the browser once and reuse the same page for every request
            loop = asyncio.get_event_loop()
            loop.run_until_complete(self.getbrowser())
            print(self.browser)
            print(self.page)

        async def getbrowser(self):
            self.browser = await pyppeteer.launch(headless=True)
            self.page = await self.browser.newPage()
            await self.page.setViewport(viewport={'width': 1366, 'height': 768})
            await self.page.setUserAgent(
                'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3494.0 Safari/537.36')
            await self.page.setJavaScriptEnabled(enabled=True)
            await self.page.evaluate(
                '''() =>{ Object.defineProperties(navigator,{ webdriver:{ get: () => false } }) }''')
            # Inject JS that overrides the values a site's detection scripts
            # read to recognise an automated browser.
            await self.page.evaluate('''() =>{ window.navigator.chrome = { runtime: {}, }; }''')
            await self.page.evaluate(
                '''() =>{ Object.defineProperty(navigator, 'languages', { get: () => ['en-US', 'en'] }); }''')
            await self.page.evaluate(
                '''() =>{ Object.defineProperty(navigator, 'plugins', { get: () => [1, 2, 3, 4, 5,6], }); }''')
            return self.page

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create the middleware instance.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_request(self, request, spider):
            # Called for each request that goes through the downloader
            # middleware.
            # Must either:
            # - return None: continue processing this request
            # - or return a Response object
            # - or return a Request object
            # - or raise IgnoreRequest: process_exception() methods of
            #   installed downloader middleware will be called
            #
            # Here we render the page in the browser and return the HTML as
            # the response, so Scrapy's own downloader is skipped.
            loop = asyncio.get_event_loop()
            content = loop.run_until_complete(self.usePypuppeteer(request))
            return HtmlResponse(url=request.url, body=content,
                                encoding="utf-8", request=request)

        async def usePypuppeteer(self, request):
            print(request.url)
            # self.page = await self.getbrowser()
            await self.page.goto(request.url)
            await asyncio.sleep(3)
            # Scroll to the bottom of the page to trigger lazy loading
            await self.page.evaluate('window.scrollBy(0, document.body.scrollHeight)')
            await asyncio.sleep(3)
            content = await self.page.content()
            return content

        def process_response(self, request, response, spider):
            # Called with the response returned from the downloader.
            # Must either:
            # - return a Response object
            # - return a Request object
            # - or raise IgnoreRequest
            return response

        def process_exception(self, request, exception, spider):
            # Called when a download handler or a process_request()
            # (from other downloader middleware) raises an exception.
            # Must either:
            # - return None: continue processing this exception
            # - return a Response object: stops process_exception() chain
            # - return a Request object: stops process_exception() chain
            pass

        def spider_opened(self, spider):
            spider.logger.info('Spider opened: %s' % spider.name)
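
`process_request` blocks until the browser coroutine finishes, so a page that never finishes loading would stall the whole crawl. A minimal sketch of putting an upper bound on that wait with `asyncio.wait_for`; `run_with_timeout` is a hypothetical helper, not part of the original middleware.

```python
import asyncio


def run_with_timeout(loop, coro, timeout):
    """Block until `coro` finishes on `loop`.

    Raises asyncio.TimeoutError (and cancels the coroutine) if it takes
    longer than `timeout` seconds.
    """
    return loop.run_until_complete(asyncio.wait_for(coro, timeout))
```

In the middleware this could replace the plain `loop.run_until_complete(...)` call, for example `run_with_timeout(loop, self.usePypuppeteer(request), 30)`, where 30 seconds is an arbitrary illustrative value. The caller then decides what to do with `asyncio.TimeoutError`, such as dropping or retrying the request.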

5. Main code: requests and parsing

    import csv

    from scrapy import Spider, Request
    from bs4 import BeautifulSoup
    from Toutiao_pyppeteer.cloud_word import *


    class TaobaoSpider(Spider):
        name = 'toutiao'
        allowed_domains = ['www.toutiao.com']
        start_url = ['https://www.toutiao.com/',
                     'https://www.toutiao.com/search/?keyword={keyword}']

        def __init__(self, keyword, *args, **kwargs):
            super(TaobaoSpider, self).__init__(*args, **kwargs)
            # Search keyword passed on the command line with -a keyword=...
            self.keyword = keyword

        def open_csv(self):
            self.csvfile = open('train/data.csv', 'w', newline='', encoding='utf-8')
            fieldnames = ['title', 'comment']
            self.dict_writer = csv.DictWriter(self.csvfile, delimiter=',', fieldnames=fieldnames)
            self.dict_writer.writeheader()

        def close_csv(self):
            self.csvfile.close()

        def start_requests(self):
            for url in self.start_url:
                if 'search' in url:
                    r_url = url.format(keyword=self.keyword)
                else:
                    r_url = url
                yield Request(r_url, callback=self.parse_list)

        def parse_list(self, response):
            # Only parse pages that mention the search keyword
            # (the original hard-coded "小米10" here)
            if self.keyword in response.text:
                # The lxml parser tolerates malformed HTML
                soup = BeautifulSoup(response.text, 'lxml')
                div_list = soup.find_all('div', class_='articleCard')
                print(div_list)
                print(len(div_list))
                self.open_csv()
                for div in div_list:
                    title = div.find('span', class_='J_title')
                    con = div.find('div', class_='y-left')
                    # Write title and comment as one row; a missing
                    # element becomes an empty string instead of raising
                    self.dict_writer.writerow({
                        "title": title.text if title else '',
                        "comment": con.text if con else '',
                    })
                print("Closing CSV")
                self.close_csv()
                print("Starting word segmentation")
                text_segment()
                print("Generating the word cloud")
                chinese_jieba()
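
The CSV step can also be looked at in isolation. A minimal sketch, assuming each scraped article has already been reduced to a dict with optional `title` and `comment` keys; `write_articles` is a hypothetical helper and the sample data is made up for illustration.

```python
import csv
import io


def write_articles(articles, out):
    """Write one CSV row per article to the file-like object `out`."""
    writer = csv.DictWriter(out, fieldnames=['title', 'comment'])
    writer.writeheader()
    for article in articles:
        # Missing or None fields are written as empty strings
        writer.writerow({
            'title': article.get('title') or '',
            'comment': article.get('comment') or '',
        })


# Illustrative data only
buffer = io.StringIO()
write_articles([{'title': 'A', 'comment': 'B'}, {'title': 'C'}], buffer)
print(buffer.getvalue())
```

Taking a file-like object rather than a path keeps the function easy to try against `io.StringIO`; for a real file, open it with `newline=''` and `encoding='utf-8'` as the spider does.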
