Linux kernel vhost-net and virtio-net: a code walkthrough

Scenario

A QEMU/KVM virtual machine runs on the host. The guest's NIC type is virtio-net, and the virtio-net backend on the host is vhost-net.

Packets entering the VM: code analysis

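Start with how the vhost-net module registers itself. It relies on the kernel's misc device registration mechanism (misc_register), which anyone who has written Linux kernel code will already know well.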

    static struct miscdevice vhost_net_misc = {
        .minor = VHOST_NET_MINOR,
        .name = "vhost-net",
        .fops = &vhost_net_fops,
    };

    static int vhost_net_init(void)
    {
        if (experimental_zcopytx)
            vhost_net_enable_zcopy(VHOST_NET_VQ_TX);
        return misc_register(&vhost_net_misc);
    }
    module_init(vhost_net_init);

    static void vhost_net_exit(void)
    {
        misc_deregister(&vhost_net_misc);
    }
    module_exit(vhost_net_exit);

    MODULE_VERSION("0.0.1");
    MODULE_LICENSE("GPL v2");
    MODULE_AUTHOR("Michael S. Tsirkin");
    MODULE_DESCRIPTION("Host kernel accelerator for virtio net");
    MODULE_ALIAS_MISCDEV(VHOST_NET_MINOR);
    MODULE_ALIAS("devname:vhost-net");

Here vhost_net_fops defines the user-space interface that the character device supports. The character device is /dev/vhost-net.

    static const struct file_operations vhost_net_fops = {
        .owner          = THIS_MODULE,
        .release        = vhost_net_release,
        .unlocked_ioctl = vhost_net_ioctl,
    #ifdef CONFIG_COMPAT
        .compat_ioctl   = vhost_net_compat_ioctl,
    #endif
        .open           = vhost_net_open,
        .llseek         = noop_llseek,
    };
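To make the user-space side of this interface concrete, here is a minimal sketch of how a process might open the device and claim it. The helper name vhost_net_claim is hypothetical; open(), ioctl() and VHOST_SET_OWNER (from linux/vhost.h) are the real interfaces. It assumes a Linux host with kernel UAPI headers installed, and it is only an illustration, not how QEMU structures its own setup code.

```c
#include <errno.h>
#include <fcntl.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/vhost.h>

/*
 * Hypothetical helper: open a vhost character device and claim ownership.
 * open() is served by the driver's .open handler (vhost_net_open) and
 * ioctl() by its .unlocked_ioctl handler (vhost_net_ioctl).
 * Returns the file descriptor on success, or -1 with errno set.
 */
int vhost_net_claim(const char *path)
{
    int fd = open(path, O_RDWR);
    int saved_errno;

    if (fd < 0)
        return -1;

    /* Bind the device to the calling process. */
    if (ioctl(fd, VHOST_SET_OWNER) < 0) {
        saved_errno = errno;
        close(fd);              /* close() may overwrite errno */
        errno = saved_errno;
        return -1;
    }
    return fd;
}
```

A caller would typically use vhost_net_claim("/dev/vhost-net") and close the returned descriptor when done, which triggers the .release handler.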

When user space calls open() on the device, vhost_net_open runs. Its main job is to initialize the state behind the character device:

    static int vhost_net_open(struct inode *inode, struct file *f)
    {
        struct vhost_net *n = kmalloc(sizeof *n, GFP_KERNEL);
        struct vhost_dev *dev;
        struct vhost_virtqueue **vqs;
        int r, i;

        if (!n)
            return -ENOMEM;
        vqs = kmalloc(VHOST_NET_VQ_MAX * sizeof(*vqs), GFP_KERNEL);
        if (!vqs) {
            kfree(n);
            return -ENOMEM;
        }

        dev = &n->dev;
        vqs[VHOST_NET_VQ_TX] = &n->vqs[VHOST_NET_VQ_TX].vq;
        vqs[VHOST_NET_VQ_RX] = &n->vqs[VHOST_NET_VQ_RX].vq;
        n->vqs[VHOST_NET_VQ_TX].vq.handle_kick = handle_tx_kick;
        n->vqs[VHOST_NET_VQ_RX].vq.handle_kick = handle_rx_kick;
        for (i = 0; i < VHOST_NET_VQ_MAX; i++) {
            n->vqs[i].ubufs = NULL;
            n->vqs[i].ubuf_info = NULL;
            n->vqs[i].upend_idx = 0;
            n->vqs[i].done_idx = 0;
            n->vqs[i].vhost_hlen = 0;
            n->vqs[i].sock_hlen = 0;
        }
        r = vhost_dev_init(dev, vqs, VHOST_NET_VQ_MAX);
        if (r < 0) {
            kfree(n);
            kfree(vqs);
            return r;
        }

        vhost_poll_init(n->poll + VHOST_NET_VQ_TX, handle_tx_net, POLLOUT, dev);
        vhost_poll_init(n->poll + VHOST_NET_VQ_RX, handle_rx_net, POLLIN, dev);

        f->private_data = n;

        return 0;
    }

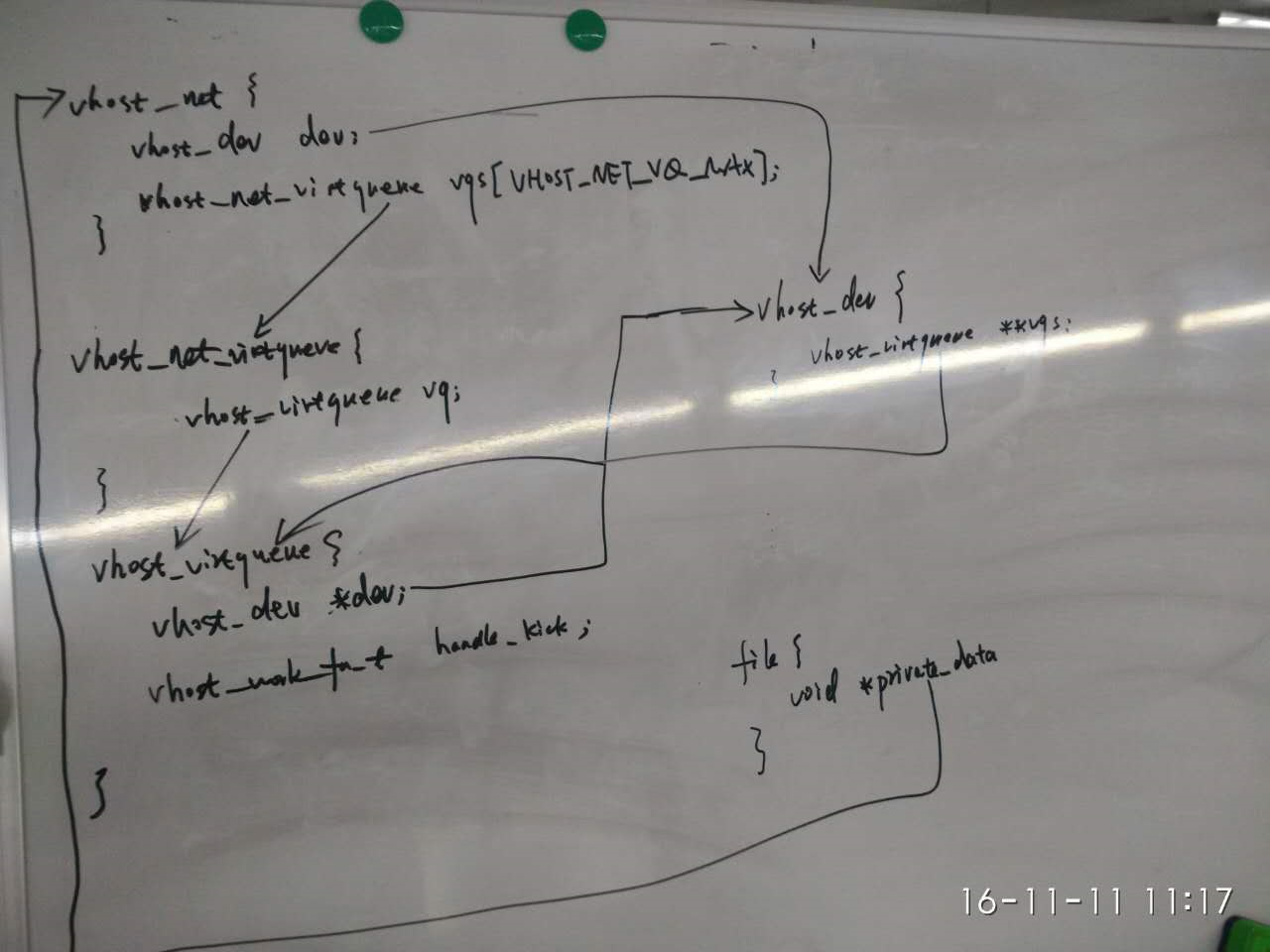

从上述代码中可以看出vhost-net模块的核心数据结果关系图如下
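The pointer wiring done in vhost_net_open can also be modelled in a few lines of user-space C. The sketch below is a toy: every toy_* name is made up for illustration, and the structures keep only the fields needed to show the relationships (a net-specific object embedding a generic device, per-queue wrappers embedding a generic queue, and a pointer table the generic device uses to reach the queues). The way toy_net_from_vq gets back to the outer object mirrors the container_of idiom commonly used in kernel code.

```c
#include <stddef.h>

enum { TOY_VQ_RX = 0, TOY_VQ_TX = 1, TOY_VQ_MAX = 2 };

/* Recover the enclosing structure from a pointer to one of its members. */
#define toy_container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

struct toy_dev;

struct toy_vq {                 /* stands in for struct vhost_virtqueue */
    struct toy_dev *dev;        /* back-pointer to the generic device */
    int kicks;
};

struct toy_dev {                /* stands in for struct vhost_dev */
    struct toy_vq **vqs;        /* table of pointers to the generic queues */
    int nvqs;
};

struct toy_net_vq {             /* stands in for struct vhost_net_virtqueue */
    struct toy_vq vq;           /* generic queue embedded in the net wrapper */
    size_t vhost_hlen;
    size_t sock_hlen;
};

struct toy_net {                /* stands in for struct vhost_net */
    struct toy_dev dev;
    struct toy_net_vq vqs[TOY_VQ_MAX];
};

/* Wire the generic device to the embedded queues, as vhost_net_open does. */
void toy_net_init(struct toy_net *n, struct toy_vq **table)
{
    int i;

    for (i = 0; i < TOY_VQ_MAX; i++) {
        n->vqs[i].vhost_hlen = 0;
        n->vqs[i].sock_hlen = 0;
        n->vqs[i].vq.dev = &n->dev;
        n->vqs[i].vq.kicks = 0;
        table[i] = &n->vqs[i].vq;
    }
    n->dev.vqs = table;
    n->dev.nvqs = TOY_VQ_MAX;
}

/* From a generic queue, climb back to the net-specific outer object. */
struct toy_net *toy_net_from_vq(struct toy_vq *vq)
{
    return toy_container_of(vq->dev, struct toy_net, dev);
}
```

The point of the design is that generic vhost code only ever sees toy_dev and toy_vq, while the net-specific handlers can always recover their own state from those generic pointers.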

To get packets from the tap device, the vhost-net module attaches to that device's tun socket:

    static long vhost_net_set_backend(struct vhost_net *n, unsigned index, int fd)
    {
        /* ... abridged excerpt: declarations, locking and checks omitted ... */
        sock = get_socket(fd);
        if (IS_ERR(sock)) {
            r = PTR_ERR(sock);
            goto err_vq;
        }
        /* ... */
        vq->private_data = sock;
        /* ... */
    }

The tun socket's send and receive operations are:

    static const struct proto_ops tun_socket_ops = {
        .sendmsg = tun_sendmsg,
        .recvmsg = tun_recvmsg,
        .release = tun_release,
    };

When the tap device has received a packet, vhost-net calls handle_rx:

    static void handle_rx(struct vhost_net *net)
    {
        struct vhost_net_virtqueue *nvq = &net->vqs[VHOST_NET_VQ_RX];
        struct vhost_virtqueue *vq = &nvq->vq;
        unsigned uninitialized_var(in), log;
        struct vhost_log *vq_log;
        struct msghdr msg = {
            .msg_name = NULL,
            .msg_namelen = 0,
            .msg_control = NULL, /* FIXME: get and handle RX aux data. */
            .msg_controllen = 0,
            .msg_iov = vq->iov,
            .msg_flags = MSG_DONTWAIT,
        };
        struct virtio_net_hdr_mrg_rxbuf hdr = {
            .hdr.flags = 0,
            .hdr.gso_type = VIRTIO_NET_HDR_GSO_NONE
        };
        size_t total_len = 0;
        int err, mergeable;
        s16 headcount;
        size_t vhost_hlen, sock_hlen;
        size_t vhost_len, sock_len;
        struct socket *sock;

        mutex_lock(&vq->mutex);
        sock = vq->private_data;
        if (!sock)
            goto out;
        vhost_disable_notify(&net->dev, vq);

        vhost_hlen = nvq->vhost_hlen;
        sock_hlen = nvq->sock_hlen;

        vq_log = unlikely(vhost_has_feature(vq, VHOST_F_LOG_ALL)) ?
            vq->log : NULL;
        mergeable = vhost_has_feature(vq, VIRTIO_NET_F_MRG_RXBUF);

        while ((sock_len = peek_head_len(sock->sk))) {
            sock_len += sock_hlen;
            vhost_len = sock_len + vhost_hlen;
            headcount = get_rx_bufs(vq, vq->heads, vhost_len,
                                    &in, vq_log, &log,
                                    likely(mergeable) ? UIO_MAXIOV : 1);
            /* On error, stop handling until the next kick. */
            if (unlikely(headcount < 0))
                break;
            /* On overrun, truncate and discard */
            if (unlikely(headcount > UIO_MAXIOV)) {
                msg.msg_iovlen = 1;
                err = sock->ops->recvmsg(NULL, sock, &msg,
                                         1, MSG_DONTWAIT | MSG_TRUNC);
                pr_debug("Discarded rx packet: len %zd\n", sock_len);
                continue;
            }
            /* OK, now we need to know about added descriptors. */
            if (!headcount) {
                if (unlikely(vhost_enable_notify(&net->dev, vq))) {
                    /* They have slipped one in as we were
                     * doing that: check again. */
                    vhost_disable_notify(&net->dev, vq);
                    continue;
                }
                /* Nothing new?  Wait for eventfd to tell us
                 * they refilled. */
                break;
            }
            /* We don't need to be notified again. */
            if (unlikely((vhost_hlen)))
                /* Skip header. TODO: support TSO. */
                move_iovec_hdr(vq->iov, nvq->hdr, vhost_hlen, in);
            else
                /* Copy the header for use in VIRTIO_NET_F_MRG_RXBUF:
                 * needed because recvmsg can modify msg_iov. */
                copy_iovec_hdr(vq->iov, nvq->hdr, sock_hlen, in);
            msg.msg_iovlen = in;
            err = sock->ops->recvmsg(NULL, sock, &msg,
                                     sock_len, MSG_DONTWAIT | MSG_TRUNC);
            /* Userspace might have consumed the packet meanwhile:
             * it's not supposed to do this usually, but might be hard
             * to prevent. Discard data we got (if any) and keep going. */
            if (unlikely(err != sock_len)) {
                pr_debug("Discarded rx packet: "
                         " len %d, expected %zd\n", err, sock_len);
                vhost_discard_vq_desc(vq, headcount);
                continue;
            }
            if (unlikely(vhost_hlen) &&
                memcpy_toiovecend(nvq->hdr, (unsigned char *)&hdr, 0,
                                  vhost_hlen)) {
                vq_err(vq, "Unable to write vnet_hdr at addr %p\n",
                       vq->iov->iov_base);
                break;
            }
            /* TODO: Should check and handle checksum. */

            hdr.num_buffers = cpu_to_vhost16(vq, headcount);
            if (likely(mergeable) &&
                memcpy_toiovecend(nvq->hdr, (void *)&hdr.num_buffers,
                                  offsetof(typeof(hdr), num_buffers),
                                  sizeof hdr.num_buffers)) {
                vq_err(vq, "Failed num_buffers write");
                vhost_discard_vq_desc(vq, headcount);
                break;
            }
            vhost_add_used_and_signal_n(&net->dev, vq, vq->heads,
                                        headcount);
            if (unlikely(vq_log))
                vhost_log_write(vq, vq_log, log, vhost_len);
            total_len += vhost_len;
            if (unlikely(total_len >= VHOST_NET_WEIGHT)) {
                vhost_poll_queue(&vq->poll);
                break;
            }
        }
    out:
        mutex_unlock(&vq->mutex);
    }

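As the code shows, sock->ops->recvmsg resolves to tun_recvmsg from tun_socket_ops, which copies the skb received by the tap device into the guest buffers described by the virtqueue. vhost_add_used_and_signal_n then marks those buffers as used and signals the guest; KVM delivers that signal as an interrupt, which wakes the virtio-net driver's receive function, virtnet_poll. Note that vhost-net and virtio-net share the same send and receive queues.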
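The idea of a queue shared between guest driver and host backend can be sketched with a deliberately simplified ring. Everything here is a toy under stated assumptions: the toy_* names are invented, the layout is not the real vring layout, there is no descriptor table, and there are no memory barriers or notifications, so it is only valid single-threaded. It shows the three steps the receive path relies on: the guest posts empty buffers, the host fills one and records it as used, and the guest collects used buffers.

```c
#include <stdint.h>
#include <string.h>

#define TOY_RING_SIZE 4         /* number of buffers, power of two */
#define TOY_BUF_SIZE  64

struct toy_used_elem {
    uint16_t id;                /* which buffer was filled */
    uint32_t len;               /* how many bytes were written */
};

struct toy_ring {
    uint8_t  buf[TOY_RING_SIZE][TOY_BUF_SIZE]; /* guest-owned buffers */
    uint16_t avail[TOY_RING_SIZE];  /* buffer ids posted by the guest */
    uint16_t avail_idx;             /* written by the guest */
    uint16_t last_avail;            /* host's private read position */
    struct toy_used_elem used[TOY_RING_SIZE];
    uint16_t used_idx;              /* written by the host */
    uint16_t last_used;             /* guest's private read position */
};

/* Guest side: offer an empty buffer to the host. Returns 0 or -1. */
int toy_guest_post(struct toy_ring *r, uint16_t id)
{
    if (id >= TOY_RING_SIZE)
        return -1;
    if ((uint16_t)(r->avail_idx - r->last_used) >= TOY_RING_SIZE)
        return -1;              /* every buffer is already outstanding */
    r->avail[r->avail_idx % TOY_RING_SIZE] = id;
    r->avail_idx++;
    return 0;
}

/* Host side: copy a packet into the next posted buffer and mark it used.
 * Returns the buffer id, or -1 if no buffer is posted or the packet is too big. */
int toy_host_rx(struct toy_ring *r, const void *pkt, uint32_t len)
{
    uint16_t id;

    if (r->last_avail == r->avail_idx || len > TOY_BUF_SIZE)
        return -1;
    id = r->avail[r->last_avail % TOY_RING_SIZE];
    r->last_avail++;
    memcpy(r->buf[id], pkt, len);
    r->used[r->used_idx % TOY_RING_SIZE].id = id;
    r->used[r->used_idx % TOY_RING_SIZE].len = len;
    r->used_idx++;
    return id;
}

/* Guest side: collect one filled buffer. Returns its id, or -1 if none. */
int toy_guest_poll(struct toy_ring *r, uint32_t *len)
{
    struct toy_used_elem e;

    if (r->last_used == r->used_idx)
        return -1;
    e = r->used[r->last_used % TOY_RING_SIZE];
    r->last_used++;
    *len = e.len;
    return e.id;
}
```

In this model toy_host_rx plays the role of the recvmsg-plus-add-used step in handle_rx, and toy_guest_poll plays the role of the buffer fetch loop in the guest driver.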


    static int virtnet_poll(struct napi_struct *napi, int budget)
    {
        struct receive_queue *rq =
            container_of(napi, struct receive_queue, napi);
        struct virtnet_info *vi = rq->vq->vdev->priv;
        void *buf;
        unsigned int r, len, received = 0;

    again:
        while (received < budget &&
               (buf = virtqueue_get_buf(rq->vq, &len)) != NULL) {
            receive_buf(vi, rq, buf, len);
            --rq->num;
            received++;
        }

        if (rq->num < rq->max / 2) {
            if (!try_fill_recv(vi, rq, GFP_ATOMIC))
                schedule_delayed_work(&vi->refill, 0);
        }

        /* Out of packets? */
        if (received < budget) {
            r = virtqueue_enable_cb_prepare(rq->vq);
            napi_complete(napi);
            if (unlikely(virtqueue_poll(rq->vq, r)) &&
                napi_schedule_prep(napi)) {
                virtqueue_disable_cb(rq->vq);
                __napi_schedule(napi);
                goto again;
            }
        }

        return received;
    }

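receive_buf, called from this function, hands the packet to netif_receive_skb, the standard receive entry point of the Linux network stack. At this point the packet has traveled from the tap device through vhost-net and has finally been delivered into the virtual machine.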

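Packets sent out of the VM

A packet sent by the virtual machine first goes through the guest's Linux network stack, and the stack's transmit path always ends by calling the NIC driver's xmit function. For a virtio-net NIC, that function is start_xmit: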

    static netdev_tx_t start_xmit(struct sk_buff *skb, struct net_device *dev)
    {
        struct virtnet_info *vi = netdev_priv(dev);
        int qnum = skb_get_queue_mapping(skb);
        struct send_queue *sq = &vi->sq[qnum];
        int err;
        struct netdev_queue *txq = netdev_get_tx_queue(dev, qnum);
        bool kick = !skb->xmit_more;

        /* Free up any pending old buffers before queueing new ones. */
        free_old_xmit_skbs(sq);

        /* Try to transmit */
        err = xmit_skb(sq, skb);

        /* This should not happen! */
        if (unlikely(err)) {
            dev->stats.tx_fifo_errors++;
            if (net_ratelimit())
                dev_warn(&dev->dev,
                         "Unexpected TXQ (%d) queue failure: %d\n", qnum, err);
            dev->stats.tx_dropped++;
            kfree_skb(skb);
            return NETDEV_TX_OK;
        }

        /* Don't wait up for transmitted skbs to be freed. */
        skb_orphan(skb);
        nf_reset(skb);

        /* Apparently nice girls don't return TX_BUSY; stop the queue
         * before it gets out of hand.  Naturally, this wastes entries. */
        if (sq->vq->num_free < 2+MAX_SKB_FRAGS) {
            netif_stop_subqueue(dev, qnum);
            if (unlikely(!virtqueue_enable_cb_delayed(sq->vq))) {
                /* More just got used, free them then recheck. */
                free_old_xmit_skbs(sq);
                if (sq->vq->num_free >= 2+MAX_SKB_FRAGS) {
                    netif_start_subqueue(dev, qnum);
                    virtqueue_disable_cb(sq->vq);
                }
            }
        }

        if (kick || netif_xmit_stopped(txq))
            virtqueue_kick(sq->vq);

        return NETDEV_TX_OK;
    }

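The code places the skb on the virtqueue and then calls virtqueue_kick to notify the host. The kick traps into KVM, which notifies vhost-net; the packet data itself stays in the shared virtqueue. vhost-net then calls handle_tx: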

    static void handle_tx(struct vhost_net *net)
    {
        struct vhost_net_virtqueue *nvq = &net->vqs[VHOST_NET_VQ_TX];
        struct vhost_virtqueue *vq = &nvq->vq;
        unsigned out, in, s;
        int head;
        struct msghdr msg = {
            .msg_name = NULL,
            .msg_namelen = 0,
            .msg_control = NULL,
            .msg_controllen = 0,
            .msg_iov = vq->iov,
            .msg_flags = MSG_DONTWAIT,
        };
        size_t len, total_len = 0;
        int err;
        size_t hdr_size;
        struct socket *sock;
        struct vhost_net_ubuf_ref *uninitialized_var(ubufs);
        bool zcopy, zcopy_used;

        mutex_lock(&vq->mutex);
        sock = vq->private_data;
        if (!sock)
            goto out;

        vhost_disable_notify(&net->dev, vq);

        hdr_size = nvq->vhost_hlen;
        zcopy = nvq->ubufs;

        for (;;) {
            /* Release DMAs done buffers first */
            if (zcopy)
                vhost_zerocopy_signal_used(net, vq);

            /* If more outstanding DMAs, queue the work.
             * Handle upend_idx wrap around
             */
            if (unlikely((nvq->upend_idx + vq->num - VHOST_MAX_PEND)
                          % UIO_MAXIOV == nvq->done_idx))
                break;

            head = vhost_get_vq_desc(vq, vq->iov,
                                     ARRAY_SIZE(vq->iov),
                                     &out, &in,
                                     NULL, NULL);
            /* On error, stop handling until the next kick. */
            if (unlikely(head < 0))
                break;
            /* Nothing new?  Wait for eventfd to tell us they refilled. */
            if (head == vq->num) {
                if (unlikely(vhost_enable_notify(&net->dev, vq))) {
                    vhost_disable_notify(&net->dev, vq);
                    continue;
                }
                break;
            }
            if (in) {
                vq_err(vq, "Unexpected descriptor format for TX: "
                       "out %d, int %d\n", out, in);
                break;
            }
            /* Skip header. TODO: support TSO. */
            s = move_iovec_hdr(vq->iov, nvq->hdr, hdr_size, out);
            msg.msg_iovlen = out;
            len = iov_length(vq->iov, out);
            /* Sanity check */
            if (!len) {
                vq_err(vq, "Unexpected header len for TX: "
                       "%zd expected %zd\n",
                       iov_length(nvq->hdr, s), hdr_size);
                break;
            }

            zcopy_used = zcopy && (len >= VHOST_GOODCOPY_LEN ||
                                   nvq->upend_idx != nvq->done_idx);

            /* use msg_control to pass vhost zerocopy ubuf info to skb */
            if (zcopy_used) {
                vq->heads[nvq->upend_idx].id = cpu_to_vhost32(vq, head);
                if (!vhost_net_tx_select_zcopy(net) ||
                    len < VHOST_GOODCOPY_LEN) {
                    /* copy don't need to wait for DMA done */
                    vq->heads[nvq->upend_idx].len =
                        VHOST_DMA_DONE_LEN;
                    msg.msg_control = NULL;
                    msg.msg_controllen = 0;
                    ubufs = NULL;
                } else {
                    struct ubuf_info *ubuf;
                    ubuf = nvq->ubuf_info + nvq->upend_idx;

                    vq->heads[nvq->upend_idx].len =
                        VHOST_DMA_IN_PROGRESS;
                    ubuf->callback = vhost_zerocopy_callback;
                    ubuf->ctx = nvq->ubufs;
                    ubuf->desc = nvq->upend_idx;
                    msg.msg_control = ubuf;
                    msg.msg_controllen = sizeof(ubuf);
                    ubufs = nvq->ubufs;
                    atomic_inc(&ubufs->refcount);
                }
                nvq->upend_idx = (nvq->upend_idx + 1) % UIO_MAXIOV;
            } else
                msg.msg_control = NULL;
            /* TODO: Check specific error and bomb out unless ENOBUFS? */
            err = sock->ops->sendmsg(NULL, sock, &msg, len);
            if (unlikely(err < 0)) {
                if (zcopy_used) {
                    if (ubufs)
                        vhost_net_ubuf_put(ubufs);
                    nvq->upend_idx = ((unsigned)nvq->upend_idx - 1)
                        % UIO_MAXIOV;
                }
                vhost_discard_vq_desc(vq, 1);
                break;
            }
            if (err != len)
                pr_debug("Truncated TX packet: "
                         " len %d != %zd\n", err, len);
            if (!zcopy_used)
                vhost_add_used_and_signal(&net->dev, vq, head, 0);
            else
                vhost_zerocopy_signal_used(net, vq);
            total_len += len;
            vhost_net_tx_packet(net);
            if (unlikely(total_len >= VHOST_NET_WEIGHT)) {
                vhost_poll_queue(&vq->poll);
                break;
            }
        }
    out:
        mutex_unlock(&vq->mutex);
    }

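This function calls sock->ops->sendmsg, which is tun_sendmsg. tun_sendmsg eventually calls netif_rx, the network stack's receive entry point for NIC drivers, so from the host's point of view the tap interface has just received a packet. From there the packet can be forwarded out through the Linux bridge.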
