Spark Streaming: consuming Kafka data

1. Start ZooKeeper and Kafka on the virtual machine and create a topic named test1. This walkthrough uses test1 as the topic.

2. Scala program

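Before running, change the broker hostname in step 3 of the code (define the Kafka parameters) to your own host, and change the name of the topic to consume (passed to `Subscribe` in step 4).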
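
Instead of editing these values in the source before every run, one option is to pass them as program arguments and fall back to the values used in this post. This is a minimal sketch under assumptions: the argument order (broker list first, topic second) and the names `KafkaArgs` and `parse` are hypothetical, not part of the original program.

```scala
// Hypothetical helper: reads the Kafka broker list and topic from the
// command line, falling back to the values hard-coded in this post.
object KafkaArgs {
  val DefaultBrokers = "hadoop02:9092"
  val DefaultTopic = "test1"

  /** Returns (bootstrap servers, topic). Assumed order: args(0) = brokers, args(1) = topic. */
  def parse(args: Array[String]): (String, String) = {
    val brokers = args.headOption.getOrElse(DefaultBrokers)
    val topic = args.drop(1).headOption.getOrElse(DefaultTopic)
    (brokers, topic)
  }

  def main(args: Array[String]): Unit = {
    val (brokers, topic) = parse(args)
    println(s"bootstrap.servers=$brokers topic=$topic")
  }
}
```

The returned broker string would go into `ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG` and the topic into `Set(topic)` for `Subscribe`.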

    package scala.spark

    import org.apache.kafka.clients.consumer.{ConsumerConfig, ConsumerRecord}
    import org.apache.spark.SparkConf
    import org.apache.spark.streaming.dstream.{DStream, InputDStream}
    import org.apache.spark.streaming.kafka010.{ConsumerStrategies, KafkaUtils, LocationStrategies}
    import org.apache.spark.streaming.{Seconds, StreamingContext}

    object Kafka {
      def main(args: Array[String]): Unit = {
        // 1. Create the SparkConf
        val sparkConf: SparkConf = new SparkConf().setAppName("ReceiverWordCount").setMaster("local[*]")

        // 2. Create the StreamingContext with a 3-second batch interval
        val ssc = new StreamingContext(sparkConf, Seconds(3))

        // 3. Define the Kafka parameters (change the broker hostname here)
        val kafkaPara: Map[String, Object] = Map[String, Object](
          ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG -> "hadoop02:9092",
          ConsumerConfig.GROUP_ID_CONFIG -> "test1",
          "key.deserializer" -> "org.apache.kafka.common.serialization.StringDeserializer",
          "value.deserializer" -> "org.apache.kafka.common.serialization.StringDeserializer"
        )

        // 4. Read from Kafka and create a DStream (change the topic name here)
        val kafkaDStream: InputDStream[ConsumerRecord[String, String]] =
          KafkaUtils.createDirectStream[String, String](
            ssc,
            LocationStrategies.PreferConsistent,
            ConsumerStrategies.Subscribe[String, String](Set("test1"), kafkaPara))

        // 5. Extract the value of each Kafka record
        val valueDStream: DStream[String] = kafkaDStream.map(record => record.value())

        // 6. Count the words in each batch and print the result
        valueDStream.flatMap(_.split(" "))
          .map((_, 1))
          .reduceByKey(_ + _)
          .print()

        // 7. Start the job and block until it terminates
        //    (start() must be called only once per StreamingContext)
        ssc.start()
        ssc.awaitTermination()
      }
    }
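
Steps 5 and 6 turn each batch of Kafka records into (word, count) pairs. The same `flatMap` / `map` / `reduceByKey` pipeline can be mimicked on an ordinary Scala collection to check what one batch's `print()` output should contain. This is a local illustration, not Spark code; `BatchWordCount` and `countWords` are hypothetical names, and `groupBy` plus a sum stands in for `reduceByKey`.

```scala
// Local (non-Spark) illustration of steps 5-6 for a single batch of lines.
object BatchWordCount {
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))                 // like valueDStream.flatMap(_.split(" "))
      .map((_, 1))                           // like .map((_, 1))
      .groupBy(_._1)                         // group the pairs by word...
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // ...and sum, like reduceByKey(_ + _)

  def main(args: Array[String]): Unit = {
    val batch = Seq("hello spark", "hello kafka")
    println(countWords(batch))
  }
}
```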

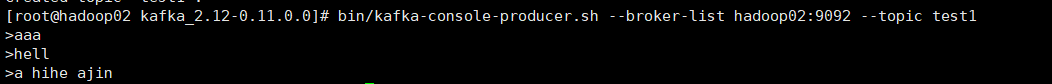

3. Start a Kafka producer

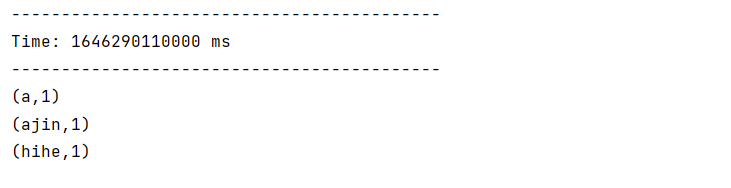

The messages were consumed. Yay!
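
Because the job applies `reduceByKey` to a DStream, the counts are computed separately for each 3-second batch and are not accumulated across batches; a running total would need a stateful operator such as `updateStateByKey`. The sketch below models this with plain collections, under simplifying assumptions: messages are hypothetical (arrival-millis, line) pairs bucketed by arrival time, whereas the real direct stream assigns records to batches by Kafka offsets. `PerBatchCounts` and `batchCounts` are hypothetical names.

```scala
// Simplified model of Spark Streaming's per-batch semantics using plain collections.
// Input: hypothetical (arrivalMillis, line) pairs; output: batch index -> word counts.
object PerBatchCounts {
  val BatchMillis = 3000L // mirrors Seconds(3) in the StreamingContext

  /** Buckets messages into 3-second batches and word-counts each batch independently. */
  def batchCounts(msgs: Seq[(Long, String)]): Map[Long, Map[String, Int]] =
    msgs
      .groupBy { case (t, _) => t / BatchMillis } // batch index for each message
      .map { case (batch, inBatch) =>
        val counts = inBatch
          .flatMap { case (_, line) => line.split(" ") }
          .groupBy(identity)
          .map { case (word, ws) => (word, ws.size) }
        (batch, counts) // counts start from zero in every batch
      }

  def main(args: Array[String]): Unit = {
    val msgs = Seq((500L, "hello"), (1200L, "hello world"), (4000L, "hello"))
    batchCounts(msgs).toSeq.sortBy(_._1).foreach { case (b, c) => println(s"batch $b: $c") }
  }
}
```

In this model, "hello" is reported as 2 in the first batch and 1 in the second, not as a total of 3.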
