5. Naive Bayes (Naive Bayesian) Algorithm: Supervised Learning, Classification

1. The Naive Bayes Algorithm

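Naive Bayes is a classification method based on Bayes' theorem and on the assumption that the features are conditionally independent given the class. It uses the feature values to compute the probability of each class and assigns the sample to the most probable class, so it is a probabilistic classifier. It is trained on samples whose class labels are known, which makes it a supervised learning method. Naive Bayes is one of the most widely used classification algorithms.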

Advantages: remains effective when little data is available; handles multi-class problems.

Disadvantages: sensitive to how the input data is prepared.

Applicable data type: nominal (categorical) data.

2. Basic Probability Theory

(1) Conditional probability:

$P\left( {A|B} \right) = \frac{{P\left( {AB} \right)}}{{P\left( B \right)}} = \frac{{P\left( {B|A} \right)P\left( A \right)}}{{P\left( B \right)}}$
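As a quick numerical check of the two forms of the conditional probability, the sketch below uses a hypothetical joint distribution of two events A and B. The numbers are invented for illustration. Both routes give the same value of $P(A|B)$.

```python
from fractions import Fraction

# Hypothetical joint distribution of two events A and B:
# joint[(a, b)] = P(A = a, B = b); the four cells sum to 1.
joint = {
    (True, True): Fraction(1, 8),
    (True, False): Fraction(3, 8),
    (False, True): Fraction(2, 8),
    (False, False): Fraction(2, 8),
}

p_ab = joint[(True, True)]                           # P(AB)
p_a = sum(p for (a, _), p in joint.items() if a)     # P(A) = 1/2
p_b = sum(p for (_, b), p in joint.items() if b)     # P(B) = 3/8

p_a_given_b = p_ab / p_b                             # definition: P(AB) / P(B)
p_b_given_a = p_ab / p_a                             # P(B|A) = P(AB) / P(A)
p_a_given_b_via_bayes = p_b_given_a * p_a / p_b      # P(B|A) P(A) / P(B)

print(p_a_given_b, p_a_given_b_via_bayes)
```

Both values come out as 1/3. Exact `Fraction` arithmetic is used so the equality holds without rounding.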

(2) Law of total probability, where $A_1, A_2, \cdots, A_n$ form a partition of the sample space:

$P\left( B \right) = \sum\limits_{i = 1}^n {P\left( {B|{A_i}} \right)} P\left( {{A_i}} \right)$

(3) Bayes' theorem:

$P\left( A_i | B \right) = \frac{P\left( B | A_i \right) P\left( A_i \right)}{\sum\limits_{j = 1}^n P\left( B | A_j \right) P\left( A_j \right)}$
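The two formulas above work together. Total probability supplies the denominator, and Bayes' theorem turns $P(B|A_i)$ into $P(A_i|B)$. The sketch below assumes a hypothetical partition: three sources supplying 50%, 30%, and 20% of all items, with defect rates of 1%, 2%, and 3%, and $B$ meaning "the item is defective". The function name `bayes_posterior` is illustrative only.

```python
from fractions import Fraction

def bayes_posterior(priors, likelihoods):
    """P(A_i | B) for a partition A_1, ..., A_n.

    priors[i]      -- P(A_i), assumed to sum to 1
    likelihoods[i] -- P(B | A_i)
    """
    # Law of total probability: P(B) = sum_j P(B | A_j) P(A_j)
    p_b = sum(lik * p for lik, p in zip(likelihoods, priors))
    # Bayes' theorem: P(A_i | B) = P(B | A_i) P(A_i) / P(B)
    return [lik * p / p_b for lik, p in zip(likelihoods, priors)]

# Hypothetical sources A_1..A_3 and their defect rates P(B | A_i)
priors = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]
likelihoods = [Fraction(1, 100), Fraction(2, 100), Fraction(3, 100)]
print(bayes_posterior(priors, likelihoods))
```

Here $P(B) = 17/1000$, so the posteriors are $5/17$, $6/17$, and $6/17$. The source with the highest defect rate does not dominate, because it supplies the fewest items.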

3. Learning and Classification with Naive Bayes

Naive Bayes builds on conditional probability, Bayes' theorem, and the conditional independence assumption.

Input: a training set of $N$ samples $T = \left\{ \left( x^{(1)}, y^{(1)} \right), \left( x^{(2)}, y^{(2)} \right), \cdots, \left( x^{(N)}, y^{(N)} \right) \right\}$, where $x^{(i)} = \left( x_1^{(i)}, x_2^{(i)}, \cdots, x_n^{(i)} \right)^T$ and $x_j^{(i)}$ is the $j$-th feature of the $i$-th sample. Each feature takes values in a finite set, $x_j^{(i)} \in \left\{ a_{j1}, a_{j2}, \cdots, a_{jS_j} \right\}$, where $a_{jl}$ is the $l$-th possible value of the $j$-th feature, $j = 1, 2, \cdots, n$, $l = 1, 2, \cdots, S_j$. The labels satisfy $y^{(i)} \in \left\{ c_1, c_2, \cdots, c_K \right\}$. Also given: an instance $x$ to be classified.

Output: the class of instance $x$.

(1) Conditional independence assumption

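Naive Bayes imposes a conditional independence assumption on the conditional probability distribution. This is a strong assumption, and it is where the name "naive" comes from. Specifically, the assumption is: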

$P\left( {X = x|Y = {c_k}} \right) = P\left( {{X_1} = {x_1}{\rm{,}} \cdots {\rm{,}}{X_n} = {x_n}|Y = {c_k}} \right) = \prod\limits_{j = 1}^n {P\left( {{X_j} = {x_j}|Y = {c_k}} \right)} $

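Naive Bayes in effect learns the mechanism that generates the data, i.e. the joint distribution $P(X, Y)$, so it is a generative model. The conditional independence assumption states that, once the class is fixed, the features used for classification are independent of one another. The assumption keeps the method simple, but it can cost classification accuracy when the features are in fact dependent.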
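Counting table entries shows why the assumption makes the method simple. Without it, storing $P(X = x | Y = c_k)$ needs one entry per class and per combination of feature values, $K \prod_j S_j$ in total. The factorized form needs only $K \sum_j S_j$ entries. The helper name `table_sizes` below is hypothetical.

```python
from math import prod

def table_sizes(num_classes, cardinalities):
    """Number of entries needed to store P(X = x | Y = c_k).

    num_classes   -- K
    cardinalities -- [S_1, ..., S_n], the number of values of each feature
    Returns (full joint table size, size of the factorized naive Bayes tables).
    """
    full = num_classes * prod(cardinalities)    # K * prod_j S_j
    naive = num_classes * sum(cardinalities)    # K * sum_j S_j
    return full, naive

print(table_sizes(2, [3, 3]))     # two features with three values each, as in section 4
print(table_sizes(2, [2] * 30))   # thirty binary features
```

For the two-feature example of section 4 the counts are 18 versus 12. With thirty binary features they are $2^{31}$ versus 120, which is the gap that makes estimation from a small training set feasible.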

(2) Posterior probability maximization

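To classify a given input $x$, naive Bayes uses the learned model to compute the posterior distribution $P\left( Y = c_k | X = x \right)$ and outputs the class with the largest posterior probability. By Bayes' theorem, the posterior is: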

$P\left( Y = c_k | X = x \right) = \frac{P\left( X = x | Y = c_k \right) P\left( Y = c_k \right)}{\sum\limits_{k' = 1}^K P\left( X = x | Y = c_{k'} \right) P\left( Y = c_{k'} \right)}$

Substituting the independence assumption into this expression gives:

$P\left( Y = c_k | X = x \right) = \frac{P\left( Y = c_k \right) \prod\limits_{j = 1}^n P\left( X_j = x_j | Y = c_k \right)}{\sum\limits_{k' = 1}^K P\left( Y = c_{k'} \right) \prod\limits_{j = 1}^n P\left( X_j = x_j | Y = c_{k'} \right)}$

This is the basic formula of naive Bayes classification. The naive Bayes classifier can therefore be written as:

$y = f\left( x \right) = \mathop{\arg\max}\limits_{c_k} P\left( Y = c_k | X = x \right) = \mathop{\arg\max}\limits_{c_k} \frac{P\left( Y = c_k \right) \prod\limits_{j = 1}^n P\left( X_j = x_j | Y = c_k \right)}{\sum\limits_{k' = 1}^K P\left( Y = c_{k'} \right) \prod\limits_{j = 1}^n P\left( X_j = x_j | Y = c_{k'} \right)}$

The denominator in this expression is the same for every $c_k$, so it can be dropped:

$y = f\left( x \right) = \mathop{\arg\max}\limits_{c_k} P\left( Y = c_k \right) \prod\limits_{j = 1}^n P\left( X_j = x_j | Y = c_k \right)$
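The sketch below checks that dropping the common denominator leaves the winning class unchanged. It uses a hypothetical model with two classes and two binary features. The unnormalized scores and the normalized posteriors share the same arg max. The nested-dict layout of `cond` and the function names are assumptions made for illustration.

```python
def nb_scores(prior, cond, x):
    """Unnormalized scores P(Y=c) * prod_j P(X_j = x_j | Y=c) for every class c.

    prior -- dict: class -> P(Y=c)
    cond  -- dict: class -> list of dicts, cond[c][j][v] = P(X_j = v | Y=c)
    x     -- tuple of feature values (x_1, ..., x_n)
    """
    scores = {}
    for c, p in prior.items():
        s = p
        for j, v in enumerate(x):
            s *= cond[c][j][v]
        scores[c] = s
    return scores

def nb_posterior(prior, cond, x):
    """Posteriors P(Y=c | X=x): the same scores divided by their common sum."""
    scores = nb_scores(prior, cond, x)
    total = sum(scores.values())      # the denominator, identical for every class
    return {c: s / total for c, s in scores.items()}

# Hypothetical model with two classes and two binary features
prior = {"a": 0.7, "b": 0.3}
cond = {
    "a": [{0: 0.9, 1: 0.1}, {0: 0.4, 1: 0.6}],
    "b": [{0: 0.2, 1: 0.8}, {0: 0.5, 1: 0.5}],
}
x = (1, 0)
scores = nb_scores(prior, cond, x)
post = nb_posterior(prior, cond, x)
print(max(scores, key=scores.get), max(post, key=post.get))
```

The scores are 0.028 for class `"a"` and 0.12 for class `"b"`, so both arg max calls return `"b"`. Dividing by the positive constant 0.148 rescales the scores without reordering them.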

Meaning of posterior probability maximization:

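Assigning an instance to the class with the largest posterior probability is equivalent to minimizing the expected risk under the 0-1 loss function. With 0-1 loss, the expected risk of choosing class $c_k$ is $1 - P\left( Y = c_k | X = x \right)$, so the smallest risk belongs to the largest posterior.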
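The sketch below checks this equivalence numerically with a hypothetical posterior over three classes. The class with the lowest expected 0-1 risk is the class with the highest posterior. The function name `expected_risk` is illustrative.

```python
def expected_risk(posterior, decision):
    """Expected 0-1 loss of predicting `decision`: the posterior mass of all other classes."""
    return sum(p for c, p in posterior.items() if c != decision)

# Hypothetical posterior P(Y=c | X=x) over three classes
posterior = {"c1": 0.2, "c2": 0.5, "c3": 0.3}
risks = {c: expected_risk(posterior, c) for c in posterior}

best_by_risk = min(risks, key=risks.get)                 # minimize expected risk
best_by_posterior = max(posterior, key=posterior.get)    # maximize posterior
print(best_by_risk, best_by_posterior)
```

Both selections return `c2`. Each risk equals one minus that class's posterior.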

(3) Computing the probabilities

The prior probability $P\left( Y = c_k \right)$ is computed as:

$P\left( {Y = {c_k}} \right) = \frac{{\sum\limits_{i = 1}^N {I\left( {{y^{\left( i \right)}} = {c_k}} \right)} }}{N}{\rm{,}}k = 1,2, \cdots ,K$

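The conditional probability $P\left( X_j = a_{jl} | Y = c_k \right)$ is computed as follows. Here $I(\cdot)$ is the indicator function, equal to 1 when its condition holds and 0 otherwise: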

$P\left( X_j = a_{jl} | Y = c_k \right) = \frac{\sum\limits_{i = 1}^N I\left( x_j^{(i)} = a_{jl}, y^{(i)} = c_k \right)}{\sum\limits_{i = 1}^N I\left( y^{(i)} = c_k \right)}$

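Note: estimates computed this way can be 0. A single zero factor makes the whole product in the posterior zero, regardless of the other features, which biases the classification. The remedy is to introduce a parameter $\lambda \ge 0$ (Bayesian estimation). The two formulas then become the following; $\lambda = 0$ recovers the maximum-likelihood estimates above, and $\lambda = 1$ is called Laplace smoothing: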

$P\left( {Y = {c_k}} \right) = \frac{{\sum\limits_{i = 1}^N {I\left( {{y^{\left( i \right)}} = {c_k}} \right)} + \lambda }}{{N + K\lambda }}$

$P\left( X_j = a_{jl} | Y = c_k \right) = \frac{\sum\limits_{i = 1}^N I\left( x_j^{(i)} = a_{jl}, y^{(i)} = c_k \right) + \lambda}{\sum\limits_{i = 1}^N I\left( y^{(i)} = c_k \right) + S_j \lambda}$
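The counting formulas above, with and without smoothing, can be sketched as a single routine. Setting `lam` = 0 reproduces the maximum-likelihood estimates, and `lam` = 1 gives Laplace smoothing. The function name `estimate` and the five-sample dataset are hypothetical. In the data, value `"b"` of the second feature never occurs in class 1, which triggers the zero-probability problem.

```python
from collections import Counter
from fractions import Fraction

def estimate(X, y, feature_values, lam=0):
    """Counting estimates of P(Y=c_k) and P(X_j=a_jl | Y=c_k).

    lam = 0 gives the maximum-likelihood formulas, lam = 1 Laplace smoothing.
    lam should be an int or Fraction so that the results stay exact fractions.
    """
    N, classes = len(y), sorted(set(y))
    K = len(classes)
    class_count = Counter(y)
    prior = {c: Fraction(class_count[c] + lam, N + K * lam) for c in classes}
    cond = {}
    for c in classes:
        cond[c] = []
        for j, values in enumerate(feature_values):      # values = A_j, so S_j = len(values)
            counts = Counter(x[j] for x, label in zip(X, y) if label == c)
            denom = class_count[c] + len(values) * lam
            cond[c].append({a: Fraction(counts[a] + lam, denom) for a in values})
    return prior, cond

# Hypothetical data: value "b" of the second feature never occurs in class 1
X = [(0, "a"), (0, "b"), (1, "a"), (1, "a"), (0, "a")]
y = [0, 0, 1, 1, 0]
feature_values = [[0, 1], ["a", "b"]]

for lam in (0, 1):
    prior, cond = estimate(X, y, feature_values, lam)
    print("lam =", lam, "P(Y=1) =", prior[1], "P(X_2=b|Y=1) =", cond[1][1]["b"])
```

With `lam = 0` the estimates are $P(Y=1) = 2/5$ and $P(X_2 = b | Y = 1) = 0$. With `lam = 1` they become $3/7$ and $1/4$. Every conditional table still sums to 1, because the $S_j \lambda$ term in the denominator balances the $\lambda$ added to each of the $S_j$ numerators.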

(4) The naive Bayes algorithm

1) Compute the prior and conditional probabilities

$P\left( {Y = {c_k}} \right) = \frac{{\sum\limits_{i = 1}^N {I\left( {{y^{\left( i \right)}} = {c_k}} \right)} }}{N}$

$P\left( X_j = a_{jl} | Y = c_k \right) = \frac{\sum\limits_{i = 1}^N I\left( x_j^{(i)} = a_{jl}, y^{(i)} = c_k \right)}{\sum\limits_{i = 1}^N I\left( y^{(i)} = c_k \right)}$

2) For a given instance $x = \left( x_1, x_2, \cdots, x_n \right)^T$, compute for each class $c_k$:

$P\left( Y = c_k \right) \prod\limits_{j = 1}^n P\left( X_j = x_j | Y = c_k \right)$

3) Determine the class of instance $x$

$y = f\left( x \right) = \mathop{\arg\max}\limits_{c_k} P\left( Y = c_k \right) \prod\limits_{j = 1}^n P\left( X_j = x_j | Y = c_k \right)$

Here $j = 1, 2, \cdots, n$; $l = 1, 2, \cdots, S_j$; $k = 1, 2, \cdots, K$.
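Steps 1) to 3) can be sketched in Python as follows. This is an illustrative implementation, not the one from the references. It uses the smoothed estimates with parameter `lam` ($\lambda$, assumed to be greater than 0 here so that no logarithm of zero occurs). It adds log-probabilities instead of multiplying probabilities; this leaves the arg max unchanged because the logarithm is increasing. The function names `fit` and `predict` and the small weather-style dataset are hypothetical.

```python
import math
from collections import Counter

def fit(X, y, feature_values, lam=1.0):
    """Step 1): smoothed log prior and log conditional probabilities.

    X              -- list of samples, each a tuple (x_1, ..., x_n)
    y              -- list of class labels c_k
    feature_values -- feature_values[j] lists the possible values a_j1, ..., a_jS_j
    lam            -- smoothing parameter lambda (> 0 so that no log(0) occurs)
    """
    N, classes = len(y), sorted(set(y))
    class_count = Counter(y)
    log_prior = {c: math.log((class_count[c] + lam) / (N + len(classes) * lam))
                 for c in classes}
    log_cond = {}
    for c in classes:
        rows = [x for x, label in zip(X, y) if label == c]
        log_cond[c] = []
        for j, values in enumerate(feature_values):
            counts = Counter(row[j] for row in rows)
            denom = class_count[c] + len(values) * lam
            log_cond[c].append({a: math.log((counts[a] + lam) / denom) for a in values})
    return log_prior, log_cond

def predict(x, log_prior, log_cond):
    """Steps 2) and 3): log P(Y=c_k) + sum_j log P(X_j=x_j | Y=c_k), then arg max."""
    scores = {c: log_prior[c] + sum(log_cond[c][j][v] for j, v in enumerate(x))
              for c in log_prior}
    return max(scores, key=scores.get)

# Hypothetical toy data: two features, two classes
X = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"),
     ("rainy", "cool"), ("sunny", "cool"), ("rainy", "hot")]
y = ["no", "no", "yes", "yes", "yes", "no"]
feature_values = [["sunny", "rainy"], ["hot", "mild", "cool"]]

log_prior, log_cond = fit(X, y, feature_values)
print(predict(("rainy", "cool"), log_prior, log_cond))   # prints yes
print(predict(("sunny", "hot"), log_prior, log_cond))    # prints no
```

For `("rainy", "cool")` the smoothed likelihood products are $2/5 \cdot 1/6 = 1/15$ for `"no"` and $3/5 \cdot 3/6 = 3/10$ for `"yes"`. The two priors are equal, so `"yes"` wins.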

4. A Worked Naive Bayes Example

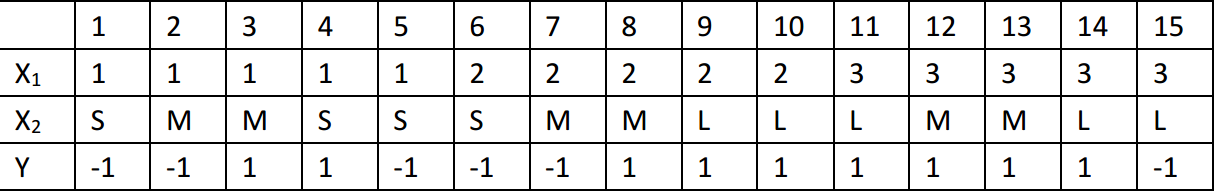

Using the training data in the table above, learn a naive Bayes classifier and determine the class label $y$ of the instance $x = \left( 2, S \right)^T$.

In the table, $X_1$ and $X_2$ are features whose value sets are $A_1 = \left\{ 1, 2, 3 \right\}$ and $A_2 = \left\{ S, M, L \right\}$, and $Y \in \left\{ -1, 1 \right\}$ is the class label.

Solution: following the naive Bayes algorithm, compute the following probabilities:

$P\left( {Y = 1} \right) = \frac{9}{{15}}$,$P\left( {Y = {\rm{ - }}1} \right) = \frac{6}{{15}}$

$P\left( {{X_1} = 1|Y = 1} \right) = \frac{2}{9}$,$P\left( {{X_1} = 2|Y = 1} \right) = \frac{3}{9}$,$P\left( {{X_1} = 3|Y = 1} \right) = \frac{4}{9}$

$P\left( {{X_1} = 1|Y = {\rm{ - }}1} \right) = \frac{3}{6}$,$P\left( {{X_1} = 2|Y = {\rm{ - }}1} \right) = \frac{2}{6}$,$P\left( {{X_1} = 3|Y = {\rm{ - }}1} \right) = \frac{1}{6}$

$P\left( {{X_2} = S|Y = 1} \right) = \frac{1}{9}$,$P\left( {{X_2} = M|Y = 1} \right) = \frac{4}{9}$,$P\left( {{X_2} = L|Y = 1} \right) = \frac{4}{9}$

$P\left( {{X_2} = S|Y = {\rm{ - }}1} \right) = \frac{3}{6}$,$P\left( {{X_2} = M|Y = {\rm{ - }}1} \right) = \frac{2}{6}$,$P\left( {{X_2} = L|Y = {\rm{ - }}1} \right) = \frac{1}{6}$

For the given instance $x = \left( 2, S \right)^T$, compute:

$P\left( Y = 1 \right) P\left( X_1 = 2 | Y = 1 \right) P\left( X_2 = S | Y = 1 \right) = \frac{9}{15} \cdot \frac{3}{9} \cdot \frac{1}{9} = \frac{1}{45}$

$P\left( {Y = {\rm{ - }}1} \right)P\left( {{X_1} = 2|Y = {\rm{ - }}1} \right)P\left( {{X_2} = S|Y = {\rm{ - }}1} \right){\rm{ = }}\frac{6}{{15}} \cdot \frac{2}{6} \cdot \frac{3}{6}{\rm{ = }}\frac{1}{{15}}$

Since $\frac{1}{15} > \frac{1}{45}$, the instance is classified as $y = -1$.
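The arithmetic above can be checked with exact fractions. The counts below are read off the listed probabilities. For example, $P(X_1 = 2 | Y = 1) = 3/9$ means 3 of the 9 class-1 samples have $X_1 = 2$. Only the counts needed for $x = (2, S)^T$ are included. Passing `lam = 1` applies the Laplace-smoothed formulas of section 3 to the same counts. The names `count`, `num_values`, and `score` are hypothetical.

```python
from fractions import Fraction

N = 15                                   # number of training samples
class_count = {1: 9, -1: 6}              # samples per class
num_values = {"X1": 3, "X2": 3}          # S_1 = |A_1|, S_2 = |A_2|
# count[c][(feature, value)]: number of class-c samples having that feature value
count = {
    1: {("X1", 2): 3, ("X2", "S"): 1},
    -1: {("X1", 2): 2, ("X2", "S"): 3},
}
x = [("X1", 2), ("X2", "S")]             # the instance x = (2, S)^T

def score(c, lam=0):
    """P(Y=c) * prod_j P(X_j = x_j | Y=c), estimated with smoothing parameter lam."""
    K = len(class_count)
    s = Fraction(class_count[c] + lam, N + K * lam)
    for feature, value in x:
        s *= Fraction(count[c][(feature, value)] + lam,
                      class_count[c] + num_values[feature] * lam)
    return s

for lam in (0, 1):
    scores = {c: score(c, lam) for c in class_count}
    print("lam =", lam, scores, "-> y =", max(scores, key=scores.get))
```

With `lam = 0` the scores are $1/45$ for $Y = 1$ and $1/15$ for $Y = -1$. With `lam = 1` they are $5/153$ and $28/459$. Either way the prediction is $y = -1$.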

5. Naive Bayes in Python: Text Classification for Filtering Abusive Website Comments [2]

```python
'''
Created on Oct 19, 2010
@author: Peter
'''
import numpy as np

# Word-list-to-vector conversion functions
def loadDataSet():
    postingList = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
                   ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
                   ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
                   ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
                   ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
                   ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]
    classVec = [0, 1, 0, 1, 0, 1]  # 1 is abusive, 0 not
    return postingList, classVec

# Create a list of the unique words that appear in any document
def createVocabList(dataSet):
    vocabSet = set([])  # create empty set
    for document in dataSet:
        vocabSet = vocabSet | set(document)  # union of the two sets
    return list(vocabSet)

# Set-of-words vector: entry is 1 if the vocabulary word occurs in the input document, else 0
def setOfWords2Vec(vocabList, inputSet):
    returnVec = [0] * len(vocabList)
    for word in inputSet:
        if word in vocabList:
            returnVec[vocabList.index(word)] = 1
        else:
            print("the word: %s is not in my Vocabulary!" % word)
    return returnVec

# Naive Bayes classifier training function
def trainNB0(trainMatrix, trainCategory):
    numTrainDocs = len(trainMatrix)
    numWords = len(trainMatrix[0])
    pAbusive = sum(trainCategory) / float(numTrainDocs)  # P(Y=1)
    # Start every word count at 1 so that no conditional probability is 0.
    # The denominators start at 2.0 (the book's choice), not at numWords, so the
    # resulting word probabilities are all nonzero but do not sum to 1 per class.
    p0Num = np.ones(numWords); p1Num = np.ones(numWords)  # change to ones()
    p0Denom = 2.0; p1Denom = 2.0  # change to 2.0
    for i in range(numTrainDocs):
        if trainCategory[i] == 1:
            p1Num += trainMatrix[i]
            p1Denom += sum(trainMatrix[i])
        else:
            p0Num += trainMatrix[i]
            p0Denom += sum(trainMatrix[i])
    # Logs turn the product of many small probabilities into a sum and avoid underflow
    p1Vect = np.log(p1Num / p1Denom)  # change to log()
    p0Vect = np.log(p0Num / p0Denom)  # change to log()
    return p0Vect, p1Vect, pAbusive

# Naive Bayes classification function
def classifyNB(vec2Classify, p0Vec, p1Vec, pClass1):
    # log P(Y=c) + sum over words present of log P(word | Y=c)
    p1 = sum(vec2Classify * p1Vec) + np.log(pClass1)  # element-wise mult
    p0 = sum(vec2Classify * p0Vec) + np.log(1.0 - pClass1)
    if p1 > p0:
        return 1
    else:
        return 0

# Naive Bayes bag-of-words model: almost identical to setOfWords2Vec(),
# except that each occurrence of a word increments its entry in the vector
def bagOfWords2VecMN(vocabList, inputSet):
    returnVec = [0] * len(vocabList)
    for word in inputSet:
        if word in vocabList:
            returnVec[vocabList.index(word)] += 1
    return returnVec

# Test
def testingNB():
    listOPosts, listClasses = loadDataSet()
    myVocabList = createVocabList(listOPosts)
    trainMat = []
    for postinDoc in listOPosts:
        trainMat.append(setOfWords2Vec(myVocabList, postinDoc))
    p0V, p1V, pAb = trainNB0(np.array(trainMat), np.array(listClasses))
    testEntry = ['love', 'my', 'dalmation']
    thisDoc = np.array(setOfWords2Vec(myVocabList, testEntry))
    print(testEntry, 'classified as: ', classifyNB(thisDoc, p0V, p1V, pAb))
    testEntry = ['stupid', 'garbage']
    thisDoc = np.array(setOfWords2Vec(myVocabList, testEntry))
    print(testEntry, 'classified as: ', classifyNB(thisDoc, p0V, p1V, pAb))

if __name__ == "__main__":
    listOPosts, listClasses = loadDataSet()
    myVocabList = createVocabList(listOPosts)
    # Example document vectors for posts 0 and 3 (not used further)
    a = setOfWords2Vec(myVocabList, listOPosts[0])
    b = setOfWords2Vec(myVocabList, listOPosts[3])
    # Test
    testingNB()
```
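`trainNB0` above keeps the book's initial denominator of 2.0. The sketch below is a multinomial (bag-of-words) variant that applies the $\lambda$ formula of section 3 exactly, with the vocabulary size in the denominator. It is a swapped-in variant for comparison, not the code from [2]. The function names are hypothetical, and the prior is left unsmoothed.

```python
import math
from collections import Counter

# The six example posts from loadDataSet(); 1 = abusive, 0 = not abusive
posts = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
         ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
         ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
         ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
         ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
         ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]
labels = [0, 1, 0, 1, 0, 1]

def train_multinomial_nb(docs, labels, lam=1.0):
    """Return (vocab, log_prior, log_cond) with
    log_cond[c][w] = log((occurrences of w in class c + lam) / (words in class c + lam * |V|)).
    lam must be > 0 so that no logarithm of zero is taken."""
    vocab = sorted({w for d in docs for w in d})
    log_prior, log_cond = {}, {}
    for c in sorted(set(labels)):
        class_docs = [d for d, y in zip(docs, labels) if y == c]
        log_prior[c] = math.log(len(class_docs) / len(docs))
        counts = Counter(w for d in class_docs for w in d)   # word occurrences in class c
        denom = sum(counts.values()) + lam * len(vocab)
        log_cond[c] = {w: math.log((counts[w] + lam) / denom) for w in vocab}
    return vocab, log_prior, log_cond

def classify_multinomial_nb(doc, log_prior, log_cond):
    """Arg max over classes of log P(c) + sum of log P(w | c) over the words of doc.
    Repeated words count repeatedly; words outside the vocabulary are skipped."""
    scores = {c: log_prior[c] + sum(log_cond[c][w] for w in doc if w in log_cond[c])
              for c in log_prior}
    return max(scores, key=scores.get)

vocab, log_prior, log_cond = train_multinomial_nb(posts, labels)
for doc in (['love', 'my', 'dalmation'], ['stupid', 'garbage']):
    print(doc, 'classified as:', classify_multinomial_nb(doc, log_prior, log_cond))
```

On these six posts the vocabulary has 32 words. The two test entries from `testingNB()` are labeled 0 and 1, and each class's word probabilities now sum to 1.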

References

[1] Li Hang. Statistical Learning Methods [M]. Beijing: Tsinghua University Press, 2012.

[2] Peter Harrington. Machine Learning in Action [M]. Beijing: Posts & Telecom Press, 2013.

[3] Zhao Zhiyong. Python Machine Learning Algorithms [M]. Beijing: Publishing House of Electronics Industry, 2017.

[4] Zhou Zhihua. Machine Learning [M]. Beijing: Tsinghua University Press, 2016.

Zhejiang Public Security Internet Filing No. 33010602011771
