Assignment 3

Task ①

Weather forecast scraping

Requirements

Scrape the 7-day weather forecast for a given set of cities from the China Weather Network (http://www.weather.com.cn) and save it in a database.

Core code and results


```python
from bs4 import BeautifulSoup
from bs4 import UnicodeDammit
import urllib.request
import sqlite3


class WeatherDB:
    def openDB(self):
        self.con = sqlite3.connect("weathers.db")
        self.cursor = self.con.cursor()
        try:
            self.cursor.execute("""
                CREATE TABLE weathers (
                    wCity VARCHAR(16),
                    wDate VARCHAR(16),
                    wWeather VARCHAR(64),
                    wTemp VARCHAR(32),
                    CONSTRAINT pk_weather PRIMARY KEY (wCity, wDate)
                )
            """)
        except sqlite3.OperationalError:
            # The table already exists: clear out the old rows
            self.cursor.execute("DELETE FROM weathers")

    def closeDB(self):
        self.con.commit()
        self.con.close()

    def insert(self, city, date, weather, temp):
        try:
            self.cursor.execute(
                "INSERT INTO weathers (wCity, wDate, wWeather, wTemp) VALUES (?, ?, ?, ?)",
                (city, date, weather, temp),
            )
        except Exception as err:
            print("Insert error:", err)

    def show(self):
        self.cursor.execute("SELECT * FROM weathers")
        rows = self.cursor.fetchall()
        print("%-16s%-16s%-32s%-16s" % ("city", "date", "weather", "temp"))
        for row in rows:
            print("%-16s%-16s%-32s%-16s" % (row[0], row[1], row[2], row[3]))


class WeatherForecast:
    def __init__(self):
        # Browser-like User-Agent so the site serves the normal page
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"
        }
        # City codes used in the weather.com.cn forecast URLs
        self.cityCode = {
            "北京": "101010100",
            "上海": "101020100",
            "广州": "101280101",
            "深圳": "101280601",
        }
        self.db = WeatherDB()

    def forecastCity(self, city):
        if city not in self.cityCode:
            print(city + " code cannot be found")
            return
        url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + ".shtml"
        try:
            req = urllib.request.Request(url, headers=self.headers)
            data = urllib.request.urlopen(req).read()
            # Let UnicodeDammit choose between UTF-8 and GBK
            dammit = UnicodeDammit(data, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            soup = BeautifulSoup(data, "lxml")
            # Each <li> in the 7-day list holds one day's forecast
            lis = soup.select("ul[class='t clearfix'] li")
            for li in lis:
                try:
                    date = li.select('h1')[0].text
                    weather = li.select('p[class="wea"]')[0].text
                    # The high temperature (<span>) can be missing, so fall back to ""
                    temp_high = li.select('p[class="tem"] span')
                    high = temp_high[0].text if temp_high else ""
                    low = li.select('p[class="tem"] i')[0].text
                    temp = high + "/" + low
                    print(city, date, weather, temp)
                    self.db.insert(city, date, weather, temp)
                except Exception as err:
                    print("Parse error:", err)
        except Exception as err:
            print("Request error:", err)

    def process(self, cities):
        self.db.openDB()
        for city in cities:
            self.forecastCity(city)
        # self.db.show()  # uncomment to print the stored rows
        self.db.closeDB()


if __name__ == "__main__":
    ws = WeatherForecast()
    ws.process(["北京", "上海", "广州", "深圳"])
    print("completed")
```

gitee:https://gitee.com/abcman12/2025_crawl_project/tree/master/第二次作业/作业1

Reflections

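The key to this project was finding each city's URL. Comparing the URLs for different cities shows that they differ only in the city code. A User-Agent header is also needed to mimic a browser. The page encoding has to be handled carefully as well, switching between UTF-8 and GBK.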
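Both points can be sketched in isolation: only the city code changes between URLs, and the page may arrive as either UTF-8 or GBK. This is a minimal sketch, not the crawler above. `build_url` and `decode_page` are hypothetical helper names, the four city codes are copied from the `cityCode` table, and a plain "try UTF-8, then GBK" fallback stands in for `UnicodeDammit`.

```python
# Hypothetical helpers illustrating the per-city URL pattern and the encoding fallback
BASE_URL = "http://www.weather.com.cn/weather/{code}.shtml"

# City codes copied from the cityCode table in the crawler above
CITY_CODES = {
    "北京": "101010100",
    "上海": "101020100",
    "广州": "101280101",
    "深圳": "101280601",
}


def build_url(city: str) -> str:
    """Only the city code differs between the per-city forecast URLs."""
    return BASE_URL.format(code=CITY_CODES[city])


def decode_page(raw: bytes) -> str:
    """Try UTF-8 first, then GBK; as a last resort decode UTF-8 with replacement characters."""
    for encoding in ("utf-8", "gbk"):
        try:
            return raw.decode(encoding)
        except UnicodeDecodeError:
            continue
    return raw.decode("utf-8", errors="replace")


if __name__ == "__main__":
    for name in CITY_CODES:
        print(name, build_url(name))
    print(decode_page("晴转多云".encode("gbk")))
```

UTF-8 is tried first because GBK can often decode UTF-8 bytes without raising an error and would produce garbled text instead.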

Task ②

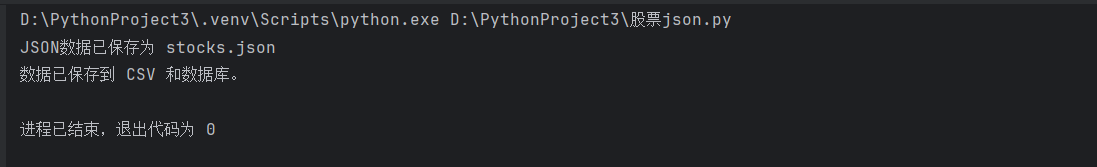

Stock information scraping

Requirements

Use requests and JSON parsing to scrape targeted stock information and store it in a database.

Core code and results


```python
import csv
import json
import re
import sqlite3
import sys

# Raw JSONP response saved from the quote API
jsonp_str = '''jQuery371048325911427590795_1761723060607({"rc":0,"rt":6,"svr":181669449,"lt":1,"full":1,"dlmkts":"","data":{"total":2292,"diff":[{"f1":2,"f2":11322,"f3":2428,"f4":2212,"f5":208842,"f6":2171458296.0,"f7":3296,"f8":5096,"f9":-25032,"f10":61,"f12":"688765","f13":1,"f14":"C禾元-U","f15":12003,"f16":9000,"f17":9001,"f18":9110,"f23":1383,"f152":2},{"f1":2,"f2":1484,"f3":1997,"f4":247,"f5":1627707,"f6":2264804137.0,"f7":2053,"f8":1169,"f9":3744,"f10":264,"f12":"688472","f13":1,"f14":"阿特斯","f15":1484,"f16":1230,"f17":1232,"f18":1237,"f23":235,"f152":2},{"f1":2,"f2":28030,"f3":1321,"f4":3271,"f5":62234,"f6":1677362941.0,"f7":1413,"f8":1641,"f9":7991,"f10":150,"f12":"688411","f13":1,"f14":"海博思创","f15":28498,"f16":25000,"f17":25000,"f18":24759,"f23":1237,"f152":2},{"f1":2,"f2":5998,"f3":1255,"f4":669,"f5":115230,"f6":665078154.0,"f7":1807,"f8":714,"f9":99327,"f10":268,"f12":"688210","f13":1,"f14":"统联精密","f15":6200,"f16":5237,"f17":5319,"f18":5329,"f23":774,"f152":2},{"f1":2,"f2":8675,"f3":1208,"f4":935,"f5":283724,"f6":2398387669.0,"f7":1492,"f8":6448,"f9":13437,"f10":93,"f12":"603175","f13":1,"f14":"C超颖","f15":9055,"f16":7900,"f17":7900,"f18":7740,"f23":1317,"f152":2},{"f1":2,"f2":1660,"f3":1178,"f4":175,"f5":281764,"f6":473918200.0,"f7":1003,"f8":1201,"f9":3829,"f10":861,"f12":"688410","f13":1,"f14":"山外山","f15":1749,"f16":1600,"f17":1660,"f18":1485,"f23":301,"f152":2},{"f1":2,"f2":7560,"f3":1119,"f4":761,"f5":323858,"f6":2396675147.0,"f7":1455,"f8":704,"f9":5368,"f10":146,"f12":"688676","f13":1,"f14":"金盘科技","f15":7840,"f16":6851,"f17":6982,"f18":6799,"f23":737,"f152":2},{"f1":2,"f2":51100,"f3":1109,"f4":5100,"f5":86497,"f6":4365861748.0,"f7":1717,"f8":1076,"f9":-148931,"f10":147,"f12":"688027","f13":1,"f14":"国盾量子","f15":54000,"f16":46101,"f17":46868,"f18":46000,"f23":1636,"f152":2},{"f1":2,"f2":1972,"f3":1104,"f4":196,"f5":885929,"f6":1684624450.0,"f7":1385,"f8":407,"f9":-737,"f10":266,"f12":"688599","f13":1,"f14":"天合光能","f15":1997,"f16":1751,"f17":1767,"f18":1776,"f23":189,"f152":2},{"f1":2,"f2":5920,"f3":1034,"f4":555,"f5":135225,"f6":766330019.0,"f7":1189,"f8":556,"f9":-43335,"f10":204,"f12":"688390","f13":1,"f14":"固德威","f15":5968,"f16":5330,"f17":5388,"f18":5365,"f23":528,"f152":2},{"f1":2,"f2":457,"f3":1012,"f4":42,"f5":6446580,"f6":2854921949.0,"f7":1012,"f8":555,"f9":1011,"f10":278,"f12":"600219","f13":1,"f14":"南山铝业","f15":457,"f16":415,"f17":416,"f18":415,"f23":103,"f152":2},{"f1":2,"f2":7713,"f3":1009,"f4":707,"f5":70076,"f6":521500506.0,"f7":1119,"f8":721,"f9":4352,"f10":176,"f12":"688717","f13":1,"f14":"艾罗能源","f15":7771,"f16":6987,"f17":7006,"f18":7006,"f23":272,"f152":2},{"f1":2,"f2":688,"f3":1008,"f4":63,"f5":908074,"f6":604145120.0,"f7":944,"f8":492,"f9":-1562,"f10":59,"f12":"600748","f13":1,"f14":"上实发展","f15":688,"f16":629,"f17":636,"f18":625,"f23":122,"f152":2},{"f1":2,"f2":809,"f3":1007,"f4":74,"f5":233706,"f6":186128590.0,"f7":544,"f8":587,"f9":-21942,"f10":194,"f12":"603122","f13":1,"f14":"合富中国","f15":809,"f16":769,"f17":787,"f18":735,"f23":290,"f152":2},{"f1":2,"f2":910,"f3":1004,"f4":83,"f5":788319,"f6":691158323.0,"f7":1524,"f8":1839,"f9":-1018,"f10":358,"f12":"603378","f13":1,"f14":"亚士创能","f15":910,"f16":784,"f17":784,"f18":827,"f23":349,"f152":2},{"f1":2,"f2":625,"f3":1004,"f4":57,"f5":3883723,"f6":2352559911.0,"f7":722,"f8":965,"f9":23071,"f10":622,"f12":"600516","f13":1,"f14":"方大炭素","f15":625,"f16":584,"f17":592,"f18":568,"f23":155,"f152":2},{"f1":2,"f2":713,"f3":1003,"f4":65,"f5":1116033,"f6":788501719.0,"f7":556,"f8":670,"f9":6765,"f10":198,"f12":"600478","f13":1,"f14":"科力远","f15":713,"f16":677,"f17":678,"f18":648,"f23":409,"f152":2},{"f1":2,"f2":2307,"f3":1001,"f4":210,"f5":206873,"f6":462736719.0,"f7":1035,"f8":770,"f9":17109,"f10":113,"f12":"603038","f13":1,"f14":"华立股份","f15":2307,"f16":2090,"f17":2093,"f18":2097,"f23":454,"f152":2},{"f1":2,"f2":1253,"f3":1001,"f4":114,"f5":919032,"f6":1117071873.0,"f7":948,"f8":2099,"f9":6222,"f10":454,"f12":"603088","f13":1,"f14":"宁波精达","f15":1253,"f16":1145,"f17":1179,"f18":1139,"f23":575,"f152":2},{"f1":2,"f2":5771,"f3":1001,"f4":525,"f5":14140,"f6":81604248.0,"f7":0,"f8":143,"f9":-4314,"f10":99,"f12":"605178","f13":1,"f14":"时空科技","f15":5771,"f16":5771,"f17":5771,"f18":5246,"f23":451,"f152":2}]}})'''

# Extract the JSON body from the JSONP wrapper and save it as a local file
match = re.search(r'\((\{.*\})\)', jsonp_str, re.S)
if match:
    json_data = match.group(1)
    with open('stocks.json', 'w', encoding='utf-8') as f:
        f.write(json_data)
    print("JSON data saved to stocks.json")
else:
    print("Could not extract the JSON content")
    sys.exit()

# Read the local JSON file back
with open('stocks.json', 'r', encoding='utf-8') as f:
    data = json.load(f)

# List of stock records
stocks = data['data']['diff']

# Initialise the database: drop the old table and create it again
conn = sqlite3.connect('stocks.db')
cursor = conn.cursor()
cursor.execute("DROP TABLE IF EXISTS stocks")
cursor.execute('''
    CREATE TABLE stocks (
        code TEXT,
        name TEXT,
        new_price REAL,
        change_percent REAL,
        change_amount REAL,
        open_price REAL,
        high REAL,
        low REAL,
        prev_close REAL,
        volume INTEGER,
        turnover REAL
    )
''')
conn.commit()

# Write every record to the CSV file and to the database
with open('股票数据1.csv', 'w', newline='', encoding='utf-8-sig') as f:
    writer = csv.writer(f)
    writer.writerow(['代码', '名称', '最新价', '涨跌幅', '涨跌额', '今开', '最高', '最低', '昨收', '成交量', '成交额'])
    for stock in stocks:
        try:
            # Price-type fields (f2, f3, f4, f15 to f18) look like integers scaled by 100
            # (f152 = 2 is presumably the number of decimals): f2 = 457 for 南山铝业 is 4.57.
            # They are stored unscaled here.
            row = [
                stock.get('f12'),  # code
                stock.get('f14'),  # name
                stock.get('f2'),   # latest price
                stock.get('f3'),   # change (%)
                stock.get('f4'),   # change amount
                stock.get('f17'),  # open
                stock.get('f15'),  # high
                stock.get('f16'),  # low
                stock.get('f18'),  # previous close
                stock.get('f5'),   # volume
                stock.get('f6'),   # turnover
            ]
            writer.writerow(row)
            cursor.execute('''
                INSERT INTO stocks (code, name, new_price, change_percent, change_amount, open_price, high, low, prev_close, volume, turnover)
                VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)
            ''', row)
        except Exception as e:
            print("Skipping a record:", e)
            continue

# Commit and close the database connection
conn.commit()
conn.close()
print("Data saved to the CSV file and the database.")
```

Reflections

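When I first tested the code, I tried to scrape too much information at once and was blocked by the site. Fortunately, a classmate had downloaded and saved a JSON file, and I extracted the stock information from that. The key points were using a regular expression to pull the JSON data out of the response, and noticing that the URLs of successive pages differ only in the pn field. These were the biggest difficulties in the experiment.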
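The two ideas in this reflection can be sketched as below: unwrapping the JSONP callback with a regular expression, and paging by changing only `pn`. This is a hedged sketch. `unwrap_jsonp` and `with_page` are hypothetical helpers. The URL in the example is a placeholder (`example.com`), not the real API address, which is not given here.

```python
import json
import re
from urllib.parse import parse_qs, urlencode, urlparse, urlunparse

# callbackName( ...JSON... ) with an optional trailing semicolon
JSONP_RE = re.compile(r"^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$", re.S)


def unwrap_jsonp(text: str) -> dict:
    """Strip the JavaScript callback wrapper and parse the JSON inside."""
    match = JSONP_RE.match(text)
    if match is None:
        raise ValueError("not a JSONP response")
    return json.loads(match.group(1))


def with_page(url: str, pn: int) -> str:
    """Return url with its pn (page number) query parameter set to pn; other parameters are kept."""
    parts = urlparse(url)
    query = parse_qs(parts.query)
    query["pn"] = [str(pn)]
    return urlunparse(parts._replace(query=urlencode(query, doseq=True)))


if __name__ == "__main__":
    sample = 'jQuery123_456({"rc":0,"data":{"total":1,"diff":[{"f12":"600219","f14":"南山铝业"}]}});'
    print(unwrap_jsonp(sample)["data"]["diff"][0]["f14"])
    template = "https://example.com/api/list?pn=1&pz=20"  # placeholder URL, not the real API
    for page in range(1, 4):
        print(with_page(template, page))
```

Requesting pages one at a time, with a pause between them (for example `time.sleep`), is one way to lower the risk of being blocked as described above.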

gitee:https://gitee.com/abcman12/2025_crawl_project/tree/master/第二次作业/作业2

Task ③

University ranking scraping (2021)

Requirements

Scrape information on all institutions in the 2021 main ranking of Chinese universities (https://www.shanghairanking.cn/rankings/bcur/2021).

Core code and results


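```python
# -*- coding: utf-8 -*-
import ast

from bs4 import BeautifulSoup

f = "payload.js"  # JS payload file downloaded from the ranking page


def g():
    # Read the whole payload file
    with open(f, "r", encoding="utf-8") as fp:
        return fp.read()


def b(s, i, L, R):
    # Find the first L...R bracket pair at or after index i and return (start, end).
    # Characters inside double-quoted strings are ignored; backslash escapes are honored.
    d, ins, esc, st = 0, 0, 0, i
    while i < len(s):
        c = s[i]
        if ins:
            if esc:
                esc = 0
            elif c == "\\":
                esc = 1
            elif c == '"':
                ins = 0
        else:
            if c == '"':
                ins = 1
            elif c == L:
                d += 1
                if d == 1:
                    st = i
            elif c == R:
                d -= 1
                if d == 0:
                    return st, i
        i += 1
    raise ValueError("unbalanced brackets")


def m(js):
    # Map each parameter of "(function(a, b, ...){...}(arg1, arg2, ...))" to its argument value
    h = js.find("(function(")
    pend = js.find(")", h + 10)                      # end of the parameter list
    ps = [x.strip() for x in js[h + 10:pend].split(",") if x.strip()]
    l, r = b(js, js.find("{", pend), "{", "}")      # function body
    i = r + 1
    while i < len(js) and js[i].isspace():
        i += 1
    if i < len(js) and js[i] == ")":
        i += 1
    while i < len(js) and js[i].isspace():
        i += 1
    a, c = b(js, i, "(", ")")                        # argument list of the call
    s = js[a + 1:c]
    # Turn JS literals into Python literals so ast.literal_eval can read them
    s = (s.replace("true", "True").replace("false", "False")
          .replace("null", "None").replace("void 0", "None"))
    v = ast.literal_eval("[" + s + "]")
    return dict(zip(ps, v))


def r(js):
    # Text of the object literal that follows the first "return"
    p = js.find("return")
    l = js.find("{", p)
    l, end = b(js, l, "{", "}")
    return js[l:end + 1]


def d(ret):
    # Contents of the first "data: [...]" array, without the brackets themselves
    k = ret.find("data")
    l = ret.find("[", k)
    l, r = b(ret, l, "[", "]")
    return ret[l + 1:r]


def o(txt, pos):
    # Walk back from pos to the nearest "{" and return that whole object literal
    i = pos
    while i > 0 and txt[i] != "{":
        i -= 1
    l, r = b(txt, i, "{", "}")
    return txt[l:r + 1]


def v(obj, key):
    # Raw value of key in an object literal: a quoted string's contents or an unquoted token
    p = obj.find(key)
    if p < 0:
        return None
    c = obj.find(":", p)
    i = c + 1
    while i < len(obj) and obj[i].isspace():
        i += 1
    if i >= len(obj):
        return None
    if obj[i] in "\"'":
        q, j, esc = obj[i], i + 1, 0
        while j < len(obj):
            ch = obj[j]
            if esc:
                esc = 0
            elif ch == "\\":
                esc = 1
            elif ch == q:
                return obj[i + 1:j]
            j += 1
        return None
    j = i
    while j < len(obj) and obj[j] not in ",}\r\n\t ":
        j += 1
    return obj[i:j]


def s(x, mp):
    # Replace a parameter name with its real value using the map built by m()
    return str(mp.get(x, x)) if x else ""


def num(x):
    # 1 if x parses as a number, otherwise 0
    try:
        float(x)
        return 1
    except (TypeError, ValueError):
        return 0


def main():
    js = g()
    _ = BeautifulSoup("<html></html>", "html.parser")  # not used: the payload is parsed as plain text
    mp = m(js)
    data = d(r(js))
    out, seen, i = [], set(), 0
    while True:
        p = data.find("ranking", i)
        if p == -1:
            break
        obj = o(data, p)
        name = v(obj, "univNameCn")
        sc = s(v(obj, "score"), mp)
        if not num(sc):
            # Skip matches whose score is not numeric
            i = p + 10
            continue
        rk = s(v(obj, "ranking"), mp)
        pr = s(v(obj, "province"), mp)
        ct = s(v(obj, "univCategory"), mp)
        try:
            rv = float(rk)
        except ValueError:
            rv = 1e9  # entries without a numeric rank go to the end
        k = (rv, name)
        if k not in seen:  # drop duplicates
            seen.add(k)
            out.append((rv, name, pr, ct, sc))
        i = p + 10
    out.sort(key=lambda x: x[0])
    print("Rank\tUniversity\tProvince\tType\tTotal score")
    for r1, n, p, c, sc in out[:30]:  # print only the top 30
        r2 = int(r1) if abs(r1 - int(r1)) < 1e-9 else r1
        print("{}\t{}\t{}\t{}\t{}".format(r2, n, p, c, sc))


if __name__ == "__main__":
    main()
```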

Reflections

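In this experiment I first used the browser's developer tools to find the JS file containing the required information, and downloaded it with Python. Then came the biggest difficulty: parsing that JS file. My first attempt was a JSON parser, but it could not parse the data in the JS file. I could not solve this on my own, so I asked a large language model for help. I also asked a classmate and read through his code until I understood it. The most important idea is to extract only the key fields and ignore everything else. Afterwards I reproduced the solution myself, with help from the model.

The key steps are as follows. The m function builds a dictionary that maps the function's parameters to the actual arguments, converting those arguments into Python objects. The d function extracts the contents of data[], using the b function to match brackets so that the [ ] themselves are not included. The code then searches the data string for "ranking" and uses it as a tag for the o function to locate each university object. The v function extracts the field values from each object (univNameCn, score, ranking, province, univCategory). Finally, the s function looks these values up in the mapping built by m to get the actual values. After filtering, this yields the correct data.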
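The central idea can be shown on a tiny payload. The payload is an immediately invoked function whose parameters (a, b, c, ...) stand for the argument values passed at the end. This is a sketch, not the code above. `match_paren`, `js_to_py_literals`, `build_param_map` and `resolve` are hypothetical names, and the toy payload and its values are invented. The sketch assumes the argument list is the last `}(...)` group in the text and that strings use double quotes.

```python
import ast
import re


def match_paren(s: str, start: int) -> int:
    """Index of the ')' matching the '(' at s[start]; double-quoted strings are skipped."""
    depth, in_str, esc = 0, False, False
    for i in range(start, len(s)):
        c = s[i]
        if in_str:
            if esc:
                esc = False
            elif c == "\\":
                esc = True
            elif c == '"':
                in_str = False
        elif c == '"':
            in_str = True
        elif c == "(":
            depth += 1
        elif c == ")":
            depth -= 1
            if depth == 0:
                return i
    raise ValueError("unbalanced parentheses")


def js_to_py_literals(src: str) -> str:
    """Rewrite JS literals as Python ones (whole words only; text inside strings is not protected)."""
    src = re.sub(r"\bvoid 0\b", "None", src)
    src = re.sub(r"\btrue\b", "True", src)
    src = re.sub(r"\bfalse\b", "False", src)
    return re.sub(r"\bnull\b", "None", src)


def build_param_map(js: str) -> dict:
    """Map each parameter of '(function(a,b,...){...}(x,y,...))' to its argument value."""
    params_src = re.search(r"\(function\(([^)]*)\)", js).group(1)
    params = [p.strip() for p in params_src.split(",") if p.strip()]
    call_start = js.rfind("}(") + 1  # assumption: the argument list follows the last "}("
    call_end = match_paren(js, call_start)
    args = ast.literal_eval("[" + js_to_py_literals(js[call_start + 1:call_end]) + "]")
    return dict(zip(params, args))


def resolve(token: str, mapping: dict):
    """A parameter name is looked up in the map; any other token is returned unchanged."""
    return mapping.get(token, token)


if __name__ == "__main__":
    # Invented toy payload with the same shape as the real one
    toy = ('(function(a,b,c){return {data:[{univNameCn:"示例大学",ranking:1,'
           'score:a,province:b,univCategory:c}]}}(95.5,"北京",null));')
    mapping = build_param_map(toy)
    print(mapping)
    print(resolve("a", mapping), resolve("b", mapping))
```

Using `\b` word boundaries keeps an identifier such as `nullable` intact. A plain `str.replace("null", "None")` would rewrite it.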

gitee: https://gitee.com/abcman12/2025_crawl_project/tree/master/第二次作业/作业3

浙公网安备 33010602011771号
