JMeter benchmark: Sanic vs FastAPI

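I was curious how fast FastAPI really is, so today I benchmarked it with JMeter. It is nothing special: probably only a bit faster than Flask, and no match for Sanic: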

1. JMeter calling a local Sanic service (2 worker processes). Server: Windows 11, 8 GB RAM, 8 cores. Client: 200 threads, 100 loops. Average response time = 32 ms, P95 = 58 ms, TPS = 4664

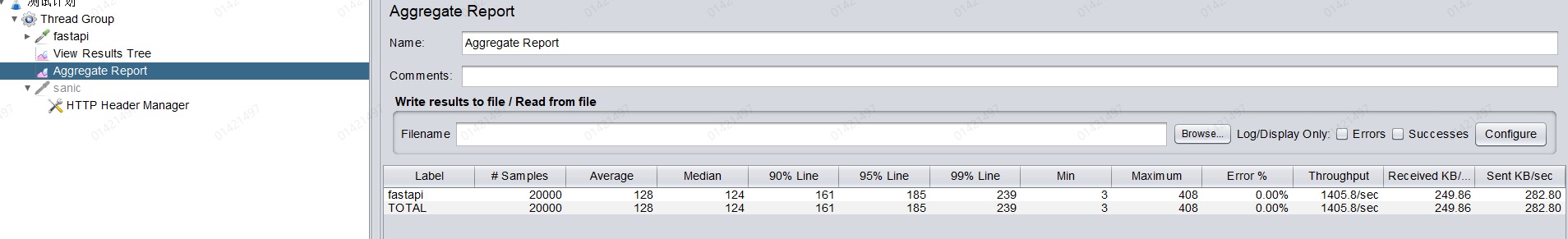

2. JMeter calling a local FastAPI service (2 worker processes). Server: Windows 11, 8 GB RAM, 8 cores. Client: 200 threads, 100 loops. Average response time = 128 ms, P95 = 185 ms, TPS = 1405
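A JMeter thread group like the one above boils down to N threads, each sending M requests back to back. The sketch below reproduces that load shape in plain Python for readers without JMeter. It is an illustration under stated assumptions, not the setup used for the numbers above: `post_process` and `run_thread_group` are hypothetical helpers, the payload values are made up, and CPython's GIL plus urllib's one-connection-per-request behavior (JMeter reuses connections by default) mean its absolute numbers will not match JMeter's.

```python
import json
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def post_process(url, payload):
    """Send one JSON POST (hypothetical helper) and return the HTTP status code."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        resp.read()  # drain the body so each sample covers the full response
        return resp.status


def run_thread_group(send, threads, loops):
    """Roughly mimic a JMeter thread group (hypothetical helper).

    Starts `threads` workers; each calls `send()` `loops` times in a row.
    Returns one (start_ms, elapsed_ms) tuple per request, measured on a
    shared monotonic clock so samples from different threads are comparable.
    """
    def worker():
        samples = []
        for _ in range(loops):
            start = time.perf_counter()
            send()
            elapsed = time.perf_counter() - start
            samples.append((start * 1000.0, elapsed * 1000.0))
        return samples

    with ThreadPoolExecutor(max_workers=threads) as pool:
        futures = [pool.submit(worker) for _ in range(threads)]
        return [sample for f in futures for sample in f.result()]


# Example (needs the Sanic service from this post running on port 5002;
# the payload values are hypothetical):
# samples = run_thread_group(
#     lambda: post_process("http://127.0.0.1:5002/process", {"a": 1, "b": 2, "c": 3}),
#     threads=200, loops=100)
```

Injecting `send` as a callable keeps the timing loop independent of the HTTP client, so the client can be swapped without touching the measurement code.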

With the client set to 50 threads and 100 loops, the gap in response time is even larger:

Sanic: average = 1 ms, P95 = 3 ms, TPS = 4277

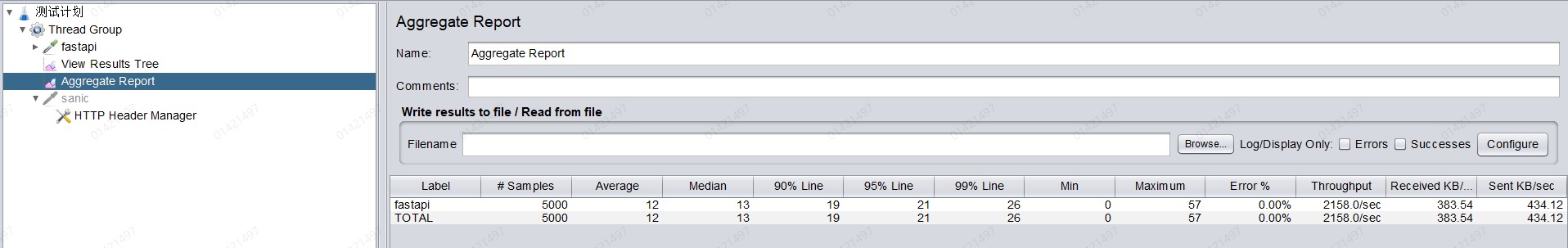

FastAPI: average = 12 ms, P95 = 21 ms, TPS = 2158
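JMeter computes the average, P95 and TPS for you. To make explicit what those three numbers mean, here is a minimal sketch that derives them from raw samples. It assumes each sample is a `(start_ms, elapsed_ms)` pair on a common clock, uses the nearest-rank method for P95 (JMeter's own percentile calculation may differ slightly), and defines TPS as the request count divided by the wall-clock span from the first request's start to the last request's end. `summarize` is a hypothetical helper.

```python
def summarize(samples):
    """Average, P95 and TPS from (start_ms, elapsed_ms) samples (hypothetical helper)."""
    if not samples:
        raise ValueError("no samples")
    elapsed = sorted(e for _, e in samples)
    n = len(elapsed)
    avg_ms = sum(elapsed) / n
    # Nearest-rank P95: the ceil(0.95 * n)-th smallest value, computed with
    # integer arithmetic to avoid floating-point rounding in the rank.
    rank = (95 * n + 99) // 100
    p95_ms = elapsed[rank - 1]
    # Throughput window: first request start to last request end.
    first_start = min(s for s, _ in samples)
    last_end = max(s + e for s, e in samples)
    duration_s = (last_end - first_start) / 1000.0
    tps = n / duration_s if duration_s > 0 else float("inf")
    return {"avg_ms": avg_ms, "p95_ms": p95_ms, "tps": tps}
```

Because TPS is measured over the whole test window, it also depends on how fast the client can issue requests, not only on server latency.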

Summary: Sanic's average response time is roughly 4 to 12 times lower than FastAPI's, and its TPS is about 2 to 3 times higher.
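The ratios in the summary can be checked directly against the figures reported above. The snippet only does arithmetic on those published numbers; latency ratios are FastAPI divided by Sanic, and the TPS ratio is Sanic divided by FastAPI.

```python
# Figures copied from the results above: latency in ms, TPS in requests/s.
RESULTS = {
    "200 threads": {
        "sanic": {"avg": 32, "p95": 58, "tps": 4664},
        "fastapi": {"avg": 128, "p95": 185, "tps": 1405},
    },
    "50 threads": {
        "sanic": {"avg": 1, "p95": 3, "tps": 4277},
        "fastapi": {"avg": 12, "p95": 21, "tps": 2158},
    },
}


def ratios(sanic, fastapi):
    """Latency ratios: fastapi / sanic (how many times lower Sanic's latency is).
    TPS ratio: sanic / fastapi (how many times higher Sanic's throughput is)."""
    return {
        "avg": fastapi["avg"] / sanic["avg"],
        "p95": fastapi["p95"] / sanic["p95"],
        "tps": sanic["tps"] / fastapi["tps"],
    }


for scenario, r in RESULTS.items():
    x = ratios(r["sanic"], r["fastapi"])
    print(f"{scenario}: avg x{x['avg']:.1f}, P95 x{x['p95']:.1f}, TPS x{x['tps']:.1f}")
# 200 threads: avg x4.0, P95 x3.2, TPS x3.3
# 50 threads: avg x12.0, P95 x7.0, TPS x2.0
```

So the average-latency ratio spans about 4x to 12x, the P95 ratio about 3x to 7x, and the TPS ratio about 2x to 3.3x.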

Sanic service code:

```python
from pydantic import BaseModel
from sanic import Sanic, response
from sanic_pydantic import webargs


class RequestModel(BaseModel):
    a: int
    b: int
    c: int


app = Sanic("test")


@app.post("/process")
@webargs(body=RequestModel)
async def process(request, **kwargs):
    # Extract the parameters from kwargs (the validated body is under "payload")
    body = kwargs.get("payload")
    a = body.get("a")
    b = body.get("b")
    c = body.get("c")
    return response.json({"message": "Parameters received", "a": a, "b": b, "c": c})


if __name__ == "__main__":
    app.run(host="0.0.0.0", port=5002, workers=2)
```

FastAPI service code:

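```python
from fastapi import FastAPI
from pydantic import BaseModel
from uvicorn import run

# Create the FastAPI application
app = FastAPI()


# Pydantic model for the request body
class RequestModel(BaseModel):
    a: int
    b: int
    c: int


# POST endpoint
@app.post("/process")
async def process(request: RequestModel):
    # Extract a, b, c from the request body
    a = request.a
    b = request.b
    c = request.c
    # Print the parameter values (optional; this writes to stdout on every request)
    print(f"a: {a}, b: {b}, c: {c}")
    # Return the response
    return {"message": "Parameters received", "a": a, "b": b, "c": c}


if __name__ == "__main__":
    # The import string assumes this file is saved as fast_api_server.py;
    # uvicorn needs an import string rather than the app object when workers > 1
    run("fast_api_server:app", host="0.0.0.0", port=5001, workers=2)
```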

浙公网安备 33010602011771号