Robust Features and Their Applications

Reference: https://zhuanlan.zhihu.com/p/70385018

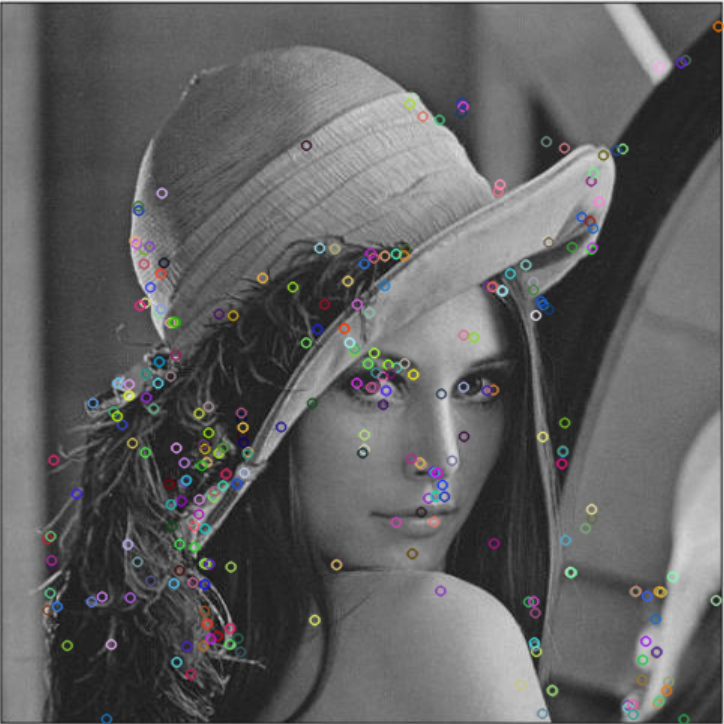

SIFT

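    import cv2 as cv

    # Load the image and convert it to grayscale for feature detection
    img = cv.imread('D:/lena.jpg')
    gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)

    # Requires an opencv-contrib build; OpenCV 4.4 and later also
    # provide the same detector as cv.SIFT_create()
    sift = cv.xfeatures2d.SIFT_create()
    kp, des = sift.detectAndCompute(gray, None)

    # Draw the detected keypoints and print the descriptor matrix shape
    img = cv.drawKeypoints(gray, kp, img)
    print(des.shape)

    cv.imshow("SIFT", img)  # or: cv.imwrite('sift_keypoints.jpg', img)
    cv.waitKey()
    cv.destroyAllWindows()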

descriptors_shape:(1073, 128)

SURF

    from __future__ import print_function
    import cv2 as cv
    import numpy as np

    img1 = cv.imread('D:/lena.jpg', cv.IMREAD_GRAYSCALE)

    #-- Step 1: Detect the keypoints using SURF Detector, compute the descriptors
    minHessian = 800
    detector = cv.xfeatures2d_SURF.create(hessianThreshold=minHessian)
    keypoints1, descriptors1 = detector.detectAndCompute(img1, None)

    img = cv.drawKeypoints(img1, keypoints1, img1)
    print(descriptors1)
    print(descriptors1.shape)

    cv.imshow("keypoints", img)
    cv.waitKey()
    cv.destroyAllWindows()

descriptors_shape:(277, 64)

FLANN match

First, define a simple image flip operation (here, a left-to-right mirror).

    def transform(origin):
        h, w = origin.shape
        generate_img = np.zeros(origin.shape)
        for i in range(h):
            for j in range(w):
                generate_img[i, w - 1 - j] = origin[i, j]
        return generate_img.astype(np.uint8)
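The per-pixel loop above is a horizontal mirror. As a minimal sketch, assuming a 2-D grayscale array, the same result can be obtained by reversing the column order with NumPy slicing. The names `transform_loop` and `transform_fast` are hypothetical and only used here for comparison; `cv.flip(origin, 1)` would be the OpenCV equivalent.

```python
import numpy as np

def transform_loop(origin):
    """Per-pixel horizontal mirror, same logic as the loop version."""
    h, w = origin.shape
    out = np.zeros(origin.shape)
    for i in range(h):
        for j in range(w):
            out[i, w - 1 - j] = origin[i, j]
    return out.astype(np.uint8)

def transform_fast(origin):
    """Hypothetical vectorized equivalent: reverse the column order."""
    return origin[:, ::-1].copy()

# Small made-up array standing in for a grayscale image
sample = np.arange(12, dtype=np.uint8).reshape(3, 4)
flipped = transform_fast(sample)
same = np.array_equal(flipped, transform_loop(sample))
print(flipped)
print(same)
```

On a full-size image the sliced version avoids the Python-level double loop, which is usually much slower than a single array operation.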

    import cv2 as cv
    import numpy as np
    import argparse

    def transform(origin):
        # Mirror the image left-to-right and save it as the second input image
        h, w = origin.shape
        generate_img = np.zeros(origin.shape)
        for i in range(h):
            for j in range(w):
                generate_img[i, w - 1 - j] = origin[i, j]
        cv.imwrite('D:/lena_in_scene.jpg', generate_img.astype(np.uint8))
        return 0

    parser = argparse.ArgumentParser(description='Code for Feature Matching with FLANN tutorial.')
    parser.add_argument('--input1', help='Path to input image 1.', default='D:/lena.jpg')
    parser.add_argument('--input2', help='Path to input image 2.', default='D:/lena_in_scene.jpg')
    args = parser.parse_args()

    img1 = cv.imread(args.input1, cv.IMREAD_GRAYSCALE)
    # Check img1 before flipping it; transform() would fail on None
    if img1 is None:
        print('Could not open or find the images!')
        exit(0)
    transform(img1)
    img2 = cv.imread(args.input2, cv.IMREAD_GRAYSCALE)
    if img2 is None:
        print('Could not open or find the images!')
        exit(0)

    #-- Step 1: Detect the keypoints using SURF Detector, compute the descriptors
    minHessian = 300
    detector = cv.xfeatures2d_SURF.create(hessianThreshold=minHessian)
    keypoints1, descriptors1 = detector.detectAndCompute(img1, None)
    keypoints2, descriptors2 = detector.detectAndCompute(img2, None)

    #-- Step 2: Matching descriptor vectors with a FLANN based matcher
    # Since SURF is a floating-point descriptor NORM_L2 is used
    matcher = cv.DescriptorMatcher_create(cv.DescriptorMatcher_FLANNBASED)
    knn_matches = matcher.knnMatch(descriptors1, descriptors2, 2)

    #-- Filter matches using the Lowe's ratio test
    ratio_thresh = 0.7
    good_matches = []
    for m,n in knn_matches:
        if m.distance < ratio_thresh * n.distance:
            good_matches.append(m)

    #-- Draw matches
    img_matches = np.empty((max(img1.shape[0], img2.shape[0]), img1.shape[1]+img2.shape[1], 3), dtype=np.uint8)
    cv.drawMatches(img1, keypoints1, img2, keypoints2, good_matches, img_matches, flags=cv.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS)

    #-- Show detected matches
    cv.imshow('Good Matches', img_matches)
    cv.waitKey()
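The script keeps a match only when its best distance is clearly smaller than the second-best distance (Lowe's ratio test with `ratio_thresh = 0.7`). The sketch below illustrates that filter in isolation. It is not the FLANN matcher: it uses a brute-force nearest-neighbour search as a stand-in for `knnMatch(..., 2)`, the helper names `l2` and `ratio_test_matches` are hypothetical, and the 2-D "descriptors" are made-up values rather than real SURF output.

```python
import math

def l2(a, b):
    """Euclidean distance between two descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def ratio_test_matches(query, train, ratio_thresh=0.7):
    """Return (query_idx, train_idx) pairs that pass Lowe's ratio test.

    Brute-force stand-in for knnMatch with k=2; needs at least two
    train descriptors.
    """
    good = []
    for qi, q in enumerate(query):
        # Rank all train descriptors by distance to this query descriptor
        ranked = sorted((l2(q, t), ti) for ti, t in enumerate(train))
        (d1, best), (d2, _) = ranked[0], ranked[1]
        # Keep the match only if the best is clearly better than the runner-up
        if d1 < ratio_thresh * d2:
            good.append((qi, best))
    return good

# Made-up descriptors, purely for illustration
query = [(0.0, 0.0), (5.0, 5.0)]
train = [(0.1, 0.0), (4.0, 4.0), (6.0, 6.0), (10.0, 10.0)]
good = ratio_test_matches(query, train)
print(good)  # [(0, 0)]
```

The first query point has one unambiguous neighbour and is kept. The second is equally close to two train points, so the ratio test discards it as ambiguous.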

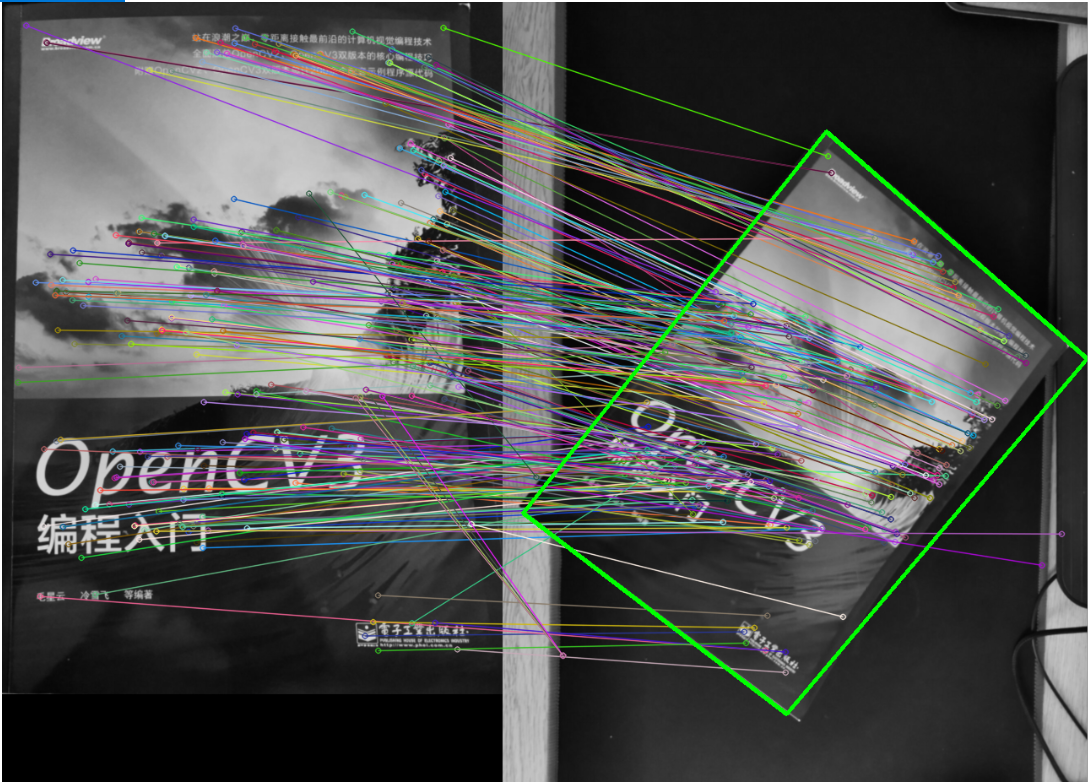

Image matching

    from __future__ import print_function
    import cv2 as cv
    import numpy as np
    import argparse

    img_object = cv.imread('D:/box.png', cv.IMREAD_GRAYSCALE)
    img_scene = cv.imread('D:/box_in_scene.png', cv.IMREAD_GRAYSCALE)
    if img_object is None or img_scene is None:
        print('Could not open or find the images!')
        exit(0)

    #-- Step 1: Detect the keypoints using SURF Detector, compute the descriptors
    minHessian = 300
    detector = cv.xfeatures2d_SURF.create(hessianThreshold=minHessian)
    keypoints_obj, descriptors_obj = detector.detectAndCompute(img_object, None)
    keypoints_scene, descriptors_scene = detector.detectAndCompute(img_scene, None)

    #-- Step 2: Matching descriptor vectors with a FLANN based matcher
    # Since SURF is a floating-point descriptor NORM_L2 is used
    matcher = cv.DescriptorMatcher_create(cv.DescriptorMatcher_FLANNBASED)
    knn_matches = matcher.knnMatch(descriptors_obj, descriptors_scene, 2)

    #-- Filter matches using the Lowe's ratio test
    ratio_thresh = 0.75
    good_matches = []
    for m,n in knn_matches:
        if m.distance < ratio_thresh * n.distance:
            good_matches.append(m)

    #-- Draw matches
    img_matches = np.empty((max(img_object.shape[0], img_scene.shape[0]), img_object.shape[1]+img_scene.shape[1], 3), dtype=np.uint8)
    cv.drawMatches(img_object, keypoints_obj, img_scene, keypoints_scene, good_matches, img_matches, flags=cv.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS)

    #-- Localize the object
    obj = np.empty((len(good_matches),2), dtype=np.float32)
    scene = np.empty((len(good_matches),2), dtype=np.float32)
    for i in range(len(good_matches)):
        #-- Get the keypoints from the good matches
        obj[i,0] = keypoints_obj[good_matches[i].queryIdx].pt[0]
        obj[i,1] = keypoints_obj[good_matches[i].queryIdx].pt[1]
        scene[i,0] = keypoints_scene[good_matches[i].trainIdx].pt[0]
        scene[i,1] = keypoints_scene[good_matches[i].trainIdx].pt[1]

    H, _ = cv.findHomography(obj, scene, cv.RANSAC)

    #-- Get the corners from the image_1 ( the object to be "detected" )
    obj_corners = np.empty((4,1,2), dtype=np.float32)
    obj_corners[0,0,0] = 0
    obj_corners[0,0,1] = 0
    obj_corners[1,0,0] = img_object.shape[1]
    obj_corners[1,0,1] = 0
    obj_corners[2,0,0] = img_object.shape[1]
    obj_corners[2,0,1] = img_object.shape[0]
    obj_corners[3,0,0] = 0
    obj_corners[3,0,1] = img_object.shape[0]

    print(H)
    scene_corners = cv.perspectiveTransform(obj_corners, H)

    #-- Draw lines between the corners (the mapped object in the scene - image_2 )
    cv.line(img_matches, (int(scene_corners[0,0,0] + img_object.shape[1]), int(scene_corners[0,0,1])),
        (int(scene_corners[1,0,0] + img_object.shape[1]), int(scene_corners[1,0,1])), (0,255,0), 4)
    cv.line(img_matches, (int(scene_corners[1,0,0] + img_object.shape[1]), int(scene_corners[1,0,1])),
        (int(scene_corners[2,0,0] + img_object.shape[1]), int(scene_corners[2,0,1])), (0,255,0), 4)
    cv.line(img_matches, (int(scene_corners[2,0,0] + img_object.shape[1]), int(scene_corners[2,0,1])),
        (int(scene_corners[3,0,0] + img_object.shape[1]), int(scene_corners[3,0,1])), (0,255,0), 4)
    cv.line(img_matches, (int(scene_corners[3,0,0] + img_object.shape[1]), int(scene_corners[3,0,1])),
        (int(scene_corners[0,0,0] + img_object.shape[1]), int(scene_corners[0,0,1])), (0,255,0), 4)

    #-- Show detected matches
    cv.imshow('Good Matches & Object detection', img_matches)
    cv.imwrite('D:/boxx.png',img_matches)
    cv.waitKey()

Perspective transformation matrix:

    [[ 5.75824358e-01 -4.35665519e-01  3.83253304e+02]
     [ 4.86504147e-01  5.86534791e-01  1.53078867e+02]
     [ 7.79314893e-05  5.76010867e-05  1.00000000e+00]]
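To make the role of this 3x3 matrix concrete, here is a minimal pure-Python sketch of how a single point is mapped through it: multiply in homogeneous coordinates, then divide by the third component. This is the per-point operation that `cv.perspectiveTransform` performs with a 3x3 matrix. The helper name `apply_homography` is hypothetical; the matrix values are the ones printed above.

```python
# Homography printed by the image-matching script
H = [
    [5.75824358e-01, -4.35665519e-01, 3.83253304e+02],
    [4.86504147e-01, 5.86534791e-01, 1.53078867e+02],
    [7.79314893e-05, 5.76010867e-05, 1.00000000e+00],
]

def apply_homography(H, x, y):
    """Map (x, y) through H using homogeneous coordinates."""
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    # Divide by w to return from homogeneous to image coordinates
    return xh / w, yh / w

# The object's top-left corner (0, 0) lands on the translation column of H
origin = apply_homography(H, 0.0, 0.0)
print(origin)
```

For the origin the result is simply the last column of `H` (about 383.25, 153.08), which is where the top-left corner of the object is drawn in the scene image.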

Harris

    from __future__ import print_function
    import cv2 as cv
    import numpy as np
    import argparse

    source_window = 'Source image'
    corners_window = 'Corners detected'
    max_thresh = 255

    def cornerHarris_demo(val):
        thresh = val
        # Detector parameters
        blockSize = 2
        apertureSize = 3
        k = 0.04
        # Detecting corners
        dst = cv.cornerHarris(src_gray, blockSize, apertureSize, k)
        # Normalizing
        dst_norm = np.empty(dst.shape, dtype=np.float32)
        cv.normalize(dst, dst_norm, alpha=0, beta=255, norm_type=cv.NORM_MINMAX)
        dst_norm_scaled = cv.convertScaleAbs(dst_norm)
        # Drawing a circle around corners
        for i in range(dst_norm.shape[0]):
            for j in range(dst_norm.shape[1]):
                if int(dst_norm[i,j]) > thresh:
                    cv.circle(dst_norm_scaled, (j,i), 5, (0,255,128), 2)
        # Showing the result
        cv.namedWindow(corners_window)
        cv.imshow(corners_window, dst_norm_scaled)

    # Load source image and convert it to gray
    parser = argparse.ArgumentParser(description='Code for Harris corner detector tutorial.')
    parser.add_argument('--input', help='Path to input image.', default='D:/box.png')
    args = parser.parse_args()
    src = cv.imread(args.input)
    if src is None:
        print('Could not open or find the image:', args.input)
        exit(0)
    src_gray = cv.cvtColor(src, cv.COLOR_BGR2GRAY)

    # Create a window and a trackbar
    cv.namedWindow(source_window)
    thresh = 200 # initial threshold
    cv.createTrackbar('Threshold: ', source_window, thresh, max_thresh, cornerHarris_demo)
    cv.imshow(source_window, src)
    cornerHarris_demo(thresh)
    cv.waitKey()
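The value that `cv.cornerHarris` writes for each pixel is the Harris response R = det(M) - k * trace(M)^2, where M is the 2x2 matrix of summed gradient products over the neighbourhood. As a rough sketch of why thresholding that response finds corners, the snippet below evaluates the formula for three hand-picked matrices. The function name `harris_response` and the sample values are assumptions for illustration; `k = 0.04` matches the script above.

```python
def harris_response(sxx, syy, sxy, k=0.04):
    """Harris response for M = [[sxx, sxy], [sxy, syy]]."""
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace

# Made-up gradient statistics for three kinds of neighbourhood
corner = harris_response(10.0, 10.0, 0.0)  # strong gradients in both directions
edge = harris_response(10.0, 0.0, 0.0)     # gradient in one direction only
flat = harris_response(0.0, 0.0, 0.0)      # no gradient at all

print(corner > 0, edge < 0, flat == 0)
```

Corners give a large positive response, edges a negative one, and flat regions a response near zero. The script normalizes the responses to the 0 to 255 range before comparing them with the trackbar threshold.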

